Abstract

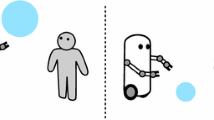

In this paper, the biological ability of visual attention is modeled so that social robots can understand scenes and their circumstances. Visual attention is determined by evaluating visual stimuli and prior knowledge in an intelligent saliency search. Visual stimuli are measured using information entropy and biological color sensitivity: the information entropy evaluates the information content of a presented scene, and the color sensitivity assesses its biological attraction. We also learn prior knowledge of where people focus and use it in the prediction of visual attention. The performance of the proposed technique is studied on different kinds of natural scenes and evaluated against fixation data from an eye-tracking database. The experimental results demonstrate the effectiveness of the proposed technique in discovering salient regions and predicting visual attention. The robustness of the proposed technique to transformation and illumination variation is also investigated. Social robots equipped with the proposed technique can autonomously determine their attention to a scene so as to behave naturally in human-robot interaction.
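
To make the entropy-based stimulus measure concrete, the following is a minimal sketch of a local Shannon entropy map over a grayscale image. It is an illustration under stated assumptions, not the authors' implementation: the abstract does not give the exact formulation, so the function names `shannon_entropy` and `local_entropy_map`, the square window, and the default `patch` size are all hypothetical choices, and the color-sensitivity and prior-knowledge terms are not modeled here.

```python
import math
from collections import Counter


def shannon_entropy(values):
    """Shannon entropy (in bits) of the empirical distribution of `values`."""
    counts = Counter(values)
    total = len(values)
    # H = sum_i p_i * log2(1 / p_i), with p_i = count_i / total
    return sum((c / total) * math.log2(total / c) for c in counts.values())


def local_entropy_map(image, patch=3):
    """Entropy of the patch x patch neighbourhood around every pixel.

    `image` is a 2-D list of gray levels. Near the border, only the part
    of the window that lies inside the image is used (an assumption made
    for this sketch).
    """
    height, width = len(image), len(image[0])
    radius = patch // 2
    result = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            window = [
                image[j][i]
                for j in range(max(0, y - radius), min(height, y + radius + 1))
                for i in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            result[y][x] = shannon_entropy(window)
    return result
```

In this sketch a uniform region scores zero everywhere, while a region mixing several gray levels scores higher, which is the sense in which entropy can serve as one cue for candidate salient regions.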

Cite this article

He, H., Ge, S.S. & Zhang, Z. Visual Attention Prediction Using Saliency Determination of Scene Understanding for Social Robots. Int J of Soc Robotics 3, 457–468 (2011). https://doi.org/10.1007/s12369-011-0105-z

DOI: https://doi.org/10.1007/s12369-011-0105-z