Abstract

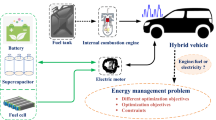

As an effective reinforcement learning (RL) algorithm, Q-learning has been applied to the energy management strategy of hybrid electric vehicles (HEVs) in recent years. In the existing literature, the three Q-learning hyperparameters, namely the exploration rate ε, the discount factor γ and the learning rate α, are all fixed in advance. However, different hyperparameter values affect both the fuel economy of the vehicle and the offline computation speed. This paper proposes a method for optimizing the hyperparameters so that they adapt to the driving cycle. Firstly, a mathematical model relating the three hyperparameters to the number of iterations is established, based on the regular way in which each hyperparameter influences vehicle performance. Secondly, the optimal changing index k_index of the Q-learning iteration number is determined for each typical driving cycle. Finally, a simulation model of Yubei District in Chongqing is constructed using Kullback-Leibler (KL) driving cycle identification. The simulation results indicate that the proposed strategy reduces equivalent fuel consumption by 0.4 % and offline operation time by 6 s. It can be concluded that the proposed strategy not only improves the fuel economy of the vehicle but also accelerates computation.
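The three steps summarized above can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not give the mathematical model, so the exponential form of `schedule`, the speed-bin histograms, the cycle names and the `k_index` values below are all assumptions chosen only for illustration. Only the KL divergence and the tabular Q-learning update are standard definitions.

```python
import math

def kl_divergence(p, q, eps=1e-12):
    """D_KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)

def identify_cycle(observed, references):
    """Return the name of the reference cycle closest to `observed` in KL divergence."""
    return min(references, key=lambda name: kl_divergence(observed, references[name]))

def schedule(start, end, k_index, iteration):
    """ASSUMPTION: a hyperparameter moves exponentially from `start` toward `end`
    as the iteration count grows, at a rate set by k_index. The paper's actual
    model is not stated in the abstract."""
    return end + (start - end) * math.exp(-k_index * iteration)

def q_update(q_table, state, action, reward, next_state, alpha, gamma):
    """Standard tabular Q-learning update, applied in place."""
    best_next = max(q_table[next_state])
    td_error = reward + gamma * best_next - q_table[state][action]
    q_table[state][action] += alpha * td_error

# Hypothetical speed-bin histograms for two typical driving cycles.
references = {"urban": [0.6, 0.3, 0.1], "highway": [0.1, 0.3, 0.6]}
# Hypothetical per-cycle changing index (illustrative values only).
k_by_cycle = {"urban": 0.05, "highway": 0.02}

observed = [0.5, 0.35, 0.15]            # histogram of the current driving segment
cycle = identify_cycle(observed, references)
k_index = k_by_cycle[cycle]

q_table = [[0.0, 0.0], [1.0, 2.0]]      # toy table: 2 states x 2 actions
for iteration in range(3):
    alpha = schedule(0.9, 0.1, k_index, iteration)    # learning rate decays
    gamma = schedule(0.5, 0.95, k_index, iteration)   # discount factor grows
    q_update(q_table, state=0, action=1, reward=1.0, next_state=1,
             alpha=alpha, gamma=gamma)
```

The point of the sketch is the control flow: the recognized driving cycle selects a changing index, and that index determines how the hyperparameters evolve over the iterations instead of staying fixed. The exploration rate ε could be scheduled the same way and passed to an ε-greedy action selector.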

Acknowledgement

This research was supported by the Chongqing Key Laboratory of Urban Rail Transit System Integration and Control Open Fund (Grant No. CKLURTSICKFKT-212005), the Scientific and Technology Research Program of Chongqing Municipal Education Commission (Grant No. KJQN202000734), the Technological Innovation and Application Development Research Program of the Chongqing Municipal Science and Technology Commission (Grant No. cstc2020jscxdxwtBX0025), the Provincial Engineering Research Center for New Energy Vehicle Intelligent Control and Simulation Test Technology of Sichuan (Grant No. XNYQ2022-003) and the Graduate Research Innovation Program of Chongqing Jiaotong University (Grant No. CYS22412).

Cite this article

Yin, Y., Huang, X., Pan, X. et al. Hyperparameters of Q-Learning Algorithm Adapting to the Driving Cycle Based on KL Driving Cycle Recognition. Int. J. Automot. Technol. 23, 967–981 (2022). https://doi.org/10.1007/s12239-022-0084-0

DOI: https://doi.org/10.1007/s12239-022-0084-0