Abstract

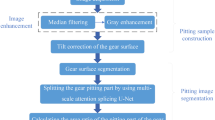

To detect gear pitting quantitatively, this paper proposes a vision measurement method that combines a deep convolutional generative adversarial network (DCGAN) with a fully convolutional segmentation network (U-Net) to measure the area ratio of gear pitting. A machine vision system is designed to automatically collect pitting images of all gear teeth obtained from gear fatigue tests, but the high cost of these tests limits the number of images available. To address this small-sample problem, DCGAN is applied to expand the set of pitting samples. Using the expanded sample set, an edge detection algorithm and U-Net are used to segment the tooth surface and the pitting, respectively. The proposed approach is applied to measure gear pitting, and a comprehensive evaluation index is designed to evaluate detection performance. Experimental results show that the average relative error and the absolute error of the pitting area ratio obtained by the proposed method are 7.83 % and 0.18 %, respectively, which are much lower than those obtained by the conventional detection method without sample augmentation. Thus, the proposed method achieves satisfactory accuracy and outperforms the detection method without sample augmentation.
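The abstract reports results in terms of a pitting area ratio and its relative and absolute errors, but does not define them here. The sketch below shows one plausible reading, assuming the area ratio is the number of pitting pixels inside the tooth surface divided by the number of tooth-surface pixels, and that both errors are computed against a reference (for example, manually labelled) ratio. The function names, the mask representation, and the toy masks are hypothetical illustrations, not the authors' implementation or data.

```python
from typing import Sequence

# Assumed representation: a binary mask as rows of 0/1 values (1 = foreground).
Mask = Sequence[Sequence[int]]


def pitting_area_ratio(tooth_mask: Mask, pitting_mask: Mask) -> float:
    """Fraction of tooth-surface pixels that are also labelled as pitting."""
    tooth_pixels = 0
    pitted_pixels = 0
    for tooth_row, pit_row in zip(tooth_mask, pitting_mask):
        for tooth, pit in zip(tooth_row, pit_row):
            if tooth:
                tooth_pixels += 1
                if pit:
                    pitted_pixels += 1
    if tooth_pixels == 0:
        raise ValueError("tooth mask contains no foreground pixels")
    return pitted_pixels / tooth_pixels


def absolute_error(measured: float, reference: float) -> float:
    """Absolute difference between the measured and reference area ratios."""
    return abs(measured - reference)


def relative_error(measured: float, reference: float) -> float:
    """Absolute error normalised by the reference area ratio."""
    if reference == 0:
        raise ValueError("reference ratio is zero; relative error is undefined")
    return abs(measured - reference) / abs(reference)


# Toy 4x5 example (made-up masks): the first column is background,
# so the tooth surface covers 16 pixels.
tooth = [[0, 1, 1, 1, 1] for _ in range(4)]
predicted_pitting = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0],
]
reference_pitting = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 0, 0, 0, 0],
]

measured_ratio = pitting_area_ratio(tooth, predicted_pitting)   # 3 / 16
reference_ratio = pitting_area_ratio(tooth, reference_pitting)  # 4 / 16
abs_err = absolute_error(measured_ratio, reference_ratio)
rel_err = relative_error(measured_ratio, reference_ratio)
```

In a pipeline like the one the abstract describes, the tooth mask would presumably come from the edge detection step and the pitting mask from U-Net. Restricting the pitting count to pixels inside the tooth mask keeps the ratio bounded by 1 even if the segmentation spills past the tooth edge.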

Acknowledgments

The work described in this paper was supported by the National Key R&D Program of China (No. 2018YFB2001300) and the National Natural Science Foundation of China (No. 51675065).

Author information

Zhiwen Wang received the B.Eng. degree in Mechatronic Engineering in 2018 from Southwest Petroleum University, Chengdu, China. He is currently pursuing a Master’s degree in Mechanical Engineering at Chongqing University, and his research interests include machine vision, deep learning and damage detection.

Yi Qin received the B.Eng. and Ph.D. degrees in Mechanical Engineering from Chongqing University, Chongqing, China, in 2004 and 2008, respectively. Since January 2009, he has been with Chongqing University, Chongqing, China, where he is currently a Professor in the College of Mechanical Engineering. His current research interests include signal processing, fault prognosis, mechanical dynamics and smart structures.

Weiwei Chen received the B.Eng. degree in Mechanical Engineering in 2017 from Harbin Institute of Technology (Weihai), Weihai, China. He is currently pursuing a Master’s degree in Mechanical Engineering at Chongqing University, and his research interests include computer vision, image processing and intelligent diagnosis of mechanical faults.

Cite this article

Wang, Z., Qin, Y. & Chen, W. Vision measurement of gear pitting based on DCGAN and U-Net. J Mech Sci Technol 35, 2771–2779 (2021). https://doi.org/10.1007/s12206-021-0601-5

DOI: https://doi.org/10.1007/s12206-021-0601-5