Abstract

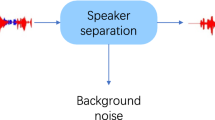

This paper describes the speaker-attributed automatic speech recognition (SA-ASR) system we submitted to the multi-channel multi-party meeting transcription (M2MeT2.0) challenge; the system aims to address the “who spoke what” problem. We align the hypotheses of a multi-speaker ASR model based on serialized output training (SOT) with the speaker diarization (SD) results to obtain speaker-attributed transcriptions. A pre-trained multi-frame cross-channel attention (MFCCA) model serves as the ASR module. For the SD module, we build a cascade system comprising a pre-trained speaker overlap-aware neural diarization model and a target-speaker voice activity detection (TS-VAD) model. Decoding and alignment strategies further improve SA-ASR performance. On the AliMeeting dataset, the proposed system achieves a 40.3% relative improvement over the baseline in concatenated minimum-permutation character error rate (cpCER) and ranks in the top three on the fixed sub-track.
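Serialized output training (SOT) trains a single decoder to emit the transcriptions of overlapping speakers one after another, ordered first-in, first-out by utterance start time and separated by a speaker-change token. The minimal Python sketch below illustrates how such a target string could be built, and how an SOT-style hypothesis could be split back into per-utterance chunks before being aligned with diarization output. The `Utterance` class, the functions `sot_target` and `split_hypothesis`, and the literal `<sc>` token are hypothetical names chosen for illustration; the paper's actual alignment procedure is not reproduced here.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical speaker-change token separating utterances in the serialized target.
SC = "<sc>"


@dataclass
class Utterance:
    speaker: str   # speaker label (not part of the serialized target)
    start: float   # utterance start time in seconds
    text: str      # transcription


def sot_target(utts: list[Utterance]) -> str:
    """Serialize overlapping utterances first-in, first-out by start time."""
    ordered = sorted(utts, key=lambda u: u.start)
    return f" {SC} ".join(u.text for u in ordered)


def split_hypothesis(hyp: str) -> list[str]:
    """Split an SOT-style hypothesis into per-utterance chunks, dropping empty ones."""
    return [chunk.strip() for chunk in hyp.split(SC) if chunk.strip()]


if __name__ == "__main__":
    utts = [Utterance("B", 1.2, "好的"), Utterance("A", 0.0, "今天开会")]
    target = sot_target(utts)
    print(target)                    # 今天开会 <sc> 好的
    print(split_hypothesis(target))  # ['今天开会', '好的']
```

Note that the split chunks carry no speaker identity: SOT only fixes the order of utterances, which is why its hypotheses must be matched against the diarization results to obtain speaker-attributed transcriptions.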

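Abstract (Chinese version, in English translation)

This paper presents the speaker-attributed automatic speech recognition (SA-ASR) system we submitted to the multi-channel multi-party meeting transcription (M2MeT2.0) challenge; the system aims to solve the “who spoke what” problem. Multi-speaker ASR transcriptions based on serialized output training are aligned with the speaker diarization results to obtain speaker-attributed transcriptions. A pre-trained multi-frame cross-channel attention (MFCCA) model is used as the ASR module. A cascade system, comprising a pre-trained speaker overlap-aware neural diarization model and a target-speaker voice activity detection model, is built as the speaker diarization module. Decoding and alignment strategies are used to further improve SA-ASR performance. On the AliMeeting dataset, the proposed system outperforms the baseline in concatenated minimum-permutation character error rate with a relative improvement of 40.3%, and ranks in the top three on the fixed (restricted-data) sub-track.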
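The concatenated minimum-permutation character error rate (cpCER) reported in the abstract can be sketched as follows: each speaker's utterances are concatenated into a single stream, every mapping between reference and hypothesis speakers is tried, and the smallest total character edit distance is divided by the number of reference characters. This is a minimal Python sketch under stated assumptions, not the official challenge scoring script: the function names `edit_distance` and `cpcer` are hypothetical, no text normalization is applied, missing speakers are padded with empty streams so that extra or missed speakers count as insertions or deletions, and permutations are brute-forced, which is only practical for small speaker counts such as the two to four speakers per AliMeeting session.

```python
from __future__ import annotations

from itertools import permutations


def edit_distance(ref: str, hyp: str) -> int:
    """Character-level Levenshtein distance (substitutions + deletions + insertions)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        cur = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cur[j] = min(
                prev[j] + 1,             # deletion of r
                cur[j - 1] + 1,          # insertion of h
                prev[j - 1] + (r != h),  # substitution, or match at no cost
            )
        prev = cur
    return prev[-1]


def cpcer(refs: dict[str, list[str]], hyps: dict[str, list[str]]) -> float:
    """Concatenated minimum-permutation CER over per-speaker utterance lists."""
    # Concatenate each speaker's utterances (assumed already in time order).
    ref_streams = ["".join(u) for u in refs.values()]
    hyp_streams = ["".join(u) for u in hyps.values()]
    # Pad with empty streams so extra or missed speakers count as insertions/deletions.
    n = max(len(ref_streams), len(hyp_streams))
    ref_streams += [""] * (n - len(ref_streams))
    hyp_streams += [""] * (n - len(hyp_streams))
    total_ref_chars = sum(len(r) for r in ref_streams)
    if total_ref_chars == 0:
        raise ValueError("reference contains no characters")
    # Try every speaker mapping and keep the one with the fewest total errors.
    best = min(
        sum(edit_distance(r, h) for r, h in zip(ref_streams, perm))
        for perm in permutations(hyp_streams)
    )
    return best / total_ref_chars


if __name__ == "__main__":
    refs = {"spk1": ["今天", "开会"], "spk2": ["好的"]}
    hyps = {"A": ["好"], "B": ["今天开"]}
    print(cpcer(refs, hyps))  # 0.333... (2 errors / 6 reference characters)
```

Because the hypothesis speaker labels are arbitrary, taking the minimum over permutations ensures the score reflects transcription and attribution errors rather than a mismatch between label names.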

Ethics declarations

Conflict of interest: The authors declare that they have no conflict of interest.

Additional information

Foundation item: the National Natural Science Foundation of China (No. 62101523), the Joint AI Laboratory of CMB-USTC (No. FTIT2022058), and the USTC Research Funds of the Double First-Class Initiative (No. YD2100002008)

About this article

Cite this article

Xu, L., Yan, H., He, M. et al. Multi-Frame Cross-Channel Attention and Speaker Diarization Based Speaker-Attributed Automatic Speech Recognition System for Multi-Channel Multi-Party Meeting Transcription. J. Shanghai Jiaotong Univ. (Sci.) (2024). https://doi.org/10.1007/s12204-024-2715-2

DOI: https://doi.org/10.1007/s12204-024-2715-2

Keywords

- multi-channel multi-party meeting transcription

- speaker-attributed automatic speech recognition (SA-ASR)

- serialized output training

- speaker diarization

- concatenated minimum-permutation character error rate