Abstract

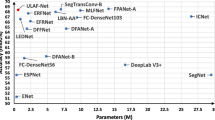

Moving object segmentation (MOS) is one of the essential functions of robot vision systems, including those of medical robots. Deep learning-based MOS methods, especially deep end-to-end MOS methods, are being actively investigated in this field. Foreground segmentation networks (FgSegNets) are representative deep end-to-end MOS methods proposed recently. This study explores a new mechanism to improve the spatial feature learning capability of FgSegNets while introducing relatively few additional parameters. Specifically, we propose an enhanced attention (EA) module, a parallel connection of an attention module and a lightweight enhancement module, which has sequential attention and residual attention as special cases. We also propose integrating EA with FgSegNet_v2 by using the lightweight convolutional block attention module (CBAM) as the attention module and plugging the EA module in after the two max-pooling layers of the encoder. The derived model is named FgSegNet_v2_EA. An ablation study verifies the effectiveness of the proposed EA module and integration strategy. Results on the CDnet2014 dataset, which depicts human activities and vehicles captured in different scenes, show that FgSegNet_v2_EA outperforms FgSegNet_v2 by 0.08% and 14.5% under scene-dependent and scene-independent evaluation, respectively, indicating that EA improves the spatial feature learning capability of FgSegNet_v2.
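The abstract describes the EA module only at the block-diagram level. The pure-Python sketch below assumes that the "parallel connection" is an element-wise sum, EA(x) = A(x) + E(x). Under that assumption the two special cases follow directly: an enhancement that outputs zero gives sequential attention A(x), and an identity enhancement gives residual attention x + A(x). The attention module is a simplified CBAM (channel attention followed by spatial attention, with the shared MLP and the 7×7 convolution omitted). The enhancement module `scale_enhancement` (one scale per channel) is a hypothetical stand-in. None of these function names come from the paper.

```python
import math
from typing import Callable, List

# Feature map stored as nested lists indexed [channel][row][col].
FeatureMap = List[List[List[float]]]
Module = Callable[[FeatureMap], FeatureMap]


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def channel_attention(x: FeatureMap) -> FeatureMap:
    # Simplified CBAM channel attention: real CBAM passes the spatially
    # average-pooled and max-pooled descriptors through a shared MLP.
    # Here the MLP is omitted, so each channel is scaled by sigmoid(avg + max).
    out = []
    for ch in x:
        values = [v for row in ch for v in row]
        weight = sigmoid(sum(values) / len(values) + max(values))
        out.append([[weight * v for v in row] for row in ch])
    return out


def spatial_attention(x: FeatureMap) -> FeatureMap:
    # Simplified CBAM spatial attention: real CBAM applies a 7x7 convolution
    # to the channel-wise average and max maps. Here that convolution is
    # replaced by a plain sum, giving sigmoid(avg + max) at each pixel.
    height, width = len(x[0]), len(x[0][0])
    weights = [[0.0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            column = [ch[i][j] for ch in x]
            weights[i][j] = sigmoid(sum(column) / len(column) + max(column))
    return [[[weights[i][j] * ch[i][j] for j in range(width)]
             for i in range(height)] for ch in x]


def cbam_lite(x: FeatureMap) -> FeatureMap:
    # CBAM applies channel attention first, then spatial attention.
    return spatial_attention(channel_attention(x))


def scale_enhancement(gammas: List[float]) -> Module:
    # Hypothetical lightweight enhancement: one scale per channel,
    # i.e. only C extra parameters for a C-channel feature map.
    def enhance(x: FeatureMap) -> FeatureMap:
        return [[[g * v for v in row] for row in ch] for g, ch in zip(gammas, x)]
    return enhance


def enhanced_attention(x: FeatureMap, attention: Module,
                       enhancement: Module) -> FeatureMap:
    # Parallel connection, assumed here to be an element-wise sum:
    # EA(x) = attention(x) + enhancement(x).
    a, e = attention(x), enhancement(x)
    return [[[av + ev for av, ev in zip(ar, er)] for ar, er in zip(ac, ec)]
            for ac, ec in zip(a, e)]


x = [[[1.0, -2.0], [0.5, 3.0]],
     [[0.0, 1.0], [-1.0, 2.0]]]
# gammas = 0 reduces EA to cbam_lite(x) (sequential attention).
sequential = enhanced_attention(x, cbam_lite, scale_enhancement([0.0, 0.0]))
# gammas = 1 reduces EA to x + cbam_lite(x) (residual attention).
residual = enhanced_attention(x, cbam_lite, scale_enhancement([1.0, 1.0]))
```

Intermediate `gammas` values interpolate between the two special cases at a cost of one parameter per channel, which is one way to read the abstract's "relatively few additional parameters". According to the abstract, FgSegNet_v2_EA places the EA module after each of the encoder's two max-pooling layers. A practical version would be written in a deep learning framework with learned MLP, convolution, and enhancement weights.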

Additional information

Foundation item: the National Natural Science Foundation of China (No. 61702323)

About this article

Cite this article

Jiang, R., Zhu, R., Cai, X. et al. Foreground Segmentation Network with Enhanced Attention. J. Shanghai Jiaotong Univ. (Sci.) 28, 360–369 (2023). https://doi.org/10.1007/s12204-023-2603-1

DOI: https://doi.org/10.1007/s12204-023-2603-1

Key words

- human-computer interaction

- moving object segmentation

- foreground segmentation network

- enhanced attention

- convolutional block attention module