Abstract

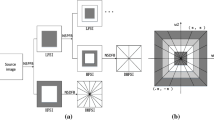

To enhance the contrast of the fused image and reduce the loss of fine detail during image fusion, a novel fusion algorithm for infrared and visible images is proposed. First, regions of interest (RoIs) are detected in the two source images using a saliency map. Then, the nonsubsampled contourlet transform (NSCT) is applied to both the infrared image and the visible image, decomposing each into one low-frequency sub-band and a number of high-frequency sub-bands. Next, the coefficients of all sub-bands are classified into four categories according to the RoI detection result: the region of interest in the low-frequency sub-band (LSRoI), the region of interest in the high-frequency sub-band (HSRoI), the region of non-interest in the low-frequency sub-band (LSNRoI) and the region of non-interest in the high-frequency sub-band (HSNRoI). A fusion rule is customized for each category of coefficients, and the fused image is obtained by applying the inverse NSCT to the fused coefficients. Experimental results show that the proposed fusion scheme outperforms the other fusion algorithms compared, in both visual quality and quantitative metrics.
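
The four-way classification of coefficients can be illustrated with a short sketch. It assumes the NSCT sub-bands have already been computed by some external routine and, because the transform is nonsubsampled, that every sub-band has the same size as the source images, so a single boolean RoI mask can index all of them. The function name `fuse_by_region`, the single combined mask, and all four per-category rules below are placeholders chosen for illustration; the abstract does not state the actual fusion rules.

```python
import numpy as np

def fuse_by_region(ir_low, vis_low, ir_highs, vis_highs, roi_mask):
    """Fuse NSCT-style sub-bands with one rule per coefficient category.

    ir_low, vis_low     : low-frequency sub-bands (2-D arrays, same shape)
    ir_highs, vis_highs : lists of high-frequency sub-bands (same shape)
    roi_mask            : boolean array, True inside the region of interest

    All four rules are hypothetical placeholders, not the paper's rules.
    """
    roi = np.asarray(roi_mask, dtype=bool)

    # LSRoI (assumed rule): keep the infrared low-frequency coefficient.
    # LSNRoI (assumed rule): average the two low-frequency coefficients.
    fused_low = np.where(roi, ir_low, 0.5 * (ir_low + vis_low))

    fused_highs = []
    for h_ir, h_vis in zip(ir_highs, vis_highs):
        # HSRoI (assumed rule): keep the coefficient with larger magnitude.
        max_abs = np.where(np.abs(h_ir) >= np.abs(h_vis), h_ir, h_vis)
        # HSNRoI (assumed rule): keep the visible-image coefficient.
        fused_highs.append(np.where(roi, max_abs, h_vis))

    return fused_low, fused_highs
```

The fused sub-bands returned here would then be passed to an inverse NSCT to reconstruct the fused image; that step is omitted because it depends on the transform implementation in use.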

Additional information

Foundation item: the National Natural Science Foundation of China (No. 61105022), the Research Fund for the Doctoral Program of Higher Education of China (No. 20110073120028), and the Jiangsu Provincial Natural Science Foundation (No. BK2012296)

About this article

Cite this article

Liu, Hx., Zhu, Th. & Zhao, Jj. Infrared and visible image fusion based on region of interest detection and nonsubsampled contourlet transform. J. Shanghai Jiaotong Univ. (Sci.) 18, 526–534 (2013). https://doi.org/10.1007/s12204-013-1437-7

DOI: https://doi.org/10.1007/s12204-013-1437-7