Abstract

This research is a part of a broader project exploring how movement qualities can be recognized by means of the auditory channel: can we perceive an expressive full-body movement quality by means of its interactive sonification? The paper presents a sonification framework and an experiment to evaluate if embodied sonic training (i.e., experiencing interactive sonification of your own body movements) increases the recognition of such qualities through the auditory channel only, compared to a non-embodied sonic training condition. We focus on the sonification of two mid-level movement qualities: fragility and lightness. We base our sonification models, described in the first part, on the assumption that specific compounds of spectral features of a sound can contribute to the cross-modal perception of a specific movement quality. The experiment, described in the second part, involved 40 participants divided into two groups (embodied sonic training vs. no training). Participants were asked to report the level of lightness and fragility they perceived in 20 audio stimuli generated using the proposed sonification models. Results show that (1) both expressive qualities were correctly recognized from the audio stimuli, (2) a positive effect of embodied sonic training was observed for fragility but not for lightness. The paper is concluded by the description of the artistic performance that took place in 2017 in Genoa (Italy), in which the outcomes of the presented experiment were exploited.

1 Introduction

Interactive sonification of human movement has been receiving growing interest from both researchers and industry (e.g., see [14, 22], and the ISon Workshop series). The work presented in this paper was part of the European Union H2020 ICT Dance Project, which aimed at developing techniques for the real-time analysis of movement qualities and their translation to the auditory channel. Applications of the project’s outcome include systems for visually impaired and blindfolded people allowing them to “see” the qualities of movement through the auditory channel. Dance adopted a participative interaction design involving artists, with particular reference to composers, choreographers and dancers. One of its outcomes was the artistic project “Atlante del Gesto”, realized in collaboration with the choreographer Virgilio Sieni, which took place in Genoa in the first part of 2017.

Expressive movement sonification is the process of translating a movement into a sound that “evokes” some of the movement’s expressive characteristics. It can be applied in the design of multimodal interfaces enabling users to exploit non-verbal full-body movement expressivity in communication and social interaction. In this work, sonification models are inspired by several sources, including [10, 11], the analysis of literature in cinema soundtracks [2] and research in cross-modality [34]. The first part of the paper presents the sonification of two expressive movement qualities, lightness and fragility, studied in the Dance Project. These two qualities are taken from the middle level of the framework defined in [8]. They involve full-body movements analyzed in time windows going from 0.5 to 5 s. The second part describes an experiment evaluating the role of embodied sonic training (i.e., experiencing interactive sonification of your own body movements) on the recognition of such qualities from their sonification.

The rest of the paper is organized as follows: after illustrating the related work in Sect. 2, definitions and computational models of lightness and fragility are described in Sect. 3, while the corresponding sonification models are presented in Sect. 4. In Sects. 5 and 6 we describe the experiment and its results. Section 7 is dedicated to the description of an artistic performance based on the interactive sonification framework. We conclude the paper in Sect. 8.

2 Related work

The design of sonifications able to effectively communicate expressive qualities of movement (as a sort of “translation” from the visual to the auditory modality) is an interesting open research challenge with a wide range of applications in therapy and rehabilitation [6, 33], sport [15, 23], education [19] and human–machine interfaces [3].

Several studies (e.g., [9, 14, 18, 23]) investigated how to translate movement into the auditory domain, and a number of possible associations between sound, gestures and movement trajectories were proposed. For instance, Kolykhalova et al. [27] developed a serious game platform for validating mappings between human movements and sonification parameters. Singh et al. [33] and Vogt et al. [36] applied sonification in rehabilitation. The former paper investigates how sound feedback can motivate and affect body perception during rehabilitation sessions for patients suffering from chronic back pain. The latter presents a movement-to-sound mapping system for patients with arm motor disabilities.

Dance is a physical activity involving non-functional movements and gestures conveying an expressive content (e.g., an emotional state). Table 1 reports a list of existing studies on sonification techniques for dance. Many of them, e.g., [1, 5, 25, 26, 31], only considered low-level movement features (i.e., at the level of motion capture data, wearable sensors, video, and so on) and mapped them into sound. Studies that proposed sonification models to translate higher-level movement features are less common. Some, e.g., [12, 16, 17], focus on the sonification of Effort qualities from the Laban movement analysis (LMA) system [28]. Camurri et al. [7] proposed an interactive sonification system to support the process of learning specific movement qualities such as dynamic symmetry.

The majority of the existing studies validated their sonifications using post-experiment questionnaires only. In our work, we additionally analyze the spectral characteristics of the sounds generated by the sonification models.

3 Analysis of movement: lightness and fragility

In [8] Camurri et al. introduced a multi-layered conceptual framework modeling human movement quality. The first layer (called “physical”) includes low-level features computed frame-by-frame, while higher-level layers include features computed at larger temporal scales. In the presented work we focus on two mid-level features: lightness and fragility. This choice is motivated by two reasons: (1) they both contribute to expressive communication and (2) they clearly differ in terms of motor planning. While Fragility is characterized by irregular and unpredictable interruptions of the motor plan, Lightness is a continuous, smooth execution of a fixed motor plan. A recent study by Vaessen et al. [35] confirms these peculiarities and differences also in terms of brain response in fMRI data (this study involved participants observing Light vs. Fragile dance performances).

In the paper, we adopt the perspective of an observer of the movements (e.g., the audience during the performance) and we do not focus on the intentions of the performer. An observer usually does not give the same importance to all the movements s/he can see. Indeed, mid-level features are perceived at particular, salient moments. Therefore, their computational model follows the same principle: we compute the low-level features first, then we evaluate their saliency, and the mid-level feature is detected as a result of the application of saliency algorithms.

Fig. 1 An example of Rarity computed on the feature X: (a) values of X on 1000 frames and the corresponding values of Rarity computed on a 100-frame sliding window; (b) histogram for the data segment S1 and the bin containing the value of X at frame 400 (red arrow); (c) histogram of the data segment S2 and the bin containing the value of X at frame 463 (red arrow)

3.1 Lightness

A full-body movement is perceived by an observer as light if at least one of the following conditions occurs:

- the movement has a low amount of downward vertical acceleration,
- the movement of a single body part has a high amount of downward vertical acceleration that is counterbalanced by a simultaneous upward acceleration of another part of the body (for example, the fall of an arm is simultaneously counterbalanced by the raising of a knee),
- a movement starting with significant downward vertical acceleration of a single body part is resolved into the horizontal plane, typically through a spiral movement (i.e., rotating the velocity vector from the vertical to the horizontal plane).

An example of a dancer moving with a prevalence of Lightness can be seen at:

The low-level movement features Weight Index and Motion Index are used to compute Lightness. Weight Index (of a body part) models verticality of movement and is computed as the ratio between the vertical component of kinetic energy and the total (i.e., all the directions) energy. Then, full-body Weight Index is computed as average of the Weight Index of all body parts. Motion Index models the overall amount of full-body kinetic energy.
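The two low-level features can be illustrated with a minimal Python sketch. It assumes that a velocity vector is available for each tracked body part and that the third component is the vertical one; the function names, the handling of motionless parts and the use of per-part masses are illustrative assumptions, not details taken from the framework.

```python
from typing import Sequence, Tuple

Velocity = Tuple[float, float, float]  # (vx, vy, vz); vz is ASSUMED to be vertical


def weight_index(v: Velocity, eps: float = 1e-9) -> float:
    """Vertical kinetic energy divided by total kinetic energy of one body part.

    The mass of the body part cancels out in the ratio, so only velocities
    are needed here.
    """
    vx, vy, vz = v
    total = vx * vx + vy * vy + vz * vz
    if total < eps:
        return 0.0  # assumption: a motionless part contributes no verticality
    return (vz * vz) / total


def full_body_weight_index(velocities: Sequence[Velocity]) -> float:
    """Average of the per-part Weight Index over all body parts."""
    return sum(weight_index(v) for v in velocities) / len(velocities)


def motion_index(velocities: Sequence[Velocity], masses: Sequence[float]) -> float:
    """Overall full-body kinetic energy (masses are hypothetical inputs)."""
    return sum(
        0.5 * m * (vx * vx + vy * vy + vz * vz)
        for m, (vx, vy, vz) in zip(masses, velocities)
    )
```

A purely vertical movement of a body part gives a Weight Index of 1, and a purely horizontal one gives 0.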

To compute Lightness, we additionally need an approximated measure of saliency of the Weight Index. Several computational models of saliency exist in the literature, e.g., [13, 21, 30], but they are computationally demanding. We propose to model saliency using a simple analysis primitive, that we call Rarity.

Rarity is an analysis primitive that can be computed on any movement feature X. The idea is to consider the histogram of X and to estimate the “distance” between the bin in which lies the current value of X and the bin corresponding to the most frequently occurring values of X in the “past”.

Given the time series \(x = x_1,\ldots ,x_n\) of n observations of movement feature X (\(x_n\) is the latest observation), Rarity is computed as follows:

- we compute \(\mathit{Hist}_X\), the histogram of X, considering \(\sqrt{n}\) equally spaced intervals; we call \(\mathit{occ}_i\) the number of occurrences in interval i (\(i=1,\ldots ,\sqrt{n}\)) of the elements of x,
- let \(i_{\mathit{MAX}}\) be the interval corresponding to the highest bin (i.e., the bin with the highest number of occurrences), and let \(\mathit{occ}_{\mathit{MAX}}\) be the number of occurrences in interval \(i_{\mathit{MAX}}\),
- let \(i_n\) be the interval to which \(x_n\) belongs, and let \(\mathit{occ}_n\) be the number of occurrences in \(i_n\),
- we compute \(D1 =|i_{\mathit{MAX}} - i_{n}|\),
- we compute \(D2 = \mathit{occ}_{\mathit{MAX}} - \mathit{occ}_{n}\),
- we compute Rarity as \(D1 \cdot D2 \cdot \alpha \), where \(\alpha \) is a constant positive real normalization factor.
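The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the number of bins is the integer part of \(\sqrt{n}\), ties between equally high bins are resolved by taking the first one, and a constant signal is given Rarity 0. None of these choices is specified in the text.

```python
import math
from typing import Sequence


def rarity(x: Sequence[float], alpha: float = 1.0) -> float:
    """Rarity of the latest observation x[-1] with respect to the window x."""
    n = len(x)
    if n == 0:
        raise ValueError("x must contain at least one observation")
    n_bins = max(1, math.isqrt(n))  # assumption: floor(sqrt(n)) bins
    lo, hi = min(x), max(x)
    if hi == lo:
        return 0.0  # assumption: a constant signal has no rare values
    width = (hi - lo) / n_bins

    def bin_of(value: float) -> int:
        # The maximum value is clamped into the last bin.
        return min(int((value - lo) / width), n_bins - 1)

    occ = [0] * n_bins
    for value in x:
        occ[bin_of(value)] += 1

    i_max = occ.index(max(occ))  # first highest bin on ties (assumption)
    i_n = bin_of(x[-1])          # bin of the current (latest) value
    d1 = abs(i_max - i_n)        # distance between bins
    d2 = occ[i_max] - occ[i_n]   # difference in occurrences
    return d1 * d2 * alpha
```

For a sliding-window use, the function would be called on the most recent frames only, e.g. `rarity(weight_index_history[-100:])`, where `weight_index_history` is a hypothetical list of past Weight Index values.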

An example of Rarity computation is illustrated in Fig. 1. Figure 1a shows 1000 consecutive observations of X (dotted red line) and the corresponding values of Rarity (continuous blue line). Next, two histograms corresponding to two data segments S1 and S2 are shown in Fig. 1b, c, respectively. Segment S1 starts at frame 301 and ends at frame 400, while segment S2 starts at frame 364 and ends at frame 463. The value of X at frame 400 is 0.01 and at frame 463 is 0.85. Both histograms show the distances between the highest bin and the one in which the “current” value of X lies in (see the red arrow), i.e., the bins containing the values 0.01 (Fig. 1b) and 0.85 (Fig. 1c). In the case of segment S1 (Fig. 1b) the distance is small and consequently the value of Rarity at frame 400 is very low. In the case of segment S2 (Fig. 1c) the distance is high and the corresponding value of Rarity at frame 463 is very high.

Rarity is applied in our case to the Weight Index, and is computed on a time window of 100 frames. The rarely appearing values of Weight Index are more salient compared to frequent values. Lightness is high when Weight Index is low and Rarity is high.

3.2 Fragility

The low-level components of Fragility are Upper Body Crack and Leg Release:

- Upper Body Crack is an isolated discontinuity in movement, due to a sudden interruption and change of the motor plan, typically occurring in the upper body;
- Leg Release is a sudden, small but abrupt, downward movement of the hip and knee.

Fragility emerges when a salient non-periodic sequence of Upper Body Cracks and/or Leg Releases occurs. For example, moving at the boundary between balance and fall results in a series of short non-periodic movements with frequent interruptions and re-planning. An example of a dancer moving with a prevalence of Fragility can be seen at:

To compute the value of Fragility, the occurrences of upper body cracks and leg releases are detected first. Upper body cracks are computed by measuring synchronous abrupt variations of the hands' accelerations. Leg releases are computed by detecting synchronous abrupt variations in the vertical component of the hips' acceleration. Next, the analysis primitive Regularity is computed on the occurrences of upper body cracks and leg releases. Regularity determines whether or not these occurrences appear at non-equally spaced times. Fragility is detected in correspondence with non-regular sequences of upper body cracks and leg releases.

In detail, Regularity is an analysis primitive that can be applied to any binary movement feature Y, that is \(Y\in \{0,1\}\), where the value 1 represents an event occurrence (e.g., an upper body crack or a leg release). Given the time series \(y=y_1,\ldots ,y_n\) of n observations of Y in the time window T, Regularity is computed as follows:

- for each pair of consecutive events (i.e., for each \((y_i,y_j)\) with \(i<j\), \(y_i=y_j=1\) and no event between them) we compute the distance \(d_k=j-i\), with \(k = 1,\ldots ,K\), where K is the number of such pairs,
- we compute the maximum and minimum event distances: \(M=\max (d_k)\), \(m=\min (d_k)\),
- we check whether or not \(M-m<\tau \), where \(\tau \) is a predefined tolerance value; if M and m are equal within the tolerance \(\tau \) (i.e., the events are equally spaced), then Regularity is 1; otherwise Regularity is 0.

In our case regularity is computed on a sliding window of 50 frames and the value of fragility is 1 when the corresponding value of Regularity is 0.
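The Regularity primitive and its link to Fragility can be sketched as follows. The sketch takes Regularity to be 1 for (approximately) equally spaced events and 0 otherwise, so that Fragility is raised for non-regular sequences, as stated in the description of the feature; this reading, and the choice to treat windows with fewer than two events as regular, are assumptions.

```python
from typing import List, Sequence


def event_distances(y: Sequence[int]) -> List[int]:
    """Distances (in frames) between consecutive events, i.e. samples equal to 1."""
    idx = [i for i, value in enumerate(y) if value == 1]
    return [b - a for a, b in zip(idx, idx[1:])]


def regularity(y: Sequence[int], tau: int) -> int:
    """1 if the events in y are equally spaced within tolerance tau, else 0."""
    d = event_distances(y)
    if not d:
        return 1  # assumption: fewer than two events cannot be irregular
    return 1 if max(d) - min(d) < tau else 0


def fragility(y: Sequence[int], tau: int) -> int:
    """Fragility is 1 when the event sequence in the window is not regular."""
    return 1 if regularity(y, tau) == 0 else 0
```

With events at frames 0, 1, 5 and 7, the distances are 1, 4 and 2, so for `tau = 2` the window is non-regular and `fragility` returns 1.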

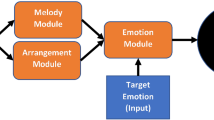

4 Sonification framework

The sonification framework is illustrated in Fig. 2. The left side of the figure shows the low- and mid-level movement features described in the previous section.

Following the approach described in [2, 27] for the fluidity mid-level feature, we created a sonification model for lightness and fragility based on the following assumption: specific compounds of spectral features in a sound are cross-modally convergent with a specific movement quality.

In particular, when considering the sonification of lightness:

- (at low temporal scale) sonification has high spectral smoothness and high spectral centroid; these conditions are necessary but not sufficient: we are currently investigating other features as well, such as auditory roughness and spectral skewness;
- (at higher temporal scale) we use the metaphor of a very small object (e.g., a small feather) floating in the air, surrounded by gentle air currents. Such an object would move gradually and slowly, without impacts or sudden changes of direction. It is implemented as a sound with predictable and slowly varying timbral evolutions, and a pitch/centroid that rises when excited, and falls down very slowly in absence of excitation. Additionally, if a descending pitch/centroid is present, it needs to be counterbalanced by a parallel ascending sound of comparable energy range.

The (necessary but not sufficient) conditions for the sonification of Fragility are the following:

- (at low temporal scale) sonification has low spectral smoothness and high spectral centroid;
- (at higher temporal scale) we use sounds that are sudden and non-periodic, and which contain non-predictable discontinuities and frequent silence breaks.

Following these design guidelines, we implemented sonifications for the two qualities, described in the following two subsections. A more detailed description of the sonification framework is available as the Supplementary Material.

4.1 Implementation of the sonification of lightness

The concept underlying the sonification of Lightness is the following: the sound can be imagined as the production of external (to the full-body) soft and light elements, gently pushed away in all directions by the body movement, via an invisible medium, like air, wind, breath. Similar approaches were discussed in [10, 11, 34]. Additionally, Lightness is a “bipolar” feature (Light/Heavy): certain sounds are generated for highly light movements, and some other sounds appear when the movement displays very low Lightness. At intermediate values of Lightness, sounds might be almost inaudible, or even absent.

The sonification of very light movements (bottom-right part of Fig. 2) is realized using a technique loosely inspired by swarming systems (as described by Blackwell [4]), adopted to give the impression of hearing autonomous elements in the sonification. Thirty-two identical audio-agents (each implementing a filtered white noise engine and a triangular wave playback engine) are connected in a feedback chain: the last agent of the chain is connected to the first, creating a data feedback loop. The feedback chain reacts to the Weight Index parameter with changes in spectral centroid and ADSR envelope. The ADSR settings are designed to produce slow attack/release, overlapping, and smooth textures. The agents’ output level is controlled by the Lightness parameter (see details in the Supplementary Material). The overall sonic behavior of this architecture evokes a continuum of breathing, airy and whispery events, like short bouts of wind or air through pipes. When the Weight Index is low, the sounds react by slowly jumping towards a wide range of high-pitched zones. If the Weight Index increases, the sounds start gently but quickly step down to a narrow low pitch and fade out. If the Weight Index reaches its maximum levels (the movement is not light), the agents are not audible, and they give way to the sonification of the loss of Lightness.

The sonification of movements characterized by very low Lightness is made with a patch based on a granulator. Its buffer is a continuous, low-pitched sound, slightly varying in amplitude and timbral color. The Weight Index and Motion Index parameters are also used to control the granulator. The Weight Index parameter controls the granulator window size in a subtle way (to give the sound a natural instability and variability) and, more consistently, the pitch randomness: the timbre is more static for low Lightness movements. When the movement starts to be only slightly more Light, the sound starts to randomly oscillate in pitch. At the same time, the Weight Index parameter also controls the overall output level of this part of the sonification patch: when the Weight Index decreases even slightly, the output level of this module starts to fade out. The general impression is that low Lightness movements trigger static and loud sounds, while slightly more Light movements trigger unstable and disappearing sounds.

4.2 Implementation of the sonification of fragility

Fragile movements are spatially fractured and incoherent. For this reason, the sonification of Fragility is realized with short (between 100 and 1000 ms) clusters of crackling (hence with low spectral smoothness) noises. As illustrated in the top-right part of Fig. 2, we used four sample playback engines to create a stream of very short, partially overlapping sound clusters. The nature of the sound cluster is critical in our model: we recorded selected and isolated manipulations of different physical objects close to their breaking point. We chose light metal objects, dry leaves, small tree branches, wood sticks. Each sample (having a duration between 500 and 1000 ms) has a particular morphology, exhibiting isolated small events (e.g., loud cracks, which last between 50 and 100 ms) and other less important small cracklings interleaved with silence. The physical size of the objects we recorded is small, to ensure a high sound centroid. Each time Fragility emerges, the playback engine randomly selects portions of the recorded sound (between 100 and 200 ms) to be played back.
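The random selection of sample portions can be illustrated with a short Python sketch. It only models the choice of which portion to play, not the audio playback itself; the function name, the millisecond resolution and the uniform distribution are assumptions made for illustration.

```python
import random
from typing import Tuple


def pick_portion(
    sample_ms: int,
    rng: random.Random,
    min_ms: int = 100,
    max_ms: int = 200,
) -> Tuple[int, int]:
    """Return (start_ms, length_ms) of a random portion of a recorded sample.

    sample_ms is the duration of the recorded sample (500 to 1000 ms in the
    text); the portion length is drawn uniformly between min_ms and max_ms.
    """
    length = rng.randint(min_ms, min(max_ms, sample_ms))
    start = rng.randint(0, sample_ms - length)  # portion must fit in the sample
    return start, length


# Hypothetical usage: one portion is chosen each time Fragility emerges.
rng = random.Random(42)
start_ms, length_ms = pick_portion(sample_ms=800, rng=rng)
```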

Fig. 4 The experiment. Phase 1: preparation of the auditory stimuli; Phase 2: preparation and training of the participants, and rating of the auditory stimuli. The sonification framework is explained in detail in Fig. 2

4.3 Sonification example

Figure 3 shows the spectral analysis of lightness and fragility sonifications corresponding to 35 s of movement data. Centroid and Smoothness plots were generated with Sonic Visualiser. The audio material used to generate the plots in Fig. 3a, c is the sound output of the main patch, fed with a stream of data simulating very Fragile movements, whereas the plots in Fig. 3b, d were generated by simulating very Light movements.

We decided to artificially generate sonification examples of Fragility and Lightness that were sufficiently long to perform the analysis, as it would be difficult to obtain similarly long sequences from real dancers’ data. For the Fragility feature, the data consisted of a sequence of integers (a single 1 followed by several zeros for about 20 ms), randomly distributed (5–15 events in windows of 5 s). For the Lightness feature, we fed the sonification model with a constant value corresponding to the minimum of Weight Index. To increase the length of the audio segments, we deactivated the amplitude controller linked to the Lightness parameter, to prevent the audio-agents from fading out.
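A synthetic Fragility stream of this kind could be generated as in the following sketch. It assumes a frame rate of 50 frames per second (so that one frame lasts 20 ms), non-overlapping 5 s windows and uniformly random event positions; these details and the function name are illustrative assumptions.

```python
import random
from typing import List, Optional


def synthetic_fragility_stream(
    duration_s: int = 35,
    fps: int = 50,           # assumption: one frame = 20 ms
    window_s: int = 5,
    min_events: int = 5,
    max_events: int = 15,
    seed: Optional[int] = None,
) -> List[int]:
    """Binary stream with 5 to 15 randomly placed events in each 5 s window."""
    rng = random.Random(seed)
    frames_per_window = window_s * fps
    stream: List[int] = []
    for _ in range(duration_s // window_s):
        window = [0] * frames_per_window
        n_events = rng.randint(min_events, max_events)
        # Draw distinct frame positions so that events never coincide.
        for pos in rng.sample(range(frames_per_window), n_events):
            window[pos] = 1
        stream.extend(window)
    return stream
```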

In the figure, the spectral analysis of Lightness confirms the expected sonification design guidelines described in the previous section (high spectral smoothness and high spectral centroid in correspondence with high Lightness values). The analysis of Fragility also confirms a low spectral smoothness, and high spectral centroid. Please note that the graph of “Fragility spectral smoothness” shows very low values associated with the Fragility sounds alternated with higher values associated with the silences between the sounds.
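For reference, the spectral centroid of a single analysis frame is commonly defined as the magnitude-weighted mean frequency of its spectrum. The sketch below uses this textbook definition; it is not claimed to reproduce the exact computation of the analysis tool used for Fig. 3, and the convention of returning 0 for a silent frame is an assumption.

```python
from typing import Sequence


def spectral_centroid(freqs_hz: Sequence[float], magnitudes: Sequence[float]) -> float:
    """Magnitude-weighted mean frequency of one spectral frame."""
    if len(freqs_hz) != len(magnitudes):
        raise ValueError("freqs_hz and magnitudes must have the same length")
    total = sum(magnitudes)
    if total == 0:
        return 0.0  # assumption: a silent frame has centroid 0
    return sum(f * m for f, m in zip(freqs_hz, magnitudes)) / total
```

A frame whose energy is concentrated in the upper bins yields a high centroid, which is the property the design guidelines require for both Lightness and Fragility.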

5 Experiment

We now present the experiment we conducted to study (i) whether it is possible to communicate mid-level expressive movement features by means of sonification and (ii) whether embodied sonic training improves the recognition of the movement features. We asked a group of people to rate the perceived level of expressive movement qualities from the generated audio stimuli only. Half of the participants performed an embodied sonic training, which consisted of experiencing the real-time translation of their own movement into the sonification of lightness and fragility. We expected this experience to improve the participants’ understanding of the association between the two movement qualities and the corresponding sonifications, and thus the recognition rate.

To maintain ecological validity, we used short extracts of real dance performances to generate the sonifications used as stimuli.

To sum up, we address the following questions, referred to as hypotheses H1 and H2:

- H1 Can an expressive feature be communicated only by means of an a priori unknown sonification?
- H2 Does a preliminary embodied sonic training influence the perception of the expressive quality from the sonifications?

5.1 Phase 1: Preparation of the auditory stimuli

The top part of Fig. 4 illustrates the process going from the creation of the movement segments to the generation of the corresponding sonification.

Twenty segments, lasting about 10 s each and split into two subsets (ten displaying Lightness and ten displaying Fragility), were chosen from a larger dataset of about 150 movement segments [32] by 4 experts (i.e., professional dancers and movement experts). In the remainder of this paper we will use the label Lightness Segments (LS) to describe the segments that contain, according to the experts, full-body expression of Lightness, and Fragility Segments (FS) to describe the segments that contain full-body expression of Fragility.

The selected 20 segments exhibit, according to the 4 experts, a clear prevalence of one of the two movement qualities. Therefore, the stimuli do not cover the whole range of values of a quality. Since the objective of the experiment is to demonstrate that participants are able to recognize these two qualities from sonification only, we did not include stimuli containing the simultaneous absence of both qualities.

The data used for the sonifications consists of the values of IMU sensors (x-OSC) placed on the dancer’s wrists and ankles, captured at 50 frames per second. Each sensor frame consists of 9 values: the values of the accelerometer, gyroscope, and magnetometer on the three axes (x, y, z).
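The structure of one sensor frame can be made explicit with a small Python sketch. The type and field names are hypothetical, and the assumption of four sensors (one per wrist and ankle) is inferred from the description rather than stated.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z)

FRAME_RATE_HZ = 50
# Assumption: one sensor on each wrist and each ankle.
SENSOR_POSITIONS = ("left_wrist", "right_wrist", "left_ankle", "right_ankle")


@dataclass(frozen=True)
class ImuFrame:
    """One frame of a single IMU sensor: 3 axes for each of 3 sensing units."""
    accelerometer: Vec3
    gyroscope: Vec3
    magnetometer: Vec3

    def values(self) -> Tuple[float, ...]:
        """The 9 raw values of the frame, in a fixed order."""
        return self.accelerometer + self.gyroscope + self.magnetometer


def frames_per_segment(duration_s: float, fps: int = FRAME_RATE_HZ) -> int:
    """Number of frames per sensor in a segment of the given duration."""
    return round(duration_s * fps)
```

Under these assumptions, a segment of about 10 s corresponds to roughly 500 frames per sensor.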

Technically, to generate the audio stimuli, the low-level features (i.e., Weight Index, Motion Index, Upper Body Crack and Leg Release) as well as the mid-level features (i.e., lightness and fragility) were computed using EyesWeb XMI on pre-recorded IMU data of the dancer and sent to Max MSP3, running a patch implementing the lightness and fragility sonifications. It is worth noting that the whole sonification framework, including the two sub-patches (for Fragility and Lightness), was always present in the generation of the audio. The prevalence of one of the movement qualities causes the prevalence of the corresponding sonification. For example, in a few experiment stimuli, small components of Lightness can also be heard in Fragility segments (e.g., during pauses or silence between cracks). Examples of the resulting sonifications of Fragility and Lightness can be heard in the following video: https://youtu.be/9FnBj_f6HdQ

All 20 sonifications were uploaded as a part of the Supplementary Material.

5.2 Phase 2: Preparation and training of the participants

Forty persons were invited to our laboratory to participate in the experiment. We divided them into two groups:

- Group N (non-sonic embodiment) did not participate in the embodied sonic training;
- Group E (sonic embodiment) experienced the sonifications by performing the movements and immediately listening to the corresponding sounds (i.e., embodied sonic training).

Group N was composed of twenty persons (18 females): thirteen had some prior experience with dance (twelve at amateur level and one being a professional dancer); six had some prior experience with music creation (four at amateur level and two being professionals); seven declared not to have any particular experience in either of the two domains.

Similarly, Group E was also composed of twenty persons (18 females): nineteen had some prior experience with dance (thirteen at amateur and six at professional level); thirteen had some prior experience with music creation (nine at amateur level and four being professionals); one declared not to have any experience in either of the two domains.

The experiment procedure is illustrated in the bottom part of Fig. 4.

- Part A: Before starting the experiment, the two expressive qualities of movement were explained to all participants (Group E and Group N), who also watched video examples of performances by professional dancers expressing both qualities. To better understand the two qualities, the participants were also asked to rehearse (under the supervision of a professional dancer) some movements displaying these two expressive qualities.
- Part B: Next, each participant of Group E wore the sensor system consisting of IMUs and performed, under the supervision of the professional dancer, some movements displaying these two expressive qualities. When performing movements with the requested qualities, the participant could experience the sonification of their own moving body. The duration of the training session was around 10 min.
- Part C: Subsequently, all the participants (Group E and Group N) were asked to fill in personal questionnaires. Next, they were played the 20 audio stimuli (see Sect. 4). For each audio segment, they were asked to rate the global level of Fragility and Lightness they perceived using two independent 5-point Likert scales (from “absent” to “very high”). We used two separate rating scales for these two qualities, and participants were not informed that only one quality was present in each stimulus. Thus, they could also report that either or both qualities were present in the played stimulus.

Neither the word “Fragility” nor “Lightness” was pronounced by the experimenters during Parts A and B of the experiment, to avoid the possibility that these labels might influence the participants’ training.

The audio segments were played in random order using a Latin Square Design for randomization. Each audio segment was played once. Once the participants had rated an audio segment, they could not change their answer, go back to a previous audio segment, or skip any of the audio segments. At no time during the experiment could the participants see the body movements of the dancers (i.e., the movements generating the sonification they were hearing).
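One simple way to obtain presentation orders with the Latin square property is a cyclic construction, sketched below in Python. The text does not specify how the square was built or how its rows were assigned to participants, so the construction, the shuffling and the one-row-per-participant usage are all assumptions.

```python
import random
from typing import List, Optional


def latin_square(n: int, seed: Optional[int] = None) -> List[List[int]]:
    """n x n Latin square: each stimulus index appears once per row and column."""
    rng = random.Random(seed)
    symbols = list(range(n))
    rng.shuffle(symbols)  # random relabelling of the stimuli
    rows = [[symbols[(r + c) % n] for c in range(n)] for r in range(n)]
    rng.shuffle(rows)     # random assignment of orders to participants
    return rows


# Hypothetical usage: 20 stimuli, one row per participant of a 20-person group.
orders = latin_square(20, seed=0)
first_participant_order = orders[0]
```

With this construction every stimulus is heard exactly once by each participant and occupies each serial position exactly once across the 20 orders.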

Each segment was sonified using the model described in Sect. 4. The results of the sonification process were stereo audio files (WAV file format, 48 kHz sampling rate). During the experiment, the sonifications were played to participants using a professional setup consisting of an AVID M-Box mini audio card and two Genelec 8040 A loudspeakers. The experiment took place in a large lab office (around 50 square meters).

5.3 Results

In total (for both Group N and E) we collected 1600 answers (40 participants, each giving two ratings for each of the 20 stimuli). The experiment design introduces two dependent variables: Perceived Lightness (PL) and Perceived Fragility (PF). The results of the statistical analysis are presented below separately for Hypotheses H1 and H2.

To address Hypothesis H1 we considered only the ratings given by untrained participants (Group N). Figure 5 and Table 2 report the average values of PL and PF for each type of stimuli (Lightness Segments vs. Fragility Segments).

First, we checked the assumptions of the ANOVA test. The normality of the distribution for each experimental group (tested separately with the Shapiro–Wilk test) and the normality of the residuals were verified; the results showed that the data are not normally distributed (see also Fig. 6). This result is not surprising because we asked our participants to rate the perceived Fragility and Lightness of the sonifications of segments that contain evident examples of Fragility or Lightness. The distributions are skewed because people tended to answer “very high” or “absent” (i.e., the two extremes of the 5-point scale used in the experiment). Consequently, to test our hypotheses we applied non-parametric tests.

As for the perception of the Lightness from the audio stimuli, a Mann–Whitney test showed that participants reported a higher degree of Lightness in Lightness Segments as compared to Fragility Segments (\(U=5775.5\), \(p <0.001\)). At the same time, they perceived a higher level of Fragility in Fragility Segments than in Lightness Segments (\(U =5346.5\), \(p <0.001\)).
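For readers unfamiliar with the test, the Mann–Whitney U statistic can be computed directly from its definition, as in the sketch below. The ratings used in the usage example are invented for illustration and are not the experimental data; the text also does not state which of the two U values (one per group) is reported, and the computation of the p-value is omitted here.

```python
from typing import Sequence


def mann_whitney_u(a: Sequence[float], b: Sequence[float]) -> float:
    """U statistic of sample a: number of pairs (x in a, y in b) with x > y.

    Ties count as one half, which matters for Likert ratings where many
    values coincide.
    """
    u = 0.0
    for x in a:
        for y in b:
            if x > y:
                u += 1.0
            elif x == y:
                u += 0.5
    return u


# Hypothetical 5-point ratings (NOT the data of the experiment).
ratings_ls = [5, 5, 4]
ratings_fs = [1, 2, 4]
u_ls = mann_whitney_u(ratings_ls, ratings_fs)
u_fs = mann_whitney_u(ratings_fs, ratings_ls)
```

The two statistics always sum to the product of the sample sizes, which provides a quick sanity check on any implementation.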

Additionally, we checked whether the reported values for Fragility (PF) and Lightness (PL) differ within Lightness (LS) or within Fragility segments (FS). A Wilcoxon signed-rank test showed that the participants perceived a higher degree of Lightness than Fragility in Lightness Segments (\(Z =-\,10.156\), \(p <0.001\), 2-tailed). At the same time, they perceived a higher degree of Fragility than Lightness in Fragility Segments (\(Z =-\,10.451\), \(p <0.001\), 2-tailed).

To investigate Hypothesis H2 we compared the ratings given by the participants who took part in the embodied sonic training (Group E) with those of the participants who did not (Group N). The overall results divided by the type of stimuli are presented in Fig. 7 and Table 2.

For the reasons discussed above, the assumptions of the ANOVA test were not satisfied (see Fig. 6). Consequently, to test Hypothesis H2 we opted for the non-parametric Mann–Whitney U (M–W) test (with Bonferroni correction), and we applied it separately to each dependent variable.

For Lightness stimuli (LS), the M–W test indicated that people who did not participate in the embodied sonic training (Group N) perceived a higher level of Fragility than people who participated in the training (Group E) (\(U =14{,}728\), \(p <0.001\)). At the same time, there was no significant difference in the perception of Lightness (\(U =19{,}744\), \(p =0.818\)).

For Fragility stimuli (FS), the M–W test indicated a tendency for untrained participants (Group N) to perceive a lower level of Fragility than the trained participants (Group E) (\(U = 1812.5\), \(p = 0.088\)). Again, there was no significant difference in the perception of Lightness (\(U = 18{,}348\), \(p =0.125\)).
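The four H2 comparisons can be read against a Bonferroni-adjusted threshold. The sketch below is only an illustration. The text does not state the significance level or the number of comparisons used for the correction, so \(\alpha = 0.05\) and four comparisons (two stimulus types by two rated qualities) are assumptions, as is the helper name `bonferroni`. The value reported as \(p < 0.001\) is entered as its upper bound.

```python
def bonferroni(p_values, alpha=0.05):
    """Return the per-test threshold alpha/m and a significance flag for each p-value."""
    m = len(p_values)
    threshold = alpha / m
    return threshold, [p < threshold for p in p_values]


# p-values reported for H2; "p < 0.001" is entered as the upper bound 0.001.
reported = {
    "LS, perceived Fragility": 0.001,
    "LS, perceived Lightness": 0.818,
    "FS, perceived Fragility": 0.088,
    "FS, perceived Lightness": 0.125,
}
threshold, flags = bonferroni(list(reported.values()))
for (label, p), significant in zip(reported.items(), flags):
    verdict = "significant" if significant else "not significant"
    print(f"{label}: p = {p} -> {verdict} at threshold {threshold}")
```

Under these assumptions only the difference in perceived Fragility for Lightness stimuli passes the corrected threshold, which matches the description of the Fragility-stimuli result as a tendency rather than a significant effect.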

6 Discussion

Regarding Hypothesis H1, our participants were able to correctly perceive the expressive qualities of movement from their sonifications alone. Differences in the perception of Lightness and Fragility were observed between the sonifications of the Fragility and Lightness Segments. The results confirm that it is possible to design interfaces that transmit expressive qualities through the auditory channel, even without sonic training.

Regarding Hypothesis H2, the effect of the embodied sonic training (i.e., interactive sonification) was observed on the perception of one of the two qualities, namely Fragility. The results show that participants who did the embodied sonic training perceived less Fragility in Lightness stimuli, and they tended to perceive more Fragility in Fragility stimuli. This suggests that the embodied sonic training improved the association between the expressive quality and its sonification. In contrast, the embodied sonic training did not influence the perception of Lightness. This difference might be due to the complexity of Fragility with respect to Lightness: Fragility implies a continuous interruption and re-planning of motor actions [8]. Further, there is an important difference between these two qualities: while Lightness is bipolar (i.e., the opposite of a “Light” movement is a “Heavy” one), Fragility is not. The bipolar nature of Lightness may contribute to the perception of the quality through sound, as different sounds were associated with high and low Lightness. No such contrast is available for Fragility. Consequently, it might be more difficult to perceive Fragility without embodied sonic training.

To sum up, although the expressive qualities Fragility and Lightness can be successfully recognized from unknown sonifications even without any preparation phase, embodied sonic training can improve their recognition. These results might be the premise for future research to verify whether congenitally blind people are similarly able to perceive the expressive qualities of movement from sonifications.

7 Application

The results of this study and the system built to perform the experiment enabled us to design public events. The system is able to sonify two expressive qualities using the models presented in Sect. 4. It uses the data captured by Inertial Measurement Units (IMUs) placed on the dancer's limbs and generates the corresponding sounds in real time.

In particular, the system was used during the public performance “Di Fronte agli Occhi degli Altri”, which took place at Casa Paganini, Genoa, Italy, in March 2017. In the first phase of the performance, two professional dancers, one of whom was visually impaired, performed a dance improvisation that also involved other blind persons. The performers took turns wearing the IMU sensors: the performer wearing the sensors generated, in real time, a sonification that influenced the movement qualities of the other (see Fig. 8). In the second phase, the dancers involved the audience, which included both visually impaired and normally sighted people, in the performance by again taking turns wearing the sensors and generating the sonifications (see the video: https://youtu.be/qOtsiAXKqb8).

It is important to note that the concept of this performance was based on the results of our experiment. The tasks of the dancers and the audience correspond to the experimental conditions of our study. Indeed, while the visually impaired protagonist dancer participated in a short embodied sonic training session before the artistic performance, the audience members, who were invited to dance with him, could not know the sonifications before the performance. Thus, they tried to move in correspondence with the sounds they heard.

This work is part of a broader research initiative, in which we are further developing our theoretical framework, movement analysis techniques, cross-modal sonifications, saliency and prediction of movement qualities, and interactive narrative structures at multiple temporal scales (see the new EU H2020 FET Proactive project EnTimeMent). The proposed sonification framework, characterized by the introduction of analysis and sonification at multiple temporal scales, and focusing not only on low-level features (e.g., speed, positions) but also on mid- and high-level qualities and their analysis primitives (e.g., saliency), opens novel perspectives for the development of evolving, “living” interactive systems. It supports time-varying sonification, in which the context (expressed, for example, in terms of the evolution of clusters of mid- and high-level qualities) may contribute to changes in the mapping strategies and in the interactive non-verbal narrative structures. Such “living” interactive systems might open novel directions in therapy and rehabilitation, movement training, wellness and sport, audiovisual interactive experience of cultural content (e.g., virtual museums, education), and entertainment technologies, to mention a few examples. These directions will be explored in the EnTimeMent Project.

8 Conclusion

In this paper, we presented an experiment to evaluate the impact of sonic versus non-sonic embodied training on the recognition of two expressive qualities through the auditory channel alone, by means of their sonifications. Results showed good recognition of Fragility and Lightness, which can be improved (in the case of Fragility) with embodied sonic training. Additionally, we showed that the findings of this study can inform the design of artistic projects. Our framework and system were used during public dance performances in which a blind dancer improvised with non-dancers (blind as well as non-blind), and in other events in the framework of the “Atlante del Gesto”,Footnote 7 a part of the Dance Project.

The paper brings the following novel contributions:

(i) it is one of the first attempts to propose a multi-layered sonification framework including the interactive sonification of mid-level expressive movement qualities;

(ii) it shows that expressive movement qualities can be successfully perceived from their sonifications alone;

(iii) it shows that embodied sonic training significantly influences the perception of Fragility.

The multimodal repository (video, IMU sensors, and sonification) of fragments of movement qualities performed by 12 dancers was developed for this and other scientific experiments, and is freely available to the research community.Footnote 8 Evidence from parallel neuroscience fMRI experiments [35] applied to this repository contributes to the validity of the results presented in this paper.

Ongoing steps of this work include the extension of the results to further movement qualities and sonifications and, in particular, to cases in which different expressive movement qualities are present simultaneously. The experiment showed that sonifications lead to the correct interpretation when there are two possible outcomes and quantitative scales. It would also be interesting to extend this work by adding an explanatory qualitative study in which participants, listening to the audio stimuli, would be free to give their own description of the corresponding movement qualities.

Notes

These two terms were originally introduced by the choreographer Virgilio Sieni, with their original names in Italian Incrinatura and Cedimento.

References

Akerly J (2015) Embodied flow in experiential media systems: a study of the dancer’s lived experience in a responsive audio system. In: Proceedings of the 2nd international workshop on movement and computing, MOCO’15. ACM, New York, pp 9–16. https://doi.org/10.1145/2790994.2790997

Alborno P, Cera A, Piana S, Mancini M, Niewiadomski R, Canepa C, Volpe G, Camurri A (2016) Interactive sonification of movement qualities—a case study on fluidity. In: Proceedings of ISon 2016, 5th interactive sonification workshop

Alonso-Arevalo MA, Shelley S, Hermes D, Hollowood J, Pettitt M, Sharples S, Kohlrausch A (2012) Curve shape and curvature perception through interactive sonification. ACM Trans Appl Percept (TAP) 9(4):17

Blackwell T (2007) Swarming and music. Evol Comput Music 2007:194–217

Brown C, Paine G (2015) Interactive tango milonga: designing internal experience. In: Proceedings of the 2nd international workshop on movement and computing. ACM, pp 17–20

Brückner HP, Schmitz G, Scholz D, Effenberg A, Altenmüller E, Blume H (2014) Interactive sonification of human movements for stroke rehabilitation. In: 2014 IEEE international conference on consumer electronics (ICCE)

Camurri A, Canepa C, Ferrari N, Mancini M, Niewiadomski R, Piana S, Volpe G, Matos JM, Palacio P, Romero M (2016) A system to support the learning of movement qualities in dance: a case study on dynamic symmetry. In: Proceedings of the 2016 ACM international joint conference on pervasive and ubiquitous computing: adjunct, UbiComp’16. ACM, New York, pp 973–976. https://doi.org/10.1145/2968219.2968261

Camurri A, Volpe G, Piana S, Mancini M, Niewiadomski R, Ferrari N, Canepa C (2016) The dancer in the eye: towards a multi-layered computational framework of qualities in movement. In: Proceedings of the 3rd international symposium on movement and computing. ACM, p 6. https://doi.org/10.1145/2948910.2948927

Caramiaux B, Françoise J, Schnell N, Bevilacqua F (2014) Mapping through listening. Comput Music J 38(3):34–48

Carron M, Dubois F, Misdariis N, Talotte C, Susini P (2014) Designing sound identity: providing new communication tools for building brands corporate sound. In: Proceedings of the 9th audio mostly: a conference on interaction with sound. ACM, p 15

Carron M, Rotureau T, Dubois F, Misdariis N, Susini P (2015) Portraying sounds using a morphological vocabulary. In: EURONOISE 2015

Cuykendall S, Junokas M, Amanzadeh M, Tcheng DK, Wang Y, Schiphorst T, Garnett G, Pasquier P (2015) Hearing movement: how taiko can inform automatic recognition of expressive movement qualities. In: Proceedings of the 2nd international workshop on movement and computing. ACM, pp 140–147

De Coensel B, Botteldooren D, Berglund B, Nilsson ME (2009) A computational model for auditory saliency of environmental sound. J Acoust Soc Am 125(4):2528–2528. https://doi.org/10.1121/1.4783528

Dubus G, Bresin R (2013) A systematic review of mapping strategies for the sonification of physical quantities. PLoS ONE 8(12):e82491. https://doi.org/10.1371/journal.pone.0082491

Dubus G, Bresin R (2015) Exploration and evaluation of a system for interactive sonification of elite rowing. Sports Eng 18(1):29–41. https://doi.org/10.1007/s12283-014-0164-0

Fehr J, Erkut C (2015) Indirection between movement and sound in an interactive sound installation. In: Proceedings of the 2nd international workshop on movement and computing. ACM, pp 160–163

Françoise J, Fdili Alaoui S, Schiphorst T, Bevilacqua F (2014) Vocalizing dance movement for interactive sonification of Laban effort factors. In: Proceedings of the 2014 conference on designing interactive systems. ACM, pp 1079–1082

Frid E, Bresin R, Alborno P, Elblaus L (2016) Interactive sonification of spontaneous movement of children: cross-modal mapping and the perception of body movement qualities through sound. Front Neurosci 10:521

Ghisio S, Alborno P, Volta E, Gori M, Volpe G (2017) A multimodal serious-game to teach fractions in primary school. In: Proceedings of the 1st ACM SIGCHI international workshop on multimodal interaction for education, MIE 2017. ACM, New York, pp 67–70. https://doi.org/10.1145/3139513.3139524

Großhauser T, Bläsing B, Spieth C, Hermann T (2012) Wearable sensor-based real-time sonification of motion and foot pressure in dance teaching and training. J Audio Eng Soc 60(7/8):580–589

Guo C, Zhang L (2010) A novel multiresolution spatiotemporal saliency detection model and its applications in image and video compression. IEEE Trans Image Process 19(1):185–198. https://doi.org/10.1109/TIP.2009.2030969

Hermann T (2011) Model-based sonification. In: Hermann T, Hunt A, Neuhoff JG (eds) The sonification handbook, pp 399–427. ISBN 978-3-8325-2819-5

Hermann T, Höner O, Ritter H (2005) Acoumotion—an interactive sonification system for acoustic motion control. In: International gesture workshop. Springer, Berlin, pp 312–323

Hsu A, Kemper S (2015) Kinesonic approaches to mapping movement and music with the remote electroacoustic kinesthetic sensing (RAKS) system. In: Proceedings of the 2nd international workshop on movement and computing. ACM, pp 45–47

Jensenius AR, Bjerkestrand KAV (2011) Exploring micromovements with motion capture and sonification. In: International conference on arts and technology. Springer, pp 100–107

Katan S (2016) Using interactive machine learning to sonify visually impaired dancers’ movement. In: Proceedings of the 3rd international symposium on movement and computing. ACM, p 40

Kolykhalova K, Alborno P, Camurri A, Volpe G (2016) A serious games platform for validating sonification of human full-body movement qualities. In: Proceedings of the 3rd international symposium on movement and computing, MOCO’16. ACM, New York, pp 39:1–39:5. https://doi.org/10.1145/2948910.2948962

Laban R, Lawrence FC (1947) Effort. Macdonald & Evans, New York

Landry S, Jeon M (2017) Participatory design research methodologies: a case study in dancer sonification. In: The 23rd international conference on auditory display (ICAD 2017), pp 182–187. https://doi.org/10.21785/icad2017.069

Mancas M, Glowinski D, Volpe G, Coletta P, Camurri A (2010) Gesture saliency: a context-aware analysis. In: Kopp S, Wachsmuth I (eds) Gesture in embodied communication and human–computer interaction. Springer, Berlin, pp 146–157

Naveda LA, Leman M (2008) Sonification of samba dance using periodic pattern analysis. In: Artech08. Portuguese Católica University, pp 16–26

Niewiadomski R, Mancini M, Piana S, Alborno P, Volpe G, Camurri A (2017) Low-intrusive recognition of expressive movement qualities. In: Proceedings of the 19th ACM international conference on multimodal interaction, ICMI 2017. ACM, New York, pp 230–237. https://doi.org/10.1145/3136755.3136757

Singh A, Piana S, Pollarolo D, Volpe G, Varni G, Tajadura-Jiménez A, Williams AC, Camurri A, Bianchi-Berthouze N (2016) Go-with-the-flow: tracking, analysis and sonification of movement and breathing to build confidence in activity despite chronic pain. Hum Comput Interact 31(3–4):335–383

Spence C (2011) Crossmodal correspondences: a tutorial review. Atten Percept Psychophys 73(4):971–995. https://doi.org/10.3758/s13414-010-0073-7

Vaessen MJ, Abassi E, Mancini M, Camurri A, de Gelder B (2018) Computational feature analysis of body movements reveals hierarchical brain organization. Cereb Cortex. https://doi.org/10.1093/cercor/bhy228

Vogt K, Pirrò D, Kobenz I, Höldrich R, Eckel G (2010) Physiosonic—evaluated movement sonification as auditory feedback in physiotherapy. In: Auditory display. Springer, Berlin, Heidelberg, pp 103–120. https://doi.org/10.1007/978-3-642-12439-6_6

Acknowledgements

This research has received funding from the European Union’s Horizon 2020 research and innovation programme under Grant Agreement No. 645553 (DANCE). We thank the choreographer Virgilio Sieni and the members of his dance company for supporting this research and artistic Project and for the in-depth discussion and brainstorming on movement qualities; the blind dancer Giuseppe Comuniello, who participated in “Di Fronte agli Occhi degli Altri”; the Istituto Chiossone for blind people; the Goethe-Institute Turin und Genua; and the Teatro dell’Archivolto. We are very grateful to all the citizens of Genoa who participated in the experiment and the public performances organized in the framework of the EU DANCE Project. We would also like to thank the dancer Federica Loredan, the Director of Goethe-Institute Turin und Genua Roberta Canu, and Roberta Messa.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

M. Mancini participated in this work while a member of the Casa Paganini-InfoMus Research Centre, DIBRIS, University of Genoa, Genoa (Italy).

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Niewiadomski, R., Mancini, M., Cera, A. et al. Does embodied training improve the recognition of mid-level expressive movement qualities sonification?. J Multimodal User Interfaces 13, 191–203 (2019). https://doi.org/10.1007/s12193-018-0284-0

DOI: https://doi.org/10.1007/s12193-018-0284-0