Abstract

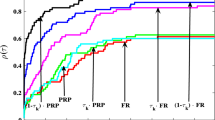

A new family of conjugate gradient methods is proposed by minimizing the distance between two certain directions. It is a subfamily of the Dai–Liao family, which includes the Hager–Zhang family and the Dai–Kou method. The direction of the proposed method approximates that of the memoryless Broyden–Fletcher–Goldfarb–Shanno method. For suitable parameter intervals, the direction possesses the sufficient descent property independently of the line search. Under mild assumptions, we analyze the global convergence of the method for strongly convex functions and for general functions, with the stepsize obtained by the standard Wolfe rules. Numerical results indicate that the proposed method is promising and outperforms CGOPT and CG_DESCENT on a set of unconstrained optimization test problems.
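The abstract does not give the formula of the new family, so the following is only a minimal sketch of the enclosing Dai–Liao family, in which the search direction is $d_{k+1} = -g_{k+1} + \beta_k d_k$ with $\beta_k = (g_{k+1}^T y_k - t\, g_{k+1}^T s_k)/(d_k^T y_k)$. The function names are hypothetical, and the two choices of $t$ shown (commonly associated with the Hager–Zhang and Dai–Kou methods) are assumptions taken from the general literature rather than from this abstract.

```python
# Illustrative sketch of a Dai-Liao-type direction update.
# NOT the paper's new family; all names here are hypothetical.

def dot(u, v):
    """Euclidean inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

def dai_liao_direction(g_new, d_old, s, y, t):
    """Return d_new = -g_new + beta * d_old with the Dai-Liao beta.

    g_new: gradient at the new iterate
    d_old: previous search direction
    s:     step x_new - x_old
    y:     gradient difference g_new - g_old
    t:     nonnegative Dai-Liao parameter (assumes dot(d_old, y) != 0)
    """
    beta = (dot(g_new, y) - t * dot(g_new, s)) / dot(d_old, y)
    return [-gi + beta * di for gi, di in zip(g_new, d_old)]

def hager_zhang_t(s, y):
    """Assumed Hager-Zhang-type choice: t = 2 * ||y||^2 / (s^T y)."""
    return 2.0 * dot(y, y) / dot(s, y)

def dai_kou_t(s, y):
    """Assumed Dai-Kou-type choice: t = ||y||^2 / (s^T y)."""
    return dot(y, y) / dot(s, y)

# Example on f(x) = 0.5 * (x1^2 + 10 * x2^2), one steepest-descent step of length 0.1.
g_old = [1.0, 10.0]                      # gradient at x_old = (1, 1)
d_old = [-1.0, -10.0]                    # first direction: steepest descent
s = [0.1 * di for di in d_old]           # x_new - x_old
g_new = [0.9, 0.0]                       # gradient at x_new = (0.9, 0)
y = [a - b for a, b in zip(g_new, g_old)]

d_hz = dai_liao_direction(g_new, d_old, s, y, hager_zhang_t(s, y))
d_dk = dai_liao_direction(g_new, d_old, s, y, dai_kou_t(s, y))
print("descent (HZ-type t):", dot(g_new, d_hz) < 0)
print("descent (DK-type t):", dot(g_new, d_dk) < 0)
```

Both choices of $t$ scale with $\|y_k\|^2 / (s_k^T y_k)$, which is what makes the descent property of the resulting direction independent of how the stepsize was computed.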

Acknowledgements

We would like to thank the anonymous referees for their valuable comments, which helped improve the quality of this paper. This work is supported by the National Natural Science Foundation of China (Grant Nos. 11461021 and 61573014), the Natural Science Basic Research Plan in Shaanxi Province of China (Program No. 2017JM1014), and the Scientific Research Project of Hezhou University (Nos. 2014YBZK06, 2016HZXYSX03). The authors are grateful to Professor William W. Hager for providing the CG_DESCENT code and to Professor Y.H. Dai and Ph.D. Caixia Kou for the CGOPT code.

Cite this article

Li, M., Liu, H. & Liu, Z. A new family of conjugate gradient methods for unconstrained optimization. J. Appl. Math. Comput. 58, 219–234 (2018). https://doi.org/10.1007/s12190-017-1141-0

DOI: https://doi.org/10.1007/s12190-017-1141-0