Abstract

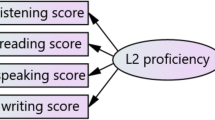

Over the years, various measures, including high-stakes tests, have been employed to assess the quality of education, and the validity of these measures has long been debated. The present study addresses concerns about the validity of the most important Iranian high-stakes test, the Konkur, as a measure of the English language proficiency of its test takers. Using the scores of 5,000 high school students who sat the Konkur examination, the dimensionality and factorial structure of the test were examined through generalizability and item response theory (IRT) analyses. In the first stage, descriptive statistics were computed and a generalizability analysis was run to check the quality of the data; the reliability of the data was 0.92 and the generalizability coefficient was 0.9, indicating high dependability for the subsequent analyses. In the second stage, item statistics and parameter estimates were analyzed and misfitting items were investigated. Next, the dimensionality of the data was checked, and three IRT models, namely the unidimensional, bifactor, and testlet models, were fitted and compared. In terms of fit indices (M2, AIC, BIC), the testlet model showed the best fit in accounting for the variance in test responses, followed by the bifactor and unidimensional models, and it functions well for assessing the language proficiency of EFL learners. The findings also showed that the variance in the factor structure of language proficiency is best explained when proficiency is assessed through several language sub-skills (i.e., the testlet model) rather than measured directly through a set of items (i.e., the bifactor model).
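The two quantitative checks summarized in the abstract, a generalizability coefficient and an information-criterion comparison of competing IRT models, can be illustrated with a minimal sketch. It assumes a fully crossed persons × items (p × i) design with one score per cell; the abstract does not state the G-study design or the software used, so the design, the hypothetical function names `g_study_p_by_i` and `information_criteria`, and the toy data are illustrations, not the authors' procedure. The log-likelihoods and parameter counts for the unidimensional, bifactor, and testlet models would come from whatever IRT software fits them, and the M2 statistic is not reproduced here.

```python
import math
from typing import Dict, Optional, Sequence


def g_study_p_by_i(scores: Sequence[Sequence[float]],
                   n_items_d: Optional[int] = None) -> Dict[str, float]:
    """Variance components and G coefficients for a crossed p x i design.

    Assumes one score per person-item cell and no missing data.
    n_items_d is the number of items in the D-study (defaults to the
    number of items observed).
    """
    n_p, n_i = len(scores), len(scores[0])
    if n_items_d is None:
        n_items_d = n_i
    grand = sum(sum(row) for row in scores) / (n_p * n_i)
    person_means = [sum(row) / n_i for row in scores]
    item_means = [sum(row[j] for row in scores) / n_p for j in range(n_i)]

    # Two-way ANOVA without replication: persons, items, residual (pi,e)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_p = n_i * sum((m - grand) ** 2 for m in person_means)
    ss_i = n_p * sum((m - grand) ** 2 for m in item_means)
    ss_res = ss_total - ss_p - ss_i

    ms_p = ss_p / (n_p - 1)
    ms_i = ss_i / (n_i - 1)
    ms_res = ss_res / ((n_p - 1) * (n_i - 1))

    # Solve the expected mean squares; negative estimates are set to zero
    var_pi_e = ms_res
    var_p = max((ms_p - ms_res) / n_i, 0.0)
    var_i = max((ms_i - ms_res) / n_p, 0.0)

    rel_error = var_pi_e / n_items_d            # relative error variance
    abs_error = (var_i + var_pi_e) / n_items_d  # absolute error variance
    return {
        "var_p": var_p,
        "var_i": var_i,
        "var_pi_e": var_pi_e,
        "g_coefficient": var_p / (var_p + rel_error),  # E(rho^2)
        "phi": var_p / (var_p + abs_error),            # dependability index
    }


def information_criteria(log_likelihood: float, n_params: int,
                         n_obs: int) -> Dict[str, float]:
    """AIC and BIC for a fitted model; lower values indicate better fit."""
    deviance = -2.0 * log_likelihood
    return {
        "aic": deviance + 2 * n_params,
        "bic": deviance + n_params * math.log(n_obs),
    }


if __name__ == "__main__":
    # Hypothetical 4 persons x 3 items matrix of dichotomous scores
    toy = [[1, 1, 0], [1, 0, 0], [1, 1, 1], [0, 0, 0]]
    result = g_study_p_by_i(toy)
    print(round(result["g_coefficient"], 3))  # 0.75
    print(round(result["phi"], 3))            # 0.714
```

For this toy matrix the relative coefficient equals Cronbach's alpha (0.75), as expected for a p × i design when the D-study keeps the observed number of items. When comparing models, lower AIC and BIC values indicate better fit; because the BIC penalty per parameter is ln(n) rather than 2, BIC penalizes additional parameters more heavily than AIC whenever n exceeds about 7.4, which is always the case with 5,000 examinees.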

Data Availability

The datasets analyzed during the current study are not publicly available because they contain personal information of the participants and belong to a third party, but they are available from the corresponding author on reasonable request.

Ethics declarations

Conflict of Interest

All authors declare that they have no conflict of interest.

Ethical Approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the national research committee and with the participants' 2017 written declaration.

Consent to Participate

All participants gave written informed consent prior to the study.

Consent for Publication

Neither the article nor portions of it have been previously published elsewhere. The manuscript is not under consideration for publication in another journal, and will not be submitted elsewhere until the Current Psychology editorial process is completed. All authors consent to the publication of the manuscript in Current Psychology, should the article be accepted by the Editor-in-chief upon completion of the refereeing process.

Appendix

About this article

Cite this article

Alavi, S.M., Karami, H. & Khodi, A. Examination of factorial structure of Iranian English language proficiency test: An IRT analysis of Konkur examination. Curr Psychol 42, 8097–8111 (2023). https://doi.org/10.1007/s12144-021-01922-1

DOI: https://doi.org/10.1007/s12144-021-01922-1