Abstract

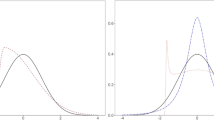

Through linear transformations of raw item scores, the paper converts 3-point, 4-point, 5-point and 7-point items to continuous, monotonic, normally distributed scores ranging from 1 to 5. This provides a platform for meaningful comparison of scales with different numbers of response categories with respect to parameters such as reliability, validity and discriminating power, and allows analysis in a parametric set-up. The method makes no assumption of continuity, linearity or normality for the observed variables or for the underlying variable being measured. Thus, this simple, assumption-free method can have wide applicability. Such methods of converting the scores of Likert items are recommended for their clear theoretical advantages and ease of calculation. An inverse relationship is derived between new measures of test discriminating value, expressed in terms of the coefficient of variation (CV), and theoretically defined test reliability. Empirically, such an inverse relationship was observed for the scales. The number of response categories did not show much influence on discriminating value, reliability and factorial validity, even for the transformed normalized scores in the range 1 to 5. Thus, the study could not find an optimum number of response categories that maximizes validity, reliability or discriminating value. Future studies with multiple data sets are suggested to generalize the findings.
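The abstract does not spell out the transformation itself, so the following is only a minimal sketch of the general idea, under an assumed min-max rescaling y = 1 + 4(x - 1)/(k - 1) that places a raw score x on a k-point item (coded 1..k) onto the common 1 to 5 range, followed by the coefficient of variation CV = standard deviation / mean. The function names `rescale_to_1_5` and `coefficient_of_variation` and the sample responses are hypothetical. Note that a plain linear rescaling preserves the shape of the raw score distribution, so by itself it does not yield normally distributed scores; the paper's transformation is not reproduced here.

```python
import statistics


def rescale_to_1_5(score: float, k: int) -> float:
    """Linearly map a raw score on a k-point item (coded 1..k) onto [1, 5].

    Assumption: simple min-max rescaling 1 + 4 * (x - 1) / (k - 1), used here
    as an illustrative stand-in, not necessarily the paper's transformation.
    """
    if k < 2:
        raise ValueError("an item needs at least two response categories")
    if not 1 <= score <= k:
        raise ValueError(f"score {score} outside 1..{k}")
    return 1 + 4 * (score - 1) / (k - 1)


def coefficient_of_variation(scores):
    """CV = sample standard deviation / mean (mean must be non-zero)."""
    mean = statistics.mean(scores)
    if mean == 0:
        raise ValueError("CV is undefined for a zero mean")
    return statistics.stdev(scores) / mean


# Hypothetical responses to one 7-point item (illustrative only, not study data).
raw_7pt = [1, 4, 7, 5, 3]
rescaled = [rescale_to_1_5(x, 7) for x in raw_7pt]
print(rescaled)
print(coefficient_of_variation(rescaled))
```

Because the mapping includes a shift whenever k differs from 5, the CV of rescaled scores generally differs from the CV of raw scores (CV is invariant to rescaling but not to shifting), which is why a common score range matters before comparing scales by CV.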

Data Availability

Nil (the paper used hypothetical data).

Code Availability

No software package or custom code was used.

Ethics declarations

Ethical Statement

This is a methodological paper, and no ethical approval is required.

Informed Consent

Not applicable, as this paper uses hypothetical data.

Conflict of Interests

The author has no conflicts of interest to declare that are relevant to the content of this article.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Chakrabartty, S.N. Optimum number of Response categories. Curr Psychol 42, 5590–5598 (2023). https://doi.org/10.1007/s12144-021-01866-6

DOI: https://doi.org/10.1007/s12144-021-01866-6