Abstract

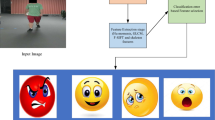

In computer vision, human activity recognition is an active research area with applications in contexts such as human–computer interaction, healthcare, military applications, and security surveillance. Activity recognition aims to identify the goals and actions of one or more people from a sequence of observations of their actions and the environmental conditions. A number of challenges and open issues remain, and they motivate new activity recognition methods that improve accuracy under more realistic conditions. This paper proposes an error-based fuzzy dragon deep belief network (error-based fuzzy DDBN), which integrates fuzzy logic with the DDBN classifier, to recognize human activity in complex and diverse scenarios. Keyframes are first generated from the frames of the input video based on the Bhattacharyya coefficient. Features are then extracted from these keyframes using the scale-invariant feature transform (SIFT), the color histogram of the spatio-temporal interest dominant points, and a hierarchical skeleton. Finally, the features are fed to the proposed error-based fuzzy DDBN, which classifies them to recognize the person's activity. The experiments use two datasets, KTH and Weizmann, to analyze the performance of the proposed classifier. The experimental results show that the proposed classifier achieves better activity recognition performance, with a maximum accuracy of 1, a sensitivity of 0.99, and a specificity of 0.991.
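The abstract states only that keyframes are selected using the Bhattacharyya coefficient between frames, BC(p, q) = Σᵢ √(pᵢ qᵢ), which equals 1 for identical normalized histograms and 0 for non-overlapping ones. The sketch below illustrates that idea under assumptions that are not taken from the paper: frames are flattened 8-bit grayscale pixel lists, similarity is measured on intensity histograms, and a frame becomes a keyframe when its coefficient with the most recent keyframe falls below a hypothetical threshold of 0.9. The function names, the bin count, and the threshold are illustrative choices, not the authors' implementation.

```python
import math
from typing import List, Sequence


def normalized_histogram(frame: Sequence[int], bins: int = 16) -> List[float]:
    """Bin 8-bit grayscale intensities (0..255) into a histogram that sums to 1."""
    counts = [0] * bins
    for value in frame:
        # Map an intensity in 0..255 to a bin index in 0..bins-1.
        counts[value * bins // 256] += 1
    total = len(frame)
    return [c / total for c in counts]


def bhattacharyya_coefficient(p: Sequence[float], q: Sequence[float]) -> float:
    """BC(p, q) = sum_i sqrt(p_i * q_i); 1.0 for identical, 0.0 for disjoint histograms."""
    return sum(math.sqrt(pi * qi) for pi, qi in zip(p, q))


def select_keyframes(frames: Sequence[Sequence[int]],
                     threshold: float = 0.9,
                     bins: int = 16) -> List[int]:
    """Return indices of frames that differ enough from the last keyframe.

    Hypothetical rule (assumption, not from the paper): frame 0 is always a
    keyframe; a later frame becomes a keyframe when its Bhattacharyya
    coefficient with the most recent keyframe drops below `threshold`.
    """
    if not frames:
        return []
    keyframes = [0]
    reference = normalized_histogram(frames[0], bins)
    for index in range(1, len(frames)):
        hist = normalized_histogram(frames[index], bins)
        if bhattacharyya_coefficient(reference, hist) < threshold:
            keyframes.append(index)
            reference = hist  # later frames are compared with this new keyframe
    return keyframes


# Toy "video" of 4x4 frames: two dark frames, two bright frames, one dark frame.
dark, dark2, bright = [10] * 16, [12] * 16, [240] * 16
print(select_keyframes([dark, dark2, bright, bright, dark]))
```

Comparing each frame with the most recent keyframe, rather than only with its immediate predecessor, keeps slow gradual changes from being missed; a real pipeline would more likely use color histograms, for example from OpenCV, in place of this pure-Python grayscale version.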

Cite this article

Sheeba, P.T., Murugan, S. Fuzzy dragon deep belief neural network for activity recognition using hierarchical skeleton features. Evol. Intel. 15, 907–924 (2022). https://doi.org/10.1007/s12065-019-00245-2
