Abstract

The Evolutionary Attitudes and Literacy Survey (EALS) is a multidimensional scale consisting of 16 lower- and 6 higher-order constructs developed to measure the wide array of factors that influence both an individual’s endorsement of and objection to evolutionary theory. Past research has demonstrated the validity and utility of the EALS (Hawley et al., Evol Educ Outreach 4:117–132, 2011); however, the 104-item long-form scale may be excessive for researchers and educators. The present study sought to reduce the number of items in the EALS while maintaining the validity and structure of the long form. For the present study, and following best practices for short-form construction, we surveyed a new sample of several hundred undergraduates from multiple majors and reduced the long form by 40% while maintaining the scale structure and validity. A multiple-group confirmatory factor analysis supported strong factorial invariance across samples, and therefore verified structure and pattern between the six higher-order constructs of the long-form EALS and the EALS short form (EALS-SF). Regression analysis further demonstrated the short form’s validity (i.e., demographics and openness to experience) and replicated previous findings. In the end, the EALS-SF may be a versatile tool that may be used whole or in part for a variety of research areas, including curricular effectiveness of courses on evolution and/or biology.

In the last decade, intense interest has been focused on educational issues regarding the teaching of evolutionary theory and topics to which it is related in fields such as biology, anthropology, and psychology. This literature suggests that (a) individuals who seek exposure to evolutionary theory (e.g., in coursework or museum visits) demonstrate greater knowledge and report more positive attitudes toward the theory than those who do not seek exposure (e.g., Lombrozo et al. 2008; McFadden et al. 2007), (b) religious identity and political ideology enhance or impede the seeking of such knowledge (e.g., genetic literacy; Scott 2004; Miller et al. 2006; Paterson and Rossow 1999), and (c) knowledge of scientific epistemology is positively associated with both evolutionary knowledge and attitudes about its relevance (Hawley et al. 2011). Until recently, no suitable comprehensive measure existed to assess curricular effectiveness, attitude change, or descriptions of regional populations.

Our previous work described and validated such a comprehensive measure, which was designed to assess political and spiritual leanings, knowledge of evolution, distrust of and knowledge about the scientific enterprise, and attitudes toward and objections against evolutionary theory (Hawley et al. 2011). With this measurement tool, our ultimate goal was to create a standard by which to assess aspects of curricular influences of courses in colleges and universities, and to assess the regional effectiveness of the intelligent design movement. That 104-item measure (henceforth referred to as the long form) comprised 16 subscales, which were structured into six higher-order factors. Moreover, we demonstrated on a Kansas sample that attitudes were less reliant on demographics than on personality factors such as openness to experience.

For practical purposes, however, the long-form version may be too time consuming for repeated assessment in educational settings. It is not clear that a long form of 104 items yields a significant benefit over a version with 40% fewer items. Thus, the goal of the present work is to significantly reduce the long-form version in a way that fully maintains its validated structure.

The Creation of the Short Form

Short-form creation involves several steps that require close attention to the psychometric properties of the measure. First, the long form is created and validated. This step is completed (Hawley et al. 2011). Second, data are collected with the long-form survey with a new sample to confirm the established long-form structure (i.e., the previously published long-form structure, our standard for comparison). If the long-form survey structure cannot be confirmed on the new sample, then the structure of the survey rests too heavily on individual sample characteristics. This possibility must first be ruled out. Third, the survey is systematically shortened according to pre-established criteria (details below) and compared once again to the published long-form structure. Last, the short form is applied to outcome measures (e.g., demographics and openness to experience; Hawley et al. 2011) to replicate documented patterns.

Method

Participants

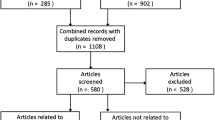

For the present study, data were collected from 526 undergraduate students at a large Midwestern university. These participants (233 men and 290 women) were surveyed at the start of the semester in an introductory biology course covering the principles of cellular and molecular biology. As such, these students were from over 30 majors. Their average age was 19.15 (SD = 2.64) years, the average high school graduating class size was 342.89 (SD = 338.92), and the most frequent response for both the participants’ father’s (N = 109, 29.38%) and mother’s (N = 176, 33.46%) education was four-year college degree. Last, the average rating for rurality of town of origin was 3.14 (SD = 1.86) on a seven-point scale ranging from one (not at all rural) to seven (very rural). Demographically speaking, the present sample was largely homogeneous with the original sample of 371 on which the published long form is based (Footnote 1; see Notes). Group differences based on differing sample demographic characteristics are thus not anticipated.

As in the initial long-form scale validation (see Hawley et al. 2011), the present students were invited to participate for extra course credit and were asked to complete the web-based survey outside of class time via an e-mailed Web link (Footnote 2; see Notes). The students reported informally that it took them 20–25 minutes to complete the long form of the survey containing 104 items.

The Evolutionary Attitudes and Literacy Survey

The long form Evolutionary Attitudes and Literacy Survey (EALS) consists of 17 pages of Web-presented items where respondents rate the degree to which they agree or disagree with 104 statements on a seven-point Likert scale ranging from one (strongly disagree) to seven (strongly agree) with the midpoint 4 (neither agree nor disagree). The EALS measures 16 theoretically derived constructs including adult exposure to evolution, youth exposure to evolution, religious activity, young earth creationist beliefs, moral objections, political ideology, political activity, attitudes toward life, intelligent design fallacies, knowledge about the scientific enterprise, genetic literacy, evolutionary knowledge, misconceptions about evolution, distrust of the scientific enterprise, relevance of evolutionary theory, and social objections. These 16 constructs or subscales can be further accounted for by six higher-order factors representing Political Activity, Political/Religious Conservatism, Creationist Reasoning, Knowledge/Relevance of Evolution, Evolutionary Misconceptions, and Exposure to Evolution (see Hawley et al. 2011 for details on survey creation and survey structure).

Analytic Methods

Sample Characteristics and Missing Data Imputation

The newly collected data for the short-form construction were screened for univariate and multivariate outliers, item normality (with skewness and kurtosis values all between −2.0 and 2.0), and linear relations among all the items. Like the original sample for the long-form construction, the present sample met the assumptions for latent variable modeling techniques. Less than 5% of the data were missing. Thus, missing data were imputed one time via Markov Chain Monte Carlo estimation using Proc MI in SAS (version 9.1.3; Enders, 2010).
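The normality screen described above can be sketched in a few lines. This is only an illustration and not the SAS procedure used in the study: the function names are hypothetical, simple moment-based estimators are assumed, and "kurtosis" is taken to mean excess kurtosis (zero for a normal distribution), which the text does not specify.

```python
from statistics import fmean


def _central_moment(values, order):
    """Population central moment of the given order."""
    mean = fmean(values)
    return sum((v - mean) ** order for v in values) / len(values)


def skewness(values):
    """Moment-based skewness (0 for a symmetric distribution)."""
    return _central_moment(values, 3) / _central_moment(values, 2) ** 1.5


def excess_kurtosis(values):
    """Moment-based kurtosis minus 3 (assumption: 'kurtosis' means excess kurtosis)."""
    return _central_moment(values, 4) / _central_moment(values, 2) ** 2 - 3.0


def within_normality_bounds(values, bound=2.0):
    """True when both skewness and excess kurtosis lie inside (-bound, bound)."""
    return abs(skewness(values)) < bound and abs(excess_kurtosis(values)) < bound


# Hypothetical seven-point Likert responses to two items.
balanced_item = [1, 2, 3, 4, 5, 6, 7]
floor_item = [1] * 19 + [7]  # nearly everyone chose "strongly disagree"

print(within_normality_bounds(balanced_item))
print(within_normality_bounds(floor_item))
```

An item with no variance (every respondent giving the same rating) would cause a division by zero here and would need to be handled separately.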

EALS Long-Form Measurement Invariance

The factor structures of the previously published long form and the present long form were compared using mean and covariance structures (MACS) modeling (Little 1997). Each sample (previously published and present) has its own representative model reflecting factor structure. We imposed constraints across the two models to see whether equating the models for the two samples is defensible. If it is defensible, then measurement invariance has been demonstrated; namely, the EALS long form is functioning similarly for both samples. Measurement invariance was evaluated first by examining the equivalence of item factor loadings across the two models (weak measurement invariance) and second by adding the equality constraint of item (indicator) intercepts across the two models (strong measurement invariance).
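In conventional notation, the two levels of invariance can be written as nested equality constraints. The symbols below are assumptions for illustration and do not appear in the source: for group g (g = 1 for the published sample, g = 2 for the present sample), x is the vector of indicators, τ the indicator intercepts, Λ the factor loadings, ξ the latent factors, and δ the residuals.

```latex
\begin{align*}
  % Measurement model in each group
  x^{(g)} &= \tau^{(g)} + \Lambda^{(g)} \xi^{(g)} + \delta^{(g)}, \qquad g = 1, 2 \\
  % Weak invariance: loadings equated across groups
  \text{weak:} \quad \Lambda^{(1)} &= \Lambda^{(2)} \\
  % Strong invariance: loadings and intercepts equated across groups
  \text{strong:} \quad \Lambda^{(1)} &= \Lambda^{(2)} \quad \text{and} \quad \tau^{(1)} = \tau^{(2)}
\end{align*}
```

Strong invariance adds the intercept constraint on top of the loading constraint, so the strong model is nested within the weak one and the two can be compared by their change in fit.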

Subscale Specification

As in Hawley et al. (2011), each subscale (e.g., religious activity, genetic literacy, and evolutionary misconceptions) is represented by an aggregate of items known as a parcel (Little et al. 2002; Little et al. 2012). Parceling has the added benefits of requiring fewer model parameter estimates, reducing sampling error, and decreasing the likelihood of correlated residuals between items (Little et al. 2002). These parceled indicators are computed by calculating the mean response for all items representing a particular subscale. The goal of the present study is to reduce the number of items in a parcel that represents a given subscale in a way that ensures that the subscale has not changed in function.
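As a minimal sketch of the parcel computation just described, the snippet below averages one respondent's ratings within each subscale. The item identifiers, the item-to-subscale mapping, and the ratings are hypothetical placeholders, not the actual EALS item keys.

```python
from statistics import fmean


def compute_parcels(responses, subscale_items):
    """Return one parcel score per subscale: the mean rating of its items.

    responses: dict mapping item id -> rating on the seven-point scale
    subscale_items: dict mapping subscale name -> list of item ids
    """
    return {
        subscale: fmean([responses[item] for item in items])
        for subscale, items in subscale_items.items()
    }


# Hypothetical mapping and one respondent's hypothetical ratings.
subscale_items = {
    "political_activity": ["pa1", "pa2", "pa3"],
    "genetic_literacy": ["gl1", "gl2"],
}
responses = {"pa1": 2, "pa2": 3, "pa3": 4, "gl1": 6, "gl2": 7}

parcels = compute_parcels(responses, subscale_items)
print(parcels)
```

Shortening a subscale then amounts to passing a shorter item list for that subscale; the parcel is still a mean, so it stays on the same one-to-seven metric.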

Item Reduction

Accordingly, each of the 104 items from the long-form scale was examined both quantitatively and qualitatively for candidacy for retention. We deemed the items appropriate for retention if they (a) possessed strong factor loadings (i.e., standardized factor loadings greater than 0.60), (b) were sufficiently distinct from other items (i.e., minimized redundancy), (c) qualitatively represented their intended construct, and (d) demonstrated normal distributive properties.
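The four retention criteria can be combined into a simple screening rule, sketched below. Only the 0.60 loading cutoff comes from the text. The ±2.0 distribution bound is borrowed from the data-screening step as an assumption, and criteria (b) and (c) are qualitative judgments, so they appear here as manually assigned flags. All names and values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ItemStats:
    item_id: str
    std_loading: float   # standardized factor loading
    skew: float
    kurtosis: float
    redundant: bool      # criterion (b), judged by the researcher
    on_construct: bool   # criterion (c), judged by the researcher


def is_retention_candidate(item, min_loading=0.60, bound=2.0):
    """Apply criteria (a) through (d) to a single item."""
    strong_loading = item.std_loading > min_loading              # (a)
    distinct = not item.redundant                                # (b)
    representative = item.on_construct                           # (c)
    roughly_normal = abs(item.skew) < bound and abs(item.kurtosis) < bound  # (d)
    return strong_loading and distinct and representative and roughly_normal


# Hypothetical items illustrating three different outcomes.
items = [
    ItemStats("keep_me", 0.78, 0.3, -0.5, redundant=False, on_construct=True),
    ItemStats("weak_loading", 0.41, 0.1, 0.2, redundant=False, on_construct=True),
    ItemStats("off_construct", 0.82, 0.2, 0.1, redundant=False, on_construct=False),
]
retained = [item.item_id for item in items if is_retention_candidate(item)]
print(retained)
```

The third hypothetical item shows why the quantitative cutoff alone is not sufficient: an item can load highly and still be dropped on qualitative grounds.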

Examining Measurement Invariance Between EALS and EALS-SF

Finally, the previously published structure of the EALS long form and the newly derived EALS-SF were again examined for measurement invariance with MACS modeling, following the same logic as above. That is, the published long-form model has parceled indicators based on 104 items and the present short-form model has parceled indicators based on the reduced list of items. The question is whether the two models function similarly. All factor analyses were conducted using maximum likelihood estimation via the software package Mplus (version 6.0).

Replicating Validity with Regressions

Our initial analyses showed the relationships among Kansas demographics, personality factors, and the higher-order factors (Hawley et al. 2011). These analyses demonstrated that scores on the higher-order factor Knowledge/Relevance, for example, were less related to demographics than they were to openness to experience, a facet of personality. Thus, as a final check in the present study, we sought to replicate these documented patterns with the newly created short form.

Results

EALS Long-Form Measurement Invariance

As outlined above, before we examined items for retention, the long-form structure (Hawley et al. 2011) of the previously published and present samples must first be evaluated for strong measurement invariance (i.e., equivalent loadings and intercepts). To specify the measurement model for each sample, we created parcels by calculating the mean of all of the items within a particular subscale and using these means as indicators of the higher-order EALS constructs. For example, the construct Political/Religious Conservatism was indicated by the parcels religious activity, conservative self-identity, attitudes toward life, young earth creationism, and relevance of evolution. We repeated this procedure for each of the six higher-order EALS constructs in order to recreate the previously published model and a second identical model for our present sample. We then conducted a multiple group confirmatory factor analysis (CFA) to test for measurement invariance between the two models by comparing the structure on the present sample against the previously published structure.

Table 1 displays the model fit statistics from the test of group invariance between the published and present long-form structures. Both weak and strong measurement invariance were met with no significant change in model fit. Consequently, we could confidently move forward to derive the short form on the present sample.
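The text does not state which criterion was used to judge the change in model fit. One widely used rule of thumb, from Cheung and Rensvold (2002), treats invariance as tenable when the comparative fit index (CFI) drops by no more than 0.01 as constraints are added. The sketch below assumes that rule; the function name and the CFI values are hypothetical and are not taken from Table 1.

```python
def invariance_tenable(cfi_less_constrained, cfi_more_constrained, max_drop=0.01):
    """True when adding constraints lowers CFI by no more than max_drop.

    Assumption: the delta-CFI rule of thumb is the decision criterion.
    """
    drop = cfi_less_constrained - cfi_more_constrained
    return drop <= max_drop


# Hypothetical CFI values for the configural, weak, and strong models.
cfi_configural, cfi_weak, cfi_strong = 0.950, 0.947, 0.942

weak_ok = invariance_tenable(cfi_configural, cfi_weak)
strong_ok = invariance_tenable(cfi_weak, cfi_strong)
print(weak_ok, strong_ok)
```

Each step is compared against the model one level less constrained, which mirrors the sequence of first equating loadings and then equating intercepts.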

Item Reduction

Items were retained based on quantitative and qualitative criteria, including strong factor loadings, distinctness from other items, and normal distributive properties. For example, the social objections item, “applying the theory of evolution to human affairs implies we are not fully in control of our behavior” was removed because it did not demonstrate a sufficiently high factor loading. Similarly, “theories requiring more untested assumptions are generally better than theories with fewer assumptions” was also removed as its factor loading did not reach criterion. The item “the theory of evolution helps us understand plants” measuring relevance of evolutionary theory was removed because it was redundant with “evolutionary theory is highly relevant for biology.” Finally, “Humans were specially designed” was removed from intelligent design fallacies because it is not an unambiguous representative of the creationist movement despite its high factor loading on the construct. In order to maintain construct stability (see Brown 2006), we retained at least three items for each subscale. In the end, 42 items were removed from the 104-item long-form EALS to result in a 62-item short form (EALS-SF).

Measurement Invariance Between EALS and EALS-SF

As before, the structure of the previously published long form was used as the standard of comparison for the present short-form structure. Parceled indicators were computed for the present sample by calculating the mean response for the shortened list of items representing a particular subscale. For example, in the previously published EALS long form, the lower-order construct political activity was represented by six items, and as a parceled indicator, had a mean of 2.96 (SD = 1.14). The present abbreviated parceled indicator for Political Activity of the EALS-SF consisted of only three items, which had a mean of 2.86 (SD = 1.25). This procedure was carried out for each of the 16 subscales of the shortened form. We then used these 16 newly created EALS-SF parcels to specify the same hierarchical model tested and validated in the previously published long-form EALS. We conducted another multiple-group CFA to examine the levels of measurement invariance between a hierarchical model indicated with parcels from the previously published long-form EALS and a hierarchical model indicated with parcels from the 62 items of the EALS-SF.

Table 2 displays the model fit statistics from the test of measurement invariance between the EALS and the EALS-SF. Again, standards for both weak and strong measurement invariance were met, suggesting that the short form is a suitable representation of the long form. Table 3 displays all retained items with their standardized factor loadings, alpha reliabilities, means, and standard deviations for each factor. Table 4 displays the latent correlations among the 16 parceled indicators, representing interrelationships among subscales. Table 5 displays the latent correlations among the six higher-order constructs of the EALS-SF. Finally, Fig. 1 displays the hierarchical model for the EALS-SF with standardized factor loadings. The EALS-SF demonstrated acceptable model fit (see Fig. 1 for fit statistics).
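For readers who want to compute reliabilities on their own data, the sketch below assumes that the alpha reliabilities reported in Table 3 are Cronbach's alpha, which the text does not state explicitly. The function name and the ratings are hypothetical.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for one subscale.

    rows: one list of item ratings per respondent, all of equal length.
    Uses alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score)).
    """
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_columns = list(zip(*rows))  # regroup ratings by item
    item_variance_sum = sum(variance(col) for col in item_columns)
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical ratings from four respondents on a two-item subscale.
ratings = [[1, 2], [2, 1], [3, 4], [4, 3]]
alpha = cronbach_alpha(ratings)
print(round(alpha, 2))
```

Because alpha depends on the number of items as well as their intercorrelations, a shortened subscale can show a somewhat lower alpha even when the retained items are the strongest ones.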

Replication of Validity with Regression

Finally, Hawley et al. (2011) showed that demographic variables collectively accounted for less variability in the EALS higher-order constructs than common stereotypes would suggest (less than 10% for all six higher-order factors). In contrast to demographic information, openness to experience significantly predicted Knowledge/Relevance, Creationist Reasoning, and Exposure to Evolution, accounting for as much as 10% of the variance on its own. Similar to these published results, latent regressions on the present short form demonstrated that the same demographic variables again account for less than 10% of the variability (see Table 6). Likewise, openness to experience was again a strong predictor, uniquely accounting for variability in Knowledge/Relevance, Creationist Reasoning, and Exposure to Evolution. Interestingly, the same set of predictors accounted for more variability in Exposure to Evolution for the short form than for the long form (14% for the EALS and 24% for the EALS-SF). This pattern in the long form is probably due to the presence of weak scale items, which in general tend to decrease a construct’s common variance. Thus, their removal in the short form increased the common variance shared among retained items in the construct, providing more variability for openness to experience to predict.

Discussion

The present study demonstrated the suitability of a short form of the EALS to stand in for the long form. Using best practice methodology, we reduced the 104-item survey to 62 items (a reduction of 40%), all the while maintaining weak and strong measurement invariance and replicating previously published patterns. For this reason, researchers can confidently use the short form over the long form, which should substantially reduce the amount of time needed for participants to complete the measure while maintaining survey validity.

With a workable short form of the EALS, researchers and educators can now more easily adopt the EALS-SF as a capable measurement tool for testing theoretically derived predictions about the relationships among political and religious ideation, knowledge acquisition, and attitudes. Additionally, the EALS-SF may benefit educators interested in assessing the curricular effectiveness of their evolution- and biology-themed courses. Future longitudinal research will assess students in a variety of evolution-themed courses with the EALS and EALS-SF, as well as continue to validate these scales with cross-national samples.

Study Limitations

The EALS and EALS-SF have been used predominantly (though not solely; O’Brien et al. 2009) with college students from Kansas. It could be argued that Kansas youth are not representative of youth at large and that this lack of representativeness affects the survey structure. Several issues stand in the way of this conclusion, however. First, the subscales were all theoretically derived and informed by others’ work based on non-Kansas samples (e.g., Carney et al. 2008; Ingram and Nelson 2006; Miller et al. 2006). Second, preliminary analyses (unpublished) suggest that the structures derived from Kansas and New York data are remarkably similar. Where differences among states are expected to occur, however, is in the means and variances of the higher-order constructs. For example, some states may have lower means and be more homogeneous (i.e., less variable) in constructs such as creationist reasoning. These latter types of questions, though highly important, stand outside the present work.

Additionally, we sought concurrent validity in relationships among predictors of our survey subscales, but validity can also be sought in scales’ ability to predict important outcomes (i.e., predictive ability) such as academic achievement (e.g., grades in school). These are important questions for future work.

Last, not all content areas of evolution are represented in our survey. Indeed, the survey is not intended to be used as a comprehensive examination, but rather as a workable measurement tool for assessing knowledge and attitudes writ large. For this reason, we suggest that researchers freely include their own subscales in their assessment protocol in addition to those of interest from the EALS-SF. Questions concerning the relationships between additional subscales and validated EALS-SF subscales would certainly be interesting.

Conclusions

The EALS-SF is a comprehensive survey instrument with a validated structure. We hope it will be useful for college educators to assess curricular effectiveness and attainment of specified learning goals, changes in attitudes about course content, and predominant regional belief systems and their roles in science understanding and attitudes.

Notes

Footnote 1. The sample on which the published long form is based included 371 undergraduates: 327 from a Child Psychology course and 44 from a Social Psychology course at the same large Midwestern university (see Hawley et al. 2011 for details). In the aggregate, they represented nearly 40 declared majors, 102 were men and 269 were women, and their average age was 20.67 (SD = 2.05) years.

Footnote 2. Participants were informed their responses were anonymous and confidential with no identifying information being associated with their survey data, and that they could opt out of the study at any time without penalty. In addition, an alternative extra credit assignment was available for students who did not wish to participate in the current study. Assured anonymity and confidentiality were implemented to reduce the possibility of social desirability influencing survey responses.

References

Brown TA. Confirmatory factor analysis for applied research. New York: Guilford; 2006.

Carney DA, Jost JT, Gosling SD, Potter J. The secret lives of liberals and conservatives: personality profiles, interaction styles, and the things they leave behind. Polit Psychol. 2008;29:807–40.

Cheung GW, Rensvold RB. Evaluating goodness-of-fit indexes for testing measurement invariance. Struct Equ Model. 2002;9:233–55.

Dudley RL, Cruise RJ. Measuring religious maturity: a proposed scale. Rev Relig Res. 1990;32:97–109.

Hawley PH, Short SD, McCune LA, Osman MR, Little TD. What’s the matter with Kansas?: the development and confirmation of the Evolutionary Attitudes and Literacy Survey (EALS). Evol Educ Outreach. 2011;4:117–32.

Ingram EL, Nelson CE. Relationship between achievement and students’ acceptance of evolution or creation in an upper-level evolution course. J Res Sci Teach. 2006;43(1):7–24.

Little TD. Mean and covariance structures (MACS) analyses of cross-cultural data: practical and theoretical issues. Multivar Behav Res. 1997;32:53–76.

Little TD, Cunningham WA, Shahar G, Widaman KF. To parcel or not to parcel: exploring the question, weighing the merits. Struct Equ Model. 2002;9:151–73.

Little TD, Rhemtulla M, Gibson K, Schoemann AM. Why the items versus parcels controversy needn’t be one. Psychol Methods. 2012 (in press).

Lombrozo T, Thanukos A, Weisberg M. The importance of understanding the nature of science for accepting evolution. Evol Educ Outreach. 2008;1:290–8.

McFadden BJ, Dunckel BA, Ellis S, Dierking LD, Abraham-Silver L, Kisiel J, Koke J. Natural history museum visitors’ understanding of evolution. Bioscience. 2007;57:875–82.

Miller JD, Scott EC, Okamoto S. Public acceptance of evolution. Science. 2006;313:765–6.

O’Brien DT, Wilson DS, Hawley PH. “Evolution for Everyone”: a course that expands evolutionary theory beyond the biological sciences. Evol Educ Outreach. 2009;2(3):445–57.

Paterson FRA, Rossow LA. “Chained to the devil’s throne”: evolution & creation science as a religio-political issue. Am Biol Teach. 1999;5:358–64.

Rutledge ML, Sadler KC. Reliability of the Measure of Acceptance of the Theory of Evolution (MATE) instrument with university students. Am Biol Teach. 2007;69:332–5.

Scott EC. Evolution vs. Creationism. Westport, CT: Greenwood Press; 2004.

Author Note

We extend our gratitude to Dr. James Orr for supporting our research and Dr. Todd Little for his statistical guidance. In addition, we thank the students of Kansas who participated in our survey.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License ( https://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Short, S.D., Hawley, P.H. Evolutionary Attitudes and Literacy Survey (EALS): Development and Validation of a Short Form. Evo Edu Outreach 5, 419–428 (2012). https://doi.org/10.1007/s12052-012-0429-7

DOI: https://doi.org/10.1007/s12052-012-0429-7