Abstract

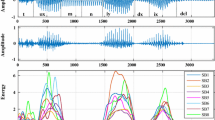

Detecting transitions between broad phonetic classes in a speech signal has applications such as landmark detection and segmentation. The proposed hierarchical method detects silence-to-non-silence transitions, sonorant-to-non-sonorant transitions, and vice versa. From each frame of the bandpass-filtered speech signal, the method selects the subset of extrema (minimum or maximum amplitude samples, occurring between every pair of successive zero-crossings) that lie above a threshold. If the frame belongs to a non-transition segment, the first and the last of these extrema lie far from the mid-point (reference) of the frame, one on either side; otherwise, one of them lies within a few samples of the reference, which marks the frame as a transition frame. Transitions are detected over the entire TIMIT database for clean speech, and 93.6% of them lie within a tolerance of 20 ms of the phone boundaries. The sonorant, unvoiced non-sonorant and silence classes and their respective onsets are detected with an accuracy of about 83.5% for the same tolerance, with the labelled TIMIT database as reference. The results are as good as, and in some respects better than, those of state-of-the-art methods for similar tasks. The method is also tested on the TIMIT test set for robustness to white, babble and Schroeder noise; about 90% of the transitions are detected within a tolerance of 20 ms at a signal-to-noise ratio of 5 dB. On the NTIMIT database, 62.7% of the transitions and 63.5% of the sonorant onsets are detected within a 20 ms tolerance.
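The frame-level test described in the abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the use of absolute amplitude for the threshold, the value `near=5` for "a few samples", and the handling of frames with no qualifying extrema are all assumptions made for illustration, and the bandpass filtering step is assumed to have been applied already.

```python
import math

def zero_crossings(frame):
    # Index i marks a sign change between frame[i - 1] and frame[i].
    return [i for i in range(1, len(frame))
            if (frame[i - 1] < 0) != (frame[i] < 0)]

def extrema_locations(frame, threshold):
    # One extremum per pair of successive zero-crossings: the sample with
    # the largest absolute amplitude. Keep it only if it exceeds the
    # threshold (assumption: the threshold applies to absolute amplitude).
    zc = zero_crossings(frame)
    locations = []
    for start, end in zip(zc, zc[1:]):
        peak = max(range(start, end), key=lambda i: abs(frame[i]))
        if abs(frame[peak]) > threshold:
            locations.append(peak)
    return locations

def is_transition_frame(frame, threshold, near=5):
    # A frame is flagged when the first or the last selected extremum lies
    # within `near` samples of the frame mid-point (the reference).
    # Assumption: a frame with no selected extrema is not flagged here.
    locations = extrema_locations(frame, threshold)
    if not locations:
        return False
    mid = len(frame) // 2
    return abs(locations[0] - mid) <= near or abs(locations[-1] - mid) <= near

# Synthetic frames (hypothetical data): a steady tone, and a tone whose
# amplitude jumps from 0.01 to 1.0 at the frame mid-point.
steady = [math.sin(2 * math.pi * (i + 0.5) / 20) for i in range(400)]
onset = [(0.01 if i < 200 else 1.0) * s for i, s in enumerate(steady)]

print(is_transition_frame(steady, threshold=0.1))
print(is_transition_frame(onset, threshold=0.1))
```

In the steady frame the selected extrema span the whole frame, so the first and last lie far from the mid-point. In the onset frame the low-level first half contributes no extrema above the threshold, so the first selected extremum falls just after the mid-point and the frame is flagged.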



Cite this article

Ananthapadmanabha, T.V., Vijay Girish, K.V. & Ramakrishnan, A.G. Relative occurrences and difference of extrema for detection of transitions between broad phonetic classes. Sādhanā 43, 153 (2018). https://doi.org/10.1007/s12046-018-0923-x

DOI: https://doi.org/10.1007/s12046-018-0923-x