Abstract

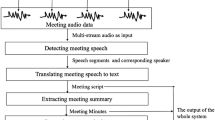

This paper is about the recognition and interpretation of multiparty meetings captured as audio, video and other signals. This is a challenging task since the meetings consist of spontaneous and conversational interactions between a number of participants: it is a multimodal, multiparty, multistream problem. We discuss the capture and annotation of the Augmented Multiparty Interaction (AMI) meeting corpus, the development of a meeting speech recognition system, and systems for the automatic segmentation, summarization and social processing of meetings, together with some example applications based on these systems.

Cite this article

RENALS, S. Automatic analysis of multiparty meetings. Sadhana 36, 917–932 (2011). https://doi.org/10.1007/s12046-011-0051-3