Abstract

Large waves can damage offshore infrastructure and affect marine facilities. Coastal engineering studies therefore require probability estimates of the most extreme wave height expected during the lifetime of a structure. This study predicts significant wave height using machine learning (ML) techniques combined with generalized extreme value (GEV) theory and applies the approach to extreme wave analysis. Sixty years of wind speed, wind direction, sea temperature, and swell height data describing the wave characteristics were obtained from the European Centre for Medium-Range Weather Forecasts (ECMWF). For the extreme wave analysis, the block maxima approach in GEV was used to account for the seasonal variations present in the data. The estimated location, scale, and shape parameters of the GEV distribution are used in the ML model to predict significant wave heights along with their return periods. Three ML algorithms, linear regression (LR), artificial neural networks (ANN), and support vector machines (SVM), are evaluated in terms of R² performance. The model comparison suggests that the SVM model outperforms the LR and ANN models, with an accuracy of 99.80%. Finally, the GEV analysis gives extreme wave heights of 2.348, 3.470, and 4.713 m for return periods of 5, 20, and 100 yr, respectively. Hence, the developed model can predict both significant wave height and extreme waves for the design of coastal structures.

References

Afzal M S and Kumar L 2021 Propagation of waves over a rugged topography; J. Ocean Eng. Sci. 7(1) 14–28.

Asma S, Sezer A and Ozdemir O 2012 MLR and ANN models of significant wave height on the west coast of India; Comp. Geosci. 49 231–237.

Bauer E, Hasselmann S, Hasselmann K and Graber H C 1992 Validation and assimilation of Seasat altimeter wave heights using the WAM wave model; J. Geophys. Res.: Oceans 97(C8) 12,671–12,682.

Baylar A, Hanbay D and Batan M 2009 Application of least square support vector machines in the prediction of aeration performance of plunging overfall jets from weirs; Expert Syst. Appl. 36(4) 8368–8374.

Booij N, Holthuijsen L H and Ris R C 1996 The SWAN wave model for shallow water; In: Coastal Engineering, pp. 668–676.

Browne M, Castelle B, Strauss D, Tomlinson R, Blumenstein M and Lane C 2007 Near-shore swell estimation from a global wind-wave model: Spectral process, linear, and artificial neural network models; Coast. Eng. 54(5) 445–460.

Brownlee J 2018 A gentle introduction to k-fold cross-validation; Machine Learning Mastery.

Camps-Valls G, Gómez-Chova L, Calpe-Maravilla J, Martín-Guerrero J D, Soria-Olivas E, Alonso-Chordá L and Moreno J 2004 Robust support vector method for hyperspectral data classification and knowledge discovery; IEEE Trans. Geosci. Remote Sens. 42(7) 1530–1542.

Dee D P, Uppala S M, Simmons A J, Berrisford P, Poli P, Kobayashi S, Andrae U, Balmaseda M A, Balsamo G and Bauer D P et al. 2011 The ERA-Interim reanalysis: Configuration and performance of the data assimilation system; Quart. J. Roy. Meteorol. Soc. 137(656) 553–597.

Dehghan M, Nourian M and Menhaj M B 2009 Numerical solution of Helmholtz equation by the modified Hopfield finite difference techniques; Numer. Methods Partial Differ. Equ. 25(3) 637–656.

Deshmukh A N, Deo M C, Bhaskaran P K, Nair T M B and Sandhya K G 2016 Neural-network-based data assimilation to improve numerical ocean wave forecast; IEEE J. Ocean. Eng. 41(4) 944–953.

Dutta D, Mandal A and Afzal M S 2020 Discharge performance of plan view of multi-cycle W-form and circular arc labyrinth weir using machine learning; Flow Measure. Instrument. 73 1–10.

El Adlouni S, Ouarda T B M J, Zhang X, Roy R and Bobée B 2007 Generalized maximum likelihood estimators for the nonstationary generalized extreme value model; Water Resour. Res. 43(3) 1–13.

Emanuel K and Jagger T 2010 On estimating hurricane return periods; J. Appl. Meteorol. Climatol. 49(5) 837–844.

Fadel S, Ghoniemy S, Abdallah M, Sorra H A, Ashour A and Ansary A 2016 Investigating the effect of different kernel functions on the performance of SVM for recognizing Arabic characters; Int. J. Adv. Computer Sci. Appl. 7(1) 446–450.

Fan S, Xiao N and Dong S 2020 A novel model to predict significant wave height based on long short-term memory network; Ocean Eng. 205 1–13.

WAMDI Group 1988 The WAM model, A third generation ocean wave prediction model; J. Phys. Oceanogr. 18(12) 1775–1810.

Gunn S R 1998 Support vector machines for classification and regression; ISIS Technical Report 14(1) 5–16.

Huang C, Davis L and Townshend J 2002 An assessment of support vector machines for land cover classification; Int. J. Remote Sens. 23(4) 725–749.

Delft Hydraulics 1999 Delft-3D Flow Manual; Delft.

James S C, Zhang Y and O’Donncha F 2018 A machine learning framework to forecast wave conditions; Coast. Eng. 137 1–10.

Janssen P A E M 2003 The wave model: May 1995; ECMWF Meteorological Training Course Lecture Series.

Janssen P A E M, Lionello P, Reistad M and Hollingsworth A 1989 Hindcasts and data assimilation studies with the WAM model during the Seasat period; J. Geophys. Res.: Oceans 94(C1) 973–993.

Jia X, Willard J, Karpatne A, Read J, Zwart J, Steinbach M and Kumar V 2019 Physics guided RNNs for modeling dynamical systems: A case study in simulating lake temperature profiles; Proceedings of the 2019 SIAM International Conference on Data Mining, pp. 558–566.

Juma B, Olang L O, Hassan M, Chasia S, Bukachi V, Shiundu P and Mulligan J 2021 Analysis of rainfall extremes in the Ngong River Basin of Kenya: Towards integrated urban flood risk management; Phys. Chem. Earth, Parts A/B/C 124(1) 1–11.

Kalra R, Deo M C, Kumar R and Agarwal V K 2005 RBF network for spatial mapping of wave heights; Mar. Struct. 18(3) 289–300.

Khlongkhoi P, Chayantrakom K and Kanbua W 2019 Application of a deep learning technique to the problem of oil spreading in the Gulf of Thailand; Adv. Differ. Equ. (2019)306 1–9.

Kumar L, Afzal M S and Afzal M M 2020 Mapping shoreline change using machine learning: A case study from the eastern Indian coast; Acta Geophys. 68 1127–1143.

Lin X G 2003 Statistical modelling of severe wind gust; International congress on modelling and simulation, Townsville, pp. 620–625.

Londhe S N, Shah S, Dixit P R, Nair T M B, Sirisha P and Jain R 2016 A coupled numerical and artificial neural network model for improving location specific wave forecast; Appl. Ocean Res. 59 483–491.

Lou R, Lv Z, Dang S, Su T and Li X 2021 Application of machine learning in ocean data; Multimedia Syst., pp. 1–10.

Luo W and Flather R 1997 Nesting a nearshore wave model (SWAN) into an ocean wave model (WAM) with application to the southern North Sea; WIT Trans. Built Environ., WIT Press 27 253–264.

Mahjoobi J and Mosabbeb E A 2009 Prediction of significant wave height using regressive support vector machines; Ocean Eng. 36(5) 339–347.

Mahjoobi J, Etemad-Shahidi A and Kazeminezhad M H 2008 Hindcasting of wave parameters using different soft computing methods; Appl. Ocean Res. 30(1) 28–36.

Maier H and Dandy G 2004 Artificial neural networks: A flexible approach to modelling; Water 31 55–65.

Melgani F and Bruzzone L 2004 Classification of hyperspectral remote sensing images with support vector machines; IEEE Trans. Geosci. Remote Sens. 42(8) 1778–1790.

Montgomery D C, Peck E A and Vining G G 2021 Introduction to linear regression analysis; John Wiley & Sons.

Musić S and Nicković S 2008 44-year wave hindcast for the Eastern Mediterranean; Coast. Eng. 55(11) 872–880.

Myung I J 2003 Tutorial on maximum likelihood estimation; J. Math. Psychol. 47(1) 90–100.

Nourani V and Babakhani A 2012 Integration of artificial neural networks with radial basis function interpolation in earthfill dam seepage modeling; J. Comput. Civil Eng. 27(2) 183–195.

Palutikof J P, Brabson B B, Lister D H and Adcock S T 1999 A review of methods to calculate extreme wind speeds; Meteorol. Appl. 6(2) 119–132.

Read J S, Jia X, Willard J, Appling A P, Zwart J A, Oliver S K, Karpatne A, Hansen G J A, Hanson P C and Watkins W et al. 2019 Process-guided deep learning predictions of lake water temperature; Water Resour. Res. 55(11) 9173–9190.

Shajitha S H and Perera K 2014 Estimating return values of significant sea wave heights in Colombo, Sri Lanka, pp. 469–473.

Smola A J and Schölkopf B 2004 A tutorial on support vector regression; Stat. Comput. 14(3) 199–222.

Tsai C C, Wei C C, Hou T H and Hsu T W 2017 Artificial neural network for forecasting wave heights along a ship’s route during hurricanes; J. Waterway, Port, Coastal, Ocean Eng. 144(2) 1–12.

Tur R, Pekpostalci D S, Arli Küçükosmanoğlu Ö and Küçükosmanoğlu A 2017 Prediction of significant wave height along Konyaalti Coast; Int. J. Eng. Appl. Sci. 9(4) 106–114.

Uppala S M, Kållberg P W, Simmons A J, Andrae U, Bechtold V D C, Fiorino M, Gibson J K, Haseler J, Hernandez A and Kelly G A et al. 2005 The ERA-40 re-analysis; Quart. J. Roy. Meteorol. Soc.: J. Atmos. Sci. Appl. Meteorol. Phys. Oceanogr. 131(612) 2961–3012.

Vapnik V 1963 Pattern recognition using generalized portrait method; Automat. Remote Control 24 774–780.

Vapnik V N and Chervonenkis AYa 1965 On a class of pattern-recognition learning algorithms; Automat. i Telemekh. 25 937–945.

Vimala J, Latha G and Venkatesan R 2014 Real Time wave forecasting using artificial neural network with varying input parameter; Indian J. Mar. Sci. 43 82–87.

Warren I R and Bach H 1992 MIKE 21: A modelling system for estuaries, coastal waters and seas; Environ. Softw. 7(4) 229–240.

Zhang X, Li Y, Gao S and Ren P 2021 Ocean wave height series prediction with numerical long short-term memory; J. Mar. Sci. Eng. 9(5) 5.

Acknowledgements

This work was carried out as part of the Institute Scheme for Innovative Research and Development (ISIRD) program of IIT Kharagpur. We also thank ECMWF for the 60-year wind, wind-sea and swell parameter dataset for Mehamn.

Author information

Contributions

MSA: Conceptualisation of work, methodology, manuscript preparation, supervision and revision of the draft manuscript. LK: Data collection, numerical modelling, data analysis and interpretation of results, and drafting the original and revised manuscript. VC and YK: Model analysis, numerical modelling and data analysis. MZ: Data analysis and interpretation, and drafting the revised manuscript.

Additional information

Communicated by Parthasarathi Mukhopadhyay

Appendix A

A.1 Machine learning models

A.1.1 Linear regression (LR)

Linear regression formulates the output as a linear function of the input variables such that the values given by this function are close to the actual output. The fitted linear function (the hypothesis) can then be used to predict the output for any given input. The method defines a cost function of the model parameters (the constant coefficients of the linear function), which measures the difference between the predicted and actual values: the smaller the cost, the smaller this difference. The parameters are therefore determined by minimizing the cost function, using optimization techniques such as gradient descent.
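The minimization described above can be illustrated with a minimal sketch, assuming a single input feature, a mean squared error cost, and batch gradient descent. The function names, learning rate, iteration count, and toy data are illustrative assumptions, not the configuration used in this study.

```python
def mse_cost(xs, ys, w, b):
    """Cost J(w, b) = (1 / 2n) * sum((w*x + b - y)^2)."""
    n = len(xs)
    return sum(((w * x + b) - y) ** 2 for x, y in zip(xs, ys)) / (2 * n)


def fit_linear_regression(xs, ys, lr=0.05, epochs=2000):
    """Fit the hypothesis y = w*x + b by batch gradient descent on J(w, b)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Residuals of the current hypothesis on every sample.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Partial derivatives of J with respect to w and b.
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(errors) / n
        # Step against the gradient to reduce the cost.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy data lying exactly on y = 2x + 1 (illustrative, not from the study).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_linear_regression(xs, ys)
print(w, b, mse_cost(xs, ys, w, b))
```

With several input features the same update applies to each coefficient in turn; only the hypothesis gains additional terms.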

A.1.2 Artificial neural network (ANN)

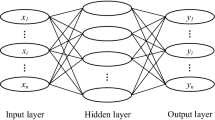

An artificial neural network (ANN) consists of an input layer, a series of hidden layers, and an output layer, each with its own number of nodes. The input layer has as many nodes as there are input features. Similarly, the output layer has as many nodes as there are outputs required from the model, which is generally one for regression models. The number of nodes in each hidden layer is a tunable parameter of the model.

In this neural network technique, the input layer takes the feature values as input. The input layer contains an activation function (such as relu, tanh, or sigmoid), which the input passes through to give the output of the input layer. The input layer is connected to the hidden layer through weight factors, which determine the relative contribution of the different nodes in the hidden layer. The output of the input layer acts as the input to the hidden layer, and the activation function then determines the output of the hidden layer. The hidden layer output is multiplied by the subsequent weight factors to obtain the input to the output layer, and the final output is obtained by summing all the inputs of the output layer. After the first iteration, the weights are updated, and the process is repeated until the difference between the actual and predicted output is minimized. This procedure is called the backpropagation algorithm.

A multilayer perceptron neural network is used in this study, comprising three hidden layers with 10, 20 and 30 nodes, respectively. The number of nodes in each layer was decided by iterating over different numbers of nodes per layer. The relu (rectified linear unit) activation function was used for training the model. The other two widely used activation functions, tanh and logistic, have output values bounded in the ranges [−1, 1] and [0, 1], respectively, and hence are more suitable for classification problems. The output of relu is not bounded in this way, so it is used as the activation function for training the model.
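As a rough illustration of this architecture, the sketch below runs one forward pass through a network with hidden layers of 10, 20 and 30 relu nodes and a single linear output node. The four input features, the random weight initialisation, and all function names are assumptions made for the example; training by backpropagation is omitted.

```python
import random


def relu(z):
    """Rectified linear unit: passes positive values, clips negatives to zero."""
    return z if z > 0.0 else 0.0


def init_layer(n_in, n_out, rng):
    """Random weights (n_out rows of n_in values) and zero biases."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def forward(x, layers):
    """Propagate one input vector through the network.

    Hidden layers apply relu; the last layer is left linear so that the
    regression output is not bounded.
    """
    activation = list(x)
    last = len(layers) - 1
    for i, (weights, biases) in enumerate(layers):
        # Weighted sum of the previous layer's outputs for every node.
        z = [sum(w * a for w, a in zip(row, activation)) + b
             for row, b in zip(weights, biases)]
        activation = z if i == last else [relu(v) for v in z]
    return activation


rng = random.Random(0)
sizes = [4, 10, 20, 30, 1]  # 4 input features is an assumption
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]
prediction = forward([0.3, -1.2, 0.8, 0.1], layers)
print(prediction)
```

Leaving the output node linear is a common choice for regression, since the predicted wave height should not be confined to a fixed interval.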

A.1.3 Support vector machine (SVM)

Support vector machine (SVM) algorithms rely on a set of mathematical functions known as kernels. A kernel function takes the input data and transforms them into the required form; essentially, it returns the inner product between two points in an appropriate feature space. Linear, non-linear, polynomial, and radial basis functions are used as kernel functions in the SVM algorithm (Gunn 1998). Kernel functions have gained considerable attention in the last few years, especially as the SVM algorithm gained popularity. In many applications, kernel functions provide a simple bridge from linearity to non-linearity for algorithms that can be expressed in terms of dot products (Fadel et al. 2016). In this paper, the Gaussian radial basis function (RBF) kernel, a popular kernel in various kernelized learning algorithms, has been used for the prediction of significant wave height with the SVM model, as it outperformed the other kernels by a significant margin (the comparison is shown in the results section).

Gamma (γ) is an important parameter of the Gaussian RBF kernel. It determines how far the influence of a single training example reaches, with low values meaning ‘far’ and high values meaning ‘close’. Gamma can be viewed as the inverse of the radius of influence of the samples selected by the model as support vectors. The narrower the Gaussian RBF kernels (larger gamma), the ‘spikier’ the resulting hypersurface becomes. Conversely, if the kernels are too wide (small gamma), the hypersurface is almost flat. Hence, choosing an optimum value of gamma is very important.
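The role of gamma can be seen directly from the kernel itself. The sketch below assumes the common form k(x, x′) = exp(−γ‖x − x′‖²) of the Gaussian RBF kernel; the function name, the two sample points, and the gamma values are illustrative and not taken from the study.

```python
import math


def rbf_kernel(x, y, gamma):
    """Gaussian RBF kernel k(x, y) = exp(-gamma * ||x - y||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


# Two points at a fixed squared distance of 2 (illustrative values).
x, y = [0.0, 0.0], [1.0, 1.0]
for gamma in (0.01, 1.0, 100.0):
    # Small gamma: y is still strongly influenced by x (wide kernel).
    # Large gamma: the influence of x has almost vanished at y (narrow kernel).
    print(gamma, rbf_kernel(x, y, gamma))
```

A kernel value near one for every pair of points corresponds to the nearly flat hypersurface described above, while values that fall to almost zero away from each support vector correspond to the spiky case.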

The advantages of the RBF are ease of construction, good generalization, high tolerance to input noise, and the capacity to learn. The radial basis function can be used to develop both SVM and ANN models. When dealing with large, high-dimensional datasets, however, ANNs with RBF are ineffective owing to their sensitivity to the Hughes phenomenon. SVMs with a radial basis function kernel have been applied effectively to high-dimensional datasets in recent years (Huang et al. 2002; Camps-Valls et al. 2004; Melgani and Bruzzone 2004). SVMs with an RBF kernel can handle large input spaces, cope robustly with noisy samples, and produce sparse solutions.

Cite this article

Afzal, M.S., Kumar, L., Chugh, V. et al. Prediction of significant wave height using machine learning and its application to extreme wave analysis. J Earth Syst Sci 132, 51 (2023). https://doi.org/10.1007/s12040-023-02058-5

DOI: https://doi.org/10.1007/s12040-023-02058-5