Abstract

This article assesses whether current European law sufficiently captures gender-based biases and algorithmic discrimination in the context of artificial intelligence (AI) and provides a short analysis of a draft EU legislative proposal, the Artificial Intelligence Act. To this end, current trends and uses of algorithms with potential impacts on gender will be analysed through the lens of direct and indirect impacts for gender equality law, highlighting the implications for European gender equality enforcement. This article concludes that legislative and accompanying policy measures are necessary to ensure an effective gender equality policy and to avoid algorithmic discrimination.


1 Introduction

AlgorithmsFootnote 1 have played a major role for many years but have only recently caught the attention of European and international regulators. Despite living in the “Age of Algorithms”,Footnote 2 the impact of algorithms on gender equalityFootnote 3 has received little attention,Footnote 4 requiring, as it does, detailed analysis of challenges and opportunities as well as possible discriminatory outcomes.Footnote 5 In case law,Footnote 6 in particular, when it comes to gender equality and discrimination, analysis of algorithms is still a rarity.Footnote 7 An outlier is the judgment of the Italian Consiglio di Stato reviewing the legality of using algorithms in public administration to automatically allocate school postings for teachers.Footnote 8

Some forms of discrimination are visible and perceived directly by women and men, such as the algorithm that denied a woman access to the women's lockers in a gym because her “Dr.” title was associated with men.Footnote 9 Others are invisible, for example, where algorithms sort CVs in a fully automated application procedure and do not select women or men because of their sex.Footnote 10 Other behaviour does not necessarily cross the threshold of discriminatory behaviour under EU law but clearly poses problems in terms of gender bias, stereotypesFootnote 11 and gender equality policy goals. It therefore represents a threat to gender equality in general but also concretely paves the way for future gender-based discrimination. Besides biases and stereotypes contained in datasets used for training algorithmsFootnote 12 and the intentional or unintentional introduction of biases into the design and programming of the algorithms,Footnote 13 there is another underlying problem that might favour gender inequalities: since its early days the “gender make-up of the AI community”Footnote 14 has greatly influenced the way algorithms are shaped and consequently has an impact on how algorithms work, leading to potential discriminatory outcomes.

2 The challenges and opportunities of algorithms for achieving gender equality goals

Grasping gender-based discrimination when algorithms are used remains a legal challenge. The key problem is that under EU law, gender-based discrimination is prohibited if a certain type of behaviour (for example, not hiring a woman because of pregnancy, or a company policy favouring men) represents a clear violation of gender equality rules.Footnote 15 If a company uses algorithms for its recruitment procedure,Footnote 16 the legal qualification as discrimination should be no different in principle. Indeed, algorithms might produce biases (“machine bias”)Footnote 17 or reproduce biases or stereotypes and might even favour gender-based discrimination. The real challenge for policy-makers, however, is that algorithms might only reproduce or reinforce existing societal biases and stereotypes. If algorithms such as those used in search engines merely favour certain biases and stereotypes and encourage certain behaviour, it is not clear whether the threshold of discrimination is crossed. To combat some forms of behaviour, the EU would deploy only policy measures, whereas for others, legislative action could be the solution.

The proposed EU Artificial Intelligence Act (AIA)Footnote 18 foresees restrictions and regulatory actions for certain forms of behaviour that involve gender equality questions. One example relevant to many companiesFootnote 19 is covered by the Artificial Intelligence Act: recruitment algorithms.Footnote 20 Legal scholars have raised concerns due to the increased risk and “clear and present danger” of biases and discrimination in such algorithms.Footnote 21 Recruitment algorithms could exert exclusionary power by automatically pre-selecting or discarding CVs before they reach human eyes, thereby increasing the risk of gender-based discrimination.Footnote 22 It appears from the case law of the Court of Justice of the European Union (CJEU) that labour law issues such as recruitment and promotion procedures are often the subject of gender equality disputes.Footnote 23 To date, no directives or cases deal with algorithms and gender-based discrimination. Notably due to a lack of preliminary references, the CJEU has so far not interpreted any concepts in relation to algorithmic discrimination. Several cases could shed some light on how the CJEU might decide in the future, should a dispute on algorithmic discrimination arise.Footnote 24 In addition, recruitment algorithms provoke discriminatory behaviour towards women or men which could be partly covered by the future Artificial Intelligence Act. More problematic are algorithms, other than recruitment algorithms, that produce discriminatory outcomes but do not fall under the proposed Artificial Intelligence Act. This category includes algorithms used by public or private operators as a preparatory step,Footnote 25 merely triggering a decision but not representing a discriminatory act as such. Where there is a discriminatory impact on a potential employee, the discrimination would need to be proven in the same way as if the decision had been taken by a human.

A distinction must be made between algorithms impacting gender equality with a direct discriminatory effect and those that have indirect effects. Because the degree and consequences of alleged gender equality violations differ, they need to be addressed differently. Indirect effects should not be underestimated because these might undermine a gender equality policy aimed at tackling and fighting stereotypes and biases. Research and practice have shown that without a good gender equality policy,Footnote 26 discrimination cannot be addressed. In addition, indirect gender effects can also play a role in the formation of direct gender effects and thereby favour discrimination.

The core of gender equality policy is based on national constitutions, the EU Treaties and on national and European legislation. Other policies might help fight biases in the context of algorithmic discrimination and achieve more equality, such as increasing the number of women on boards and in leadership positions.Footnote 27 The proposed Directive COM/2012/0614 could have a real-world impact, so that a search for CEOs would yield more female leaders over time. Equally, in the area of work-life balance, the WLB-DirectiveFootnote 28 could have tremendous effects, because a more equal sharing of caring responsibilities and more take-up of leave by men would shape the perceptions and stereotypes that are reflected in the data used by algorithms and search engines. Other measures, such as positive action or gender mainstreaming, are important in ensuring gender equality as well, as are addressing the gender pay gap, the gender pension gap and violence against women, notably as regards online violence and hate speech.Footnote 29 The more equality there is between women and men, and the more equal a society gets, the more this will be mirrored in the datasets used by algorithms. Such an approach could diminish overt, open and intentional discrimination. It is more difficult to eliminate unintentional and indirect discrimination that might occur unknowingly. However, unintentional discrimination is equally covered under EU law as no subjective element or intent is necessary.

3 How gender equality is affected by indirect and direct effects of algorithms

As with human decisions, gender equality law and policy can be affected by algorithms either indirectly (3.1) or directly (3.2).

3.1 The indirect gender effects of algorithms

Indirect gender effects (IGE) of algorithms can be defined as all effects that shape, influence and perpetuate gender biases and stereotypes by altering datasets that underlie algorithms and that have neither a direct impact nor represent a clear violation of EU gender equality law as such. An example is results of search queries. By promoting, perpetuating or combining different issues, creating new biases, stereotypes, and potentially discriminatory tendencies, search algorithms are problematic. For example, when typing CEO into a search engine, the algorithm shows nearly no pictures of female CEOs, but only male ones.Footnote 30 Even though there is huge inequality between women and men when it comes to leadership positions in companies, pictures shown in the search results are not in line with reality as the number of female board chairs is 7.5% and female CEOs is 7.7% in Europe.Footnote 31 Based on search results, a wrong perception is thus created and reinforced. These stereotypes could become a basis for discriminatory behaviour and enable preparation for discriminatory decisions. For example, in a recruitment procedure for CEOs, online information for hiring procedures might be (unconsciously) influenced and culminate in indirect gender effects. Search queries might feed into the process leading to gendered outcomes. While available datasets and training data for algorithms are part of what risks facilitating gender inequalities, (deep) neural networksFootnote 32 might worsen gender inequalities alongside inaccurate data: one algorithm that was trained to detect human activities in images developed gender biases.Footnote 33 Men tended to be shown doing outside activities such as driving cars, coaching and shooting while women tended to be shown doing shopping, being in the kitchen at the microwave or washing.Footnote 34

Although less visible and apparent, such indirect gender effects produced by using algorithms potentially cause more harm than direct gender effects. This can be explained by their widespread use and by the ease with which biases and stereotypes in datasets spread and are used and re-used by different algorithms accessing common databases or accessing the internet for data and information.Footnote 35 In the end, indirect gender effects of algorithms, if perpetuated and distributed among networks and databases, could ultimately create direct gender effects once an algorithm uses datasets and databases that have been shaped by indirect gender effects.

The Word2vec/Word vectors technique used by algorithms and search engines impacting gender equality is at the heart of the problem described.Footnote 36 Word2vec is

“a technique for natural language processing [that] uses a neural network model to learn word associations from a large corpus of text. Once trained, such a model can detect synonymous words or suggest additional words for a partial sentence. As the name implies, word2vec represents each distinct word with a particular list of numbers called a vector. [which] indicates the level of semantic similarity between the words represented by those vectors.”Footnote 37

Word2vec

“produce[s] word embeddings. These models [..] are trained to reconstruct linguistic contexts of words. [on] a large corpus of text and produces a vector space [..] with each unique word in the corpus being assigned a corresponding vector in the space. Word vectors are positioned in the vector space such that words that share common contexts in the corpus are located close to one another in the space.” Footnote 38

An oft-cited example is that the word man is more often associated with computer programmers and woman more often associated with homemakers.Footnote 39 While Word2vec facilitates the efficiency and user-friendliness of search engines and algorithms, the danger is that biases and stereotypes will not only result in showing certain search results but also be used as a basis for decision-making algorithms. If the Word2vec technique is used by algorithms, it may not only shape and distribute biases and stereotypes among search engines but also find its way into underlying datasets on which algorithms base their decisions and learn. Even though not necessarily crossing over the line into illegal behaviour under current EU gender equality rules, politically this undermines the Treaty goals of gender equalityFootnote 40 and might therefore necessitate a review of current EU rules. Consequently, the problem of the indirect gender effects of algorithms merits the same level of attention as the more obvious problem of direct gender effects.
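How such associations arise in a vector space can be illustrated with a minimal sketch. The three-dimensional vectors below are invented for illustration and do not come from any trained model (real word2vec embeddings are learned from a corpus and have hundreds of dimensions); the function names are likewise assumptions. The sketch only shows how proximity between vectors, measured here by cosine similarity, can encode the kind of association described above.

```python
import math

# Hypothetical toy vectors, invented purely for illustration.
# They are NOT taken from a trained word2vec model.
TOY_VECTORS = {
    "man": [0.9, 0.1, 0.3],
    "woman": [0.1, 0.9, 0.3],
    "programmer": [0.8, 0.2, 0.5],
    "homemaker": [0.2, 0.8, 0.5],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def association_gap(word, vectors=TOY_VECTORS):
    """Similarity of `word` to 'man' minus its similarity to 'woman'.

    A positive value means the word sits closer to 'man' in the space,
    a negative value means it sits closer to 'woman'.
    """
    to_man = cosine_similarity(vectors[word], vectors["man"])
    to_woman = cosine_similarity(vectors[word], vectors["woman"])
    return to_man - to_woman


for occupation in ("programmer", "homemaker"):
    print(occupation, round(association_gap(occupation), 3))
```

In this toy space "programmer" lies closer to "man" and "homemaker" closer to "woman". Any algorithm that ranks or filters by vector proximity would inherit that pattern, which is why biased embeddings can travel from search results into decision-making systems.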

3.2 The direct gender effects of algorithms

Direct gender effects (DGE) of algorithms can be defined as either violations of the gender equality norms or as behaviours of algorithms that are directly measurable and discriminatory. Examples are the exclusion of a female job applicant by an algorithm for the mere reason of being female or the non-granting of a credit by an algorithm based on data associated with women’s (lower) creditworthiness because of statistical association with the group “women”.Footnote 41 Direct gender effects are not only more obvious than indirect gender effects but are also more easily identified and understood as they are roughly identical in terms of outcome to the classical discrimination triggered by a human decision. However, many unsolved issues remain when a direct gender effect is caused by algorithms and causes harm, notably access to evidence and facilitating proof of alleged discrimination in court proceedings, which seem more difficult in the light of the opaqueness of algorithms.Footnote 42 A strict application of the principle of burden of proof and its possible reversal in case of alleged victims of discrimination could facilitate and encourage a better and more effective enforcement of equality rules in the area of algorithmic discrimination.Footnote 43
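What "directly measurable" could mean in practice can be sketched as follows, assuming that a log of an algorithm's decisions is available (which, given the opaqueness discussed above, is often not the case). The log entries, group labels and function name are hypothetical. A difference in selection rates is at most an indication that might support a prima facie case; it does not by itself establish discrimination under EU law.

```python
from collections import Counter


def selection_rates(decisions):
    """Return the share of positive decisions per group.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True if the algorithm shortlisted the applicant.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


# Hypothetical decision log of a recruitment algorithm (invented data):
# 10 female applicants, 2 shortlisted; 10 male applicants, 5 shortlisted.
SAMPLE_LOG = (
    [("female", True)] * 2
    + [("female", False)] * 8
    + [("male", True)] * 5
    + [("male", False)] * 5
)

rates = selection_rates(SAMPLE_LOG)
gap = rates["male"] - rates["female"]
print(rates, round(gap, 2))
```

Such a comparison presupposes access to the decision data, which is precisely the evidentiary difficulty that a shift in the burden of proof is meant to ease.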

Other examples of direct gender effect include algorithms used for automatically granting access to lockers for gyms, for applications to universities or for benefits as well as recruitment decisions based on algorithms. In general, a distinction between algorithms that are used by private operators and public bodies can be useful, given that public bodies often represent a monopoly without alternatives whereas for private operators there is often choice. Therefore, in the case of an algorithm for labour or employment benefits used by the state for example,Footnote 44 the algorithm produces direct results and citizens need to be guaranteed non-discriminatory access.

3.3 Positioning indirect and direct gender effects in the direct/indirect discrimination dichotomy

The distinction between indirect gender effect and direct gender effect is of importance to the question of how to address gender inequalities with law and policy measures.Footnote 45 Often, when it comes to indirect gender effect, the threshold of reprimandable gender-based discriminations is not crossed and therefore gender equality law does not apply. In this case, policies need to be put in place to achieve the gender equality goals laid down in the Treaties. Under EU law, both direct and indirect discrimination is prohibited. However, as direct and indirect gender effects do not operate in the same way they cannot necessarily be addressed both under direct and indirect discrimination regimes. For discrimination to be found under EU law, a concrete discriminatory act or behaviour needs to be identified which is often lacking in the case of indirect gender effects. Indirect gender effects typically mirror, create or reinforce biases and stereotypes, but do not have a direct and concrete or visible impact on a person.Footnote 46 If biases and stereotypes are represented in the search results of a search engine, they might influence a person to take a certain action, discriminate or enable a person to prepare a discriminatory act, for example research on the internet, to prepare a recruitment for a specific job. This could lead a person relying too much on an algorithmFootnote 47 to take a decision based on and influenced by the data revealed in the search results, potentially discriminating. One remedy is to address the gender data gap in order to obtain more representative and diverse datasets that reflect reality.Footnote 48
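Whether a dataset "reflects reality" can be checked, in a simplified way, by comparing the share of a group in the dataset with a reference share. The sketch below uses the 7.7% share of female CEOs in Europe cited in section 3.1 as the reference; the image labels and the function name are hypothetical and serve only as an illustration.

```python
def representation_gap(labels, group, reference_share):
    """Observed share of `group` in `labels` minus `reference_share`.

    A negative result means the group is under-represented in the
    dataset compared with the reference population.
    """
    if not labels:
        raise ValueError("labels must not be empty")
    observed = sum(1 for label in labels if label == group) / len(labels)
    return observed - reference_share


# Hypothetical label set for 200 "CEO" images, 4 of them showing women.
image_labels = ["female"] * 4 + ["male"] * 196

# Reference share: 7.7% female CEOs in Europe (figure cited in section 3.1).
ceo_gap = representation_gap(image_labels, "female", reference_share=0.077)
print(round(ceo_gap, 3))
```

In this invented example women make up 2% of the images although they hold 7.7% of the positions, so the dataset understates even an already unequal reality.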

4 The future legislative framework to address gender-based algorithmic discrimination

In this section, the core elements of the Artificial Intelligence Act regarding gender equality will be outlined (see 4.1 below), together with amendments proposed by the European Parliament, the Committee of the Regions and the European Economic and Social Committee in view of strengthening the gender equality perspective (see 4.2) and some views in the literature (see 4.3).

4.1 The EU proposal of the European Commission – the Artificial Intelligence Act in brief

The Artificial Intelligence Act can be considered as a leap forward for horizontal artificial intelligence regulation in that it seeks to create harmonised rules for AI.Footnote 49 According to the Artificial Intelligence Act proposal an “ ‘artificial intelligence system’ (AI system) means software that is developed [..] for a given set of human-defined objectives, generate outputs such as content, predictions, recommendations, or decisions influencing the environments they interact with”.Footnote 50

In essence, if the Artificial Intelligence Act applies, some artificial intelligence systems are prohibitedFootnote 51 while others are subject to regulation and high-risk systemsFootnote 52 require specific regulatory consideration.Footnote 53 With regard to its scope, the Artificial Intelligence Act applies not only to artificial intelligence systems within the EU (see Art. 2(b) of the Artificial Intelligence Act), but also “[..] irrespective of whether those providers are established within the Union or in a third country” and to “providers and users of AI systems that are located in a third country, where the output produced by the system is used in the Union”.Footnote 54 Any artificial intelligence system that has effects on Union citizens is thus covered by the Artificial Intelligence Act. Following a dynamic approach, future technological advances in artificial intelligence are included by referring to annexes that can be adopted by the Commission without following the ordinary legislative procedure.Footnote 55

Regarding gender equality and non-discrimination, the Artificial Intelligence Act complements the existing legislative frameworkFootnote 56 but includes gender equality and non-discrimination to the extent of prohibiting certain artificial intelligence applications and defining high-risk artificial intelligence systems that require specific regulation.Footnote 57 The Artificial Intelligence Act also addresses the violation of fundamental rightsFootnote 58 which include the principle of non-discrimination. Due to the horizontal nature of the Artificial Intelligence Act, discrimination and gender equality are not specifically addressed but referenced in the non-operative part.Footnote 59 Article 6 (2) of the Artificial Intelligence Act, which refers to Annex III Nr. 4 regarding recruitment systems, is potentially relevant for gender-based algorithmic discrimination: “throughout the recruitment process and in the evaluation, promotion, or retention of persons in work-related contractual relationships, such systems may perpetuate historical patterns of discrimination, for example against women [..]”.Footnote 60 Recruitment software would therefore be considered as an artificial intelligence application that falls within the high-risk category of Article 6. In the case of this category, Article 8 requires respect for the specific requirements listed in Articles 9–14. This includes inter alia a risk management system,Footnote 61 compliance with data and data governance principles in terms of training, validation and testing of data sets,Footnote 62 technical documentation,Footnote 63 record-keeping,Footnote 64 transparency and provision of information to usersFootnote 65 and, finally, human oversight.Footnote 66 Those requirements could ensure sufficient regulation of algorithms and enable competent authorities to verify conformity with the Artificial Intelligence Act. Human oversight (see Art. 14 of the Artificial Intelligence Act) is a key requirement that has also been addressed in the GDPRFootnote 67 and has been discussed in the literature.Footnote 68 The obligations of providers (and users) of high-risk artificial intelligence systemsFootnote 69 include establishing a quality management system,Footnote 70 drawing up the technical documentation of the high-risk artificial intelligence system,Footnote 71 keeping the logs automatically generated by the high-risk AI systems,Footnote 72 ensuring that the relevant conformity assessment procedureFootnote 73 is carried out prior to market access, complying with registration obligations,Footnote 74 affixing the CE marking to their high-risk AI systems so as to indicate conformity with the Artificial Intelligence ActFootnote 75 and, upon the request of a national competent authority, demonstrating the conformity of the high-risk artificial intelligence system with these requirements.Footnote 76 Regarding institutional set-up and enforcement, the draft Artificial Intelligence Act foresees sanctions for violations of the Artificial Intelligence ActFootnote 77 and the creation of a European Artificial Intelligence Board.Footnote 78 Including potentially discriminatory recruitment systems as a high-risk category would address some of the algorithmic discrimination occurring in disputes concerning access to the labour market, while other relevant activities would still represent a challenge currently regulated only by Directive 2006/54/EC.Footnote 79 In the light of this, the further evolution of the Artificial Intelligence Act proposal will show whether the need to review or to propose separate legislation focussing specifically on gender equality and non-discrimination will remain on the agenda of the EU.

4.2 The Artificial Intelligence Act proposal in the European Economic and Social Committee, the Committee of the Regions and the European Parliament

The adoption of the Artificial Intelligence Act falls under the ordinary legislative procedure,Footnote 80 and is considered by the Parliament, the Council and the Commission as a common legislative priority for 2021, and one for which they want to ensure substantial progress.Footnote 81 Several national parliaments (those of Czechia, Germany, Portugal and Poland) have issued opinions among which only that of the German Bundesrat refers to gender equality and highlights the “Kohärenz mit der EU-Grundrechte-Charta und dem geltenden Sekundärrecht der Union zur Nichtdiskriminierung und zur Gleichstellung der Geschlechter gewährleistet”.Footnote 82 The AIDA committee of the European Parliament is currently preparing a draft report on artificial intelligence.Footnote 83 The main suggestions of Parliament’s draft report, which mentions gender and discrimination only two times, concern the issue of diversity and more female representation among coders and developers and the issue of biases and discrimination due to incomplete or non-diverse datasets. The amendments prepared by Parliament refer more frequently to gender and/or discrimination: amendments 1-281 (four references),Footnote 84 amendments 282-555 (four references),Footnote 85 amendments 556-825 (eight references),Footnote 86 amendments 826-1108Footnote 87 and amendments 1109-1384 (three references).Footnote 88

In this line the parallel legislative proposal Digital Services Act (DSA)Footnote 89 also tries to mitigate some of the risks for women:

“Specific groups (..) may be vulnerable or disadvantaged in their use of online services because of their gender (..) They can be disproportionately affected by restrictions [..] following from (unconscious or conscious) biases potentially embedded in the notification systems by users and third parties, as well as replicated in automated content moderation tools used by platforms.”

The Parliament adopted its amendments to the Digital Services Act on 20 January 2022 and included the right to gender equality and non-discrimination in recitals 57 and 91 and Article 26(1)(b) and the principle of equality between women and men in recital 3.Footnote 90

Both the European Economic and Social Committee (EESC) and the Committee of the Regions (CoR) have proposed concrete amendments to the Artificial Intelligence Act in the area of gender equality in their non-binding opinions.

The EESC adopted an opinion in December 2021, highlighting that

“the ‘list-based’ approach for high-risk AI runs the risk of normalising and mainstreaming a number of AI systems and uses that are still heavily criticised. The EESC warns that compliance with the requirements set for medium- and high-risk AI does not necessarily mitigate the risks of harm to [..] fundamental rights for all high-risk AI. [..] the requirements of (i) human agency, (ii) privacy, (iii) diversity, non-discrimination and fairness, (iv) explainability and [..] of the Ethics guidelines for trustworthy AI should be added.”Footnote 91

The Committee of the Regions adopted some amendments, proposing that “the Board should be gender-balanced”, claiming that such gender balance is a precondition for diversity in issuing opinions and drafting guidelines.Footnote 92 Furthermore, it proposed to include as a recital “AI system providers shall refrain from any measure promoting unjustified discrimination based on sex, origin, religion or belief, disability, age, sexual orientation, or discrimination on any other grounds, in their quality management system”, reasoning that “unlawful discrimination originates in human action. AI system providers should refrain from any measures in their quality system that could promote discrimination.” Another suggestion was to introduce into Article 17(1) “measures to prevent unjustified discrimination based on sex, (..)”. Both the European Economic and Social Committee’s and the Committee of the Regions’ proposals would lead to the incorporation of a gender equality perspective into the Artificial Intelligence Act.

4.3 Critical and nuanced views in the academic literature

While the Artificial Intelligence Act has been generally welcomed, with 1216 contributions being received during the open public consultation by the Commission,Footnote 93 133 contributions on the roadmap and 304 feedback contributions following the adoption of the draft regulation, some concerns and alternative ideas have been voiced. Whereas some assume that the gender equality framework provides “useful yardsticks” highlighting that there are some systemic problems complicating the way EU law deals with algorithmic discrimination,Footnote 94 others regard EU law as in principle “well-equipped” all the while highlighting “areas for improvement”.Footnote 95 Others have called for a new regulatory regime, such as the Artificial Intelligence Act currently going through the process of legislative adoption in the EU.Footnote 96 Also identifying room for the improvement of EU anti-discrimination law so as to address algorithmic discrimination, Hacker suggests an “integrated vision of anti-discrimination and data protection law”.Footnote 97 Finally, others are more cautious on the regulatory front when it comes to artificial intelligence and propose a “purposive interpretation and instrumental application of EU non-discrimination law”.Footnote 98 Other authors identify shortcomings in the Artificial Intelligence Act, and propose moving away from the notion of individual harm and following a more holistic approach focusing as well on collective and societal harm.Footnote 99

While it is true that law in general, and European gender equality law in particular, can cope to some extent with newly arising technologies that produce discriminatory outcomes, the author has advocated for regulation as conditio sine qua non and pointed to the need to find inspiration for EU artificial intelligence regulation in other international instruments currently under development that also partly address gender equality and non-discrimination issues.Footnote 100

5 Regulatory and policy recommendations

5.1 Regulatory suggestions

This section sketches out what can be done concretely in terms of policy measures and legislative action.Footnote 101 Legislation is fundamental to ensure gender equality and the Artificial Intelligence Act makes a good start by posing for the first time a general principle that some areas of artificial intelligence systems should be regulated. First, added value can be achieved by ensuring a good level of protection of equality between women and men in all fields by adopting adequate legislationFootnote 102 or by proposing legislation such as that on pay transparency,Footnote 103 women on boards,Footnote 104 violence against womenFootnote 105 and by ensuring its effective enforcement. Overall, an increase in gender equality in the real world will be reflected in datasets and thereby reduce potential algorithmic discriminations.

Second, on this basis, and complementary to the rules of the Artificial Intelligence Act that would apply to situations involving gender-based discrimination caused by recruitment algorithms, more technical or sector-specific regulations could be explored, such as specific requirements to ensure the respect of gender equality norms when it comes to algorithmic discrimination. A review of the legislative gender equality acquis (which is required periodically by the better regulation guidelines of the EUFootnote 106) could be a good opportunity to incorporate and define algorithmic discrimination more clearly in EU gender equality law, as well as to detail rules on the (shifting of the) burden of proof in algorithmic discrimination cases.

5.2 Accompanying policy measures

In order to achieve gender equality, alongside legislative measures,Footnote 107 self-standing or accompanying policy measures could be taken to address the issues raised above.Footnote 108 Policy and awareness-raising measures should include designers and developers of algorithms, as well as general training on equality issues. Targeted training on gender equality is needed for developers when designing and coding algorithms. This does not avoid all biases, and bad design and biases would still be found in algorithms, but they would be less prone to biases, stereotypes and discriminatory behaviour.

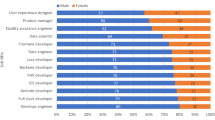

If more women were represented among IT developers,Footnote 109 this could increase the chances of more diverse and equal outcomes from algorithms. Navigating between fully-fledged regulations and policy measures, aside from encouraging training, one could also prescribe mandatory measures at the design stage/coding stage. Good practice principles and mandatory training on gender equality or mandatory reporting could bring change. It could also be left to companies to decide how to achieve objectives which could be fixed in the law. Concrete outcomes should not be fixed in law as it is probably impossible to create an algorithm that never will discriminate. The aim, therefore, is not to eliminate but to reduce the risk of gender-based discrimination.

Ensuring that humans remain in control as highlighted in Article 14 of the Artificial Intelligence Act, could help identify and mitigate gender biases in individual datasets.Footnote 110 For this to be effective, training and awareness-raising for developers/programmers are one way of mitigating the dangers of gender biases and stereotypes when designing algorithms in general and with regard to the Word2vec technique in particular. Regardless of whether designed as a mandatory or an optional requirement, this is relatively easy to implement and a way to reduce gender biases and stereotypes. A label certifying that gender and diversity knowledge is available in a company could be another way to incentivise IT companies to gain the relevant knowledge. Transparency could also increase compliance, by publishing on a company or general website, whether a specific algorithm has been built by a company which has the relevant gender and diversity knowledge.

More generally, increasing diversity and female participation in coding and artificial intelligence jobsFootnote 111 is vital, a need also highlighted in the recent own-initiative (“INI”) report of the Parliament’s AIDA committee.Footnote 112 Amendment 673 to the report also supports skills and training in this regard, in that it “highlights the importance of including basic training in digital skills and AI in national education systems; [and e]mphasizes the importance of empowering and motivating girls for the subsequent study of STEM careers and eradicating the gender gap in this area”.Footnote 113 Firms are increasingly aware of the need for more equality and diversity in the IT and artificial intelligence world, as illustrated by the recent founding in California of a consulting firm to address the issue of diversity and equality in the tech world.Footnote 114

5.3 Conclusion

Approaching the problem of gender-based algorithmic discrimination through the lens of indirect and direct gender impacts enables researchers and policy-makers not only to perceive the depth of the problem but also to identify the need for legal and policy measures. By looking at the concrete mechanisms that underlie the functioning of algorithms, this approach sheds light on why indirect gender effects, which might at first seem irrelevant from a legal gender equality enforcement perspective, are key to understanding and solving the problem of gender-based algorithmic discrimination. The role played by algorithms and search engines in shaping, reinforcing and perpetuating gender stereotypes and biases has been highlighted and should be taken into account in legislative and policy actions. This also strengthens the argument for having not only a robust legislative framework but also accompanying non-legislative measures that reinforce and complement EU law in order to support and achieve the entirety of its aims.

Notes

Algorithms are “sufficiently detailed and systematic instructions of actions to solve a mathematical problem so that (..) the computer computes the correct output for each correct set of inputs” (translation by author), Zweig [56], p. 313.

See Abiteboul and Dowek [1].

See European Commission Algorithmic discrimination in Europe [16].

On hidden aspects of discrimination, see Broussard [6], p. 75.

See Rechtbank Den Haag [46].

For EU law, the lack of references from national courts (preliminary ruling procedure, Art. 267 TFEU) is most likely the reason underlying the absence of case law involving the interpretation of algorithmic discrimination with regard to Directive 2006/54/EC of the European Parliament and of the Council of 5 July 2006 on the implementation of the principle of equal opportunities and equal treatment of men and women in matters of employment and occupation (recast) [2006] OJ L 204, 26.7.2006, p. 23–36 and Council Directive 2004/113/EC of 13 December 2004 implementing the principle of equal treatment between men and women in the access to and supply of goods and services [2004] OJ L 373, 21.12.2004, p. 37–43.

The judgment highlighted two essential requirements for the use of algorithms: the knowability or understandability of the decision and the possibility of full judicial review, Consiglio di Stato [9], notably paras. 8.2, 8.3, 8.4 and 9. In another judgment, the court tried to define algorithms, Consiglio di Stato [10], para. 3: “una sequenza finita di istruzioni”.

For a more optimistic view of the potential of algorithms to reduce biases and discrimination, see Kleinberg et al. [32].

On the relationship between generalisation and stereotypes in law, see Schauer [49].

See Mitchell [39], p. 124.

See Jean (2019) [29], p. 92.

See Wooldridge [52], p. 291.

In this case, direct discrimination would most likely be found. See Articles 2(1)(a) and 14(1)(a) of Directive 2006/54/EC.

See for an overview of recruitment and gender, Kraft-Buchman et al. [34].

See Fry [25].

Proposal for a Regulation of the European Parliament and of the Council laying down harmonised rules on artificial intelligence (Artificial Intelligence Act) and amending certain Union legislative acts, COM/2021/206 final.

See Qu [45].

Article 6 (2) jointly with Annex III Nr. 4 AIA.

See Pasquale (2020), [43], p. 119.

US data shows that 72% of CVs are apparently automatically discarded by recruitment algorithms, see Criado Perez (2019) [11] p. 166.

Currently 31 cases are listed that include a reference to Art. 14 of Directive 2006/54, see https://curia.europa.eu/juris/liste.jsf?oqp=&for=&mat=or&jge=&td=%3BALL&jur=C%2CT%2CF&page=1&dates=&pcs=Oor&lg=&pro=&nat=or&cit=L%252CC%252CCJ%252CR%252C2008E%252C%252C2006%252C54%252C%252C14%252C%252C%252C%252C%252Ctrue%252Cfalse%252Cfalse&language=en&avg=&cid=1781563.

Notably Cases C-109/88 Danfoss, ECLI:EU:C:1989:383; C-104/10 Kelly, ECLI:EU:C:2011:506; C-415/10 Meister, ECLI:EU:C:2012:217 and C-274/18 Shuch-Ghannadan, ECLI:EU:C:2019:828.

C-460/06 Nadine Paquay v Société d’architectes Hoet + Minne SPRL ECLI:EU:C:2007:601. See also Article 12 and Recital 41 of Directive 2019/1158, which extends protection from dismissal to “any preparatory steps for a possible dismissal”.

Communication from the Commission to the European Parliament, the Council, the European Economic and Social Committee and the Committee of the Regions, A Union of Equality: Gender Equality Strategy 2020-2025, COM/2020/152 final, [19], p. 2.

See Proposal for a Directive of the European Parliament and of the Council on improving the gender balance among non-executive directors of companies listed on stock exchanges and related measures, COM/2012/0614 final. A general approach was adopted in Council on 14.3.2022, see https://www.consilium.europa.eu/en/press/press-releases/2022/03/14/les-etats-membres-arretent-leur-position-sur-une-directive-europeenne-visant-a-renforcer-l-egalite-entre-les-femmes-et-les-hommes-dans-les-conseils-d-administration/. It has been reported that compromise seems possible after a decade of deadlock: see De La Baume [13].

Directive (EU) 2019/1158 of the European Parliament and of the Council of 20 June 2019 on work-life balance for parents and carers and repealing Council Directive 2010/18/EU [2019], OJ L 188, 12.7.2019, p. 79–93; See also Oliveira et al. [40].

See the recent legislative proposal of 8.3.2022 for a Directive of the European Parliament and of the Council on combating violence against women and domestic violence, COM(2022) 105 final.

The author checked these examples several times, most recently on 10.10.2021 on www.google.com and www.duckduckgo.com.

See European Commission, Gender Equality Strategy 2020-2025 [19].

See Kelleher [31], p. 252: “A deep neural network is a network that has multiple hidden layers of units”.

See Gigerenzer [26], p. 203.

Zhao et al. [55], para. 6.1.

See the draft report 2022 AIDA Parliament [23], para. 69: This “raises the question of whether certain biases can be resolved by using more diverse datasets, given the structural biases present in our society; specifies in this regard that algorithms learn to be as discriminatory as the society they observe and then suggest decisions that are inherently discriminatory, which again contributes to exacerbating discrimination within society; concludes that there is therefore no such thing as a completely impartial and objective algorithm.”

Wikipedia, https://en.wikipedia.org/wiki/Word2vec; see Alpaydin [3], p. 133-135.

Wikipedia, https://en.wikipedia.org/wiki/Word2vec; see Russell and Norvig [48], p. 908, 926 and 929.

Bolukbasi et al. [4].

Art. 2 and 3(3) of the Treaty on European Union (TEU), Art. 21 of the Charter of Fundamental Rights and Article 8 TFEU (concerning eliminating inequalities and promoting equality between men and women in all Union activities - so called gender-mainstreaming).

See for example Equinet [14] which highlights insufficient guarantees in the AIA proposal so that “AI-induced harm on the fundamental right to non-discrimination can be effectively identified, prevented or remedied”.

See Directive 2019/1158 (Art. 12(3)) or the Pay Transparency proposal COM(2021) 93 final (Art. 16(1)) providing:

“(..) when workers who consider themselves wronged (..) establish before a court or other competent authority, facts from which it may be presumed that there has been direct or indirect discrimination, it shall be for the defendant to prove that there has been no direct or indirect discrimination in relation to pay” and (3): “The claimant shall benefit from any doubt that might remain”.

See for example Austria’s AMS, Fröhlich and Spiecker [24]; https://www.ams.at/regionen/oberoesterreich/news/2019/01/ams-oberoesterreich-arbeitsprogramm-2019.

Brière and Dony [5], p. 297.

On biases, note the EP AIDA draft report 2022 [23], at para. 68:

“Stresses that bias in AI systems often occurs due to a lack of diverse and high-quality training data, for instance where data sets are used which do not sufficiently cover discriminated groups, or where the task definition or requirement setting themselves were biased; notes that bias can also arise due to a limited volume of training data, which can result from overly strict data protection provisions, or where a biased AI developer has compromised the algorithm; points out that some biases in the form of reasoned differentiation are, on the other hand, also intentionally created in order to improve the AI’s learning performance under certain circumstances.”

In general, it is considered that humans are better than algorithms “at work that involves unusual combinations of skills (..)” and recruitment is certainly an activity that requires a holistic assessment of future employees. See Roose [47], p. 71.

The EU Data Governance Act (Proposal for a Regulation of the European Parliament and of the Council on European data governance (Data Governance Act), COM/2020/767 final) does not directly address this issue but could help encourage a discussion in the direction of more diverse data and reduction of the gender data gap. See also Criado Perez (2020) [12].

Art. 1 AIA.

Art. 3(1).

Art. 5.

Art. 6.

Art. 8-14.

Art. 2.

Annex III lists AI applications that fall under the high-risk category.

See Explanatory Memorandum, 1.2.

Art. 6, Art. 6(2) + Annex III.

See Art. 7(1)(b) “usage in areas of Annex 1” and the “risk of adverse impact on fundamental rights”.

“(Non)-discrimination” (16 references), “Gender Equality” (1 reference) and “women” (2 references).

Recital 36.

See Art. 9 and Recitals 42 and 46: “A risk management system shall be established, implemented, documented and maintained in relation to high-risk AI systems.” (per Art. 9(1)) and “shall consist of a continuous iterative process run throughout the entire lifecycle of a high-risk AI system, requiring regular systematic updating” (per Art. 9(2)) which comprises the following steps: “(a) identification and analysis of the known and foreseeable risks associated with each high-risk AI system; (b) estimation and evaluation of the risks that may emerge when the high-risk AI system is used in accordance with its intended purpose and under conditions of reasonably foreseeable misuse; (c) evaluation of other possibly arising risks”.

Art. 10.

“High data quality is essential for the performance of many AI systems, especially when techniques involving the training of models are used, with a view to ensure that the high-risk AI system performs as intended and safely and it does not become the source of discrimination prohibited by Union law. High quality training, validation and testing data sets require the implementation of appropriate data governance and management practices. Training, validation and testing data sets should be sufficiently relevant, representative and free of errors and complete in view of the intended purpose of the system. They should also have the appropriate statistical properties, including as regards the persons or groups of persons on which the high-risk AI system is intended to be used. (..). In order to protect the right of others from the discrimination that might result from the bias in AI systems, the providers should be able to process also special categories of personal data, as a matter of substantial public interest, in order to ensure the bias monitoring, detection and correction in relation to high-risk AI systems” (See Recital 44, highlighted by the author.) See also the Proposal for a Regulation on European data governance (Data Governance Act) COM/2020/767.

Art. 11: “technical documentation shall be drawn up in such a way to demonstrate that the high-risk AI system complies with the requirements” (Art. 11(1)).

Art. 12: “High-risk AI systems shall be designed and developed with capabilities enabling the automatic recording of events (‘logs’) while the high-risk AI systems is operating”.

Art. 13: “their operation is sufficiently transparent to enable users to interpret the system’s output and use it appropriately”.

Art. 14(1): “High-risk AI systems shall be designed and developed in such a way, including with appropriate human-machine interface tools, that they can be effectively overseen by natural persons during the period in which the AI system is in use.” Art. 14(2) recalls that the reason for human oversight is the risk of fundamental rights violations.

One might argue that every applicant needs to be aware of whether and to what extent an algorithm is involved in the selection procedure. See Art. 22(1), (2)(c) and (3) GDPR.

Art. 16 AIA.

Art. 17. See also European Law Institute [20].

Art. 18.

Art. 20.

Art. 19.

Art. 51.

Art. 49.

Art. 16 (i), Art. 19, Art. 27 (1), Art. 49 and Recital 67.

Art. 71.

Art. 56 (2) specifies that

“The Board shall provide advice and assistance to the Commission in order to: (a) contribute to the effective cooperation of the national supervisory authorities and the Commission with regard to matters covered by this Regulation; (b) coordinate and contribute to guidance and analysis by the Commission and the national supervisory authorities and other competent authorities on emerging issues across the internal market with regard to matters covered by this Regulation; (c) assist the national supervisory authorities and the Commission in ensuring the consistent application of this Regulation.”

See also Art. 59 of the Artificial Intelligence Act.

Directive 2006/54/EC.

2021/0106(COD), Artificial Intelligence Act, see https://oeil.secure.europarl.europa.eu/oeil/popups/ficheprocedure.do?reference=2021/0106(COD)&l=en. A joint committee of IMCO and LIBE (rapporteur Brando Benifei (S&D, Italy)) is responsible for the file, and several committees are associated for an opinion.

See Bundesrat [8], para. 2.

See the AI report of AIDA Parliament [23]. In addition, a total of 1384 amendments to the draft report have been proposed.

AIDA Parliament Amendments [21], Amendment (“A”) 88: “Underlines the gender gap across all digital technology domains, which has a concrete impact on the development of AI, reproducing and enhancing stereotypes and bias, since it has predominantly been designed by males”; A 124: “Highlights that significant work still needs to be carried out in order to include certain groups, such as women and minority communities, in this transition; warns that the fact that only 22% of AI professionals globally are female has the potential to deepen already existing inequalities such as the gender pay gap as well as to lead to a devaluation of problems that affect mostly women, such as online gender-based violence”.

AIDA Parliament Amendments [21], A 377: “Expects the EU to shape the AI revolution globally by promoting its values such as respect for fundamental rights, democracy, non-discrimination and inclusivity”.

AIDA Parliament Amendments [21], A 476: “addressing the gender gap and the lack of diversity among developers of AI systems which is another crucial aspect in increasing EU’s competitiveness”.

AIDA Parliament Amendments [21], A 630: “non-discriminatory algorithms are those which prevent gender, racial and other social biases in the selection and treatment of different groups and do not reinforce inequalities and stereotypes” and A 669: “calls on the Commission and the Member States to align the measures shaping the EUs digital transition with the Union’s goals on gender equality; calls on the Commission and the Member States to provide appropriate funding to programmes aimed at attracting women to study and work in ICT and STEM, to develop strategies aimed at increasing women’s digital inclusion, in fields relating to STEM, AI and the research and innovation sector, and to adopt a multi-level approach to address the gender gap at all levels of education and employment in the digital sector”.

AIDA Parliament Amendments [21], A 1146: “Highlights that in order to address bias in AI, there is a need to promote diversity in the teams that develop, implement and assess the risks of specific AI applications; stresses the need for gender disaggregated data to be used to evaluate AI algorithms and for gender analysis to be part of all risk assessments”.

Proposal for a Regulation of the European Parliament and of the Council on a Single Market for Digital Services (Digital Services Act) and amending Directive 2000/31/EC, COM/2020/825 final.

Amendments adopted by the European Parliament on 20 January 2022 on the proposal for a regulation of the European Parliament and of the Council on a Single Market For Digital Services (Digital Services Act) and amending Directive 2000/31/EC (COM(2020)0825 – C9-0418/2020 – 2020/0361(COD)), available at: https://www.europarl.europa.eu/doceo/document/TA-9-2022-0014_EN.pdf.

See EESC [42], pp. 61–66.

See CoR [41], pp. 60–85.

Xenidis et al. [53], p. 181.

Allen et al. [2], p. 598.

Lütz (2022) [35].

Hacker [28], pp. 1143-1186.

Xenidis [54], p. 757.

Smuha [50], pp. 4-5 and 25-26.

For solutions other than legislative action, see Jean (2021) [30], p. 125.

In this regard, the legislation recently adopted (WLB-Directive) and the legislation recently proposed are steps in the right direction, each helping to reduce the potential biases and stereotypes in the data that underlie algorithmic discrimination.

Proposal for a Directive of the European Parliament and of the Council to strengthen the application of the principle of equal pay for equal work or work of equal value between men and women through pay transparency and enforcement mechanisms, COM/2021/93 final.

Proposal for a Directive of the European Parliament and of the Council on improving the gender balance among non-executive directors of companies listed on stock exchanges and related measures, COM/2012/0614 final.

European Commission Better Regulation [17].

Notably using Arts. 19, 153 and 157 (4) TFEU as legal bases.

The importance, opportunities, and risks of AI for gender equality are also highlighted in European Commission, Gender Equality Strategy 2020-2025 [19], p. 6.

Only 22% of AI programmers are women. See Gender Equality Strategy 2020-2025 [19].

Mitchell [39], p. 126.

See Wooldridge [52], p. 290, who highlights that the kick-off event for AI at Dartmouth saw no representation by any woman, a situation that he considers unthinkable today despite the fact that women are still largely underrepresented in AI-related jobs.

AIDA Parliament [23], para. 75: “is concerned about the extensive gender gap in this area, with only one in six ICT specialists and one in three (..) STEM graduates being female”. A 670 proposes to add “stresses that this gap inevitably results in biased algorithms”. See https://www.europarl.europa.eu/meetdocs/2014_2019/plmrep/COMMITTEES/AIDA/AM/2022/01-13/1245946EN.pdf; European Commission 2030 Digital Compass [18], p. 4-5.

A 777 equally supports this:

“recalls therefore the need to address the gender gap in STEM in which women are still under-represented; calls on the Commission and the Member States to provide appropriate funding to programmes aimed at attracting women to study and work in STEM, to develop strategies aimed at increasing women’s digital inclusion, in fields relating to STEM, AI and the research and innovation sector, and to adopt a multi-level approach to address the gender gap at all levels of education and employment in the digital sector”.

AIDA Parliament Amendments [21].

See Lighthouse3 founded by Mia Shah-Dand, https://lighthouse3.com/about-us/. The EU is also renewing its Women TechEU program, as to which see https://eic.ec.europa.eu/eic-funding-opportunities/european-innovation-ecosystems/women-techeu_en.

References

Abiteboul, S., Dowek, G.: The Age of Algorithms. Cambridge University Press, Cambridge (2020)

Allen, R., Masters, D.: Artificial intelligence: the right to protection from discrimination caused by algorithms, machine learning and automated decision-making. ERA Forum 20, 585–598 (2020)

Alpaydin, E.: Machine Learning. MIT Press, Boston (2021)

Bolukbasi, T., Chang, K.-W., Zou, J., Saligrama, V., Kalai, A.: Man is to computer programmer as woman is to homemaker? Debiasing word embeddings (2016). arXiv:1607.06520

Brière, C., Dony, M.: Droit de l’Union européenne. Editions de l’Université de Bruxelles, Bruxelles (2022)

Broussard, M.: Artificial Unintelligence: How Computers Misunderstand the World. MIT Press, Boston (2018)

Buijsman, S., Jänicke, B.: Ada und die Algorithmen: wahre Geschichten aus der Welt der künstlichen Intelligenz. C.H. Beck, München (2021)

Bundesrat Drucksache, 488/21 (2021). Available at: https://www.europarl.europa.eu/RegData/docs_autres_institutions/parlements_nationaux/com/2021/0206/DE_BUNDESRAT_CONT1-COM(2021)0206_DE.pdf

Consiglio di Stato, sentenza n. 2270 del 8 April 2019. Available at: https://www.lavorodirittieuropa.it/images/Raiti_Consiglio_di_Stato_2270-2019_1.pdf

Consiglio di Stato, sentenza n. 7891 del 4-25 novembre 2021. Available at: https://www.eius.it/giurisprudenza/2021/655

Criado Perez, C.: Invisible Women: Exposing Data Bias in a World Designed for Men. Random House, London (2019)

Criado Perez, C.: We need to close the gender data gap by including women in our algorithms. Time Magazine 16.1.2020, (2020). Available at: https://time.com/collection-post/5764698/gender-data-gap/

De La Baume, M.: Germany to back EU’s women quota plan after a decade (2022). Available at: https://www.politico.eu/article/germany-will-adopt-women-on-board-directive-eu-proposal-after-10-years-of-deadlock/

Equinet, Contribution to the public consultation of the AIA (2021). Available at: https://ec.europa.eu/info/law/better-regulation/have-your-say/initiatives/12527-Artificial-intelligence-ethical-and-legal-requirements/details/F2665651_en

European Commission, Advisory Committee on Equal Opportunities for Women and Men, Opinion on Artificial Intelligence – opportunities and challenges for gender equality. Available at https://ec.europa.eu/info/sites/default/files/aid_development_cooperation_fundamental_rights/opinion_artificial_intelligence_gender_equality_2020_en.pdf

European Commission, Algorithmic discrimination in Europe - challenges and opportunities for gender equality and non-discrimination law (2021). Available at: https://doi.org/10.2838/544956

European Commission, Better Regulation (2022). Available at: https://ec.europa.eu/info/law/law-making-process/planning-and-proposing-law/better-regulation-why-and-how_de#internationale-zusammenarbeit-in-regulierungsfragen

European Commission, Communication from the Commission to the European Parliament, the Council, the European Economics and Social Committee and the Committee of the Regions, 2030 Digital Compass: the European way for the Digital Decade, COM(2021) 11 final. Available at: https://ec.europa.eu/info/sites/default/files/communication-digital-compass-2030_en.pdf

European Commission, Striving for a Union of Equality, the Gender Equality Strategy 2020-2025 (2020). Available at: https://ec.europa.eu/info/sites/default/files/aid_development_cooperation_fundamental_rights/gender_equality_strategy_factsheet_en.pdf

European Law Institute, Artificial Intelligence (AI) and Public Administration – Developing Impact Assessments and Public Participation for Digital Democracy (2022). Available at: https://www.europeanlawinstitute.eu/projects-publications/completed-projects-old/ai-and-public-administration/

European Parliament, Amendments to the draft report on artificial intelligence in a digital age (2020/2266(INI)), Special Committee on Artificial Intelligence in a Digital Age. Available at: https://emeeting.europarl.europa.eu/emeeting/committee/en/agenda/202201/AIDA; https://www.europarl.europa.eu/meetdocs/2014_2019/plmrep/COMMITTEES/AIDA/AM/2022/01-13/1245944EN.pdf; https://www.europarl.europa.eu/meetdocs/2014_2019/plmrep/COMMITTEES/AIDA/AM/2022/01-13/1245945EN.pdf; https://www.europarl.europa.eu/meetdocs/2014_2019/plmrep/COMMITTEES/AIDA/AM/2022/01-13/1245946EN.pdf; https://www.europarl.europa.eu/meetdocs/2014_2019/plmrep/COMMITTEES/AIDA/AM/2022/01-13/1245947EN.pdf; https://www.europarl.europa.eu/meetdocs/2014_2019/plmrep/COMMITTEES/AIDA/AM/2022/01-13/1245948EN.pdf

European Parliament, Committee on Women’s Rights and Gender Equality, on shaping the digital future of Europe: removing barriers to the functioning of the digital single market and improving the use of AI for European consumers (2020/2216(INI))

European Parliament, draft report on artificial intelligence in a digital age (2020/2266(INI)), Special Committee on Artificial Intelligence in a Digital Age. Available at: https://www.europarl.europa.eu/meetdocs/2014_2019/plmrep/COMMITTEES/AIDA/PR/2022/01-13/1224166EN.pdf

Fröhlich, W., Spiecker, I.: Können Algorithmen diskriminieren? Verfassungsblog, 26.12.2018. Available at: https://verfassungsblog.de/koennen-algorithmen-diskriminieren/#primary_menu_sandwich

Fry, H.: Hello World: How to Be Human in the Age of the Machine. Random House, London (2018)

Gigerenzer, G.: Klick: Wie wir in einer digitalen Welt die Kontrolle behalten und die richtigen Entscheidungen treffen - Vom Autor des Bestsellers »Bauchentscheidungen«. C. Bertelsmann Verlag, München (2021)

Gufran, A.: The Tech Industry’s Sexism, Racism Is Making Artificial Intelligence Less Intelligent (2019). Available at: https://theswaddle.com/inherent-bias-in-artifical-intelligence-perpetuate-racism-sexism-in-tech/

Hacker, P.: Teaching fairness to artificial intelligence: existing and novel strategies against algorithmic discrimination under EU law. Common Mark. Law Rev. 55, 1143–1186 (2018). Available at SSRN: https://ssrn.com/abstract=3164973

Jean, A.: De l’autre côté de la Machine - Voyage d’une scientifique au pays des algorithmes. Observatoire (Editions de l’), Paris (2019)

Jean, A.: Les algorithmes font-ils la loi? Observatoire (Editions de l’), Paris (2021)

Kelleher, J.D.: Deep Learning. MIT Press, Boston (2019)

Kleinberg, J., Ludwig, J., Mullainathan, S., Sunstein, C.R.: Algorithms as discrimination detectors. Proc. Natl. Acad. Sci. 117(48), 30096–30100 (2020)

Knight, W.: The Apple Card Didn’t ‘See’ Gender - and That’s the Problem, the way its algorithm determines credit lines makes the risk of bias more acute, Wired 19.11.2019 (2019). Available at: https://www.wired.com/story/the-apple-card-didnt-see-genderand-thats-the-problem/

Kraft-Buchman, C., Arian, R.: Artificial Intelligence Recruitment: Digital Dream or Dystopia of Bias? (2021). Available at: www.womenatthetable.net

Lütz, F.: Discrimination by correlation. Towards eliminating algorithmic biases and achieving gender equality. In: Quadflieg, S., Neuburg, K., Nestler, S. (eds.) (Dis)Obedience in Digital Societies. Perspectives on the Power of Algorithms and Data, pp. 250–294. Transcript Verlag, Bielefeld (2022)

Lütz, F.: How the ‘Brussels effect’ could shape the future regulation of algorithmic discrimination. Duodecim Astra 1, 142–163 (2021)

Lütz, F.: Towards a rights-based approach to algorithmic discrimination – Taking the ‘Jekyll and Hide’ nature of AI seriously as regulatory object and detection tool, Paper presented at the expert conference on “Artificial Intelligence & Human Rights: Friends or Foe?”, 28 October 2021, Erasmus University Rotterdam (Forthcoming in Conference Proceedings, August 2022)

Mikolov, T., Chen, K., Corrado, G., Dean, J.: Efficient estimation of word representations in vector space (2013). arXiv:1301.3781

Mitchell, M.: Artificial Intelligence: A Guide for Thinking Humans. Penguin UK, London (2019)

Oliveira, A., De La Corte, M., Lütz, F.: The new Directive on work-life balance: towards a new paradigm of family care and equality? Eur. Law Rev. 45(3), 295–323 (2020)

Opinion of the European Committee of the Regions — European approach to artificial intelligence — Artificial Intelligence Act (revised opinion) COR 2021/02682, OJ C 97, 28.2.2022, p. 60–85

Opinion of the European Economic and Social Committee on Proposal for a Regulation of the European Parliament and of the Council laying down harmonised rules on artificial intelligence (Artificial Intelligence Act) and amending certain union legislative acts (COM(2021) 206 final — 2021/106 (COD)), OJ C 517, 22.12.2021, p. 61–66

Pasquale, F.: New Laws of Robotics: Defending Human Expertise in the Age of AI. Belknap Press, Boston (2020)

Pasquale, F.: The Black Box Society. Cambridge University Press, Boston (2016)

Qu, L.: 99% of fortune 500 companies use applicant tracking systems (ATS). Job-scan Blog (2019). Available at: https://www.jobscan.co/blog/99-percent-fortune-500-ats/

Rechtbank Den Haag, C-09-550982-HA ZA 18-388. Available at: http://deeplink.rechtspraak.nl/uitspraak?id=ECLI:NL:RBDHA:2020:865

Roose, K.: Futureproof: 9 Rules for Humans in the Age of Automation. Random House Trade Paperbacks, London (2022)

Russell, S., Norvig, P.: Artificial Intelligence, a Modern Approach, 4th edn. Pearson, Harlow (2022)

Schauer, F.: Profiles, Probabilities, and Stereotypes. Harvard University Press, Boston (2009)

Smuha, N.A.: Beyond the individual: governing AI’s societal harm. Internet Policy Rev. 10(3), 1–32 (2021). https://doi.org/10.14763/2021.3.1574

Wheaton, O.: Gym’s computer assumed this woman was a man because she is a doctor. Metro 18.3.2015. Available at: https://metro.co.uk/2015/03/18/gyms-computer-assumed-this-woman-was-a-man-because-she-is-a-doctor-5110391/

Wooldridge, M.: The Road to Conscious Machines: The Story of AI. Penguin UK, London (2020)

Xenidis, R., Senden, L.: EU non-discrimination law in the era of artificial intelligence: mapping the challenges of algorithmic discrimination. In: Bernitz, U., et al. (eds.) General Principles of EU Law and the EU Digital Order, pp. 151–182. Kluwer Law International, Alphen aan den Rijn (2020)

Xenidis, R.: Tuning EU equality law to algorithmic discrimination: three pathways to resilience. Maastricht J. Eur. Comp. Law 27(6), 736–758 (2020)

Zhao, J., Wang, T., Yatskar, M., Ordonez, V., Chang, K.-W.: Men also like shopping: reducing gender bias amplification using corpus-level constraints (2017). arXiv:1707.09457

Zweig, K.: Ein Algorithmus hat kein Taktgefühl: Wo künstliche Intelligenz sich irrt, warum uns das betrifft und was wir dagegen tun können. Heyne Verlag, München (2019)

Funding

Open access funding provided by University of Lausanne.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Fabian Lütz: Ass. iur., LL.M. (Bruges), Maître en droit (Paris).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lütz, F. Gender equality and artificial intelligence in Europe. Addressing direct and indirect impacts of algorithms on gender-based discrimination. ERA Forum 23, 33–52 (2022). https://doi.org/10.1007/s12027-022-00709-6