Abstract

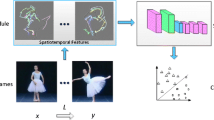

Action recognition is important for understanding human behavior in video, and the video representation is the basis for action recognition. This paper proposes a new video representation based on convolutional neural networks (CNNs). To capture human motion information in one CNN, we take both optical flow maps and gray images as input and combine multiple convolutional features by max pooling across frames. In a second CNN, we input a single color frame to capture context information. Finally, we take the top fully connected layer vectors as the video representation and train the classifiers with a linear support vector machine. The experimental results show that the representation integrating optical flow maps and gray images is more discriminative than representations that depend on only one of them. On the challenging HMDB51 and UCF101 data sets, this video representation obtains competitive performance.
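The pooling step mentioned in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not give layer shapes or say how the two networks' outputs are combined, so the flat per-frame vectors, the concatenation in `video_representation`, and all function names and toy values below are assumptions made for illustration only.

```python
from typing import List, Sequence

Vector = List[float]


def max_pool_across_frames(frame_features: Sequence[Sequence[float]]) -> Vector:
    """Element-wise maximum over per-frame feature vectors of equal length."""
    if not frame_features:
        raise ValueError("need at least one frame")
    length = len(frame_features[0])
    if any(len(f) != length for f in frame_features):
        raise ValueError("all frame feature vectors must have the same length")
    # zip(*...) groups the i-th component of every frame together.
    return [max(component) for component in zip(*frame_features)]


def video_representation(
    motion_frames: Sequence[Sequence[float]],
    context_vector: Sequence[float],
) -> Vector:
    """Assumed fusion: pooled motion descriptor followed by the context descriptor."""
    return max_pool_across_frames(motion_frames) + list(context_vector)


# Toy values only: three frames of 4-dimensional motion features
# and one 2-dimensional context vector from a single color frame.
motion = [
    [0.1, 0.9, 0.0, 0.3],
    [0.4, 0.2, 0.5, 0.3],
    [0.2, 0.7, 0.1, 0.8],
]
context = [1.0, 0.0]

representation = video_representation(motion, context)
print(representation)  # [0.4, 0.9, 0.5, 0.8, 1.0, 0.0]
```

Max pooling keeps, for each feature component, the strongest response seen in any frame, so the pooled vector has a fixed length regardless of how many frames the clip contains. A vector of this kind is what would then be passed to a linear support vector machine for classification.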

Additional information

Foundation item: Supported by the National High Technology Research and Development Program of China (863 Program, 2015AA016306), the National Natural Science Foundation of China (61231015), the Internet of Things Development Funding Project of Ministry of Industry in 2013 (25), the Technology Research Program of Ministry of Public Security (2016JSYJA12), and the Natural Science Foundation of Hubei Province (2014CFB712).

Biography: LI Hongyang, male, Ph.D. candidate, research direction: multimedia analysis and computer vision.

About this article

Cite this article

Li, H., Chen, J. & Hu, R. Multiple feature fusion in convolutional neural networks for action recognition. Wuhan Univ. J. Nat. Sci. 22, 73–78 (2017). https://doi.org/10.1007/s11859-017-1219-4

DOI: https://doi.org/10.1007/s11859-017-1219-4