Abstract

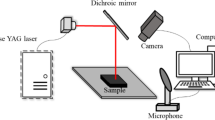

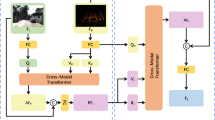

Laser cleaning is a highly nonlinear physical process. Single-modal (e.g., acoustic or visual) detection of its results performs poorly and makes little use of the information shared across modalities. To address these problems, a multi-modal feature fusion network model was constructed on the basis of a laser paint removal experiment. Heterogeneous data from different modalities were converted and aligned by combining piecewise aggregate approximation (PAA) with the Gramian angular field (GAF). Moreover, an attention mechanism was introduced to optimize the dual-path network and the densely connected network, strengthening the extraction and fusion of features from visual images and acoustic signals. Consequently, multi-modal discriminant detection of laser paint removal was realized. According to the experimental results, the validation accuracy of the constructed model on the experimental dataset was 99.17%, which is 5.77% higher than the best single-modal detection accuracy for laser paint removal. Optimizing the feature extraction networks with the attention mechanism increased the model accuracy by 3.3%. The results verify that the constructed multi-modal feature fusion model achieves better classification performance in detecting laser paint removal, effectively fuses acoustic data with visual image data, and detects laser paint removal accurately.
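The PAA plus GAF step, which turns a one-dimensional acoustic signal into a two-dimensional image that can be processed alongside the visual data, might look roughly like the sketch below. It assumes the common textbook formulation and is not the authors' implementation. PAA averages the series over equal-length segments. The result is min-max rescaled to [-1, 1], and each value x is mapped to an angle φ = arccos(x). The Gramian angular summation field is then G[i, j] = cos(φ_i + φ_j). The function names (`paa`, `gasf`, `signal_to_image`), the 64-segment image size, the synthetic test signal, and the choice of the summation field rather than the difference field are all illustrative assumptions.

```python
import numpy as np


def paa(series: np.ndarray, n_segments: int) -> np.ndarray:
    """Piecewise aggregate approximation: the mean of each of n_segments
    equal-length frames (this sketch assumes the length divides evenly)."""
    series = np.asarray(series, dtype=float)
    if series.size % n_segments != 0:
        raise ValueError("series length must be divisible by n_segments")
    return series.reshape(n_segments, -1).mean(axis=1)


def gasf(series: np.ndarray) -> np.ndarray:
    """Gramian angular summation field of a 1-D series."""
    x = np.asarray(series, dtype=float)
    lo, hi = x.min(), x.max()
    if hi == lo:
        x_scaled = np.zeros_like(x)  # constant signal: map to the midpoint
    else:
        # Min-max rescale to [-1, 1] so that arccos is defined.
        x_scaled = (2 * x - hi - lo) / (hi - lo)
    x_scaled = np.clip(x_scaled, -1.0, 1.0)  # guard against rounding error
    phi = np.arccos(x_scaled)  # polar-coordinate angle of each sample
    # Broadcasting builds G[i, j] = cos(phi_i + phi_j) in one step.
    return np.cos(phi[:, None] + phi[None, :])


def signal_to_image(signal: np.ndarray, size: int = 64) -> np.ndarray:
    """Hypothetical helper: compress with PAA, then encode as a size x size GAF image."""
    return gasf(paa(signal, size))


if __name__ == "__main__":
    # Synthetic stand-in for an acoustic recording (not the paper's data).
    t = np.linspace(0, 1, 4096, endpoint=False)
    noise = 0.1 * np.random.default_rng(0).standard_normal(t.size)
    acoustic = np.sin(2 * np.pi * 50 * t) + noise
    img = signal_to_image(acoustic, size=64)
    print(img.shape)  # (64, 64)
```

PAA is applied before GAF because the GAF matrix grows quadratically with series length. Compressing a long acoustic record to a fixed number of segments keeps the image small and gives every sample the same resolution, which makes it easier to pair with the visual images.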

Funding

Project(51875491) supported by the National Natural Science Foundation of China; Project(2021T3069) supported by the Fujian Science and Technology Plan STS Project, China

Additional information

Contributors

HUANG Hai-peng formulated the overall research objectives and edited the first draft. HAO Ben-tian verified the proposed method experimentally and wrote the first draft. YE De-jun, GAO Hao and LI Liang edited the manuscript. All authors replied to reviewers’ comments and revised the final version.

Conflict of interest

HUANG Hai-peng, HAO Ben-tian, YE De-jun, GAO Hao and LI Liang declare that they have no conflict of interest.

About this article

Cite this article

Huang, Hp., Hao, Bt., Ye, Dj. et al. Test method of laser paint removal based on multi-modal feature fusion. J. Cent. South Univ. 29, 3385–3398 (2022). https://doi.org/10.1007/s11771-022-5163-x

DOI: https://doi.org/10.1007/s11771-022-5163-x