Abstract

Choice-based conjoint (CBC) analysis features prominently in market research to predict consumer purchases. This study focuses on two principles that seek to enhance CBC: incentive alignment and adaptive choice-based conjoint (ACBC) analysis. While these principles have individually demonstrated their ability to improve the forecasting accuracy of CBC, no research has yet evaluated both simultaneously. The present study fills this gap by drawing on two lab and two online experiments. On the one hand, results reveal that incentive-aligned CBC and hypothetical ACBC predict comparatively well. On the other hand, ACBC offers a more efficient cost-per-information ratio in studies with a high sample size. Moreover, the newly introduced incentive-aligned ACBC achieves the best predictions but has the longest interview time. Based on our studies, we help market researchers decide whether to apply incentive alignment, ACBC, or both. Finally, we provide a tutorial to analyze ACBC datasets using open-source software (R/Stan).

Introduction

Conjoint analysis is one of the most widely applied preference measurement techniques (Keller et al., 2021; Orme & Chrzan, 2017; Pachali et al., 2023). To shape product innovation, pricing, and market penetration decisions, the industry relies on this technique to understand consumers’ product and service requirements (e.g., Papies et al., 2011; Voleti et al., 2017). By using conjoint data, marketers strive to predict consumers’ purchase behavior, thereby minimizing the risk of failure when introducing new products or changing existing product assortments (Wlömert & Eggers, 2016). Therefore, managers must be able to rely on the results of the conjoint analysis (Kübler et al., 2020; Schmidt & Bijmolt, 2020).

Choice-based conjoint (CBC) analysis is a particularly interesting conjoint method as it follows the notion that product choice predictions should ideally stem from studies involving actual choice behavior (Louviere & Woodworth, 1983). At its core, CBC interviews require participants to complete a static series of choice tasks compiled according to statistical design routines (e.g., Kuhfeld et al., 1994). In each task, relevant product and service dimensions with specific attribute levels describe the choice alternatives (Eggers & Sattler, 2009; Rao, 2014, p. 185). CBC thereby closely mimics real-life decision situations.

Nonetheless, research highlights two deficiencies in CBC. First, standard CBC studies almost exclusively use hypothetical settings, where product choices do not have real economic consequences (as participants do not have to pay in return for a product; Miller et al., 2011; Schmidt & Bijmolt, 2020). Considering this, academic and applied researchers noted that participants in standard CBC studies typically rush through questionnaires in an unreasonably brief time and rely on choice heuristics. Researchers, therefore, question the validity of the underlying assumption of CBC, namely compensatory decision-making (e.g., Orme & Chrzan, 2017, p. 150; Li et al., 2022). In addition, the absence of economic consequences in standard CBC causes a hypothetical bias that unfolds, e.g., as exaggerated estimates of willingness-to-pay (WTP) (e.g., Miller et al., 2011; Wlömert & Eggers, 2016).

Second, CBC analysis bears the risk of presenting participants with irrelevant choice tasks because its static choice set construction fails to account for the adaptation of consumers’ unique consideration sets (Gilbride & Allenby, 2004). Consequently, participants often face choice tasks that do not include any relevant alternative, making this data collection type ineffective if the goal is to uncover a nuanced utility function for the involved product attributes (Toubia et al., 2003). In this context, any no-purchase selection in CBC choice tasks represents a missed chance to learn about a consumer’s utility function.

Researchers adopted two approaches to address these limitations. First, they have applied incentive alignment, which provides economic consequences for participants’ decisions (e.g., Ding et al., 2005; Dong et al., 2010; Meyer et al., 2018). Second, they have introduced adaptive CBC designs, including polyhedral adaptive CBC (Toubia et al., 2004), adaptive choice-based conjoint analysis (ACBC, Johnson & Orme, 2007), hybrid individualized two-level CBC (HIT-CBC, Eggers & Sattler, 2009), and individually adapted sequential (Bayesian) designs (Yu et al., 2011). Among these approaches, ACBC (Johnson & Orme, 2007) is the most frequently applied adaptive CBC method (see Web Appendix A for a literature review).

Incentive alignment increases consumer motivation to process choice-relevant information and to reveal true preferences (Yang et al., 2018). Adaptive designs enhance CBC’s efficiency in learning about consumer preferences by preventing fatigue and maximizing the precision of partworth utilities via improved utility balance and information content (e.g., Johnson & Orme, 2007; Toubia et al., 2004). Previous research confirms that incentive alignment and adaptive designs in isolation increase the predictive validity of CBC in terms of consequential product and holdout task choices (e.g., Ding, 2007; Ding et al., 2005; Dong et al., 2010; Huang & Luo, 2016). To date, however, these research streams have been entirely independent, and therefore, existing research might only partially exploit the potential of CBC improvements. Because no study directly compares the effectiveness of the two principles, it remains unclear which of them, if any, is superior in predictive validity. Likewise, researchers are left alone to decide which principle to apply. This issue is significant as academia views incentive-aligned CBC as a promising conjoint methodology (e.g., Keller et al., 2021; Wlömert & Eggers, 2016; Yang et al., 2018), while management practice increasingly relies on adaptive designs, particularly ACBC (Sawtooth Software Inc. 2022b).

Another unanswered question pertains to whether combining incentive alignment with adaptive designs produces better predictions than either principle in isolation, in other words, whether the whole is different from the sum of its parts. This is a complex question, as we shall discuss later. Previous research has indicated that combining design enhancements in CBC with incentive alignment may not necessarily further improve predictive validity (e.g., Hauser et al., 2019; Toubia et al., 2012; Wlömert & Eggers, 2016). Likewise, managers face uncertainty regarding how much CBC outcomes, beyond predicting future purchases (e.g., extracted reservation prices, estimated competition, overall partworth utility differences) and expected interview times, are influenced by adaptive designs or incentive alignment.

Finally, the analysis of ACBC datasets has so far relied on Sawtooth Software’s proprietary software with an annual subscription system (Sawtooth Software Inc., 2023), which is unsatisfying for the academic community and practitioners alike. One can no longer extract findings from an ACBC if the subscription expires.

The present study addresses these concerns and is the first to evaluate whether applying incentive alignment or adaptive designs in CBC leads to superior predictive validity. For this purpose, we introduce two mechanisms to incentive-align ACBC, drawing on Johnson and Orme (2007). In addition, we provide a novel open-source analysis script written in R/Stan for the state-of-the-art estimation of utility functions based on ACBC designs (Web Appendix E offers a tutorial for analysts). It ensures transparency and compatibility with both ACBC and CBC data. Compared to Sawtooth and other stand-alone software, it provides researchers with greater flexibility in data analyses and additional features like holdout validation.

This study also evaluates differences among the tested (A)CBC variants regarding interview times, reservation prices, elasticities, WTP, and other relevant outcomes to provide a comprehensive overview. By doing so, we offer researchers and analysts valuable insights into what they can expect and need to consider when applying different CBC approaches.

Our empirical research program comprises two preliminary online studies, two lab, and two online experiments, drawing on diverse samples and different product categories, ranging from pizza menus to fitness trackers. The studies’ results consistently indicate several key findings: (1) Hypothetical ACBC and incentive-aligned CBC perform comparably well when predicting consequential product choice. (2) The former variant has a slight advantage when predicting product rankings. (3) Combining incentive alignment and adaptive designs leads to superior predictive validity. However, it also requires the longest interview time and the highest total cost. (4) For CBC, lengthy interviews with many choice tasks do not necessarily maximize predictive validity, aligning with recent findings reported by Li et al. (2022). In contrast, for the even longer ACBC interviews, later decisions tend to increase predictive validity further. (5) An analysis of the participants’ cognitive efforts and survey evaluations further substantiates the dominant role of incentive-aligned ACBC, as participants spent more time on it but simultaneously gave it the highest evaluations. (6) Our results point to two different psychological processes underlying the effectiveness of both principles in isolation. Incentive alignment increases the extent of deliberation, while it does not necessarily lead to more consistent preferences. Adaptive designs, on the other hand, lead to greater study enjoyment. When adding incentive alignment to ACBC, greater deliberation (i.e., longer processing times) mainly emerges in the initial ACBC stage(s). (7) When focusing on metrics relevant for marketers besides predictive validity, the results highlight, among other things, that market researchers will extract exaggerated estimates of reservation prices when not implementing adaptive designs. Furthermore, within ACBC, applying a hypothetical variant (instead of incentive-aligned ACBC) leads to an underestimation of a product’s competitive strength.

The "Managerial implications" section guides selecting between different (A)CBC variants by emphasizing the evaluation of a comprehensive set of decision criteria (i.e., technical feasibility, predictive validity, extracted utility functions, cost, time consumption, and participants’ study evaluations). We also discuss what to expect from modified versions of ACBC (i.e., shorter ACBCs not incorporating all stages) regarding the trade-off between interview times and gains in predictive validity.

Theoretical background

Mechanisms to incentive-align choice-based conjoint studies: A brief review

Participants make hypothetical and not consequential decisions in traditional CBC. Therefore, they only have a limited incentive to spend their time and energy on elaborate decision-making, which is required to make choices consistent with one’s true preferences. The problem of hypothetical bias is recognized in various marketing domains. It manifests, for example, in the form of exaggerated estimates of WTP (Wertenbroch & Skiera, 2002), or as a biased depiction of consumers’ susceptibility to manipulative attempts when choosing between products (Lichters et al., 2017), and particularly as biased parameter estimates in conjoint studies (e.g., Lusk et al., 2008; Miller et al., 2011).

Researchers have integrated incentive-aligning mechanisms into CBC to resolve this problem, thus providing participants with real economic consequences based on their decisions. The results indicate that the estimated utilities are more realistic and predictive for actual consumer decisions than hypothetical CBC studies (e.g., Ding, 2007; Ding et al., 2009; Hauser et al., 2019). Accordingly, Rao (2014, p. 221) suggests that “data collected with incentive compatibility is far superior for conjoint studies in practice.”

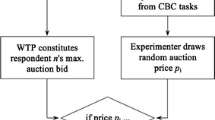

Among all mechanisms proposed to incentive-align CBC, the Direct mechanism (Ding et al., 2005) is the only mechanism that directly links participants’ rewards to their CBC choices (Web Appendix A provides a concise overview). Specifically, it randomly selects a single CBC choice task from all the tasks to determine a participant’s payoff (i.e., random lottery procedure), thus resembling real purchases. By contrast, indirect methods require estimating individual utility functions, introducing uncertainty in parameter accuracy (see Footnote 1). Besides, commonly applied utility estimation is time-consuming (Allenby & Ginter, 1995; Voleti et al., 2017) and, therefore, delays immediate feedback. A time gap between the interview and the reward may give the impression that a “black box” determines participants’ rewards. This black box may induce weaker motivation to unveil true preferences compared to the direct mechanism. Nevertheless, indirect methods such as the RankOrder mechanism (Dong et al., 2010) are of considerable practical relevance since they compensate for the Direct mechanism’s disadvantage of requiring numerous potential reward products. The RankOrder mechanism determines the reward based on the participant’s top-ranked product in an unknown product list (it only requires at least two products). Web Appendix A discusses implementing the RankOrder mechanism in different ACBC versions.

The current state of research shows that incentive alignment is a universal measure to improve CBC’s predictive validity, independent of the applied mechanism and the incentivization procedure used (rewarding each participant vs. partial lottery incentives; see Table 1). Thus, incentive-aligned CBC has become a “silver bullet” in conjoint methodology, allowing researchers to routinely benchmark other preference measurement procedures (e.g., Ding et al., 2009; Hauser et al., 2019; Yang et al., 2018). However, a second stream of research follows another approach to enhance traditional CBC, namely implementing adaptive designs.

Adaptive choice-based conjoint analysis: A brief review

Incentive alignment does not address the methodical limitations of CBC stemming from the static construction of choice tasks. To overcome this challenge, researchers have proposed adaptive CBC-design algorithms, which adaptively adjust choice tasks based on participants’ earlier-revealed preferences in the interview flow (e.g., Schlereth & Skiera, 2017). Compared to standard CBC, adaptive designs better account for consumer preference heterogeneity. At the same time, they comply with researchers’ advice that choice designs should extract as much helpful information as possible (Huber & Zwerina, 1996).

The literature suggests various approaches for adaptive CBC designs, such as Toubia et al.’s (2004) polyhedral adaptive CBC, Eggers and Sattler’s (2009) hybrid individualized two-level CBC, and Joo et al.’s (2019) goal-directed question-selection method (Web Appendix A presents an overview). The most frequently applied and cited adaptive CBC is ACBC (Johnson & Orme, 2007). ACBC is used in many fields, including marketing and advertising (e.g., Kouki-Block & Wellbrock, 2021), health research (e.g., de Groot et al., 2012), acceptance of food products (e.g., McLean et al., 2017), as well as studies on entrepreneurship (e.g., Wuebker et al., 2015). ACBC is versatile, handling metric and categorical attributes, offering realistic graphics, estimating the no-purchase utility, and not necessitating a price attribute. ACBC’s popularity is, moreover, due to the fact that—unlike other adaptive CBC approaches—it explicitly captures non-compensatory decision rules (Dowling et al. 2020). It is also available as a software package with a graphical user interface (Sawtooth Software Inc. 2022a).

In contrast to static CBC, Johnson and Orme’s ACBC adds two preceding sections to identify participants’ idiosyncratic consideration sets before providing them with tailor-made choice tasks. Participants start with a Build-Your-Own (BYO) exercise, which requires them to configure their ideal product given specific feature prices. During this stage, participants trade off between highly attractive and low-priced attribute levels. A second Screening stage then adaptively presents sets of products that slightly deviate from the specified BYO product. For each product concept, participants must decide whether or not it is a possible purchase option (i.e., a consideration task; see Ding et al., 2011). At this point of the survey, participants must also repeatedly state their must-have and unacceptable criteria so that the presented products can be adapted to satisfy the identified rules (i.e., non-compensatory decision rules; Gilbride & Allenby, 2004). Thus, during the Screening, the products increasingly meet participants’ thresholds. All products marked as a possibility in the Screening stage are subsequently allocated to choice tasks according to a balanced overlap design (Liu & Tang, 2015) within a third Choice Tournament stage. However, unlike in CBC, this third stage arranges the choice tasks by following a forced-choice knockout tournament. Each “winning concept” (the chosen product) advances to another round until, after a final stage, a particular concept emerges as the tournament winner (Huang & Luo, 2016). By representing participants’ established consideration sets, the tournament design involves more level overlap on individuals’ most important attributes with increasing tasks. Additionally, by presenting ACBC choice tasks in a forced-choice format, the Choice Tournament stage prevents participants from choosing the no-purchase option as an “easy way out” of complex decisions (Schlereth & Skiera, 2017).
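The knockout logic of the Choice Tournament stage can be illustrated with a minimal Python sketch. It is not Sawtooth Software’s algorithm: the names `run_tournament` and `choose`, the grouping of concepts into consecutive tasks of three, and the treatment of a leftover single concept as a bye are assumptions made for illustration, whereas the actual allocation follows a balanced overlap design.

```python
from typing import Callable, List, Sequence


def run_tournament(concepts: Sequence[str],
                   choose: Callable[[List[str]], str],
                   task_size: int = 3) -> str:
    """Forced-choice knockout: each chosen concept advances until one remains.

    `concepts` are the products marked as a possibility in the Screening stage;
    `choose` stands in for the participant and returns one concept per task.
    Both names are hypothetical.
    """
    if not concepts:
        raise ValueError("at least one considered concept is required")
    if task_size < 2:
        raise ValueError("a choice task needs at least two concepts")
    remaining = list(concepts)
    while len(remaining) > 1:
        winners = []
        for start in range(0, len(remaining), task_size):
            task = remaining[start:start + task_size]
            # Assumption: a leftover single concept advances without a choice.
            winners.append(task[0] if len(task) == 1 else choose(task))
        remaining = winners
    return remaining[0]
```

For example, `run_tournament(["c", "a", "b", "e", "d"], choose=min)` plays two rounds with a stand-in participant who always picks the alphabetically first concept. No task offers a no-purchase option, which mirrors the forced-choice format described above.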

In combination, the initial screening of concepts and the subsequent tournament augmentation produce more challenging later-stage decisions in the ACBC interview flow than decisions made in CBC. This generates a higher utility balance among the presented choice tasks, which improves the estimation of partworth utilities (Huber & Zwerina, 1996).

ACBC also offers an optional fourth Calibration stage, where participants indicate their purchase likelihood for unattractive/attractive (BYO, Screening, Choice Tournament) products on a five-point Likert scale. The purpose of the Calibration stage is to fine-tune the participant’s utility of not purchasing any product (referred to as “no-purchase utility” or “none parameter”). However, the benefits of this stage have not been thoroughly evaluated in existing literature.

In summary, hypothetical ACBC shows superior predictive performance compared to standard hypothetical CBC (Bauer et al., 2015; Chapman et al., 2009; Huang & Luo, 2016).

The unclear role of incentive alignment in adaptive choice-based conjoint analysis

The question arises whether implementing both principles together would be a fruitful endeavor. We will subsequently discuss arguments in favor and against the merit of combining both approaches. This aids in developing a conceptual framework for establishing the potential superiority of incentive-aligned ACBC.

Combining incentive alignment with adaptive designs should yield better results as both improve standard CBC through different mechanisms. Incentive alignment ensures that it is in the best interest of the participants to make deliberate and truthful decisions (Ding et al., 2005), while ACBC improves the relevance and format of data collection (Johnson & Orme, 2007). However, conjoint researchers have previously noted that modifying the design of traditional CBC in combination with incentive alignment does not necessarily improve predictive validity over the level offered by either strategy alone. For example, Wlömert and Eggers (2016) investigated dual-response designs together with incentive alignment, and individually, both features increased the predictive validity of CBC regarding a service adoption in the future. However, the authors found no incremental increase in hit rates when combining dual-response designs with incentive-aligned CBC. Comparable insights can be drawn from Hauser et al. (2019), who combined image realism (i.e., “craftsmanship” invested in making the conjoint design more realistic and appealing) and incentive alignment in CBC. The authors did not assess whether the predictive validity significantly differed in their full 2 × 2 design, as this was not the article’s primary focus. However, reanalyzing their data shows that while incentive alignment and realistic images individually improve the predictive validity significantly over a hypothetical, text-only condition, their combination does not result in further improvement (see Web Appendix H). Lastly, Toubia et al. (2012) introduced Conjoint Poker, a gamified version of CBC. However, the incentive-aligned poker conjoint did not significantly improve out-of-sample predictions compared to the incentive-aligned static CBC.

To make sense of these findings, note that incentive alignment has been shown in CBC studies to increase the assessment of less relevant features (Meißner et al., 2016), which is costly in terms of cognitive resources. In line with this argument, repeatedly answering similar CBC tasks led participants to use non-compensatory decision-making heuristics adapted to the task type (Li et al., 2022; Yang et al., 2015). Despite their effort-saving properties, these heuristics further reduce the usefulness of later CBC tasks in the interview to enhance predictive validity. This aligns with findings in psychology. Here, researchers have shown that resource depletion from repetitive product decisions results in inferior performance in subsequent tasks due to the inclination to rely on effort-saving heuristics (Vohs et al., 2014). Answering more questions (e.g., dual-response) and/or having longer interview times (e.g., ACBC with multiple stages) might lead to even higher cognitive costs for participants, fostering effort-saving heuristics. All these arguments suggest that a ceiling effect for achievable improvements in predictive validity is plausible when combining incentive alignment (which motivates higher cognitive investments) and other design improvements in CBC.

However, a deeper look at the literature provides compelling arguments favoring the combination. Indeed, the critical message of Li et al. (2022) is that the adaptation of the decision process is driven by repeatedly performing the same tasks. In the case of ACBC, this risk is much lower due to the explicit design of different stages, each serving distinct purposes. The authors even call for research in this direction (Li et al., 2022, p. 979): “[…] we encourage the development of methods for mitigating adaptation to the task. For example, adaptation could be reduced or delayed by repeatedly changing the format of the task […].” Therefore, despite the longer duration of ACBC studies, issues such as boredom, fatigue, and task adaptation should be less problematic compared to other CBC designs. In contrast, study enjoyment is typically higher in ACBC than in traditional CBC (Johnson & Orme, 2007), and a higher amount of (useful) deliberation seems plausible. Hence, in combination, both principles do not necessarily lead to a ceiling effect, as some previous research might suggest. The key is that more extended interviews can work if the choice decision format varies adaptively. Incentive alignment on top helps to make the tasks even more relevant due to the interlinked nature of the adaptive design in ACBC studies, thus capturing non-compensatory decision rules even better (than hypothetical ACBC).

Research goals and study overview

To investigate this in detail, our study is the first to benchmark both principles. In line with the arguments above, we also evaluate whether participants show greater deliberation due to applying incentive alignment (measured by processing times; see, e.g., Guo, 2022) and whether adaptive (vs. static) CBC further leads to more study enjoyment. Ultimately, we evaluate whether combining incentive alignment and adaptive designs yields higher prediction accuracy than their application in isolation. In this context, we also analyze the influence of incentive alignment on the predictive accuracy of individual ACBC stages (see Web Appendix H).

Apart from predictive validity, we also evaluate the impact of incentive-aligned (vs. hypothetical) and adaptive (vs. static) CBC variants on market-relevant estimates such as reservation prices and elasticities. An additional goal of our study series is to provide market researchers with guidelines on when to apply traditional CBC, incentive-aligned CBC, hypothetical ACBC, or incentive-aligned ACBC. Web Appendix A also provides guidance on meaningful combinations of ACBC stages and discusses the implications of incentivizing and/or omitting specific stages. Methodologically, we contribute to open research on ACBC by providing software that estimates utility functions using Hierarchical Bayes, based on data obtained from Sawtooth Software’s ACBC implementation. For this purpose, we have developed an R/Stan script that we used to carry out all analyses reported in this article (scripts and data available at https://osf.io/e5v4c/). Its results align with Sawtooth Software’s results (see Web Appendix E). We hope that our code will foster the usage of ACBC, as the corresponding Web Appendix also provides a step-by-step tutorial on analyzing ACBC datasets without proprietary software. The tutorial covers aspects not included in standard software packages, such as holdout task evaluation metrics.

We address our research goals through a comprehensive program consisting of two preliminary studies and four experiments. In all studies, we implement realistic visual stimuli and mouse-over gestures that detail the products’ features to foster data quality (Hauser et al., 2019). The first two lab experiments (Study 1 on Pizzas and Supplemental Study A on PlayStation 4 Bundles) contrast three conjoint versions: incentive-aligned CBC, hypothetical ACBC, and incentive-aligned ACBC. Study 2, an online experiment with Food Processors, extends our research scope by further implementing hypothetical CBC as a baseline condition, more validation tasks (such as rank order validation, following Lusk et al., 2008), and an additional validation sample condition (i.e., new participants that are not assigned to any conjoint condition). In all these studies, we apply the Direct mechanism (Ding et al., 2005) to create incentive-compatibility in CBC and conceptualize an equivalent mechanism for ACBC by potentially rewarding participants with their winning product from ACBC’s Choice Tournament. Note that as ACBC stages are linked, the information from all decisions in previous stages is affected by the incentivization (see Web Appendix A). Study 3, an online experiment on fitness trackers, then implements the RankOrder mechanism (Dong et al., 2010), which increases the range of potential applications.

Empirical studies

Study 1: Consumers’ forced-choice preferences for pizza

Study objective

Study 1 on pizza menu preferences adopts incentive alignment by incentivizing each participant (Ding et al., 2005). The study explores how hypothetical and incentive-aligned ACBCs perform in purchase predictions compared to an incentive-aligned CBC. To ensure relevance in the pizza context, we (1) exclusively recruited participants who regularly consume pizza and (2) partnered with a local pizza restaurant.

Based on a preliminary study (Web Appendix C), the attributes and levels for the main study included eight pizza types (Margherita, Salami, Funghi, Salami and Ham and Funghi, Chicken, Gyros, Four Cheeses, Tomato) in three sizes (diameter: 20cm, 25cm, 29cm), additional sauces (hollandaise, BBQ, none), toppings (onion rings, paprika, jalapenos, olives, boiled egg, none), extra cheeses (Gouda, Feta, Mozzarella, none), spices (garlic, none), 0.5-L beverages (Coca-Cola, Coke Light/Zero, Fanta, Sprite, water, none), and the menu price (CBC: €5.95, €7.95, €8.95, €9.95, €11.95, €13.95; ACBC: summed price).

Experimental design and procedure

Study 1 drew on a single between-subjects factor with three levels: (I) incentive-aligned CBC, (II) hypothetical ACBC, and (III) incentive-aligned ACBC (see Fig. 1). Each participant received a personalized pizza voucher redeemable at our research partner’s restaurant, along with a certain amount of cash arising from the difference between €15 and the pizza price (e.g., Ding, 2007; Dong et al., 2010). Voucher value varied based on different decisions within the study, depending on the study condition.

Each experimental condition consisted of two parts: the conjoint exercise (Part 1) and a holdout task (HOT) (Part 2, the same for each condition). Participants in the incentive-aligned conditions (I or III) had two chances to determine their final pizza menu as the study reward, once in Part 1 and once in Part 2. A lottery procedure determined which study part became payoff-relevant (50:50 chance). Participants in the hypothetical ACBC condition (II) only determined their reward in Part 2 (HOT), leaving the ACBC part as not payoff-relevant.

For condition I, we created individualized choice designs comprising 17 CBC tasks in Part 1, each with three products and a no-purchase option. The designs followed a balanced-overlap strategy pursuing D-optimality across all participants (e.g., Huber & Zwerina, 1996; Liu & Tang, 2015). Following Part 1, participants made a selection from a fixed HOT, which immediately offered 16 pizza menus in a forced-choice format (see Footnote 2). In line with other research on incentive alignment, we aimed for a HOT decision that is difficult to predict correctly (see Table 1) to give the conjoint methods room to show differences in predictive validity. If Part 1 became relevant based on a virtual coin toss, we implemented the Direct mechanism by randomly drawing one out of all 17 CBC choice tasks via a virtual lottery. By contrast, if Part 2 became relevant, we disbursed the selected alternative in the HOT (Ding et al., 2005).
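The payoff rule for condition I can be sketched in a few lines of Python. The 50:50 coin toss, the random draw of one CBC task, and the €15 budget come from the text. The function names, the representation of a no-purchase choice as `None`, and the payout of the full budget in that case are assumptions made for illustration.

```python
import random
from typing import List, Optional, Tuple

BUDGET_EUR = 15.00  # amount from which the menu price is deducted (from the text)


def settle(price: Optional[float]) -> Tuple[Optional[float], float]:
    """Return (voucher value, cash) for a realized choice.

    `None` models a no-purchase choice; paying out the full budget in that
    case is an assumption, not a detail reported in the study.
    """
    if price is None:
        return None, BUDGET_EUR
    return price, round(BUDGET_EUR - price, 2)


def draw_reward(cbc_choice_prices: List[Optional[float]],
                hot_choice_price: float,
                rng: random.Random) -> Tuple[str, Optional[float], float]:
    """Virtual coin toss between Part 1 and Part 2, then realize one choice."""
    if rng.random() < 0.5:
        # Part 1 (Direct mechanism): one CBC task is drawn at random and the
        # alternative chosen in that task is realized.
        return ("Part 1", *settle(rng.choice(cbc_choice_prices)))
    # Part 2: the alternative chosen in the holdout task is realized.
    return ("Part 2", *settle(hot_choice_price))
```

Because any of the 17 CBC choices may become the reward, each single choice carries economic consequences, which is the property the Direct mechanism relies on.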

We set up computerized ACBC interviews for conditions II and III (Part 1). All participants worked on Screening tasks with binary choices after configuring their ideal pizza menu in the first BYO stage. The Choice Tournament stage then provided participants with choice tasks arranged in a knockout tournament, each covering three pizza menus. Finally, participants provided answers to ACBC’s optional Calibration stage.

We instructed participants about the principles of ACBC and made them aware of the interdependencies across all stages. However, those in condition III (incentive-aligned ACBC) received additional information on the random payoff mechanism in Part 1. Specifically, we explained that the final winning concept of the Choice Tournament stage or the decision from the HOT would be realized based on a virtual coin toss. In contrast, participants in condition II (hypothetical ACBC) were assured of receiving their HOT choice for disbursement.

Participants

A German university’s behavioral economics lab provided participants from a panel of preregistered individuals. All n = 278 participants (46% females, 94% up to 30 years old, and 90% with a maximum of €999.99 monthly net income) indicated that they (1) were willing to spend 30 min on the study, (2) were at least 16 years old, (3) had eaten pizza during the last year, and (4) were interested in a pizza voucher from our research partner’s restaurant.

The participants’ age, net income, frequency of pizza consumption, and eating behaviors did not differ across conditions (smallest p = 0.246), but gender did (female shares: condition I: 37%, II: 55%, III: 47%; Fisher’s exact test, p = 0.046). We checked gender’s potential influence in subsequent analyses (Web Appendix C provides demographics in each study).

Analysis and results

We estimated a Hierarchical Bayes (HB) multinomial logit (MNL) model with a multivariate normal distribution (Allenby & Ginter, 1995; Lenk et al., 1996) and followed the recent choice modeling literature for the prior specification (Akinc & Vandebroek, 2018). We sign-constrained the price parameter to ensure economically plausible parameters via a (negative) log-normal distribution (Allenby et al., 2014; Wlömert & Eggers, 2016). This decision did not affect our conclusions (see Web Appendix G). Using Hamiltonian Monte Carlo (No-U-Turn sampler in Stan; see, e.g., Carpenter et al., 2017), the HB estimation used five chains, each with random starting values and 500 warm-up iterations, followed by 5,000 draws. We kept every fifth draw and averaged the results for point estimates of each parameter (Web Appendix D provides further details).
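To make the sign constraint and the thinning step concrete, the following Python sketch maps unconstrained draws to a strictly negative price coefficient and keeps every fifth draw. The simulated draws and all names are hypothetical; the actual estimation was run in Stan, and this sketch does not reproduce that model.

```python
import math
import random

def price_coefficient(theta):
    """Map an unconstrained value to a strictly negative price coefficient.

    If theta is normally distributed, -exp(theta) follows a negative
    log-normal distribution.
    """
    return -math.exp(theta)

def thin(draws, keep_every=5):
    """Keep every `keep_every`-th post-warm-up draw."""
    return draws[keep_every - 1::keep_every]

rng = random.Random(1)
# Hypothetical post-warm-up draws for one participant from one chain.
theta_draws = [rng.gauss(0.0, 0.5) for _ in range(5000)]
kept = thin(theta_draws)                      # 1,000 draws remain per chain
price_draws = [price_coefficient(t) for t in kept]
point_estimate = sum(price_draws) / len(price_draws)
```

With five chains, this thinning would leave 5,000 retained draws per parameter for averaging.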

We then predicted participants’ HOT decisions using the MNL rule (Green & Krieger, 1988). We counted how many participants chose the HOT product that was predicted to be most preferred (hit rate (HR), e.g., Ding et al., 2005). We further analyzed the mean hit probability (MHP, Dotson et al., 2018), which expresses the mean predicted choice probability of the actual chosen alternative across participants. We also analyzed the predictive performance at an aggregated level, using the mean absolute error of prediction (MAE) to measure the deviation between predicted and actual choice shares across all HOT products (Liu & Tang, 2015). Finally, we used Cohen’s kappa to assess the agreement between actual and predicted choices (Chapman et al., 2009). Web Appendix D provides further details on all studies’ estimation results, including population-level estimates, partworths, relative importances, WTP (utility in monetary space), and models testing differences in hit rates.
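The individual and aggregate measures can be illustrated with a short Python sketch. It assumes that each participant's total utility for every HOT alternative is already available and that predicted shares are the mean of individual MNL probabilities; both the aggregation choice and the toy numbers are assumptions for illustration.

```python
import math

def mnl_probabilities(utilities):
    """MNL rule: choice probabilities proportional to exp(utility)."""
    shift = max(utilities)                       # for numerical stability
    weights = [math.exp(u - shift) for u in utilities]
    total = sum(weights)
    return [w / total for w in weights]

def predictive_metrics(utilities_by_person, chosen):
    """Return hit rate, mean hit probability, and MAE as proportions."""
    n, n_alt = len(chosen), len(utilities_by_person[0])
    probs = [mnl_probabilities(u) for u in utilities_by_person]
    predicted = [p.index(max(p)) for p in probs]  # most preferred alternative
    hit_rate = sum(a == b for a, b in zip(predicted, chosen)) / n
    mhp = sum(p[c] for p, c in zip(probs, chosen)) / n
    predicted_share = [sum(p[j] for p in probs) / n for j in range(n_alt)]
    actual_share = [chosen.count(j) / n for j in range(n_alt)]
    mae = sum(abs(a - b) for a, b in zip(predicted_share, actual_share)) / n_alt
    return hit_rate, mhp, mae

# Hypothetical utilities for three participants and three alternatives.
utilities = [[2.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.5, 0.0, 0.0]]
chosen = [0, 1, 1]                               # index of the actual HOT choice
hit_rate, mhp, mae = predictive_metrics(utilities, chosen)
```

In this toy example, the first two participants are predicted correctly and the third is not, so the hit rate is 2/3.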

Panel A of Table 2 presents Study 1’s key results. Overall, each conjoint approach significantly improves the purchase prediction compared to a prediction by chance (all hit rates > 1/16 = 6.25%, all binomial test p-values < 0.001). Based on the commonly applied classification for Cohen’s kappa (Landis & Koch, 1977), the concordance between predicted and actual choices is slight for incentive-aligned CBC (0.15), fair for hypothetical ACBC (0.26), and moderate for incentive-aligned ACBC (0.43).
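As a sketch of how such concordance values are obtained and labeled, the following Python code computes Cohen's kappa from predicted and actual choices and applies the Landis and Koch (1977) bands. The function names and the toy data are illustrative assumptions.

```python
def cohens_kappa(predicted, actual):
    """Chance-corrected agreement between predicted and actual choices.

    Undefined (division by zero) if expected agreement equals 1.
    """
    n = len(actual)
    categories = set(predicted) | set(actual)
    p_observed = sum(p == a for p, a in zip(predicted, actual)) / n
    p_expected = sum(
        (predicted.count(c) / n) * (actual.count(c) / n) for c in categories
    )
    return (p_observed - p_expected) / (1 - p_expected)

def landis_koch_label(kappa):
    """Verbal label following the Landis and Koch (1977) bands."""
    if kappa < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"),
                         (0.60, "moderate"), (0.80, "substantial")]:
        if kappa <= upper:
            return label
    return "almost perfect"

# Hypothetical predicted vs. actual choices for four participants.
kappa = cohens_kappa([0, 0, 1, 1], [0, 1, 1, 1])
```

Applied to the values reported for Study 1, `landis_koch_label` returns "slight" for 0.15, "fair" for 0.26, and "moderate" for 0.43.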

Next, we analyzed the MHP using a linear regression model with the condition as the independent variable (base level = incentive-aligned CBC). We applied a rank-based inverse normal transformation to account for the non-normal nature of predicted probabilities (Gelman et al., 2014, p. 97). Pairwise comparisons between conditions drew on estimated marginal means (Searle et al., 1980). The results indicate that incentive-aligned ACBC, with an MHP of 35.23%, outperforms hypothetical ACBC (24.53%; β = 0.43, t(275) = 3.04, p(one-tailed) = 0.001) and incentive-aligned CBC (17.15%; β = 0.64, t(275) = 4.46, p(one-tailed) < 0.001). A comparison of hypothetical ACBC and incentive-aligned CBC shows no significant difference (β = 0.20, t(275) = 1.42, p = 0.157; see Footnote 3). The predictive validity at an aggregate level confirms the superior performance of incentive-aligned ACBC, which provides the lowest MAE (1.83%), followed by incentive-aligned CBC (2.38%) and hypothetical ACBC (3.51%).
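A rank-based inverse normal transformation replaces each value by the normal quantile of its (rescaled) rank. The Python sketch below uses average ranks for ties and the Blom offset c = 3/8; the offset and the tie handling are assumptions, as the text does not state which variant was applied.

```python
from statistics import NormalDist

def rank_inverse_normal(values, c=0.375):
    """Rank-based inverse normal transform (Blom offset assumed, ties averaged)."""
    n = len(values)
    order = sorted(range(n), key=lambda i: values[i])
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Extend j over a run of tied values.
        while j + 1 < n and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1           # mean of 1-based ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    normal = NormalDist()
    return [normal.inv_cdf((r - c) / (n - 2 * c + 1)) for r in ranks]

# Hypothetical hit probabilities for five participants.
z = rank_inverse_normal([0.05, 0.60, 0.20, 0.20, 0.90])
```

The transformed scores keep the ordering of the hit probabilities but are approximately normally distributed, which suits a linear model.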

Discussion of Study 1, Supplemental Study A, and motivation for Study 2

Study 1 supports the superiority of incentive-aligned ACBC over hypothetical ACBC and incentive alignment in static CBC. Put differently, the results suggest no evidence of a ceiling effect when combining incentive alignment and adaptive designs. In addition, the results indicate that hypothetical ACBC and incentive-aligned CBC achieve comparable predictive validity. However, the findings from Study 1 are specific to a scenario in which all participants received a product payoff, which might not be feasible in applied market research, especially when working with online panels or studying expensive durables or services, as recently outlined by survey results in Pachali et al. (2023). Furthermore, Study 1’s results are limited to forced-choice decisions since the HOT used for validation did not include a no-purchase option.

For this reason, Supplemental Study A on PlayStation 4 bundles (net n = 242) incorporates a free-choice HOT and a random payoff lottery allotment (see Web Appendix B). This design adjustment requires further attention to the valid estimation of the none parameter as it is used in choice simulations to calculate whether a consumer will opt for a product or not. Thus, Supplemental Study A additionally evaluates whether the fourth Calibration stage proposed by Johnson and Orme (2007) increases incentive-aligned ACBC’s predictive validity.

Despite successfully replicating Study 1’s results (Table 2, Panel B), this experiment also highlights that the Calibration stage indeed significantly improves the predictive validity (pooled ACBC data: increase in hit rate of 12.03 pp). Web Appendix H provides details across studies. However, similar to Study 1, Supplemental Study A incorporated a single validation procedure—within each condition, data from a set of conjoint tasks are used to predict holdout decisions drawn from the same participants. Furthermore, like in Study 1, a relatively homogenous set of participants (mainly students) participated in a lab experiment, whereas in the industry, most conjoint studies are conducted online (Sawtooth Software Inc. 2022b).

For this reason, Study 2 targets an online sample with diverse characteristics and implements four design improvements. First, Study 2 includes a hypothetical CBC condition that allows us to examine whether the positive effect of incentive alignment on the predictive validity (Ding et al., 2005), as well as the previous findings on the superiority of hypothetical ACBC over hypothetical CBC, can be replicated (e.g., Bauer et al., 2015; Chapman et al., 2009). Second, it introduces another, more conservative form of validation at the aggregate level by using the conjoint data to predict shares of an independent validation sample (e.g., Gensler et al., 2012). Third, Study 2 implements a series of consequential HOTs rather than offering a single task (Huang & Luo, 2016) to assess the robustness of the results, including an incentive-aligned ranking HOT (Lusk et al., 2008). The study evaluates conjoint methods’ ability to reproduce participants’ product concept ranking. Finally, questions on survey evaluation provide insights into participants’ perceptions of the study.

Study 2: Consumers’ preferences for food processors

Experimental design and procedure

A European electric kitchen appliance manufacturer provided the attributes and levels for the conjoint study, including color (white, black, red), power (900 or 1,000 Watt), additional mixing bowl (plastic, stainless steel polished, stainless steel brushed, none), additional discs (disc for potatoes, disc for vegetables, both, none), a measuring cup (included, not included), a mincer (included, included with a shortbread biscuit attachment, included with a grater, not included), further attachments (citrus juicer, blender attachment, blender, ice maker, tasty moments shredders, none), a recipe book (smoothies and shakes, vegetarian, low carb, sweet and easy, Jamie’s 5-ingredients, none), and the price (CBC: €175.95, €210.95, €245.95, €280.95, €315.95, €350.95; ACBC: summed price).

As explained, Study 2 implemented five between-subjects conditions (see Figure C2 in Web Appendix C): (I) hypothetical CBC, (II) incentive-aligned CBC, (III) hypothetical ACBC, (IV) incentive-aligned ACBC, and (V) a validation sample. While participants from the first four conditions again received two parts (a conjoint part followed by a HOT part), participants from the validation sample only answered a HOT part.

The study offered participants a 1-in-30 chance of winning a food processor bundle and a money reward equal to the difference between €400.00 and the price of the reward bundle. While all winners from conditions I, III, and V received one of their HOT choices, a virtual coin toss decided if the conjoint or the HOT part was payoff-relevant in the incentive-aligned conjoint conditions (II and IV). The designs of the CBC exercises (I and II) included 14 choice tasks, each with three products and a no-purchase option. Other aspects of Study 2 followed Study 1.

If the HOTs became payoff-relevant, one of the four HOTs was randomly drawn to be realized. While the first two HOTs offered participants ten and six food processor bundles, respectively, in a forced-choice setting, the third HOT provided them with six bundles along with a no-purchase option. The fourth HOT required participants to rank six products according to their preferences. We used the procedure of Lusk et al. (2008) to incentive-align the ranking task, with the probability of receiving a specific product increasing with its rank (i.e., products at rank number 1 (J) receive the highest (lowest) probability). We calculated the probabilities according to the formula \(\frac{J+1-r_j}{\sum_{j=1}^{J} j}\cdot 100\%\), with \(J\) being the number of products to be considered and \(r_j\) the ranking of product \(j\). The HOT included six products. Accordingly, the mechanism ensured a ((6 + 1 − 1)/21)⋅100 = 28.6% chance of receiving the product ranked first, a ((6 + 1 − 2)/21)⋅100 = 23.8% chance of receiving the product ranked second, and so forth.
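The payoff probabilities of the ranking task follow directly from the formula and can be checked with a few lines of Python (the function name is ours and purely illustrative).

```python
from fractions import Fraction

def ranking_lottery_probabilities(n_products):
    """Probability of receiving the product placed at each rank (1 = best)."""
    denominator = sum(range(1, n_products + 1))   # 21 for six products
    return {
        rank: Fraction(n_products + 1 - rank, denominator)
        for rank in range(1, n_products + 1)
    }

probs = ranking_lottery_probabilities(6)
percent = {rank: round(float(p) * 100, 1) for rank, p in probs.items()}
```

For six products, the probabilities sum to one and decrease linearly from 6/21 for the first rank to 1/21 for the last rank.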

Instead of the ranking HOT, the validation sample (condition V) received two less complex choice tasks in addition to the first three HOTs described above (e.g., Ding, 2007). Both additional HOTs covered four product options: one forced and one free-choice task. All bundles were presented in the same layout as in the manufacturer’s online store.

We assessed participants’ understanding of the reward procedure and excluded those participants from our analyses who did not provide the correct answers. After completing the conjoint part, participants evaluated the experienced study enjoyment using three items proposed by Johnson and Orme (2007) (from 1 = strongly disagree to 5 = strongly agree, e.g., “I would be very interested in taking another survey just like this in the future”).

Participants

An independent German market research firm recruited online participants. All of them passed the same screening procedure as before. A total of 600 participants completed the study. Applying screening criteria (i.e., implausibly fast or slow interview times, straight-lining in the form of selecting the same product position across each choice task) led to n = 556 cases. An additional n = 27 cases were excluded because the corresponding participants did not provide correct answers to questions assessing their understanding of the reward procedure. The final sample’s (n = 529) characteristics are 56% females, 23% students, 57% employees, 44% up to 30 years of age, 28% with a maximum of €999.99 net monthly income, from all federal states of Germany. They did not differ across conditions (smallest p = 0.170).

Analysis and results

Again, the four-stage (vs. three-stage) ACBC results in significantly better individual free-choice purchase predictions (pooled ACBC data in HOT 3: increase in hit rate of 5.24 pp, exact McNemar, p(one-tailed) = 0.049). Thus, we used the data from the four-stage ACBC for our analysis. Regarding HOTs 1–3, our analytical strategy of individual purchase predictions (i.e., MHP) followed Study 1. Regarding HOT 4 (ranking), we applied an ordered logistic regression with ranks assigned by participants to the predicted choice as the dependent variable. In addition, we calculated the average rank of predicted choice (Dong et al., 2010), as well as the average (Spearman) rank correlation between predicted and observed rankings by condition (Orme & Heft, 1999). Lastly, we assessed the predictive validity of the conjoint methods at the aggregate level, using the consequential choices from the validation sample.
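The exact McNemar test compares paired hits and misses of two models for the same participants and depends only on the discordant pairs. A minimal Python sketch, with hypothetical counts rather than the study's data, is:

```python
from math import comb

def exact_mcnemar_one_tailed(b, c):
    """One-tailed exact McNemar p-value.

    b: participants predicted correctly only by the four-stage model.
    c: participants predicted correctly only by the three-stage model.
    Under the null hypothesis, b follows Binomial(b + c, 0.5); the p-value
    is P(X >= b).
    """
    n = b + c
    return sum(comb(n, k) for k in range(b, n + 1)) / 2 ** n

# Hypothetical discordant counts for illustration only.
p_value = exact_mcnemar_one_tailed(5, 0)
```

Participants who are hit (or missed) by both models do not enter the test, which is why only the two discordant counts are needed.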

Panel C of Table 2 provides an overview of the results. All hit rates in HOTs 1–3 significantly exceed the chance level (all binomial test p-values < 0.013). In these HOTs, the concordance of predicted and actual choices is slight for hypothetical CBC (0.05 – 0.06), slight to fair for both incentive-aligned CBC (0.12 – 0.22) and hypothetical ACBC (0.13 – 0.29), and fair for incentive-aligned ACBC (0.22 – 0.37). In HOTs 1 to 3, we consistently find (1) hypothetical CBC performing worst (avg. hit rate: 22.54%, avg. MHP: 21.71%), (2) incentive-aligned CBC and hypothetical ACBC performing comparably well (avg. hit rates: 32.40% and 37.58%, avg. MHP: 26.47% and 32.77%), and (3) incentive-aligned ACBC having the best results (avg. hit rate: 45.67%, avg. MHP: 40.77%).

Next, we applied a generalized linear mixed-effects model to the MHP. This model incorporated a random intercept for each HOT and participant. Furthermore, the hit probabilities were again rank-based inverse normal transformed (linear model). The model contained two independent factors: the first contrasting incentive-aligned (1) against hypothetical (0) conjoint versions, and the second contrasting ACBC (1) against CBC (0). Results highlight a significant positive main effect of incentive alignment (β = 0.14, t(419) = 2.24, p(one-tailed) = 0.013) and a positive main effect of adaptive designs (β = 0.18, t(419) = 2.88, p(one-tailed) = 0.002). Considering both factors, the results indicate a stronger positive effect of adaptive designs compared to incentive alignment. An additional analysis finds no significant interaction between the two factors (β = 0.01, t(418) = 0.05, p = 0.960). This corresponds well with our theoretical understanding of both effects’ different, independent origins.

As in Study 1, we find a positive but insignificant difference between hypothetical ACBC and incentive-aligned CBC using estimated marginal means (β = 0.04, t(419) = 0.46, p = 0.644). Furthermore, incentive-aligned ACBC outperforms incentive-aligned CBC, as already shown by the significant main effect of the design factor.

Next, we examined the ability of the conjoint variants to reproduce participants’ ranking of products (HOT 4). The results of an ordered logistic regression on participants’ ranks of predicted choice align closely with our previous findings in that they indicate a positive main effect of incentive alignment (β = 0.29, z = 1.66, p(one-tailed) = 0.049) and an even more substantial main effect deriving from adaptive designs (β = 0.55, z = 3.12, p(one-tailed) < 0.001), without a significant interaction effect (β = 0.22, z = 0.63, p = 0.526). As previously, further comparisons reveal that hypothetical ACBC and incentive-aligned CBC do not significantly differ from each other (β = 0.26, z = 1.04, p = 0.297), but that incentive-aligned ACBC is superior to incentive-aligned CBC (see the main effect of the design factor).

An additional focus on participants’ rank correlations (observed vs. predicted ranking) highlights the greater benefit of adaptive designs over incentive alignment. Both ACBC variants exceed the CBC variants, while incentive alignment shows no strong effect. A linear regression model on the rank-based inverse normal transformed rank correlations confirms a significant positive effect of adaptive designs (β = 0.22, t(419) = 2.30, p(one-tailed) = 0.011). At the same time, incentive alignment is not significant (β = 0.05, t(419) = 0.52, p(one-tailed) = 0.302). Also, the interaction between the factors is insignificant (β = − 0.11, t(418) = − 0.56, p = 0.577). Incentive-aligned ACBC is significantly superior to the CBC variants (vs. hypothetical CBC: β = 0.27, t(419) = 1.96, p(one-tailed) = 0.025). Because this model does not include the interaction term, the significant main effect of adaptive (vs. static) designs also covers the incentive-aligned conditions.
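The two ranking-based measures, the rank of the predicted choice and the Spearman rank correlation, can be sketched as follows. The code assumes rankings without ties and uses hypothetical utilities; the function names are illustrative.

```python
def ranks_from_utilities(utilities):
    """Predicted ranking: rank 1 goes to the highest utility (no ties assumed)."""
    order = sorted(range(len(utilities)), key=lambda j: -utilities[j])
    ranks = [0] * len(utilities)
    for position, j in enumerate(order, start=1):
        ranks[j] = position
    return ranks

def spearman_rho(rank_a, rank_b):
    """Spearman rank correlation for two rankings without ties."""
    n = len(rank_a)
    d_squared = sum((a - b) ** 2 for a, b in zip(rank_a, rank_b))
    return 1 - 6 * d_squared / (n * (n ** 2 - 1))

def rank_of_predicted_choice(utilities, observed_rank):
    """Observed rank of the product with the highest predicted utility."""
    best = max(range(len(utilities)), key=utilities.__getitem__)
    return observed_rank[best]

# Hypothetical utilities and observed ranking for six HOT products.
utilities = [1.2, 0.4, 2.0, -0.3, 0.9, 0.1]
observed_rank = [1, 4, 2, 6, 3, 5]
predicted_rank = ranks_from_utilities(utilities)
rho = spearman_rho(predicted_rank, observed_rank)
top_rank = rank_of_predicted_choice(utilities, observed_rank)
```

In this example, the predicted favorite was ranked second by the participant, and the two rankings differ only in their top two positions.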

Finally, a prediction of the validation sample’s choice shares in HOTs 1–3 and HOTs 5–6 completes the picture (Table 2, Panel C). The MAE is, on average, lower in ACBC than CBC, with the lowest values most often found in incentive-aligned ACBC.

Discussion of Study 2 and motivation for Study 3

Study 2 highlights that both principles applied in isolation—incentive alignment and adaptive designs—significantly enhance the ability of traditional CBC to predict consequential product choices. When it comes to the comparison between hypothetical ACBC and incentive-aligned CBC, both methods again perform at a comparable level. Interestingly, Study 2’s analysis of the rank correlation between estimated and observed rankings in a consequential ranking task (Lusk et al., 2008) points to higher efficacy of adaptive designs (vs. incentive alignment). Study 2 further supports the superiority of incentive-aligned ACBC over hypothetical ACBC and incentive-aligned CBC.

Nevertheless, Study 2 is limited by applying the Direct mechanism (Ding et al., 2005). This incentive-aligning mechanism is best from a conceptual point of view (see Web Appendix A and the section “Theoretical background”); however, it necessitates that each shown concept in the conjoint study is available as study disbursement. In addition, within each of the previous studies, we implemented conjoint variants in which the brand of a product was not a focal attribute (i.e., there is no brand competition). Therefore, it is unclear whether applying incentive alignment in ACBC also leads to superior prediction accuracy in the presence of brand competition or strong brand preferences. Thus, Study 3 implements two improvements: (1) It uses a product category with strong brands (fitness trackers), and (2) it adopts the RankOrder mechanism for incentive alignment from the CBC context (Dong et al., 2010) to ACBC, which broadens the potential applications of incentive alignment in ACBC (see Web Appendix A).

Study 3: Consumers’ forced-choice preferences for fitness trackers

Experimental design and procedure

Attributes and levels for the study on low-budget fitness trackers included brand (Fitbit, TomTom, Withings), GPS (no GPS, connected GPS, integrated GPS), heart rate monitor (HRM) (no HRM, HRM – chest strap, integrated HRM), sleep detection (without or with sleep detection), food tracker app (not included, included), fitness coach app (not included, included), and price (summed price function). The attributes and levels align well with the advertised products at the time of the study.

The study consisted of two between-subjects conditions: (I) hypothetical vs. (II) incentive-aligned ACBC. The survey’s first part involved a four-stage ACBC, and the second part included a HOT with nine product alternatives. In both conditions, participants knew that a random mechanism would pick the “winners” (1% chance of winning) who would receive a fitness tracker based on their choices and €320 minus the product price. We used the RankOrder mechanism to identify the winning concept from Part 1 of the incentive-aligned ACBC. This mechanism requires a reward list of products (unbeknown to the participants) and an estimation of individual utility functions (Dong et al., 2010). The participant’s utility function is used to infer a preference rank order for the reward list. The product with the highest estimated utility is then used as study disbursement. This study’s reward list included nine products (identical to those in the HOT). This fact, however, was only revealed at the end of the study flow. After completing the ACBC, participants evaluated the survey using the same three-item scale as in Study 2 and reported their knowledge about fitness trackers on three items (Brucks, 1985). We asked subjects to rate their knowledge about fitness trackers in general and as compared to friends and experts (1: very little; 7: very much, Cronbach’s α = 0.91).
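The RankOrder logic described above can be sketched in Python. The sketch assumes a purely additive utility over attribute levels, omits price for brevity, and uses made-up partworths and a shortened reward list; none of these values come from the study.

```python
def rank_order_reward(partworths, reward_list):
    """Return the reward-list product with the highest estimated utility."""
    def utility(product):
        # Additive utility: sum of the partworths of the product's levels.
        return sum(partworths[attr][level] for attr, level in product.items())
    return max(reward_list, key=utility)

# Hypothetical individual partworths (two attributes only).
partworths = {
    "brand": {"Fitbit": 0.8, "TomTom": 0.1, "Withings": -0.9},
    "gps": {"no GPS": -0.5, "connected GPS": 0.1, "integrated GPS": 0.4},
}
# Hypothetical reward list (the study used nine products).
reward_list = [
    {"brand": "Fitbit", "gps": "no GPS"},
    {"brand": "TomTom", "gps": "integrated GPS"},
    {"brand": "Withings", "gps": "integrated GPS"},
]
reward = rank_order_reward(partworths, reward_list)
```

Because the reward depends on the estimated utility function rather than on a single task, the products shown during the interview need not be available for disbursement.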

Participants

We recruited German-speaking participants from thematic forums and an online platform for survey-sharing (www.surveycircle.com). This led to 375 participants starting the online interviews. Four participants were excluded because they did not reside in German-speaking countries. Another 130 did not complete the interview. Seven participants did not agree to the terms of the random payoff mechanism. We furthermore screened for implausible interview times (< 8 or > 120 min) and straight-lining (selecting the same product position in every Choice Tournament task), which led to the exclusion of 13 participants. Finally, the server protocols indicated that technical problems were encountered for twelve participants. We also excluded these cases, leading to a final net sample of n = 209. This sample comprised 66% females, 88% up to 30 years of age, and 81% with a maximum of €1499.99 net income per month. Participants in both conditions did not differ in terms of these criteria as well as occupation status and product knowledge (smallest p = 0.184).

Analysis and results

Both ACBCs predicted the HOT choices significantly better than expected by chance (i.e., hit rates > 1/9 = 11.11%, binomial test p-values < 0.001). The concordance of predicted and observed choices is fair for hypothetical (0.22) and incentive-aligned ACBC (0.28). Panel D of Table 2 highlights that, again, incentive-aligned ACBC outperforms the hypothetical ACBC regarding hit rates (45.19% vs. 36.19%) and MHPs (38.17% vs. 29.77%). Using a linear regression with the rank-based inverse normal transformed hit probabilities, we found that incentive alignment had, again, a significant, positive effect (β = 0.28, t(206) = 2.05, p(one-tailed) = 0.021). Moreover, the incentive-aligned ACBC achieves a lower MAE than the hypothetical ACBC (HOT: 3.51% vs. 5.94%). These results underscore the robustness of the positive effects of incentive alignment in ACBC, even in the presence of strong brands and another mechanism for incentive alignment.

Further analyses across all empirical studies

This section builds a connection between the four experiments to shed light on additional issues, namely the reliability of the gains in predictive validity, the processes contributing to the efficacy of incentive alignment and adaptive designs, the differences in extracted marketing implications when applying varying CBC variants, and further insights from a cost analysis.

Reliability of the effects on predictive validity

First, we employed a series of single-paper meta-analyses (SPM) (McShane & Böckenholt, 2017) on the differences in MHP (Web Appendix H presents full results). This gives us an idea of how stable the differences in predictive validity are. When focusing on the gains in predictive validity of incentive-aligned ACBC over incentive-aligned CBC (tested in Studies 1 and 2 and Supplemental Study A, n = 555), the meta-analytic effect remains significant (z = 5.68, p < 0.001). When focusing only on the comparison of incentive-aligned vs. hypothetical ACBC (n = 760), the meta-analytic effect is also significant: z = 4.54, p < 0.001. Finally, our empirical studies showed that incentive-aligned CBC and hypothetical ACBC performed on a comparable level. This conclusion did not change when applying a meta-analytic approach (tested in Studies 1 and 2 and Supplemental Study A, n = 568): z = 1.51, p = 0.131. Hence, unlike previous research that combined incentive alignment with design enhancements in static CBC (i.e., dual-response format, Wlömert & Eggers, 2016; realistic images, Hauser et al., 2019; Conjoint Poker, Toubia et al., 2012), the combination of both mechanisms (i.e., incentive alignment and adaptive designs) increases the predictive validity significantly beyond what each principle achieves in isolation.

Underlying processes

Following our theoretical reasoning, incentive alignment leads to greater deliberation while responding to a conjoint study. To assess deliberation, we analyzed participants’ processing times as an implicit measure of effort (e.g., Guo, 2022). This is challenging in ACBC as each participant follows an individualized study flow (see Web Appendix H for details). Therefore, we do not focus on raw times (which are only directly comparable for the BYO stage) but on times per choice task. Additionally, we analyze the data by ACBC stage to better understand the interplay of incentive alignment and adaptive designs.

As expected, incentive-aligned (vs. hypothetical) groups generally spent more time per task (between 2% and 10%). An SPM across all four studies with log-transformed times per task to approach a normal distribution (Morrin & Ratneshwar, 2003) shows a highly significant result of close to 5% (β = 0.046, z = 3.11, p = 0.002). Web Appendix H provides descriptive evidence that incentive alignment affects the number of tasks worked on by participants. In 3 out of 4 studies, participants in incentive-aligned ACBC conditions had more Screening stage tasks, implying a more critical assessment of specific attribute levels (so-called “must haves” and “unacceptables”). This explains why participants spent more time in total. Using an SPM, we also find a significant close to 6% longer time per task for this stage (β = 0.06, z = 2.08, p = 0.038). Interestingly, the largest significant increase in task time, exceeding 9%, is found in the BYO stage (β = 0.09, z = 2.323, p = 0.020). This is noteworthy, as all studies with significantly longer BYO times (i.e., Study 1, Suppl. Study A, and Study 2) implemented the Direct instead of the RankOrder mechanism. In sum, incentive alignment increases deliberation, and participants appear to understand the whole interview process of the ACBC. This conclusion is based on the observation that deliberation mostly increases in the BYO and Screening stages, which then has downstream effects on the concepts used in the Tournament stage. Notably, incentive alignment also significantly increases the deliberation in CBC (Study 2: β = 0.21, z = 2.30, p = 0.023).
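Since the times per task were log-transformed, a coefficient β translates into a relative change of exp(β) − 1. The following Python lines show this conversion for the three SPM coefficients reported above (the function name is illustrative).

```python
import math

def percent_change_from_log_coefficient(beta):
    """Relative change (in %) implied by a coefficient on a log-transformed outcome."""
    return (math.exp(beta) - 1) * 100

overall = percent_change_from_log_coefficient(0.046)    # all stages
screening = percent_change_from_log_coefficient(0.06)   # Screening stage
byo = percent_change_from_log_coefficient(0.09)         # BYO stage
```

The conversion yields roughly 4.7%, 6.2%, and 9.4%, in line with the "close to 5%", "close to 6%", and "exceeding 9%" statements.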

As the increase in deliberation varies across stages, the question arises whether each additional stage also yields different improvements in predictive validity. To address this question, we re-estimated HB MNL models for each ACBC condition and study with increasing numbers of stages and evaluated the predictive validity in terms of MHP (Web Appendix H presents all results; Web Appendix A outlines which combinations of stages for the data collection and incentivization are feasible). We also re-estimated the model for CBC conditions with increasing choice tasks (similar to Li et al., 2022). The results show that, in the CBC cases, we predominantly see a pattern similar to the one reported by Li et al. (2022). After a certain number of choice tasks, additional tasks do not improve and sometimes even reduce the predictive validity. In contrast, for incentive-aligned and hypothetical ACBC conditions, the highest predictive validity is predominantly obtained using all stages. This aligns with our theoretical reasoning that ACBC is less prone to task-specific participant adaptation, leading to higher predictive validity despite more choice tasks and longer interview times. However, the additional effect of incentive alignment in ACBC varies across stages. In forced-choice product predictions (Study 1, HOT 2 in Study 2, and Study 3), the BYO (+ 39.8%) and Tournament (+ 30.1%) stages benefit the most. For free-choice predictions (Suppl. Study A and HOT 3 in Study 2), the BYO (+ 16.5%) and Calibration (+ 22.8%) stages show the largest improvements.

We further reasoned that applying adaptive designs (vs. static CBC) leads to a better evaluation of the study. This was assessed in Study 2, where incentive-aligned and hypothetical CBC and ACBC were part of the study design. The results from the survey evaluation questionnaire (Cronbach’s α = 0.65) revealed significant differences among the four conjoint variants (MiACBC = 3.49, SD = 0.88, MiCBC = 3.14, SD = 0.97, MhACBC = 3.34, SD = 0.94, MhCBC = 3.14, SD = 0.78). More specifically, an ANOVA with the two factors incentive alignment (present vs. absent) and adaptive designs (ACBC vs. CBC) as well as their interaction showed that, as expected, the effect of adaptive designs was significant (F(1,418) = 9.69, p = 0.002), while the effects of incentive alignment (F(1,418) = 0.77, p = 0.381) and the interaction (F(1,418) = 0.64, p = 0.422) remain insignificant. Post-hoc tests without interaction effect indicate that incentive-aligned ACBC is more enjoyable than hypothetical CBC (t(419) = 2.78, p = 0.006) and incentive-aligned CBC (t(419) = 3.11, p = 0.002), but not more enjoyable than hypothetical ACBC (t(419) = 0.88, p = 0.381). We also applied the same scale in Study 3 (Cronbach’s α = 0.64): MiACBC = 3.88 [SD = 0.78] vs. MhACBC = 3.76 [SD = 0.79]. When comparing only the study evaluation between hypothetical vs. incentive-aligned ACBC, the meta-analytic effect (n = 419) across Studies 2 and 3 remains insignificant: Hedges’ g = 0.16, z = 1.61, p = 0.106.

Lastly, we also evaluated possible differences in the scale of the analysis (i.e., the ratio of the price coefficient and the magnitude of the error term as an indication of participants’ choice consistency; see, e.g., Hauser et al., 2019). To analyze differences caused by incentive alignment and adaptive designs, we compare the mean partworths of the relevant conditions in each study (Web Appendix H presents all details). An SPM of the main effect of incentive alignment on scale implies an insignificant effect (p = 0.086). However, comparing conditions with and without adaptive designs reveals significant increases in scale. The factor varies between 1.31 (Study 1) and 1.74 (Study 2). The meta-analytic effect is 1.50 (i.e., + 50%) and significant (p < 0.001). This time, we find significant heterogeneity (Q(3) = 9.08, p = 0.028).

These results suggest that adaptive (vs. static) designs present more relevant alternatives per task, leading to more consistent (i.e., less random or more deterministic) choices. This aligns with the finding from Study 2 that participants enjoy ACBC studies more than CBC studies. However, some stages in ACBC also contain only a few alternatives (e.g., binary choice in the Screening stage).

To summarize, incentive alignment increases deliberation, and, in the case of ACBC, this effect persists to the end of lengthy interviews. We also show that using the Direct mechanism (Ding et al., 2005) and (potentially) rewarding participants with their winning product from ACBC’s Choice Tournament affects all (interlinked) ACBC stages and not solely the Tournament stage. Therefore, consistent with our reasoning, incentive alignment and adaptive designs complement each other, which explains why we do not find a ceiling effect.

Marketing implications beyond predictive validity

We first analyzed reservation prices to provide insight into the different conjoint variants’ forecasts for marketing-relevant metrics (e.g., Gensler et al., 2012; Miller et al., 2011). The reservation price is conceptualized as a participant’s maximum WTP for a specific product instead of not purchasing the product. Compared to a simple rescaling of partworths in monetary space (WTP for features; see Web Appendix D), this measure considers the whole product’s preference while considering the competition with the no-purchase option. To compute reservation prices in each condition (and study), we chose a “popular” product that most customers would consider buying (see Web Appendix H).
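As an illustration of this concept, the following Python sketch computes a reservation price as the price at which a product's utility equals the utility of the no-purchase option. It assumes a linear price coefficient, which is a simplification for illustration and not necessarily the specification used in our models; all numbers are hypothetical.

```python
def reservation_price(nonprice_utility, none_utility, price_coefficient):
    """Price p* that solves nonprice_utility + price_coefficient * p* = none_utility.

    Assumes utility is linear in price with a negative coefficient.
    The result can be negative if the product is less attractive than
    not purchasing even at a price of zero.
    """
    if price_coefficient >= 0:
        raise ValueError("price coefficient must be negative")
    return (none_utility - nonprice_utility) / price_coefficient

# Hypothetical participant: non-price utility 3.0, none utility 1.0,
# and a price coefficient of -0.01 per euro.
rp = reservation_price(3.0, 1.0, -0.01)
```

In this example, the participant would buy the product up to a price of about €200.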

Figure 2 shows the demand curves for each study (panels) and condition (colors). Reservation prices are ordered from low to high, and for each possible price level (in steps of €1), we plot the fraction of customers who would still buy.
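A demand curve of this kind can be derived from individual reservation prices as sketched below. The reservation prices are made up, and the function name is illustrative.

```python
from statistics import median

def demand_curve(reservation_prices, max_price, step=1):
    """Fraction of participants whose reservation price is at least each price."""
    n = len(reservation_prices)
    return [
        (price, sum(rp >= price for rp in reservation_prices) / n)
        for price in range(0, max_price + 1, step)
    ]

# Hypothetical reservation prices in euros (one participant would not buy at zero).
reservation_prices = [-20, 50, 120, 300]
curve = dict(demand_curve(reservation_prices, max_price=300))
median_rp = median(reservation_prices)
```

A negative reservation price explains why the curve can start below 100% at a price of zero.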

The main conclusions are: (1) All demand curves have a similar shape (i.e., a downward-sloping sigmoid, with not all participants being predicted to buy even at a price of zero euros, and a long tail with relatively high values). (2) By tendency, incentive-aligned ACBC conditions have fewer participants with extremely high and unrealistic reservation prices than other (A)CBC variants. (3) Adaptive designs and incentive alignment appear to lower (median) reservation prices (vertical dashed lines), but adaptive designs have a more pronounced effect compared to incentive alignment. (4) Differences between the ACBC conditions are minor. In general, the order of conditions based on median reservation prices (\(\overline{rp}\)) is consistently the same across studies: \(\overline{rp}^{hCBC} > \overline{rp}^{iCBC} > \overline{rp}^{hACBC} > \overline{rp}^{iACBC}\). This general tendency that the combination of incentive alignment with other design improvements in CBC leads to the most conservative reservation prices aligns well with the results of Wlömert and Eggers (2016).

We also repeated the analysis using all possible combinations of attributes and their levels (i.e., the Cartesian product approach; see Gensler et al., 2012). We calculated the median reservation price for each combination and averaged the results within conditions. The order of the results remained the same. From a managerial point of view, the average reservation price estimated from the ACBC conditions is 10% (Supplemental Study A) to 50% (Study 2) lower than that estimated from the CBC conditions. For example, averaged median reservation prices in Study 2 (Food Processors) are €169 for incentive-aligned ACBC (the variant with the highest predictive validity), €193 for hypothetical ACBC, but €357 for incentive-aligned CBC and €370 for hypothetical CBC. Thus, while incentive-aligned CBC and hypothetical ACBC perform comparably in predictive validity, marketing managers would derive very different reservation prices from them.

Next, we analyzed the resulting price elasticities to investigate further the relationship between the methods and the resulting price sensitivity. Price elasticities also enable us to assess the face validity of the price responses. As elasticities depend on the specific choice scenario and competition between products matters (i.e., secondary demand effects via switching), we use (one of) the HOT(s) in each study, in addition to the simple binary product vs. no-purchase scenarios evaluated in the analysis of the reservation prices. Web Appendix H presents all details. In general, the price elasticities appear reasonable in magnitude (e.g., about -2 for pizza in Study 1 and between -2 and -5 for the durables in the other three studies), and these values are comparable to the empirical generalizations summarized in Bijmolt et al. (2005). In most cases, however, we find no clear pattern of differences in price elasticity between the conjoint variants, except for Study 2. Here, incentive alignment and/or adaptive designs increase the elasticity (in absolute terms), which might be a key driver of lower reservation prices.
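To make the notion of a scenario-dependent elasticity concrete, the following Python sketch simulates market shares under a multinomial logit rule with a no-purchase option and computes arc elasticities for a 1% price change. It is an illustrative simplification under stated assumptions: a linear price coefficient per respondent, first-choice probabilities averaged across respondents, and entirely hypothetical utilities and prices. It is not the procedure documented in Web Appendix H.

```python
import math


def logit_shares(utilities):
    """Multinomial logit choice probabilities for one respondent."""
    m = max(utilities)  # subtract the maximum for numerical stability
    expu = [math.exp(u - m) for u in utilities]
    total = sum(expu)
    return [e / total for e in expu]


def market_shares(respondents, prices):
    """Average logit shares across respondents; the last share is no-purchase."""
    totals = [0.0] * (len(prices) + 1)
    for base_utils, beta_price, v_none in respondents:
        utils = [b + beta_price * p for b, p in zip(base_utils, prices)]
        utils.append(v_none)
        for k, s in enumerate(logit_shares(utils)):
            totals[k] += s
    return [t / len(respondents) for t in totals]


def elasticity(respondents, prices, i, j, rel_change=0.01):
    """Arc elasticity of product i's share with respect to product j's price."""
    base = market_shares(respondents, prices)[i]
    bumped = list(prices)
    bumped[j] *= 1 + rel_change
    new = market_shares(respondents, bumped)[i]
    return ((new - base) / base) / rel_change


# Hypothetical respondents: (non-price utilities per product, price coef., no-purchase utility)
respondents = [
    ([3.0, 2.5], -0.010, 0.5),
    ([2.0, 3.5], -0.020, 1.0),
    ([4.0, 1.0], -0.015, 0.0),
]
prices = [150.0, 180.0]

own = elasticity(respondents, prices, 0, 0)    # own-price elasticity of product 0
cross = elasticity(respondents, prices, 0, 1)  # effect of product 1's price on product 0
print(round(own, 2), round(cross, 2))
```

Setting `i` equal to `j` yields an own-price elasticity, while different indices yield the cross-price elasticities that capture switching between products.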

Furthermore, we focused on Study 3, the only study with competing brands (Fitbit, TomTom, Withings). This is particularly interesting from a marketing perspective as one can interpret cross-price elasticities (i.e., how much price changes in one brand influence demand for other brands). Drawing on the extracted cross-price elasticities, we calculated each brand’s vulnerability and competitive clout (Kamakura & Russell, 1989). The former measure quantifies how vulnerable a product’s market share is to competitors’ price changes in a simulated market. The latter measures how much power a product has to draw market share from its competitors. As one consistent result, we find that not implementing incentive alignment in ACBC leads to underestimating competitive clout but not vulnerability. This highlights a crucial improvement achieved by incorporating incentive alignment in ACBC.
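Both measures can be illustrated with a short Python sketch. It assumes the commonly cited formulation of Kamakura and Russell (1989), in which clout and vulnerability are sums of squared cross-price elasticities; whether the present analysis applies exactly this variant is an assumption. The elasticity matrix and the brand labels A, B, and C are hypothetical and unrelated to the Study 3 estimates.

```python
def competitive_clout(eta, j):
    """Sum of squared effects of brand j's price on all other brands' shares.

    eta[i][j] is the elasticity of brand i's share w.r.t. brand j's price.
    """
    return sum(eta[i][j] ** 2 for i in range(len(eta)) if i != j)


def vulnerability(eta, i):
    """Sum of squared effects of all other brands' prices on brand i's share."""
    return sum(eta[i][j] ** 2 for j in range(len(eta)) if j != i)


# Hypothetical elasticity matrix for three brands (rows: share, columns: price)
brands = ["A", "B", "C"]
eta = [
    [-2.5, 0.4, 0.3],
    [0.6, -3.0, 0.2],
    [0.5, 0.1, -2.0],
]

clout = {b: competitive_clout(eta, k) for k, b in enumerate(brands)}
vuln = {b: vulnerability(eta, k) for k, b in enumerate(brands)}
for b in brands:
    print(b, round(clout[b], 2), round(vuln[b], 2))
```

In this made-up example, brand A has the highest clout because its price changes move the shares of B and C the most, while brand B is the most vulnerable.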

Finally, when studying markets with alternatives and the option of not buying any product, it is crucial to consider the impact of competition and the availability of the no-purchase option. The results suggest that ACBC (combined with incentive alignment) is better suited for inferring more realistic no-purchase utilities. Indeed, Table 2 already highlighted considerable improvements in the predictive accuracy in free-choice HOTs (Suppl. Study A and HOT 3 in Study 2). To understand this result better, we split the MHP by product and no-purchase hits (Web Appendix H presents all results). All (A)CBC variants predict no-purchase choices better than product choices. Still, the combination of incentive alignment and ACBC (with the Calibration stage) clearly boosts the predictive validity for no-purchase choices. ACBC outperforms CBC in predicting no-purchase choices even without incentive alignment, but the relative improvement through incentive alignment is larger in CBC than in ACBC. Combined with the results of forced-choice HOTs, we note that the superiority of (incentive-aligned) ACBC is further amplified in HOTs with a no-purchase option. Thus, implementing adaptive designs might result in more accurate managerial conclusions about general product demand, whereas implementing incentive alignment mainly has a positive effect on the prediction of actual product choices.

Cost comparison

We tasked two European market research companies to submit quotes for all conjoint variants (across all product categories). The calculation includes fixed costs per variant, such as setup cost, project management and programming, and analysis. It also includes variable costs that are a direct function of the number of participants surveyed, such as the panel fee, participants’ fee, and cost for incentive alignment (i.e., products and shipping fees). Furthermore, we assume a case where n = 100 individuals participate in each conjoint variant. We averaged the quotes from the two companies (Web Appendix F presents full details). Several insights emerge: First, with sample sizes comparable to the presented four studies, incentive-aligned ACBC imposes the highest and hypothetical CBC the lowest total cost. We conducted a sensitivity analysis to shed further light on the interplay between fixed and variable costs related to varying sample sizes. Incentive-aligned ACBC remains the most expensive and hypothetical CBC the cheapest variant, irrespective of the sample size. The difference between both variants increases with increasing sample sizes due to the significant variable cost for incentive alignment.

Second, the ranking of conjoint variants regarding total cost changes between a sample size of n = 200 and n = 300. With smaller sample sizes, incentive-aligned CBC is cheaper than hypothetical ACBC due to its lower fixed cost. For larger sample sizes, the ranking reverses, as the accumulated variable cost of incentive-aligned CBC outweighs the higher fixed cost of hypothetical ACBC. In short, large sample sizes favor hypothetical ACBC over incentive-aligned CBC, whereas small sample sizes favor incentive-aligned CBC.
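The reversal follows from a simple linear cost model, total cost = fixed cost + variable cost per participant × n. The Python sketch below computes the break-even sample size for two variants. All cost figures are hypothetical placeholders chosen for illustration and are not the quotes reported in Web Appendix F.

```python
def total_cost(fixed, variable, n):
    """Total study cost for n participants under a linear cost model."""
    return fixed + variable * n


def break_even_n(fixed_a, variable_a, fixed_b, variable_b):
    """Sample size at which variants A and B cost the same."""
    if variable_a == variable_b:
        raise ValueError("equal variable costs: the cost lines never cross")
    return (fixed_b - fixed_a) / (variable_a - variable_b)


# Hypothetical figures in euros (not the quotes from the market research companies)
icbc = {"fixed": 10_000, "variable": 40}   # lower setup cost, costly incentives
hacbc = {"fixed": 15_000, "variable": 20}  # higher setup cost, no incentives

n_star = break_even_n(icbc["fixed"], icbc["variable"],
                      hacbc["fixed"], hacbc["variable"])
print(n_star)  # below this n, the variant with the lower fixed cost is cheaper
```

With these placeholder values, the variant with the lower fixed cost is cheaper below the break-even point and more expensive above it, which mirrors the pattern described for incentive-aligned CBC and hypothetical ACBC.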

Third, managers might ask how much money one must spend to “purchase” one percentage point of prediction accuracy above the chance level. After all, in market research, money is invested in learning something that goes beyond chance-level prediction. The answer depends on the conjoint variant: €511.68 (hypothetical CBC), €305.64 (incentive-aligned CBC), €259.88 (hypothetical ACBC), and €211.19 (incentive-aligned ACBC). These figures paint a different picture than the absolute cost. Specifically, with sample sizes similar to our four studies, hypothetical and incentive-aligned ACBCs are much more cost-effective than their CBC counterparts.

Fourth, the learnings from a sensitivity analysis for these figures are three-fold, as the initial ranking of conjoint variants changes completely with increasing sample size. For sample sizes larger than n = 400, the cost for one percentage point of prediction accuracy above the chance level in incentive-aligned ACBC exceeds that of hypothetical ACBC. Furthermore, above a sample size of between n = 600 and n = 700, the cost of incentive-aligned CBC exceeds that of hypothetical CBC. In conclusion, if larger samples do not substantially improve predictive validity, there seems to be an upper limit for the relative cost advantage of incentive alignment in conjoint studies with very high sample sizes. Overall, due to their better predictions per se, both ACBC variants are superior to their static counterparts.
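The cost-per-accuracy metric and its sensitivity to sample size can be sketched as follows. The Python example assumes a linear cost model and a hit rate that does not change with sample size. All numbers are hypothetical and are not the figures from Web Appendix F, so the resulting crossover point is illustrative only.

```python
def cost_per_point(total_cost, hit_rate_pct, chance_pct):
    """Cost of one percentage point of hit rate above the chance level."""
    gain = hit_rate_pct - chance_pct
    if gain <= 0:
        raise ValueError("hit rate must exceed the chance level")
    return total_cost / gain


def crossover_n(fixed_a, var_a, gain_a, fixed_b, var_b, gain_b):
    """Sample size at which variants A and B have equal cost per point.

    Solves (fixed_a + var_a * n) / gain_a == (fixed_b + var_b * n) / gain_b,
    assuming the accuracy gains do not depend on n.
    """
    denominator = gain_b * var_a - gain_a * var_b
    if denominator == 0:
        raise ValueError("cost-per-point lines are parallel")
    return (gain_a * fixed_b - gain_b * fixed_a) / denominator


# Hypothetical inputs: (fixed cost, variable cost, points above chance)
iacbc = (18_000, 45, 45)  # more accurate, but incentives cost per participant
hacbc = (15_000, 20, 30)

at_100_iacbc = cost_per_point(iacbc[0] + iacbc[1] * 100, 25 + iacbc[2], 25)
at_100_hacbc = cost_per_point(hacbc[0] + hacbc[1] * 100, 25 + hacbc[2], 25)
n_cross = crossover_n(*iacbc, *hacbc)
print(at_100_iacbc, round(at_100_hacbc, 2), n_cross)
```

Because the accuracy gain is held constant, each variant's cost per point grows linearly in n, and the variant with the higher variable cost per point of accuracy eventually becomes the more expensive one.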

General discussion

Summary of findings

CBC supports managerial decision-making in many fields (e.g., Keller et al., 2021; Schmidt & Bijmolt, 2020). Its broad and ongoing popularity leads to steady improvements to this research toolbox. We analyzed two principles introduced to improve CBC: incentive alignment (e.g., Ding et al., 2005) and adaptive designs, specifically adaptive choice-based conjoint analysis (e.g., Johnson & Orme, 2007).

This study is the first to combine both principles and benchmark them against each other concerning their predictive validity. It further focuses on the underlying process differences (i.e., the extent of deliberation, experienced study enjoyment, choice consistency) and differences in various marketing implications (e.g., estimated reservation prices). Finally, it highlights the conditions under which applying incentive alignment, adaptive designs, or both can lower the cost of the information obtained in market research studies that predict product purchases. A series of four experiments (online and lab) using diverse product categories and consumer samples delivers important insights.

Independent of the applied validation procedure (predicting participants’ consequential product choices, incentive-aligned product rankings, or an independent validation sample’s choice shares), our results demonstrate that incentive-aligned ACBC produces top-tier predictions and, thus, outperforms incentive-aligned (and hypothetical) CBC and hypothetical ACBC. Likewise, incentive-aligned CBC and hypothetical ACBC perform equally well. Interestingly, the results point to a greater benefit from applying adaptive designs than incentive alignment. This is particularly true when predicting participants’ product rankings. In this case, adaptive designs have a major positive effect, while the incentive alignment effect is negligible. Apart from this, our results provide no evidence of any (negative) interaction of the principles. This supports the notion that the two influences stem from independent underlying psychological processes and speaks against a ceiling effect: Adaptive (vs. static) designs lead to enhanced study enjoyment, which appears to materialize in the form of higher choice consistency (as displayed by a higher scale). Incentive-aligned (vs. hypothetical) CBC comes with more deliberation without resulting in higher enjoyment. We show that adaptive designs can help mitigate the adverse effects of additional choice tasks in CBC studies (Li et al., 2022).

Our results support the positive effect of the optional Calibration stage on the predictive validity of ACBC. However, the Calibration stage has often been ignored in academic ACBC applications (e.g., Bauer et al., 2015; Huang & Luo, 2016).

Overall, we conclude that incentive-aligned ACBC leads to the best predictions of future purchase decisions. Against this background, we further analyzed issues beyond the mere differences in predictive validity. Specifically, our results show that market researchers may extract exaggerated estimates of reservation prices from a CBC study when not applying adaptive designs. Thus, neither incentive-aligned nor hypothetical CBC seems to adequately capture consumers’ preference for the no-purchase option. The same also holds for implications regarding competition in markets, as applying static CBC (vs. adaptive designs) also leads to underestimating price elasticities. Thus, managers should be aware of the trade-offs when applying suboptimal CBC variants, as they could end up with a biased view of general demand and consumers’ mean WTP for the products they are analyzing.

When looking at the general differences in the estimated utility functions (see Web Appendix D), some tendencies appear for the application of adaptive (vs. static) CBCs: First, we see a reduced preference for unusual attribute levels (e.g., garlic on a pizza or a special color version of the PlayStation 4 bundle). Second, attribute levels not directly related to the core product usage (e.g., an additional recipe book for a food processor) also have lower utilities.

Finally, when taking a cost perspective, which the literature has ignored so far, our sensitivity analysis suggests that the ranking of total cost from high to low changes from incentive-aligned ACBC > incentive-aligned CBC > hypothetical ACBC > hypothetical CBC to incentive-aligned ACBC > hypothetical ACBC > incentive-aligned CBC > hypothetical CBC when the sample size increases to approximately n = 200 or higher. In other words, implementing both adaptive designs and incentive alignment becomes less attractive with large sample sizes. This picture changes completely when focusing on the cost per unit of prediction accuracy. An analysis of the cost per percentage point of predictive validity above chance-level prediction highlights that at n = 100, the ranking of variants (from highest cost to lowest cost) is hypothetical CBC > incentive-aligned CBC > hypothetical ACBC > incentive-aligned ACBC. With sample sizes increasing above n = 400, hypothetical CBC and ACBC become relatively cheaper, and at sample sizes above approximately n = 650, the ranking of cost per prediction accuracy changes to incentive-aligned CBC > hypothetical CBC > incentive-aligned ACBC > hypothetical ACBC. Thus, for studies with very large sample sizes, there appears to be an upper limit to the usefulness of incentive alignment when the predictive validity does not increase with sample size. At the same time, applying adaptive vs. static designs becomes relatively more attractive.

Managerial implications

When focusing solely on the absolute gains in predictive validity, the implications of our research are straightforward. However, a more integrative discussion of the strengths and weaknesses of conjoint variants is mandatory for market research managers. To this end, Table 3 summarizes the various aspects that might guide managers in deciding on the most appropriate (A)CBC variant under the right circumstances. It becomes evident that this undertaking is more complex than simply following a straightforward decision tree. Nonetheless, managers can draw upon various criteria to aid them in this process.

Subsequently, we provide a breakdown of the essential matters that warrant careful consideration:

(1) Regarding feasibility, ACBC is not a “silver bullet.” Adaptive CBC surveys rely on computerized interviews, which may be unavailable in some situations or may be impractical or not economically viable. This is the case, for example, when a survey is conducted in environments such as care homes. CBC surveys are more flexible since they can be administered in paper-and-pencil and computer formats.

(2)