Abstract

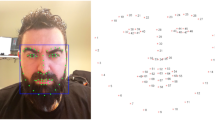

A virtual cosmetics try-on system provides a realistic try-on experience for consumers and helps them choose suitable cosmetics efficiently. In this article, we propose ARCosmetics, a real-time augmented reality virtual cosmetics try-on system for smartphones that takes speed, accuracy, and stability into consideration at each step to ensure a better user experience. A novel and very fast face tracking method uses the face detection box and the average position of the facial landmarks to estimate the face location in consecutive frames. A dynamic weight Wing loss is introduced that assigns a dynamic weight to every landmark according to its estimated error during training. It balances the attention paid to small-, medium-, and large-range errors and thus increases accuracy and robustness. We also design a weighted average method that uses the information of the adjacent frame for landmark refinement, guaranteeing the stability of the generated landmarks. Extensive experiments on a large 106-point facial landmark dataset and the 300-VW dataset demonstrate the superior performance of the proposed method compared with other state-of-the-art methods. We also conducted user satisfaction studies to further verify the efficiency and effectiveness of the ARCosmetics system.
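The abstract does not give the formulas behind the loss or the landmark refinement, so the following is only a minimal sketch of the two ideas under stated assumptions. `wing` is the standard piecewise Wing loss; the per-landmark weighting in `weighted_wing_loss` (weight equals a landmark's error divided by the mean error) and the blending factor `alpha` in `smooth_landmarks` are hypothetical choices made for illustration and are not the authors' definitions. The function names and default parameter values are likewise assumptions.

```python
import math


def wing(x, w=10.0, eps=2.0):
    """Standard Wing loss for one residual x: log-shaped near zero, linear beyond w."""
    ax = abs(x)
    if ax < w:
        return w * math.log(1.0 + ax / eps)
    # Constant that makes the two pieces meet at |x| = w.
    c = w - w * math.log(1.0 + w / eps)
    return ax - c


def weighted_wing_loss(pred, target, w=10.0, eps=2.0):
    """Wing loss with one weight per landmark.

    pred, target: lists of (x, y) landmark coordinates of equal length.
    ASSUMPTION: weight = landmark error / mean error. This is an illustrative
    scheme, not the dynamic weighting defined in the article.
    """
    per_point = [
        wing(px - tx, w, eps) + wing(py - ty, w, eps)
        for (px, py), (tx, ty) in zip(pred, target)
    ]
    errors = [
        math.hypot(px - tx, py - ty)
        for (px, py), (tx, ty) in zip(pred, target)
    ]
    mean_err = sum(errors) / len(errors)
    if mean_err == 0.0:
        return 0.0  # perfect prediction, nothing to weight
    weights = [e / mean_err for e in errors]
    return sum(wt * loss for wt, loss in zip(weights, per_point)) / len(per_point)


def smooth_landmarks(prev, curr, alpha=0.6):
    """Blend the current frame's landmarks with the previous frame's.

    ASSUMPTION: a single fixed factor alpha for all landmarks; the article's
    weighted average may choose its weights differently.
    """
    return [
        (alpha * cx + (1.0 - alpha) * px, alpha * cy + (1.0 - alpha) * py)
        for (px, py), (cx, cy) in zip(prev, curr)
    ]
```

In this sketch a larger `alpha` follows the current frame more closely, while a smaller one favors the previous frame and suppresses jitter at the cost of lag.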

Acknowledgements

This work was supported in part by the National Key R&D Program of China (2021ZD0140407) and in part by the National Natural Science Foundation of China (Grant No. U21A20523).

Author information

Shan An (Senior Member, IEEE) received a Bachelor’s degree in automation engineering from Tianjin University, China in 2007 and a Master’s degree in control science from Shandong University, China in 2010. He is currently the team leader of the vision learning group of Tech. & Data Center, JD.COM Inc. He has served as a program committee member for ACM Multimedia (2019–2022), AAAI (2022), and IJCAI (2021–2024). He is a reviewer for more than twenty prestigious journals and conferences, such as IEEE TRANSACTIONS ON NEURAL NETWORKS AND LEARNING SYSTEMS, IEEE TRANSACTIONS ON MULTIMEDIA, Pattern Recognition, CVPR, ICCV, and ICRA. His research interests include computer vision in AR and robotics, image retrieval, and image segmentation.

Jianye Chen received the BE degree and Master’s degree from the Beijing University of Posts and Telecommunications, China in 2015 and 2018, respectively. He is currently working as a computer vision algorithm engineer with the Tech. & Data Center in JD.COM Inc. His current research interests include face alignment and video analysis.

Zhaoqi Zhu received the Bachelor’s degree in Electronic Information Engineering from the Hebei University of Industry, China and the Master’s degree in Electronic and Communication Engineering from Tianjin University, China in 2015 and 2018, respectively. He is currently working as a computer vision algorithm engineer with the Tech. & Data Center in JD.COM Inc. His research interests include computer vision, deep learning, and recommendation systems.

Fangru Zhou received the Bachelor’s degree in information and computing science, and the Master’s degree in mathematics and applied mathematics from Northeastern University, China in 2016 and 2019, respectively. She is currently an algorithm engineer with the Tech. & Data Center in JD.COM Inc. Her research interests include computer vision and neural network applications.

Yuxing Yang received the Bachelor’s degree in communication engineering, and the Master’s degree in computer science from Beijing University of Posts and Telecommunications, China in 2016 and 2019, respectively. He is currently an algorithm engineer with the Tech. & Data Center in JD.COM Inc. His research interests include computer vision in AR, deep learning and image processing.

Yuqing Ma received the PhD degree in 2021 from Beihang University, China. She is currently working as a PostDoc at the School of Computer Science and Engineering, Beihang University, China. Her current research interests include computer vision, few-shot learning, and open world detection.

Xianglong Liu (Member, IEEE) received the BS and PhD degrees in computer science from Beihang University, China in 2008 and 2014, respectively. From 2011 to 2012, he visited the Digital Video and Multimedia (DVMM) Lab, Columbia University, USA as a joint PhD student. He is currently a Professor with the School of Computer Science and Engineering, Beihang University, China. He has published over 60 research papers at top venues such as the IEEE TRANSACTIONS ON IMAGE PROCESSING, the IEEE TRANSACTIONS ON CYBERNETICS, the IEEE TRANSACTIONS ON NEURAL NETWORKS AND LEARNING SYSTEMS, the Conference on Computer Vision and Pattern Recognition, the International Conference on Computer Vision, and the Association for the Advancement of Artificial Intelligence. His research interests include machine learning, computer vision, and multimedia information retrieval.

Haogang Zhu (Member, IEEE) received the PhD degree from University College London, UK. He has been a Professor with the School of Computer Science and Engineering, Beihang University, China since 2015. His main research interests include Bayesian analysis, machine learning, and image understanding. He has worked as the Principal Investigator and Data Scientist on various projects sponsored by research councils and industry leaders such as NIHR UK, Fight for Sight, Heidelberg Engineering, Pfizer, Novartis, and Carl Zeiss Meditec. His research has led to several patents and tools/systems used by research institutes and industrial companies.

Cite this article

An, S., Chen, J., Zhu, Z. et al. ARCosmetics: a real-time augmented reality cosmetics try-on system. Front. Comput. Sci. 17, 174706 (2023). https://doi.org/10.1007/s11704-022-2059-8

DOI: https://doi.org/10.1007/s11704-022-2059-8