Abstract

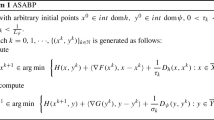

In this paper, we consider a nonsmooth convex finite-sum problem with a conic constraint. To overcome the challenge of projecting onto the constraint set and computing the full (sub)gradient, we introduce a primal-dual incremental gradient scheme in which each primal-dual sub-iteration uses only one component function and two constraints, processed in cyclic order. We demonstrate an asymptotic sublinear rate of convergence in terms of suboptimality and infeasibility, which improves on state-of-the-art incremental gradient schemes in this setting. Numerical results suggest that the proposed scheme compares well with competing methods.
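To make the idea of a cyclic primal-dual sub-iteration concrete, the following is a minimal sketch of a generic incremental primal-dual subgradient loop. It is not the scheme analyzed in the paper. It assumes linear inequality constraints `A x - b <= 0` (the nonnegative-orthant special case of a conic constraint), touches one constraint per sub-iteration rather than two, and uses a hypothetical diminishing step size `step0 / sqrt(k)` with step-weighted averaging of the primal iterates. The names `primal_dual_incremental` and `abs_subgrad`, and all parameters, are illustrative assumptions.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def primal_dual_incremental(
    subgrads: Sequence[Callable[[Vector], Vector]],  # subgrads[i](x): a subgradient of f_i at x
    A: Sequence[Sequence[float]],                    # constraint rows a_j (assumed linear)
    b: Sequence[float],                              # constraints read a_j . x - b_j <= 0
    x0: Vector,
    cycles: int = 20000,
    step0: float = 0.2,                              # hypothetical step-size constant
) -> Tuple[Vector, Vector]:
    """Generic cyclic primal-dual subgradient sketch (not the paper's exact method)."""
    n, m = len(subgrads), len(b)
    x = list(x0)
    y = [0.0] * m                # dual multipliers, kept nonnegative
    x_avg = [0.0] * len(x)       # step-weighted average of primal iterates
    weight = 0.0
    t = 0                        # global sub-iteration counter, cycles over constraints
    for k in range(1, cycles + 1):
        step = step0 / math.sqrt(k)
        for i in range(n):
            j = t % m
            t += 1
            s = subgrads[i](x)
            # Primal step on f_i(x) + y_j * (a_j . x - b_j): one component, one constraint.
            x = [xc - step * (sc + y[j] * ac) for xc, sc, ac in zip(x, s, A[j])]
            # Dual ascent step on constraint j, projected onto y_j >= 0.
            violation = sum(ac * xc for ac, xc in zip(A[j], x)) - b[j]
            y[j] = max(0.0, y[j] + step * violation)
            # Running step-weighted average of x.
            weight += step
            x_avg = [av + step * (xc - av) / weight for av, xc in zip(x_avg, x)]
    return x_avg, y


def abs_subgrad(a: float) -> Callable[[Vector], Vector]:
    """Subgradient of the 1-D toy component f(x) = |x[0] - a|."""
    def g(x: Vector) -> Vector:
        d = x[0] - a
        return [0.0 if d == 0 else math.copysign(1.0, d)]
    return g


# Toy problem: minimize |x - 3| + |x - 5| subject to x <= 2.
# The constrained minimizer is x = 2, so the averaged iterate should land near 2.
x_hat, y_hat = primal_dual_incremental(
    [abs_subgrad(3.0), abs_subgrad(5.0)], A=[[1.0]], b=[2.0], x0=[0.0]
)
print(x_hat, y_hat)
```

The averaged iterate is returned instead of the last one because the raw primal-dual iterates of such a scheme tend to oscillate around the saddle point, while their weighted average settles down.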

Cite this article

Jalilzadeh, A. Primal-dual incremental gradient method for nonsmooth and convex optimization problems. Optim Lett 15, 2541–2554 (2021). https://doi.org/10.1007/s11590-021-01752-x

DOI: https://doi.org/10.1007/s11590-021-01752-x