Abstract

Comprehending speech in the presence of background noise is of great importance in human life. Over the past decades, a large body of psychological, cognitive, and neuroscientific research has explored the neurocognitive mechanisms of speech-in-noise comprehension. However, owing to the low ecological validity of the speech stimuli and experimental paradigms, as well as insufficient attention to high-order linguistic and extralinguistic processes, much remains unknown about how the brain processes noisy speech in real-life scenarios. A recently emerging approach, the second-person neuroscience approach, provides a novel conceptual framework. It measures the neural activity of both the speaker and the listener, and estimates speaker-listener neural coupling by treating the speaker’s production-related neural activity as a standardized reference. The second-person approach not only promotes the use of naturalistic speech but also allows free communication between speaker and listener, as in a close-to-life context. In this review, we first briefly summarize previous findings on how the brain processes speech in noise; we then introduce the principles and advantages of the second-person neuroscience approach and discuss its implications for unraveling the linguistic and extralinguistic processes involved in speech-in-noise comprehension; finally, we conclude by raising some critical issues and calling for more research interest in the second-person approach, which would further extend present knowledge of how people comprehend speech in noise.

References

Alain C, Du Y, Bernstein LJ et al (2018) Listening under difficult conditions: an activation likelihood estimation meta-analysis. Hum Brain Mapp 39(7):2695–2709. https://doi.org/10.1002/hbm.24031

Alexandrou AM, Saarinen T, Makela S et al (2017) The right hemisphere is highlighted in connected natural speech production and perception. NeuroImage 152:628–638. https://doi.org/10.1016/j.neuroimage.2017.03.006

Anderson S, Kraus N (2010) Sensory-cognitive interaction in the neural encoding of speech in noise: a review. J Am Acad Audiol 21(9):575–585. https://doi.org/10.3766/jaaa.21.9.3

Armeni K, Willems RM, Frank SL (2017) Probabilistic language models in cognitive neuroscience: promises and pitfalls. Neurosci Biobehav Rev 83:579–588. https://doi.org/10.1016/j.neubiorev.2017.09.001

Badal VD, Nebeker C, Shinkawa K et al (2021) Do words Matter? Detecting social isolation and loneliness in older adults using Natural Language Processing. Front Psychiatry 12:728732. https://doi.org/10.3389/fpsyt.2021.728732

Broderick MP, Anderson AJ, Di Liberto GM et al (2018) Electrophysiological Correlates of Semantic Dissimilarity reflect the comprehension of Natural, Narrative Speech. Curr Biol 28(5):803–809e803. https://doi.org/10.1016/j.cub.2018.01.080

Coffey EBJ, Mogilever NB, Zatorre RJ (2017) Speech-in-noise perception in musicians: a review. Hear Res 352:49–69. https://doi.org/10.1016/j.heares.2017.02.006

Crosse MJ, Di Liberto GM, Bednar A et al (2016) The multivariate temporal response function (mTRF) toolbox: a MATLAB Toolbox for relating neural signals to continuous stimuli. Front Hum Neurosci 10:604. https://doi.org/10.3389/fnhum.2016.00604

Czeszumski A, Eustergerling S, Lang A et al (2020) Hyperscanning: a valid method to study neural inter-brain underpinnings of Social Interaction. Front Hum Neurosci 14:39. https://doi.org/10.3389/fnhum.2020.00039

Dai B, Chen C, Long Y et al (2018) Neural mechanisms for selectively tuning in to the target speaker in a naturalistic noisy situation. Nat Commun 9(1):2405. https://doi.org/10.1038/s41467-018-04819-z

de Heer WA, Huth AG, Griffiths TL et al (2017) The hierarchical cortical organization of human speech processing. J Neurosci 37(27):6539–6557. https://doi.org/10.1523/Jneurosci.3267-16.2017

Dieler AC, Tupak SV, Fallgatter AJ (2012) Functional near-infrared spectroscopy for the assessment of speech related tasks. Brain Lang 121(2):90–109. https://doi.org/10.1016/j.bandl.2011.03.005

Dikker S, Silbert LJ, Hasson U et al (2014) On the same wavelength: predictable language enhances speaker-listener brain-to-brain synchrony in posterior superior temporal gyrus. J Neurosci 34(18):6267–6272. https://doi.org/10.1523/JNEUROSCI.3796-13.2014

Ding N, Simon JZ (2012) Emergence of neural encoding of auditory objects while listening to competing speakers. Proc Natl Acad Sci U S A 109(29):11854–11859. https://doi.org/10.1073/pnas.1205381109

Ding N, Simon JZ (2013) Adaptive temporal encoding leads to a background-insensitive cortical representation of speech. J Neurosci 33(13):5728–5735. https://doi.org/10.1523/JNEUROSCI.5297-12.2013

Dryden A, Allen HA, Henshaw H et al (2017) The association between cognitive performance and speech-in-noise perception for adult listeners: a systematic literature review and meta-analysis. Trends Hear. https://doi.org/10.1177/2331216517744675

Du Y, Buchsbaum BR, Grady CL et al (2014) Noise differentially impacts phoneme representations in the auditory and speech motor systems. Proc Natl Acad Sci U S A 111(19):7126–7131. https://doi.org/10.1073/pnas.1318738111

Du Y, Buchsbaum BR, Grady CL et al (2016) Increased activity in frontal motor cortex compensates impaired speech perception in older adults. Nat Commun 7:12241. https://doi.org/10.1038/ncomms12241

Du Y, Zatorre RJ (2017) Musical training sharpens and bonds ears and tongue to hear speech better. Proc Natl Acad Sci U S A 114(51):13579–13584. https://doi.org/10.1073/pnas.1712223114

Etard O, Reichenbach T (2019) Neural Speech Tracking in the Theta and in the Delta frequency Band differentially encode clarity and comprehension of Speech in noise. J Neurosci 39(29):5750–5759. https://doi.org/10.1523/JNEUROSCI.1828-18.2019

Fedorenko E, Blank IA (2020) Broca’s area is not a Natural Kind. Trends Cogn Sci 24(4):270–284. https://doi.org/10.1016/j.tics.2020.01.001

Friederici AD (2012) The cortical language circuit: from auditory perception to sentence comprehension. Trends Cogn Sci 16(5):262–268. https://doi.org/10.1016/j.tics.2012.04.001

Garrod S, Pickering MJ (2004) Why is conversation so easy? Trends Cogn Sci 8(1):8–11. https://doi.org/10.1016/j.tics.2003.10.016

Golestani N, Hervais-Adelman A, Obleser J et al (2013) Semantic versus perceptual interactions in neural processing of speech-in-noise. NeuroImage 79:52–61. https://doi.org/10.1016/j.neuroimage.2013.04.049

Golumbic EMZ, Ding N, Bickel S et al (2013) Mechanisms underlying selective neuronal Tracking of attended Speech at a “Cocktail Party”. Neuron 77(5):980–991. https://doi.org/10.1016/j.neuron.2012.12.037

Grand G, Blank IA, Pereira F et al (2022) Semantic projection recovers rich human knowledge of multiple object features from word embeddings. Nat Hum Behav. https://doi.org/10.1038/s41562-022-01316-8

Guediche S, Blumstein SE, Fiez JA et al (2014) Speech perception under adverse conditions: insights from behavioral, computational, and neuroscience research. Front Syst Neurosci 7:126. https://doi.org/10.3389/fnsys.2013.00126

Hagoort P (2019) The neurobiology of language beyond single-word processing. Science 366:55–58

Hamilton LS, Huth AG (2020) The revolution will not be controlled: natural stimuli in speech neuroscience. Lang Cogn Neurosci 35(5):573–582. https://doi.org/10.1080/23273798.2018.1499946

Hamilton AFC (2021) Hyperscanning: beyond the hype. Neuron 109(3):404–407. https://doi.org/10.1016/j.neuron.2020.11.008

Hanulikova A (2021) Do faces speak volumes? Social expectations in speech comprehension and evaluation across three age groups. PLoS ONE 16(10):e0259230. https://doi.org/10.1371/journal.pone.0259230

Hasson U, Ghazanfar AA, Galantucci B et al (2012) Brain-to-brain coupling: a mechanism for creating and sharing a social world. Trends Cogn Sci 16(2):114–121. https://doi.org/10.1016/j.tics.2011.12.007

Hasson U, Frith CD (2016) Mirroring and beyond: coupled dynamics as a generalized framework for modelling social interactions. Philos Trans R Soc Lond B Biol Sci 371(1693). https://doi.org/10.1098/rstb.2015.0366

Hasson U, Egidi G, Marelli M et al (2018) Grounding the neurobiology of language in first principles: the necessity of non-language-centric explanations for language comprehension. Cognition 180:135–157. https://doi.org/10.1016/j.cognition.2018.06.018

Healy EW, Yoho SE (2016) Difficulty understanding speech in noise by the hearing impaired: underlying causes and technological solutions. In: Annual international conference IEEE engineering in medicine and biology society 2016, pp 89–92. https://doi.org/10.1109/EMBC.2016.7590647

Hennessy S, Mack WJ, Habibi A (2022) Speech-in-noise perception in musicians and non-musicians: a multi-level meta-analysis. Hear Res 416:108442. https://doi.org/10.1016/j.heares.2022.108442

Hernandez LM, Green SA, Lawrence KE et al (2020) Social attention in Autism: neural sensitivity to Speech over background noise predicts encoding of Social Information. Front Psychiatry 11:343. https://doi.org/10.3389/fpsyt.2020.00343

Herrmann B, Schlichting N, Obleser J (2014) Dynamic range adaptation to spectral stimulus statistics in human auditory cortex. J Neurosci 34(1):327–331. https://doi.org/10.1523/Jneurosci.3974-13.2014

Hickok G, Poeppel D (2007) The cortical organization of speech processing. Nat Rev Neurosci 8(5):393–402. https://doi.org/10.1038/nrn2113

Hickok G, Houde J, Rong F (2011) Sensorimotor integration in speech processing: computational basis and neural organization. Neuron 69(3):407–422. https://doi.org/10.1016/j.neuron.2011.01.019

Hitczenko K, Mazuka R, Elsner M et al (2020) When context is and isn’t helpful: a corpus study of naturalistic speech. Psychon Bull Rev 27(4):640–676. https://doi.org/10.3758/s13423-019-01687-6

Holder JT, Levin LM, Gifford RH (2018) Speech Recognition in noise for adults with normal hearing: age-normative performance for AzBio, BKB-SIN, and QuickSIN. Otol Neurotol 39(10):e972–e978. https://doi.org/10.1097/MAO.0000000000002003

Holroyd CB (2022) Interbrain synchrony: on wavy ground. Trends Neurosci 45(5):346–357. https://doi.org/10.1016/j.tins.2022.02.002

Huth AG, de Heer WA, Griffiths TL et al (2016) Natural speech reveals the semantic maps that tile human cerebral cortex. Nature 532(7600):453–458. https://doi.org/10.1038/nature17637

Jaaskelainena IP, Sams M, Glerean E et al (2021) Movies and narratives as naturalistic stimuli in neuroimaging. NeuroImage 224:117445. https://doi.org/10.1016/j.neuroimage.2020.117445

Jiang J, Dai B, Peng D et al (2012) Neural synchronization during face-to-face communication. J Neurosci 32(45):16064–16069. https://doi.org/10.1523/JNEUROSCI.2926-12.2012

Jiang J, Zheng LF, Lu CM (2021) A hierarchical model for interpersonal verbal communication. Soc Cogn Affect Neurosci 16(1–2):246–255. https://doi.org/10.1093/scan/nsaa151

Kelsen BA, Sumich A, Kasabov N et al (2022) What has social neuroscience learned from hyperscanning studies of spoken communication? A systematic review. Neurosci Biobehav Rev 132:1249–1262. https://doi.org/10.1016/j.neubiorev.2020.09.008

Kingsbury L, Huang S, Wang J et al (2019) Correlated neural activity and encoding of Behavior across brains of socially interacting animals. Cell 178(2):429–446e416. https://doi.org/10.1016/j.cell.2019.05.022

Kingsbury L, Hong WZ (2020) A multi-brain framework for social interaction. Trends Neurosci 43(9):651–666. https://doi.org/10.1016/j.tins.2020.06.008

Kuhlen AK, Allefeld C, Haynes JD (2012) Content-specific coordination of listeners’ to speakers’ EEG during communication. Front Hum Neurosci 6:266. https://doi.org/10.3389/fnhum.2012.00266

Kutlu E, Tiv M, Wulff S et al (2022) Does race impact speech perception? An account of accented speech in two different multilingual locales. Cogn Res Princ Implic 7(1):7. https://doi.org/10.1186/s41235-022-00354-0

Leong V, Byrne E, Clackson K et al (2017) Speaker gaze increases information coupling between infant and adult brains. Proc Natl Acad Sci U S A 114(50):13290–13295. https://doi.org/10.1073/pnas.1702493114

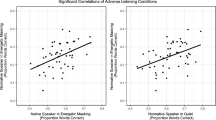

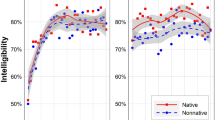

Li ZR, Li JW, Hong B et al (2021) Speaker-Listener neural coupling reveals an adaptive mechanism for Speech Comprehension in a noisy environment. Cereb Cortex 31(10):4719–4729. https://doi.org/10.1093/cercor/bhab118

Li JW, Hong B, Nolte G et al (2022a) Preparatory delta phase response is correlated with naturalistic speech comprehension performance. Cogn Neurodyn 16(2):337–352. https://doi.org/10.1007/s11571-021-09711-z

Li ZR, Hong B, Wang D et al (2022b) Speaker-listener neural coupling reveals a right-lateralized mechanism for non-native speech-in-noise comprehension. Cereb Cortex. https://doi.org/10.1093/cercor/bhac302

Liberman AM, Cooper FS, Shankweiler DP et al (1967) Perception of the speech code. Psychol Rev 74(6):431–461. https://doi.org/10.1037/h0020279

Liu L, Zhang Y, Zhou Q et al (2020) Auditory-articulatory neural alignment between Listener and Speaker during Verbal Communication. Cereb Cortex 30(3):942–951. https://doi.org/10.1093/cercor/bhz138

Marrufo-Perez MI, Sturla-Carreto DDP, Eustaquio-Martin A et al (2020) Adaptation to noise in Human Speech Recognition depends on noise-level statistics and fast dynamic-range Compression. J Neurosci 40(34):6613–6623. https://doi.org/10.1523/JNEUROSCI.0469-20.2020

McGowan KB (2015) Social expectation improves speech perception in noise. Lang Speech 58(Pt 4):502–521. https://doi.org/10.1177/0023830914565191

Mesgarani N, Chang EF (2012) Selective cortical representation of attended speaker in multi-talker speech perception. Nature 485(7397):233–236. https://doi.org/10.1038/nature11020

Montague PR, Berns GS, Cohen JD et al (2002) Hyperscanning: simultaneous fMRI during linked social interactions. NeuroImage 16(4):1159–1164. https://doi.org/10.1006/nimg.2002.1150

Nelson MJ, El Karoui I, Giber K et al (2017) Neurophysiological dynamics of phrase-structure building during sentence processing. Proc Natl Acad Sci U S A 114(18):E3669–E3678. https://doi.org/10.1073/pnas.1701590114

Novembre G, Iannetti GD (2021) Hyperscanning alone cannot prove causality. Multibrain Stimulation can. Trends Cogn Sci 25(2):96–99. https://doi.org/10.1016/j.tics.2020.11.003

Oswald CJ, Tremblay S, Jones DM (2000) Disruption of comprehension by the meaning of irrelevant sound. Memory 8(5):345–350. https://doi.org/10.1080/09658210050117762

Pan Y, Novembre G, Song B et al (2018) Interpersonal synchronization of inferior frontal cortices tracks social interactive learning of a song. NeuroImage 183:280–290. https://doi.org/10.1016/j.neuroimage.2018.08.005

Pan Y, Novembre G, Song B et al (2021) Dual brain stimulation enhances interpersonal learning through spontaneous movement synchrony. Soc Cogn Affect Neurosci 16(1–2):210–221. https://doi.org/10.1093/scan/nsaa080

Panouilleres MTN, Mottonen R (2018) Decline of auditory-motor speech processing in older adults with hearing loss. Neurobiol Aging 72:89–97. https://doi.org/10.1016/j.neurobiolaging.2018.07.013

Pickering MJ, Garrod S (2013) An integrated theory of language production and comprehension. Behav Brain Sci 36(4):329–347. https://doi.org/10.1017/S0140525X12001495

Price CJ (2012) A review and synthesis of the first 20 years of PET and fMRI studies of heard speech, spoken language and reading. NeuroImage 62(2):816–847. https://doi.org/10.1016/j.neuroimage.2012.04.062

Pulvermuller F, Fadiga L (2010) Active perception: sensorimotor circuits as a cortical basis for language. Nat Rev Neurosci 11(5):351–360. https://doi.org/10.1038/nrn2811

Redcay E, Moraczewski D (2020) Social cognition in context: a naturalistic imaging approach. NeuroImage 216:116392. https://doi.org/10.1016/j.neuroimage.2019.116392

Redcay E, Schilbach L (2019) Using second-person neuroscience to elucidate the mechanisms of social interaction. Nat Rev Neurosci 20(8):495–505. https://doi.org/10.1038/s41583-019-0179-4

Rysop AU, Schmitt LM, Obleser J et al (2021) Neural modelling of the semantic predictability gain under challenging listening conditions. Hum Brain Mapp 42(1):110–127

Scharenborg O, van Os M (2019) Why listening in background noise is harder in a non-native language than in a native language: a review. Speech Commun 108:53–64. https://doi.org/10.1016/j.specom.2019.03.001

Schilbach L, Timmermans B, Reddy V et al (2013) Toward a second-person neuroscience. Behav Brain Sci 36(4):393–414. https://doi.org/10.1017/S0140525x12000660

Schirmer A, Kotz SA (2006) Beyond the right hemisphere: brain mechanisms mediating vocal emotional processing. Trends Cogn Sci 10(1):24–30. https://doi.org/10.1016/j.tics.2005.11.009

Schomers MR, Pulvermuller F (2016) Is the sensorimotor cortex relevant for speech perception and understanding? An integrative review. Front Hum Neurosci 10:435. https://doi.org/10.3389/fnhum.2016.00435

Schoot L, Hagoort P, Segaert K (2016) What can we learn from a two-brain approach to verbal interaction? Neurosci Biobehav Rev 68:454–459. https://doi.org/10.1016/j.neubiorev.2016.06.009

Schwartz JL, Basirat A, Menard L et al (2012) The perception-for-action-control theory (PACT): a perceptuo-motor theory of speech perception. J Neurolinguist 25(5):336–354

Sebanz N, Bekkering H, Knoblich G (2006) Joint action: bodies and minds moving together. Trends Cogn Sci 10(2):70–76. https://doi.org/10.1016/j.tics.2005.12.009

Sehm B, Schnitzler T, Obleser J et al (2013) Facilitation of Inferior Frontal Cortex by Transcranial Direct Current Stimulation induces perceptual learning of severely degraded Speech. J Neurosci 33(40):15868–15878. https://doi.org/10.1523/Jneurosci.5466-12.2013

Shi LF, Koenig LL (2016) Relative weighting of semantic and syntactic cues in native and non-native listeners’ recognition of english sentences. Ear Hear 37(4):424–433

Si X, Zhou W, Hong B (2017) Cooperative cortical network for categorical processing of chinese lexical tone. Proc Natl Acad Sci U S A 114(46):12303–12308. https://doi.org/10.1073/pnas.1710752114

Silbert LJ, Honey CJ, Simony E et al (2014) Coupled neural systems underlie the production and comprehension of naturalistic narrative speech. Proc Natl Acad Sci U S A 111(43):E4687–4696. https://doi.org/10.1073/pnas.1323812111

Smirnov D, Saarimaki H, Glerean E et al (2019) Emotions amplify speaker-listener neural alignment. Hum Brain Mapp 40(16):4777–4788. https://doi.org/10.1002/hbm.24736

Sonkusare S, Breakspear M, Guo C (2019) Naturalistic stimuli in neuroscience: critically acclaimed. Trends Cogn Sci 23(8):699–714. https://doi.org/10.1016/j.tics.2019.05.004

Stephens GJ, Silbert LJ, Hasson U (2010) Speaker-listener neural coupling underlies successful communication. Proc Natl Acad Sci U S A 107(32):14425–14430. https://doi.org/10.1073/pnas.1008662107

Tanana MJ, Soma CS, Kuo PB et al (2021) How do you feel? Using natural language processing to automatically rate emotion in psychotherapy. Behav Res Methods 53(5):2069–2082. https://doi.org/10.3758/s13428-020-01531-z

Vander Ghinst M, Bourguignon M, Niesen M et al (2019) Cortical tracking of speech-in-noise develops from childhood to adulthood. J Neurosci 39(15):2938–2950. https://doi.org/10.1523/Jneurosci.1732-18.2019

Wang Q, Duan Z, Perc M et al (2008) Synchronization transitions on small-world neuronal networks: effects of information transmission delay and rewiring probability. Europhys Lett 83(5):50008. https://doi.org/10.1209/0295-5075/83/50008

Wang Q, Perc M, Duan Z et al (2009) Delay-induced multiple stochastic resonances on scale-free neuronal networks. Chaos 19(2):023112. https://doi.org/10.1063/1.3133126

Weed E, Fusaroli R (2020) Acoustic measures of Prosody in Right- Hemisphere damage: a systematic review and Meta-analysis. J Speech Lang Hear Res 63(6):1762–1775. https://doi.org/10.1044/2020_Jslhr-19-00241

Wilson RH, McArdle RA, Smith SL (2007) An evaluation of the BKB-SIN, HINT, QuickSIN, and WIN materials on listeners with normal hearing and listeners with hearing loss. J Speech Lang Hear Res 50(4):844–856. https://doi.org/10.1044/1092-4388(2007/059)

Wilson RH, Trivette CP, Williams DA et al (2012) The effects of energetic and informational masking on the words-in-noise test (WIN). J Am Acad Audiol 23(7):522–533. https://doi.org/10.3766/jaaa.23.7.4

Wong LL, Ng EH, Soli SD (2012) Characterization of speech understanding in various types of noise. J Acoust Soc Am 132(4):2642–2651. https://doi.org/10.1121/1.4751538

Yeshurun Y, Nguyen M, Hasson U (2021) The default mode network: where the idiosyncratic self meets the shared social world. Nat Rev Neurosci 22(3):181–192. https://doi.org/10.1038/s41583-020-00420-w

Zheng L, Chen C, Liu W et al (2018) Enhancement of teaching outcome through neural prediction of the students’ knowledge state. Hum Brain Mapp 39(7):3046–3057. https://doi.org/10.1002/hbm.24059

Yu ACL (2022) Perceptual cue weighting is influenced by the Listener’s gender and subjective evaluations of the speaker: the case of English Stop Voicing. Front Psychol 13:840291. https://doi.org/10.3389/fpsyg.2022.840291

Yuan JJ (2020) Cognitive neuroscience of emotional susceptibility (in Chinese). Science Press, Beijing

Zekveld AA, Rudner M, Johnsrude IS et al (2011) The influence of semantically related and unrelated text cues on the intelligibility of sentences in noise. Ear Hear 32(6):E16–E25

Funding

This work was supported by the National Natural Science Foundation of China (NSFC) under grant (61977041), the Tsinghua University Spring Breeze Fund (2021Z99CFY037), and the National Natural Science Foundation of China (NSFC) and the German Research Foundation (DFG) in project Crossmodal Learning (NSFC 62061136001/DFG TRR-169/C1, B1).

Ethics declarations

Conflict of interest

The authors declare no competing interests.

About this article

Cite this article

Li, Z., Zhang, D. How does the human brain process noisy speech in real life? Insights from the second-person neuroscience perspective. Cogn Neurodyn 18, 371–382 (2024). https://doi.org/10.1007/s11571-022-09924-w

DOI: https://doi.org/10.1007/s11571-022-09924-w