Abstract

Syntactic theory provides a rich array of representational assumptions about linguistic knowledge and processes. Such detailed and independently motivated constraints on grammatical knowledge ought to play a role in sentence comprehension. However, most grammar-based explanations of processing difficulty in the literature have attempted to use grammatical representations and processes per se to explain processing difficulty. They did not take into account that the description of higher cognition in mind and brain encompasses two levels: at the macrolevel, symbolic computation is performed, whereas at the microlevel, computation is achieved through processes within a dynamical system. One critical question is therefore how linguistic theory and dynamical systems can be unified to provide an explanation for processing effects. Here, we present such a unification for a particular approach to syntactic theory, namely a parser for Stabler’s Minimalist Grammars, in the framework of Smolensky’s Integrated Connectionist/Symbolic architectures. In simulations we demonstrate that the connectionist minimalist parser produces predictions which mirror global empirical findings from psycholinguistic research.


Notes

Note that “the girl” is already a phrase that could have been obtained by merging “the” (d) and “girl” (n) together. We have omitted this step for the sake of simplicity.

Each lexical item corresponds to one node. Furthermore, a root node with two daughters comprises three nodes in total (parent, left daughter, right daughter). A merge operation adds one node, while a move operation increases the node count by two.
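The counting rule in this note can be written out as a short calculation. The following Python sketch is illustrative only: the function name `count_nodes` and its arguments are assumptions introduced here, and it assumes that every lexical item in the derivation contributes exactly one node before any operation applies.

```python
from collections import Counter

# Node increments per operation, as stated in the note above.
NODES_ADDED = {"merge": 1, "move": 2}


def count_nodes(n_lexical_items, steps):
    """Return the number of tree nodes after a sequence of operations.

    n_lexical_items: number of lexical items used (one node each).
    steps: iterable of operation names, "merge" or "move" (case-insensitive).
    """
    tally = Counter(step.lower() for step in steps)
    unknown = set(tally) - set(NODES_ADDED)
    if unknown:
        raise ValueError(f"unknown operation(s): {sorted(unknown)}")
    return n_lexical_items + sum(NODES_ADDED[op] * n for op, n in tally.items())


# Two lexical items joined by a single merge: parent plus two daughters.
print(count_nodes(2, ["merge"]))  # 3
```

Under these assumptions, the node count depends only on the number of lexical items and on how many merge and move steps the derivation contains, not on their order.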

References

Bader M (1996) Sprachverstehen: Syntax und Prosodie beim Lesen. Westdeutscher Verlag, Opladen

Bader M, Meng M (1999) Subject-object ambiguities in German embedded clauses: an across-the-board comparison. J Psycholinguist Res 28(2):121–143

beim Graben P, Gerth S, Vasishth S (2008a) Towards dynamical system models of language-related brain potentials. Cogn Neurodyn 2(3):229–255

beim Graben P, Pinotsis D, Saddy D, Potthast R (2008b) Language processing with dynamic fields. Cogn Neurodyn 2(2):79–88

beim Graben P, Atmanspacher H (2009) Extending the philosophical significance of the idea of complementarity. In: Atmanspacher H, Primas H (eds) Recasting reality. Springer, Berlin, pp 99–113

beim Graben P, Potthast R (2009) Inverse problems in dynamic cognitive modeling. Chaos 19(1):015103

beim Graben P, Barrett A, Atmanspacher H (2009) Stability criteria for the contextual emergence of macrostates in neural networks. Netw Comput Neural Syst 20(3):177–195

Berg G (1992) A connectionist parser with recursive sentence structure and lexical disambiguation. In: Proceedings of the 10th national conference on artificial intelligence, pp 32–37

Chomsky N (1981) Lectures on government and binding. Foris

Chomsky N (1995) The minimalist program. MIT Press, Cambridge

Christiansen MH, Chater N (1999) Toward a connectionist model of recursion in human linguistic performance. Cogn Sci 23(4):157–205

Dolan CP, Smolensky P (1989) Tensor product production system: A modular architecture and representation. Connect Sci 1(1):53–68

Elman JL (1995) Language as a dynamical system. In: Port RF, van Gelder T (eds), Mind as motion: explorations in the dynamics of cognition. MIT Press, Cambridge, pp 195–223

Fanselow G, Schlesewsky M, Cavar D, Kliegl R (1999) Optimal parsing: syntactic parsing preferences and optimality theory. Rutgers Optim Arch pp 367–1299

Farkas I, Crocker MW (2008) Syntactic systematicity in sentence processing with a recurrent self-organizing network. Neurocomputing 71:1172–1179

Ferreira F, Henderson JM (1990) Use of verb information in syntactic parsing: evidence from eye movements and word-by-word self-paced reading. J Exp Psychol Learn Mem Cogn 16:555–568

Frazier L (1979) On comprehending sentences: syntactic parsing strategies. PhD thesis, University of Connecticut, Storrs

Frazier L (1985) Syntactic complexity. In: Dowty D, Karttunen L, Zwicky A (eds), Natural language parsing. Cambridge University Press

Frazier L, Rayner K (1982) Making and correcting errors during sentence comprehension: eye movements in the analysis of structurally ambiguous sentences. Cogn Psychol 14:178–210

Freeman WJ (2007) Definitions of state variables and state space for brain-computer interface. Part 1. Multiple hierarchical levels of brain function. Cogn Neurodyn 1:3–14

Frisch S, Schlesewsky M, Saddy D, Alpermann A (2002) The P600 as an indicator of syntactic ambiguity. Cognition 85:B83–B92

Gerth S (2006) Parsing mit minimalistischen, gewichteten Grammatiken und deren Zustandsraumdarstellung. Unpublished Master’s thesis, Universität Potsdam

Gibson E (1998) Linguistic complexity: locality of syntactic dependencies. Cognition 68:1–76

Frey W, Gärtner HM (2002) On the treatment of scrambling and adjunction in Minimalist Grammars. In: Jäger G, Monachesi P, Penn G, Wintner S (eds) Proceedings of formal grammars, pp 41–52

Haegeman L (1994) Introduction to government & binding theory. Blackwell Publishers, Oxford

Hagoort P (2003) How the brain solves the binding problem for language: a neurocomputational model of syntactic processing. NeuroImage 20:S18–S29

Hagoort P (2005) On Broca, brain, and binding: a new framework. Trends Cogn Sci 9(9):416–423

Hale JT (2003a) Grammar, uncertainty and sentence processing. PhD thesis, The Johns Hopkins University

Hale JT (2003b) The information conveyed by words in sentences. J Psycholinguist Res 32(2):101–123

Hale JT (2006) Uncertainty about the rest of the sentence. Cogn Sci 30(4):643–672

Harkema H (2001) Parsing minimalist languages. PhD thesis, University of California, Los Angeles

Hemforth B (1993) Kognitives Parsing: Repräsentation und Verarbeitung kognitiven Wissens. Infix, Sankt Augustin

Hemforth B (2000) German sentence processing. Kluwer Academic Publishers, Dordrecht

Hertz J, Krogh A, Palmer RG (1991) Introduction to the theory of neural computation. Perseus Books, Cambridge

Hopcroft JE, Ullman JD (1979) Introduction to automata theory, languages, and computation. Addison-Wesley, Menlo Park

Hopfield JJ (1982) Neural networks and physical systems with emergent collective computational abilities. Proc Natl Acad Sci USA 79(8):2554–2558

Joshi AK, Schabes Y (1997) Tree-adjoining grammars. In: Salomaa A, Rozenberg G (eds) Handbook of formal languages and automata, vol 3. Springer, Berlin, pp 69–124

Joshi AK, Levy L, Takahashi M (1975) Tree adjunct grammars. J Comput Syst Sci 10(1):136–163

Lawrence S, Giles CL, Fong S (2000) Natural language grammatical inference with recurrent neural networks. IEEE Trans Knowl Data Eng 12(1):126–140

Legendre G, Miyata Y, Smolensky P (1990a) Harmonic grammar—a formal multi-level connectionist theory of linguistic well-formedness: theoretical foundations. In: Proceedings of the 12th annual conference cognitive science society. Cognitive Science Society, Cambridge, pp 388–395

Legendre G, Miyata Y, Smolensky P (1990b) Harmonic grammar—a formal multi-level connectionist theory of linguistic well-formedness: an application. In: Proceedings 12th annual conference cognitive science society. Cognitive Science Society, Cambridge, pp 884–891

Michaelis J (2001) Derivational minimalism is mildly context-sensitive. In: Moortgat M (ed) Logical aspects of computational linguistics, vol 2014. Springer, Berlin, pp 179–198 (Lecture notes in artificial intelligence)

Mizraji E (1989) Context-dependent associations in linear distributed memories. Bull Math Biol 51(2):195–205

Mizraji E (1992) Vector logics: The matrix-vector representation of logical calculus. Fuzzy Sets Syst 50:179–185

Niyogi S, Berwick RC (2005) A minimalist implementation of Hale-Keyser incorporation theory. In: Di Sciullo AM (ed) UG and external systems: language, brain and computation. Linguistik Aktuell/Linguistics Today, vol 75. John Benjamins, Amsterdam, pp 269–288

Osterhout L, Holcomb PJ, Swinney DA (1994) Brain potentials elicited by garden-path sentences: evidence of the application of verb information during parsing. J Exp Psychol Learn Mem Cogn 20(4):786–803

Pollard C, Sag IA (1994) Head-driven phrase structure grammar. University of Chicago Press, Chicago

Potthast R, beim Graben P (2009) Inverse problems in neural field theory. SIAM J Appl Dyn Syst (in press)

Prince A, Smolensky P (1997) Optimality: from neural networks to universal grammar. Science 275:1604–1610

Siegelmann HT, Sontag ED (1995) On the computational power of neural nets. J Comput Syst Sci 50(1):132–150

Smolensky P (1986) Information processing in dynamical systems: foundations of harmony theory. In: Rumelhart DE, McClelland JL, the PDP Research Group (eds) Parallel distributed processing: explorations in the microstructure of cognition, vol I. MIT Press, Cambridge, pp 194–281 (Chap 6)

Smolensky P (1990) Tensor product variable binding and the representation of symbolic structures in connectionist systems. Artif Intell 46:159–216

Smolensky P (2006) Harmony in linguistic cognition. Cogn Sci 30:779–801

Smolensky P, Legendre G (2006a) The harmonic mind. From neural computation to optimality-theoretic grammar, vol 1: cognitive architecture. MIT Press, Cambridge

Smolensky P, Legendre G (2006b) The harmonic mind. From neural computation to optimality-theoretic grammar, vol 2: linguistic and philosophic implications. MIT Press, Cambridge

Stabler EP (1997) Derivational minimalism. In: Retoré C (ed) Logical Aspects of computational linguistics, springer lecture notes in computer science, vol 1328. Springer, New York, pp 68–95

Stabler EP (2000) Minimalist Grammars and recognition. In: Rohrer C, Rossdeutscher A, Kamp H (eds) Linguistic form and its computation. CSLI Publications, Stanford, pp 327–352

Stabler EP (2004) Varieties of crossing dependencies: structure dependence and mild context sensitivity. Cogn Sci 28:699–720

Stabler EP, Keenan EL (2003) Structural similarity within and among languages. Theor Comput Sci 293:345–363

Staudacher P (1990) Ansätze und Probleme prinzipienorientierten Parsens. In: Felix SW, Kanngießer S, Rickheit G (eds) Sprache und Wissen. Westdeutscher Verlag, Opladen, pp 151–189

Tabor W (2000) Fractal encoding of context-free grammars in connectionist networks. Expert Syst Int J Knowl Eng Neural Netw 17(1):41–56

Tabor W (2003) Learning exponential state-growth languages by hill climbing. IEEE Trans Neural Netw 14(2):444–446

Tabor W, Tanenhaus MK (1999) Dynamical models of sentence processing. Cogn Sci 23(4):491–515

Tabor W, Juliano C, Tanenhaus MK (1997) Parsing in a dynamical system: An attractor-based account of the interaction of lexical and structural constraints in sentence processing. Lang Cogn Process 12(2/3):211–271

Traxler M, Gernsbacher MA (eds) (2006) Handbook of psycholinguistics. Elsevier, Oxford

Vosse T, Kempen G (2000) Syntactic structure assembly in human parsing: a computational model based on competitive inhibition and a lexicalist grammar. Cognition 75:105–143

Vosse T, Kempen G (this issue) The Unification space implemented as a localist neural net: predictions and error-tolerance in a constraint-based parser. Cogn Neurodyn

Weyerts H, Penke M, Münte TF, Heinze HJ, Clahsen H (2002) Word order in sentence processing: an experimental study of verb placement in German. J Psycholinguist Res 31(3):211–268

Acknowledgements

We would like to thank Shravan Vasishth, Whitney Tabor, Titus von der Malsburg, Hans-Martin Gärtner and Antje Sauermann for helpful and inspiring discussions concerning this work.


Appendix


In this appendix we present the minimalist parses of all example sentences from section “Materials”.

English examples

The girl knew the answer immediately

This example is outlined in section “Minimalist parsing”.

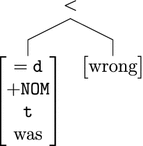

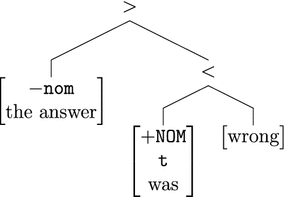

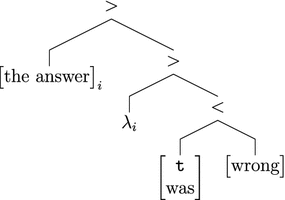

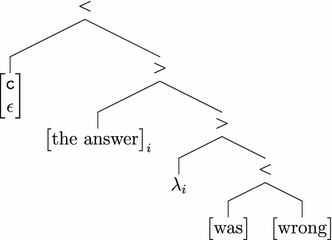

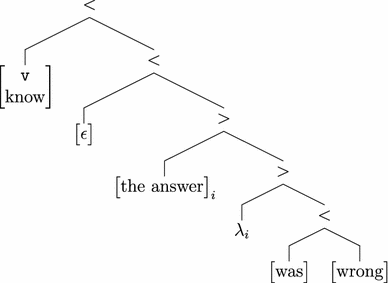

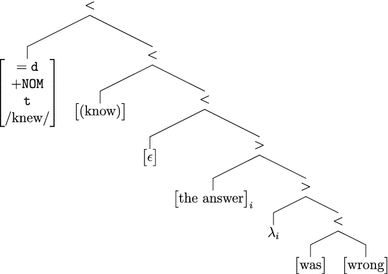

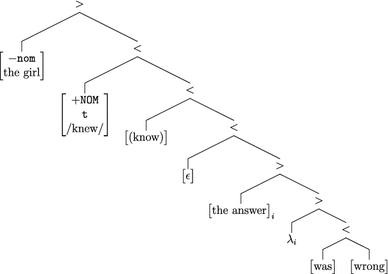

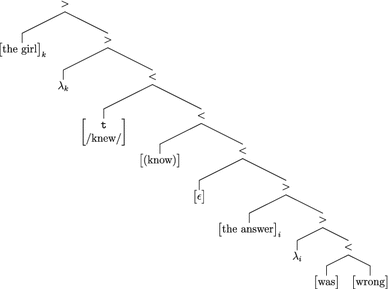

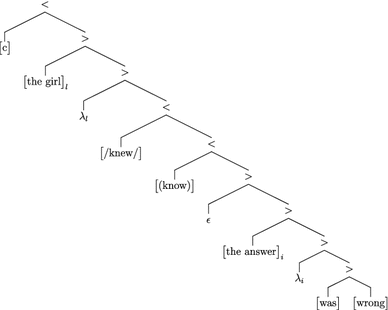

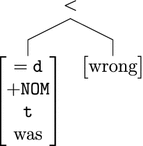

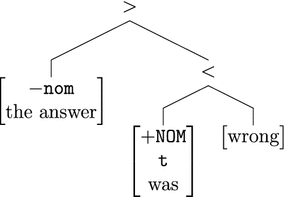

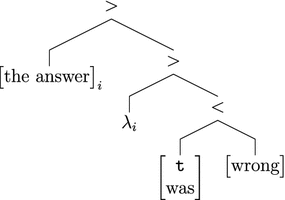

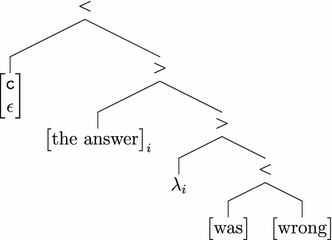

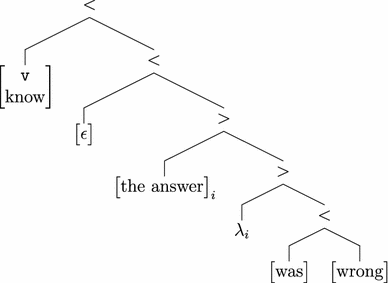

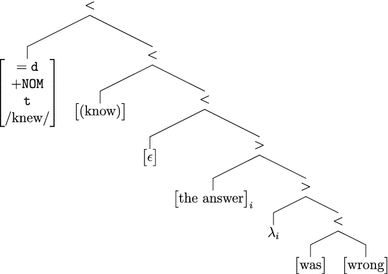

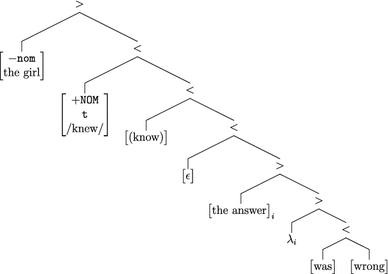

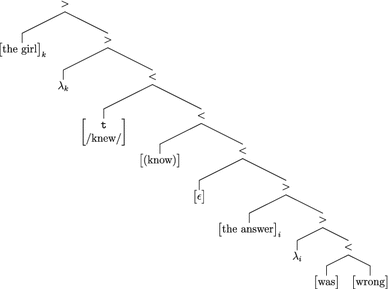

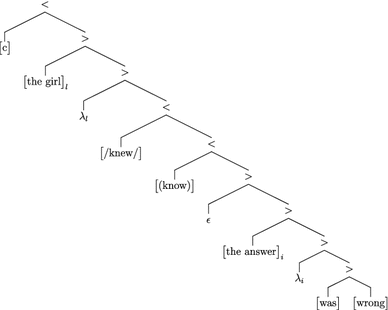

The girl knew the answer was wrong. (complement clause)

1. step: Merge
2. step: Merge
3. step: Move
4. step: Merge
5. step: Merge
6. step: Merge
7. step: Merge
8. step: Move
9. step: Merge

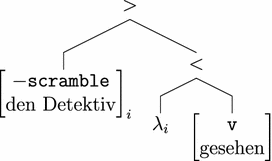

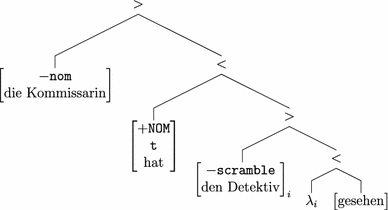

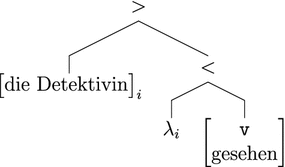

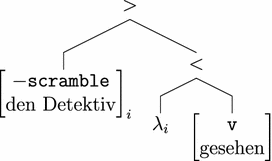

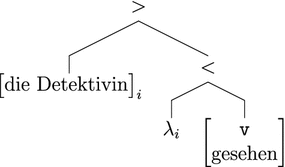

German examples

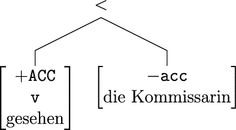

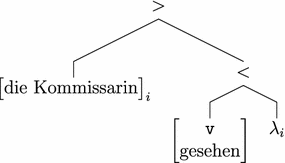

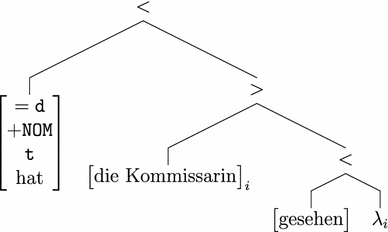

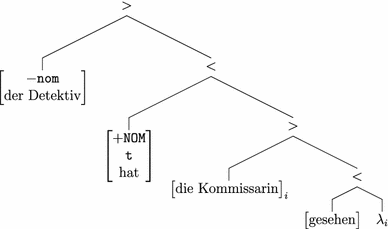

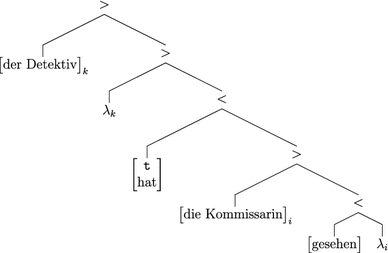

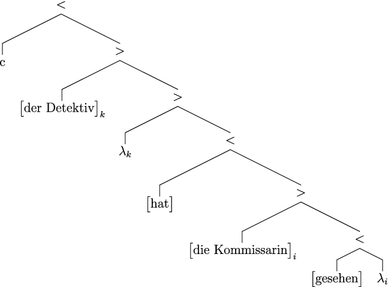

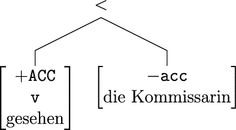

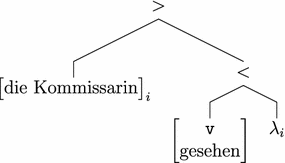

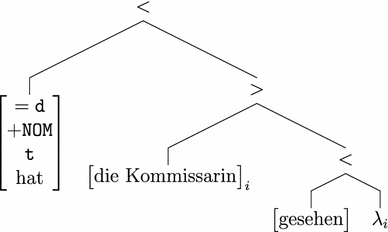

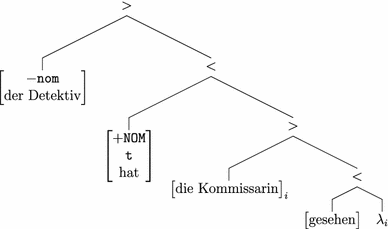

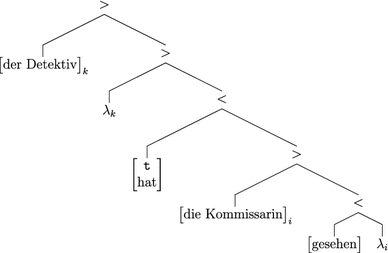

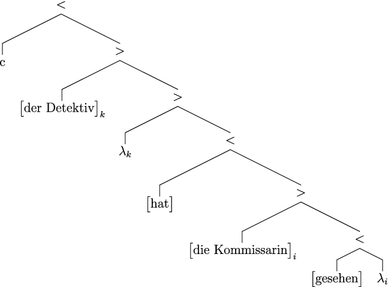

Der Detektiv hat die Kommissarin gesehen

1. step: merge
2. step: move
3. step: merge
4. step: merge
5. step: move
6. step: merge

Die Detektivin hat den Kommissar gesehen

This sentence is parsed in the same way as the first sentence, “Der Detektiv hat die Kommissarin gesehen”.

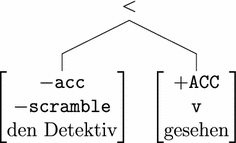

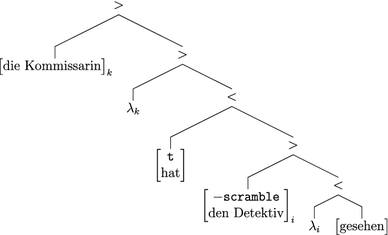

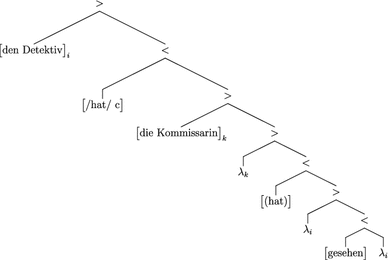

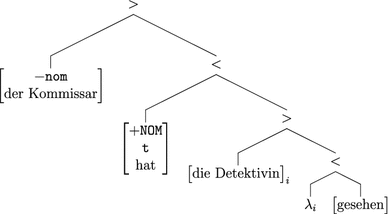

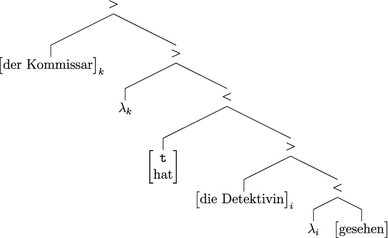

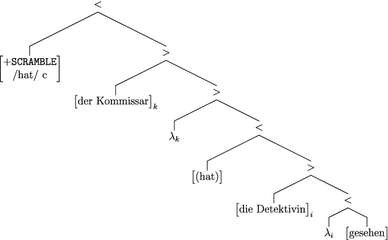

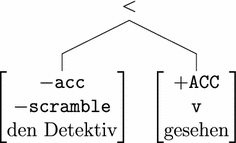

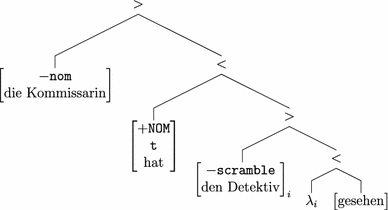

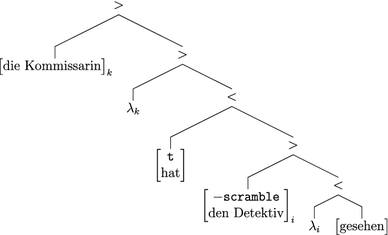

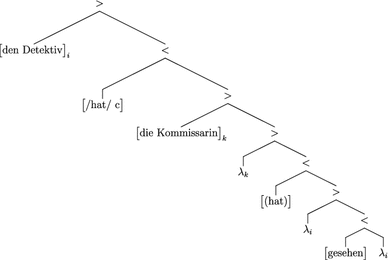

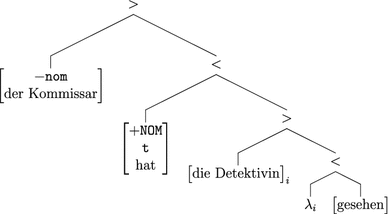

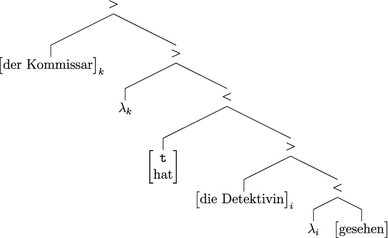

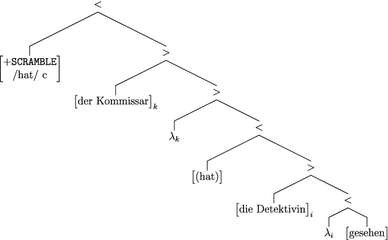

Den Detektiv hat die Kommissarin gesehen

1. step: merge
2. step: move
3. step: merge
4. step: merge
5. step: move
6. step: merge (head movement)
7. step: move (scrambling)

Die Detektivin hat der Kommissar gesehen

1. step: merge
2. step: move
3. step: merge
4. step: merge
5. step: move
6. step: merge (head movement)

At this point the derivation terminates because no further features can be checked. Since the licensor for the scrambling operation is still left unchecked, the sentence is not well-formed and is not accepted by the grammar formalism.
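The acceptance condition described here can be sketched as a check on the features that remain once no further operation applies. The Python fragment below is a simplified illustration, not the authors' parser: the function `is_accepted`, the feature strings, and the start category `c` are hypothetical names introduced for this example, with `+scr` standing in for a leftover scrambling licensor.

```python
def is_accepted(remaining_features, start_category="c"):
    """Accept a finished derivation only if the sole unchecked feature is
    the start category; any leftover licensor (e.g. "+scr") blocks it."""
    return list(remaining_features) == [start_category]


# Hypothetical end states of two derivations.
print(is_accepted(["c"]))          # True
print(is_accepted(["+scr", "c"]))  # False
```

In this sketch, the second call corresponds to the situation above: the derivation has stopped, but an unchecked licensor remains, so the sentence is rejected.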


Cite this article

Gerth, S., beim Graben, P. Unifying syntactic theory and sentence processing difficulty through a connectionist minimalist parser. Cogn Neurodyn 3, 297–316 (2009). https://doi.org/10.1007/s11571-009-9093-1


DOI: https://doi.org/10.1007/s11571-009-9093-1