Abstract

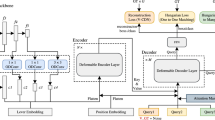

In complex scenes, current detection algorithms often produce false detections and missed detections when identifying improper mask wearing among pedestrians. This paper introduces an enhanced detection method, IPCRGC-YOLOv7 (Improved Partial Convolution Recursive Gated Convolution-YOLOv7), to address this problem. First, we integrate the Partial Convolution structure into the backbone network to reduce the number of model parameters. To counter vanishing gradients during training, we adopt the residual connection structure derived from the RepVGG network. Together these form an efficient aggregation module, PRE-ELAN (Partially Representative Efficiency-ELAN), which replaces the original Efficient Layer Aggregation Network (ELAN) structure. Next, we improve the Cross Stage Partial Network (CSPNet) module by incorporating recursive gated convolution. The resulting module, CSPNRGC (Cross Stage Partial Network Recursive Gated Convolution), replaces the ELAN structure in the neck and enables higher-order spatial interactions across different levels of the network. Lastly, in the loss function, we replace the original cross-entropy loss function with Efficient-IoU (EIoU) to make the loss calculation more accurate. To balance the contributions of high-quality and low-quality samples to the loss, we propose a new loss function called Wise-EIoU (Wise-Efficient IoU). Experimental results show that, compared with the original YOLOv7 algorithm, IPCRGC-YOLOv7 improves accuracy by 4.71%, recall by 5.94%, mean Average Precision (mAP@0.5) by 2.9%, and mAP@0.5:0.95 by 2.7%, a level of performance that can meet the accuracy requirements of mask-wearing detection in practical applications.
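To make the Efficient-IoU term mentioned above concrete, the following is a minimal sketch of EIoU as it is commonly defined in the literature: one minus IoU, plus a centre-distance penalty and separate width and height penalties, each normalised by the smallest enclosing box. It is not the authors' implementation. The function name `eiou_loss`, the `(x1, y1, x2, y2)` box layout, and the `eps` stabiliser are assumptions made for illustration. The Wise-EIoU sample weighting is not shown, because the abstract does not specify its form.

```python
def eiou_loss(box_pred, box_gt, eps=1e-9):
    """EIoU between two boxes given as (x1, y1, x2, y2), with x1 < x2 and y1 < y2.

    Hypothetical helper for illustration; eps only guards against division by zero.
    """
    px1, py1, px2, py2 = box_pred
    gx1, gy1, gx2, gy2 = box_gt

    # Widths and heights of the predicted and ground-truth boxes.
    pw, ph = px2 - px1, py2 - py1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Plain IoU: intersection area over union area.
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    union = pw * ph + gw * gh - inter
    iou = inter / (union + eps)

    # Width and height of the smallest box enclosing both boxes.
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)

    # Squared centre distance, normalised by the squared enclosing diagonal.
    dx = (px1 + px2) / 2 - (gx1 + gx2) / 2
    dy = (py1 + py2) / 2 - (gy1 + gy2) / 2
    center_term = (dx * dx + dy * dy) / (cw * cw + ch * ch + eps)

    # Width and height differences, each normalised by the enclosing box side.
    width_term = (pw - gw) ** 2 / (cw * cw + eps)
    height_term = (ph - gh) ** 2 / (ch * ch + eps)

    return 1.0 - iou + center_term + width_term + height_term


if __name__ == "__main__":
    print(eiou_loss((0, 0, 2, 2), (0, 0, 2, 2)))  # identical boxes, loss close to 0
    print(eiou_loss((0, 0, 1, 1), (2, 2, 3, 3)))  # disjoint boxes, loss above 1
```

Unlike a plain IoU loss, the three penalty terms stay informative when the boxes do not overlap, so a regressor still receives a signal about how far off the centre, width, and height are.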

Data availability

The data that support the findings of this study are available from public datasets.

Funding

This research was supported by the Joint Fund of Coal, the General Program of the National Natural Science Foundation of China (No. 51174257).

Author information

Contributions

Huaping Zhou and Anpei Dang wrote the main manuscript text. Anpei Dang performed the validation. Anpei Dang and Kelei Sun prepared Figs. 1–5 and Tables 1–8. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhou, H., Dang, A. & Sun, K. IPCRGC-YOLOv7: face mask detection algorithm based on improved partial convolution and recursive gated convolution. J Real-Time Image Proc 21, 59 (2024). https://doi.org/10.1007/s11554-024-01448-2

DOI: https://doi.org/10.1007/s11554-024-01448-2