Abstract

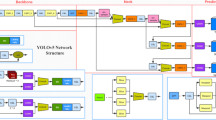

Accurate ship detection is critical for maritime transportation security. Current deep learning-based object detection algorithms have made marked progress in detection accuracy; however, these models are too heavy to be deployed on mobile or embedded devices with limited resources. This paper therefore proposes a lightweight convolutional neural network for mobile ship detection, abbreviated as LSDNet. In the proposed model, we introduce Partial Convolution into YOLOv7-tiny to reduce its parameter count and computational complexity. GhostConv is also introduced to make the structure lighter still and to improve detection performance. In addition, we use the Mosaic-9 data-augmentation method to enhance the robustness of the model. We compared the proposed LSDNet with other approaches on a publicly available ship dataset, SeaShips7000. The experimental results show that LSDNet achieves higher accuracy than the other models with lower computational cost and fewer parameters. The test results also suggest that the proposed model can meet the requirements of real-time applications.
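To make the claimed savings concrete, here is a minimal back-of-the-envelope sketch (not the authors' implementation) that counts multiply-accumulates (MACs) and weights for a regular k×k convolution, a Partial Convolution (PConv), and a GhostConv layer. The PConv formula follows the FasterNet paper (Chen et al., "Run, don't walk"): a regular convolution is applied to only c_p = r·c channels, with r = 1/4, and the rest pass through unchanged. The GhostConv layout is an assumption borrowed from the YOLOv5-style module: a primary convolution produces half of the output channels and a 5×5 depthwise "cheap" convolution produces the other half. The function names and layer sizes (a 40×40 map with 128 channels) are hypothetical, and the abstract does not say exactly where LSDNet places these operators in YOLOv7-tiny.

```python
"""Rough cost comparison of the lightweight operators named in the abstract.

Assumptions (hypothetical, for illustration only):
- stride 1, 'same' padding, no bias, BatchNorm/activation costs ignored;
- PConv uses partial ratio r = 1/4 as in FasterNet;
- GhostConv follows the YOLOv5-style layout (primary conv to c_out/2
  channels, then a 5x5 depthwise conv producing the other c_out/2).
"""


def conv_cost(h, w, k, c_in, c_out, groups=1):
    """Return (MACs, weights) of a stride-1 k x k convolution on an h x w map."""
    weights = k * k * (c_in // groups) * c_out
    return h * w * weights, weights


def pconv_cost(h, w, k, c, ratio=0.25):
    """Partial convolution: a regular conv on only c_p = ratio * c channels;
    the remaining channels are passed through untouched at zero cost."""
    c_p = int(c * ratio)
    return conv_cost(h, w, k, c_p, c_p)


def ghost_cost(h, w, k, c_in, c_out, cheap_k=5):
    """GhostConv: a primary conv makes c_out/2 'intrinsic' maps, a depthwise
    cheap_k x cheap_k conv makes c_out/2 'ghost' maps, then both are concatenated."""
    half = c_out // 2
    p_macs, p_weights = conv_cost(h, w, k, c_in, half)
    g_macs, g_weights = conv_cost(h, w, cheap_k, half, half, groups=half)
    return p_macs + g_macs, p_weights + g_weights


if __name__ == "__main__":
    h = w = 40     # hypothetical feature-map size
    c, k = 128, 3  # hypothetical channel width and kernel size
    layers = {
        "regular conv": conv_cost(h, w, k, c, c),
        "PConv (r=1/4)": pconv_cost(h, w, k, c),
        "GhostConv": ghost_cost(h, w, k, c, c),
    }
    for name, (macs, weights) in layers.items():
        print(f"{name:14s} MACs={macs:>12,d} weights={weights:>7,d}")
```

With r = 1/4, PConv comes out at exactly 1/16 of the regular convolution in both MACs and weights, because both scale with c_p². PConv on its own leaves three quarters of the channels untouched, which is why FasterNet follows it with pointwise convolutions. GhostConv, by contrast, still produces all output channels and here costs roughly half of the regular convolution.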

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

ITU-R: Technical characteristics for an automatic identification system using time-division multiple access in the VHF maritime mobile band. Recommendation ITU-R M.1371. Available at http://www.itu.int/rec/R-REC-M.1371/en (2014)

Zou, Z., Chen, K., Shi, Z., et al.: Object detection in 20 years: a survey. Proc. IEEE 111, 257–276 (2023)

Girshick, R., Donahue, J., Darrell, T., Malik, J.: Rich feature hierarchies for accurate object detection and semantic segmentation. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 580–587 (2014)

Girshick, R.: Fast R-CNN. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV), pp. 1440–1448 (2015)

Ren, S., He, K., Girshick, R., Sun, J.: Faster R-CNN: towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 39(6), 1137–1149 (2017)

Liu, W., Anguelov, D., Erhan, D., et al.: SSD: Single shot MultiBox detector. In: Proceedings of European Conference on Computer Vision (ECCV), pp. 21–37 (2016)

Redmon, J., Divvala, S., Girshick, R., Farhadi, A.: You only look once: unified, real-time object detection. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 779–788 (2016)

Redmon, J., Farhadi, A.: YOLO9000: Better, faster, stronger. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 7263–7271 (2017)

Redmon, J., Farhadi, A.: YOLOv3: An incremental improvement. Preprint at arXiv:1804.02767 (2018)

Bochkovskiy, A., Wang, C.-Y., Liao, H.-Y.M.: YOLOv4: Optimal speed and accuracy of object detection. Preprint at arXiv:2004.10934 (2020)

Jocher, G., Stoken, A., Borovec, J.: ultralytics/yolov5: v6.0 (Version v6.0). Available at http://doi.org/10.5281/zenodo.63715 (2021)

Wang, C.Y., Yeh, I.H., Liao, H.Y.M.: You only learn one representation: Unified network for multiple tasks. Preprint at arXiv:2105.04206 (2021)

Ge, Z., Liu, S., Wang, F., et al.: YOLOX: Exceeding YOLO series in 2021. Preprint at arXiv:2107.08430 (2021)

Wang, C.Y., Bochkovskiy, A., Liao, H.Y.M.: YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 7464–7475 (2023)

Howard, A.G., Zhu, M., Chen, B., et al.: MobileNets: Efficient convolutional neural networks for mobile vision applications. Preprint at arXiv:1704.04861 (2017)

Sandler, M., Howard, A., Zhu, M., et al.: MobileNetV2: Inverted residuals and linear bottlenecks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4510–4520 (2018)

Howard, A., Sandler, M., Chen, B., Wang, W., Chen, L.-C., Tan, M., Chu, G., Vasudevan, V., Zhu, Y., Pang, R., Adam, H., Le, Q.: Searching for MobileNetV3. In: Proceedings of IEEE/CVF International Conference on Computer Vision (ICCV), pp. 1314–1324 (2019)

Zhang, X., Zhou, X., Lin, M., Sun, J.: ShuffleNet: An extremely efficient convolutional neural network for mobile devices. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 6848–6856 (2018)

Ma, N., Zhang, X., Zheng, H.-T., Sun, J.: ShuffleNet V2: Practical guidelines for efficient CNN architecture design. In: Proceedings of European Conference on Computer Vision (ECCV), pp. 122–138 (2018)

Vasu, P.K.A., Gabriel, J., Zhu, J., et al.: MobileOne: an improved one millisecond mobile backbone. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 7907–7917 (2023)

Wang, C.-Y., Liao, H.-Y.M., Wu, Y.-H., Chen, P.-Y., Hsieh, J.-W., Yeh, I.-H.: CSPNet: A new backbone that can enhance learning capability of CNN. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 390–391 (2020)

He, K., Zhang, X., Ren, S., Sun, J.: Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 37(9), 1904–1916 (2014)

Liu, S., Qi, L., Qin, H., Shi, J., Jia, J.: Path aggregation network for instance segmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 8759–8768 (2018)

Zhang, M., Rong, X., Yu, X.: Light-SDNet: A lightweight CNN architecture for ship detection. IEEE Access 10, 86647–86662 (2022)

Zheng, Y., Zhang, Y., Qian, L., et al.: A lightweight ship target detection model based on improved YOLOv5s algorithm. PLoS ONE 18(4), e0283932 (2023)

Cen, J., Feng, H., Liu, X., et al.: An improved ship classification method based on YOLOv7 model with attention mechanism. Wireless Commun. Mobile Comput. 2023, 1 (2023)

Li, D., Zhang, Z., Fang, Z., et al.: Ship detection with optical image based on CA-YOLO v3 Network. In: IEEE 3rd International Conference on Frontiers of Electronics, Information and Computation Technologies (ICFEICT), pp. 589–598 (2023)

Qian, L., Zheng, Y., Cao, J., et al.: Lightweight ship target detection algorithm based on improved YOLOv5s. J. Real-Time Image Proc. 21(1), 1–15 (2024)

Chen, J., Kao, S., He, H., et al.: Run, don't walk: chasing higher FLOPS for faster neural networks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 12021–12031 (2023)

Vadera, S., Ameen, S.: Methods for pruning deep neural networks. IEEE Access 10, 63280–63300 (2022)

Gholami, A., Kim, S., Dong, Z., et al.: A survey of quantization methods for efficient neural network inference. In: Low-Power Computer Vision, pp. 291–326. Chapman and Hall/CRC, Boca Raton (2022)

Gou, J., Yu, B., Maybank, S.J., et al.: Knowledge distillation: a survey. Int. J. Comput. Vision 129, 1789–1819 (2021)

Han, K., Wang, Y., Tian, Q., Guo, J., Xu, C., Xu, C.: GhostNet: More features from cheap operations. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1577–1586 (2020)

Yun, S., Han, D., Oh, S.J., et al.: CutMix: Regularization strategy to train strong classifiers with localizable features. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), pp. 6023–6032 (2019)

Zeng, G., Yu, W., Wang, R., et al.: Research on mosaic image data enhancement for overlapping ship targets. Preprint at arXiv:2105.05090 (2021)

Stemmer, U.: Locally private k-means clustering. J. Mach. Learn. Res. 22(1), 7964–7993 (2021)

Shao, Z., Wu, W., Wang, Z., Du, W., Li, C.: SeaShips: A large-scale precisely annotated dataset for ship detection. IEEE Trans. Multimedia 20(10), 2593–2604 (2018)

Gao, X., Sun, W.: Ship object detection in one-stage framework based on Swin-Transformer. In: Proceedings of the 2022 5th International Conference on Signal Processing and Machine Learning, pp. 189–196 (2022)

Zhu, L., Geng, X., Li, Z., Liu, C.: Improving YOLOv5 with attention mechanism for detecting boulders from planetary images. Remote Sens. 13(18), 3776 (2021)

Baltrušaitis, T., Ahuja, C., Morency, L.P.: Multimodal machine learning: a survey and taxonomy. IEEE Trans. Pattern Anal. Mach. Intell. 41(2), 423–443 (2018)

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant 61401127, Provincial Natural Science Foundation under Grant LH2022F038 and Cultivation Project of National Natural Science Foundation of Harbin Normal University under Grant XPPY202208.

Funding

National Natural Science Foundation of China, 61401127, Provincial Natural Science Foundation, LH2022F038, Cultivation Project of National Natural Science Foundation, XPPY202208.

Author information

Contributions

Cui Lang and Xiaoyan Yu wrote the main manuscript text and prepared all figures and tables. Xianwei Rong performed the data curation and reviewed and edited the original draft. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Lang, C., Yu, X. & Rong, X. LSDNet: a lightweight ship detection network with improved YOLOv7. J Real-Time Image Proc 21, 60 (2024). https://doi.org/10.1007/s11554-024-01441-9

DOI: https://doi.org/10.1007/s11554-024-01441-9