Abstract

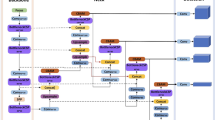

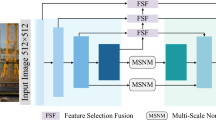

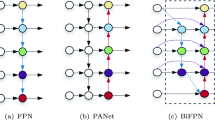

In current safety helmet detection methods, the feature information of small-scale helmets is lost after repeated convolutions in the network, which leads to missed detections. To address this, an improved YOLOv5 target detection algorithm is used to detect whether safety helmets are being worn. Firstly, a new small-scale detection layer is added to the head of the network for multi-scale feature fusion, increasing the receptive field of the feature map and improving the model's recognition of small targets. Secondly, a cross-layer connection is designed between the feature extraction network and the feature fusion network to enhance the fine-grained target features found in the shallow layers of the network. Thirdly, a coordinate attention (CA) module is added to the cross-layer connection to capture global image information and improve target localization. Finally, the Normalized Wasserstein Distance (NWD) replaces intersection over union (IoU) as the measure of similarity between bounding boxes. Experimental results show that the improved model achieves an mAP of 95.09% for safety helmet-wearing detection and performs well on small safety helmets of varying scale in construction scenes.
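The motivation for replacing IoU with NWD can be illustrated with a minimal sketch. It assumes the formulation from the normalized Gaussian Wasserstein distance paper by Wang et al.: each box (cx, cy, w, h) is modeled as a 2D Gaussian with mean (cx, cy) and covariance diag(w²/4, h²/4), the squared 2-Wasserstein distance between two such Gaussians reduces to a squared Euclidean distance between the vectors (cx, cy, w/2, h/2), and the similarity is exp(-sqrt(W²)/C). The function names `iou` and `nwd`, the constant `c=12.8`, and the sample boxes are assumptions chosen for illustration; the abstract does not state which constant is used or how NWD is integrated into the YOLOv5 loss.

```python
import math


def iou(box_a, box_b):
    """Intersection over union of two boxes given as (cx, cy, w, h)."""
    ax1, ay1 = box_a[0] - box_a[2] / 2, box_a[1] - box_a[3] / 2
    ax2, ay2 = box_a[0] + box_a[2] / 2, box_a[1] + box_a[3] / 2
    bx1, by1 = box_b[0] - box_b[2] / 2, box_b[1] - box_b[3] / 2
    bx2, by2 = box_b[0] + box_b[2] / 2, box_b[1] + box_b[3] / 2
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - inter
    return inter / union if union > 0 else 0.0


def nwd(box_a, box_b, c=12.8):
    """Normalized Wasserstein distance between two (cx, cy, w, h) boxes.

    `c` is a dataset-dependent normalizing constant; 12.8 is an assumed
    placeholder here, not a value taken from the abstract.
    """
    d_cx = box_a[0] - box_b[0]
    d_cy = box_a[1] - box_b[1]
    d_w = (box_a[2] - box_b[2]) / 2
    d_h = (box_a[3] - box_b[3]) / 2
    w2_squared = d_cx ** 2 + d_cy ** 2 + d_w ** 2 + d_h ** 2
    return math.exp(-math.sqrt(w2_squared) / c)


# Hypothetical 6x6-pixel boxes: a reference, a near miss, and a far miss.
reference = (10.0, 10.0, 6.0, 6.0)
near_miss = (17.0, 10.0, 6.0, 6.0)
far_miss = (25.0, 10.0, 6.0, 6.0)

for name, box in (("near", near_miss), ("far", far_miss)):
    print(name, "IoU:", iou(reference, box), "NWD:", round(nwd(reference, box), 4))
```

Neither candidate overlaps the reference box, so IoU is zero for both and cannot rank them, whereas NWD still assigns the nearer box a higher similarity. This is the property that makes a distribution-based measure attractive for small targets, where a shift of a few pixels can remove all overlap.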

Data availability

The datasets generated and analyzed during the current study are available on Kaggle: https://www.kaggle.com/datasets/andrewmvd/hard-hat-detection

Funding

This work was supported in part by the Chunhui Project of the Ministry of Education of China under Grants z2018087 and [2019]1383, and in part by the Science and Technology Achievements Transfer and Transformation Demonstration Project of Sichuan Province, China, under Grant 2020ZHCG0099.

Author information

Contributions

All authors contributed to the study's conception and design. Material preparation, data collection, and analysis were performed by G.D., W.X., and Y.Z. The first draft of the manuscript was written by G.D. and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript. Part of the new experiments and literature search were completed by Y.H.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Dong, G., Zhang, Y., Xie, W. et al. A safety helmet-wearing detection method based on cross-layer connection. J Real-Time Image Proc 21, 72 (2024). https://doi.org/10.1007/s11554-024-01437-5

DOI: https://doi.org/10.1007/s11554-024-01437-5