Abstract

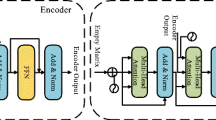

A lane detection algorithm based on lane shape prediction with transformers (LSTR) is designed to address three problems in current lane detection algorithms: feature extraction networks with a large number of parameters, low utilization of the original feature information, and the easy loss of detail and edge information. First, to reduce the number of parameters in the lane detection network and make it lightweight, ordinary convolution is replaced by ghost convolution (Ghost-Conv). Second, to make better use of the original feature information in the network, a self batch normalization (Self-BN) module is proposed that modifies the normalization so that more of the original feature information is retained, which improves lane detection accuracy. Finally, an efficient channel attention (ECA) mechanism is introduced to strengthen the extraction of lane detail and edge information and further improve detection accuracy. Experiments on the open-source TuSimple dataset show that, compared with the original network, the proposed algorithm reduces the number of parameters and the amount of computation by nearly half, increases detection speed by 33 FPS, raises detection accuracy by 0.96% to 97.11%, and reduces the false positive and false negative rates by 0.55% and 0.71%, respectively, meeting the real-time requirements of autonomous driving. The algorithm also shows clear advantages over other lane detection networks.
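Why replacing ordinary convolution with Ghost-Conv saves parameters can be seen with simple arithmetic. The sketch below counts weights for a Ghost module in the style of GhostNet (Han et al.): a primary convolution produces only a fraction of the output maps, and cheap depthwise filters generate the rest. The ratio `s = 2`, the cheap-kernel size `d = 3`, and the layer sizes in the example are assumptions for illustration, since the abstract does not say which settings the authors used.

```python
import math


def conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weight count of an ordinary k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def ghost_params(c_in: int, c_out: int, k: int, s: int = 2, d: int = 3) -> int:
    """Weight count of a GhostNet-style Ghost module (bias and BN ignored).

    A primary k x k convolution produces m = ceil(c_out / s) intrinsic maps;
    cheap d x d depthwise convolutions then generate (s - 1) * m "ghost" maps,
    one filter per ghost map, and the output is trimmed to c_out channels.
    """
    m = math.ceil(c_out / s)
    primary = c_in * m * k * k      # ordinary convolution, fewer output maps
    cheap = (s - 1) * m * d * d     # depthwise: one d x d filter per ghost map
    return primary + cheap


if __name__ == "__main__":
    ordinary = conv_params(256, 256, 3)
    ghost = ghost_params(256, 256, 3)
    print(ordinary, ghost, round(ordinary / ghost, 2))  # 589824 296064 1.99
```

With `s = 2` a single layer needs roughly half the weights of the convolution it replaces. This is consistent in magnitude with the near-halving reported above, although the paper's figure refers to the whole network rather than to one layer.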
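The ECA mechanism mentioned above comes from ECA-Net (Wang et al.). It reweights channels using global average pooling, a 1-D convolution across neighbouring channels, and a sigmoid gate. The following minimal pure-Python sketch assumes ECA-Net's default `gamma = 2`, `b = 1` rule for the adaptive kernel size. The feature map is represented as a list of flattened channels purely for illustration. The abstract does not state the authors' ECA settings or where the module sits in their network.

```python
import math
from typing import List


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive 1-D kernel size: nearest odd value of (log2(C) + b) / gamma."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca(x: List[List[float]], weights: List[float]) -> List[List[float]]:
    """Apply efficient channel attention.

    x: C channels, each a flattened H*W feature map.
    weights: 1-D kernel of odd length k (learned in a real network).
    """
    c, k = len(x), len(weights)
    pad = (k - 1) // 2
    # 1. Global average pooling: one descriptor per channel.
    y = [sum(ch) / len(ch) for ch in x]
    # 2. 1-D convolution across neighbouring channels (zero padding, no bias).
    z = []
    for i in range(c):
        acc = 0.0
        for j in range(k):
            idx = i + j - pad
            if 0 <= idx < c:
                acc += weights[j] * y[idx]
        z.append(acc)
    # 3. Sigmoid turns the responses into per-channel weights in (0, 1).
    a = [1.0 / (1.0 + math.exp(-v)) for v in z]
    # 4. Rescale every value of each channel by that channel's weight.
    return [[v * a[i] for v in ch] for i, ch in enumerate(x)]


if __name__ == "__main__":
    print(eca_kernel_size(256))  # 5
    x = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # 3 channels, 2 pixels each
    print(eca(x, [0.1, 0.2, 0.1]))
```

Because the only learned weights are the `k` kernel values, ECA adds very few parameters. This is what makes it a reasonable fit for a network that is otherwise being slimmed down.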
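The accuracy, false positive rate, and false negative rate quoted in the abstract are TuSimple benchmark metrics. The sketch below is a simplified per-image version. It assumes lanes are given as x-coordinates sampled at the same image rows, a fixed 20-pixel tolerance, and an 85% correct-point threshold for a lane to count as matched, following commonly used TuSimple settings. It also assumes each ground-truth lane is matched to its best-scoring prediction. The official TuSimple evaluation script differs in some details, so this is only an illustration of what the numbers measure.

```python
from typing import List, Sequence, Tuple

PIXEL_THRESH = 20.0  # assumed horizontal tolerance in pixels
MATCH_THRESH = 0.85  # assumed fraction of correct points for a lane match


def lane_accuracy(pred: Sequence[float], gt: Sequence[float],
                  thresh: float = PIXEL_THRESH) -> float:
    """Fraction of valid ground-truth points predicted within `thresh` pixels.

    A negative x marks a row where the lane is absent.
    """
    valid = [(p, g) for p, g in zip(pred, gt) if g >= 0]
    if not valid:
        return 0.0
    hits = sum(1 for p, g in valid if p >= 0 and abs(p - g) < thresh)
    return hits / len(valid)


def evaluate_image(preds: List[Sequence[float]],
                   gts: List[Sequence[float]]) -> Tuple[float, float, float]:
    """Return (accuracy, false positive rate, false negative rate) for one image."""
    accs = []
    matched_preds = set()
    fn = 0
    for gt in gts:
        scores = [lane_accuracy(p, gt) for p in preds]
        best = max(scores, default=0.0)
        accs.append(best)
        if best >= MATCH_THRESH:
            matched_preds.add(scores.index(best))  # best-scoring prediction
        else:
            fn += 1  # ground-truth lane with no good prediction
    fp = len(preds) - len(matched_preds)  # predictions that matched no lane
    accuracy = sum(accs) / len(gts) if gts else 0.0
    fp_rate = fp / len(preds) if preds else 0.0
    fn_rate = fn / len(gts) if gts else 0.0
    return accuracy, fp_rate, fn_rate


if __name__ == "__main__":
    gt = [100.0, 110.0, 120.0, -2.0]
    good = [105.0, 115.0, 125.0, -2.0]
    far = [300.0, 310.0, 320.0, 330.0]
    print(evaluate_image([good, far], [gt]))  # (1.0, 0.5, 0.0)
```

Dataset-level figures such as the 97.11% accuracy above are aggregated over the whole test set, so per-image results like these would need to be combined before any comparison.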



Acknowledgements

This work is supported by the National Natural Science Foundation of China (NSFC, Grant No. 51804250) and by the Fundamental Research Funds for the Central Universities in China.


Ethics declarations

Conflict of interest and data availability statement

The code used in this paper will not be released for the time being because it is needed for follow-up research; it will be made available to others once the overall project is completed.


Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yang, X., Ji, W., Zhang, S. et al. Lightweight real-time lane detection algorithm based on ghost convolution and self batch normalization. J Real-Time Image Proc 20, 69 (2023). https://doi.org/10.1007/s11554-023-01323-6
