Abstract

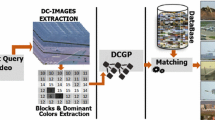

This paper presents a fast and effective technique for detecting and measuring the visual similarity of videos using compact fixed-length signatures. By generating a representative signature for each video shot, the proposed technique facilitates building real-time, scalable video matching and retrieval systems. The generated signature, the Statistical Dominant Colour Profile (SDCP), effectively encodes the spatio-temporal patterns of colours in a given shot, enabling robust real-time matching. Furthermore, the SDCP signature is engineered to better address the visual similarity problem through its relaxed representation of shot contents. Its compact, fixed-length form is the key to its high matching speed (>1000 fps) compared with current techniques that rely on exhaustive processing, such as dense trajectories. The SDCP signature encodes a given video shot with only 294 values, regardless of the shot length, which enables fast signature extraction and matching. To maximize the benefit of the proposed technique, compressed-domain videos are used as a case study, given their wide availability. Moreover, the proposed technique avoids full video decompression and operates on tiny frames rather than full-size decompressed frames; this is achieved by using the sequence of tiny DC-images in the MPEG compressed stream. Experiments on various standard and challenging datasets (e.g. UCF101, with 13k videos) show the technique's robust performance in terms of both retrieval ability and computational performance.
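The abstract's two efficiency claims, working on tiny DC-images instead of fully decoded frames and comparing fixed-length signatures whose size does not depend on shot length, can be illustrated with a minimal sketch. It assumes frames are already available as 2-D lists of 8-bit intensities and approximates each DC-image value by the mean of an 8×8 block (for an orthonormal 8×8 DCT the DC coefficient equals 8 times the block mean, so the two differ only by a constant scale); a real system would read the DC coefficients directly from the MPEG stream. The signature below is a hypothetical stand-in, a pooled intensity histogram, and not the SDCP itself, whose 294-value layout is not described here. The names `dc_image`, `toy_shot_signature`, `l1_distance` and `rank_shots` are illustrative only.

```python
from typing import Dict, List, Sequence

Plane = List[List[float]]  # one 2-D colour/luma plane, values in [0, 255]


def dc_image(frame: Plane, block: int = 8) -> Plane:
    """Approximate an MPEG DC-image: one value per block x block tile.

    A real decoder would read the DC coefficients straight from the stream;
    here the tile mean stands in for them (DC = 8 * mean for an orthonormal
    8x8 DCT). Partial tiles at the right and bottom edges are dropped.
    """
    rows, cols = len(frame) // block, len(frame[0]) // block
    area = block * block
    return [
        [
            sum(frame[r * block + i][c * block + j]
                for i in range(block) for j in range(block)) / area
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def toy_shot_signature(dc_frames: Sequence[Plane], bins: int = 16) -> List[float]:
    """Hypothetical fixed-length signature (NOT the SDCP).

    Pools a normalised intensity histogram over every DC-image in the shot,
    so the output always has `bins` values, however many frames there are.
    """
    hist = [0.0] * bins
    count = 0
    for img in dc_frames:
        for row in img:
            for v in row:
                idx = min(max(int(v * bins / 256), 0), bins - 1)
                hist[idx] += 1
                count += 1
    return [h / count for h in hist] if count else hist


def l1_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """L1 distance between two equal-length signatures: O(length) per pair."""
    if len(a) != len(b):
        raise ValueError("signatures must have the same length")
    return sum(abs(x - y) for x, y in zip(a, b))


def rank_shots(query: Sequence[float], database: Dict[str, List[float]]) -> List[str]:
    """Return database keys ordered from most to least similar to the query."""
    return sorted(database, key=lambda name: l1_distance(query, database[name]))


if __name__ == "__main__":
    def shot(value: float, n_frames: int) -> List[Plane]:
        # A synthetic shot of constant 16x16 frames -> 2x2 DC-images.
        return [dc_image([[value] * 16 for _ in range(16)]) for _ in range(n_frames)]

    db = {
        "dark": toy_shot_signature(shot(20.0, 3)),
        "bright": toy_shot_signature(shot(200.0, 5)),
    }
    query = toy_shot_signature(shot(25.0, 10))
    print(rank_shots(query, db))  # ['dark', 'bright']
```

Because every shot, short or long, collapses to the same number of values, the cost of comparing two shots is constant in shot length; this is the property the abstract credits for the reported matching speed, whatever the actual content of the 294 SDCP values.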

Notes

Percent change: \(\dfrac{|\text{Difference}|}{\text{Reference value}} \times 100\).

Average of the direct difference between both values across the first 10 ranks.

Percent change: \(\dfrac{|\text{Difference}|}{\text{Reference value}} \times 100\).
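The two quantities defined in the notes above can be written as small helper functions. This is a minimal sketch: the function names are hypothetical, and because the second note does not say whether the difference is signed or which value is subtracted from which, the signed difference `a[k] - b[k]` is an assumption.

```python
from typing import Sequence


def percent_change(value: float, reference: float) -> float:
    """Percent change as defined in the notes: (|difference| / reference) * 100."""
    if reference == 0:
        raise ValueError("reference value must be non-zero")
    return abs(value - reference) / reference * 100.0


def mean_rank_difference(a: Sequence[float], b: Sequence[float], ranks: int = 10) -> float:
    """Average of the direct difference a[k] - b[k] over the first `ranks` ranks.

    Assumption: the note does not specify the sign or the order of
    subtraction, so the signed difference a - b is used here.
    """
    if len(a) < ranks or len(b) < ranks:
        raise ValueError(f"need at least {ranks} values per series")
    return sum(x - y for x, y in zip(a[:ranks], b[:ranks])) / ranks


if __name__ == "__main__":
    print(percent_change(0.75, 0.5))  # 50.0
    print(mean_rank_difference([1.0] * 10, [0.5] * 10))  # 0.5
```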

About this article

Cite this article

Bekhet, S., Ahmed, A. Video similarity detection using fixed-length Statistical Dominant Colour Profile (SDCP) signatures. J Real-Time Image Proc 16, 1999–2014 (2019). https://doi.org/10.1007/s11554-017-0700-9

DOI: https://doi.org/10.1007/s11554-017-0700-9