Abstract

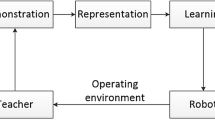

Learning from demonstration (LfD) is an appealing way to help robots learn new skills, and numerous papers have presented LfD methods that perform well in robotics. However, complex robot tasks that require carefully regulated path planning strategies remain an open problem. Contact or non-contact constraints in specific robot tasks make path planning more difficult, because the interaction between the robot and its environment is time-varying. In this paper, we focus on path planning for complex robot tasks within LfD and offer a new perspective for classifying imitation learning and inverse reinforcement learning methods, based on how they handle constraints and obstacle avoidance. Finally, we summarize these methods and present promising directions for robot applications and LfD theory.
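
To make the imitation-learning side of path planning concrete, the sketch below learns a one-dimensional path from a single demonstration and replays it toward a new goal, using a discrete dynamic movement primitive (DMP) in the style of Ijspeert, Nakanishi and Schaal. It is a minimal illustration under stated assumptions, not the classification proposed in this paper or any specific surveyed method: the class name `DMP1D`, the gains (`alpha_z=25`, `alpha_x=4`), the 20 Gaussian basis functions, and the use of locally weighted regression for the weights are all illustrative choices, and the contact constraints and obstacle avoidance this paper focuses on are deliberately left out.

```python
import math


def _diff(vals, dt):
    """Finite-difference derivative: central inside, one-sided at the ends."""
    n = len(vals)
    out = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        out.append((vals[hi] - vals[lo]) / ((hi - lo) * dt))
    return out


class DMP1D:
    """Hypothetical minimal discrete DMP for one degree of freedom (sketch only)."""

    def __init__(self, n_basis=20, alpha_z=25.0, alpha_x=4.0):
        self.n = n_basis
        self.alpha_z = alpha_z
        self.beta_z = alpha_z / 4.0  # critically damped spring-damper
        self.alpha_x = alpha_x
        # Basis centres evenly spaced in time, mapped into the phase variable x.
        self.c = [math.exp(-alpha_x * i / (n_basis - 1)) for i in range(n_basis)]
        # Widths set from the gap between neighbouring centres.
        self.h = [1.0 / (self.c[i + 1] - self.c[i]) ** 2 for i in range(n_basis - 1)]
        self.h.append(self.h[-1])
        self.w = [0.0] * n_basis

    def _psi(self, x):
        return [math.exp(-h * (x - c) ** 2) for h, c in zip(self.h, self.c)]

    def _phase(self, t):
        # Closed-form solution of the canonical system tau * dx/dt = -alpha_x * x.
        return math.exp(-self.alpha_x * t / self.tau)

    def fit(self, demo, dt):
        """Learn forcing-term weights from one demonstrated path sampled every dt."""
        self.tau = (len(demo) - 1) * dt
        self.y0, self.g = demo[0], demo[-1]
        yd = _diff(demo, dt)
        ydd = _diff(yd, dt)
        scale = self.g - self.y0
        num = [0.0] * self.n
        den = [0.0] * self.n
        for k, (y, v, a) in enumerate(zip(demo, yd, ydd)):
            x = self._phase(k * dt)
            # Forcing needed so the transformation system reproduces the demo.
            f_target = self.tau ** 2 * a - self.alpha_z * (
                self.beta_z * (self.g - y) - self.tau * v)
            s = x * scale
            for i, p in enumerate(self._psi(x)):
                num[i] += s * p * f_target
                den[i] += s * s * p
        # Locally weighted regression: one weight per basis function.
        self.w = [nu / (de + 1e-10) for nu, de in zip(num, den)]

    def rollout(self, goal=None, dt=0.001):
        """Euler-integrate the learned DMP toward `goal` (default: demo goal)."""
        g = self.g if goal is None else goal
        y, z = self.y0, 0.0
        path = [y]
        for k in range(int(round(self.tau / dt))):
            x = self._phase(k * dt)
            psi = self._psi(x)
            f = sum(p * w for p, w in zip(psi, self.w)) / (sum(psi) + 1e-10)
            f *= x * (g - self.y0)  # spatial scaling to the new goal
            dz = (self.alpha_z * (self.beta_z * (g - y) - z) + f) / self.tau
            dy = z / self.tau
            z += dz * dt
            y += dy * dt
            path.append(y)
        return path
```

As a usage example, fitting `DMP1D` to a minimum-jerk demonstration from 0 to 1 and then calling `rollout(goal=2.0)` rescales the learned forcing term by `g - y0`, so the reproduced path keeps the demonstrated shape while ending at the new goal. Extensions that add a coupling term to the transformation system are one common way such primitives are adapted for obstacle avoidance, but that is beyond this sketch.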

Additional information

This work was supported by the National Natural Science Foundation of China (Grant No. 91848202) and the Foundation for Innovative Research Groups of the National Natural Science Foundation of China (Grant No. 51521003).

About this article

Cite this article

Xie, Z., Zhang, Q., Jiang, Z. et al. Robot learning from demonstration for path planning: A review. Sci. China Technol. Sci. 63, 1325–1334 (2020). https://doi.org/10.1007/s11431-020-1648-4

DOI: https://doi.org/10.1007/s11431-020-1648-4