Abstract

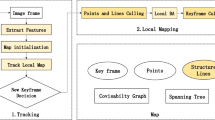

This paper presents a robust visual simultaneous localization and mapping (SLAM) system that leverages point and structural line features in dynamic man-made environments. The Manhattan world assumption is adopted: structural lines in such man-made environments provide rich geometric constraints, e.g., parallelism, which can therefore be used to rectify 3D map lines after initialization. To cope with dynamic scenarios, the proposed system is divided into four main threads: 2D dynamic object tracking, visual odometry, local mapping, and loop closing. The 2D tracker tracks moving objects and encloses them in bounding boxes, so that the image regions occupied by moving objects can be excluded and outlier point and line features can be effectively removed. To parameterize 3D lines, we use Plücker line coordinates in the initialization and projection processes, and the orthonormal representation in the unconstrained graph optimization process. The proposed system has been evaluated on both benchmark datasets and real-world scenarios, and it performs more robustly than existing state-of-the-art methods in most of the experiments.
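The two line parameterizations mentioned above can be illustrated with a small sketch. The abstract does not state the exact conventions, so the following assumes the common choices: Plücker coordinates (n, d) with n = p1 × p2 and d = p2 − p1 for a line through points p1 and p2, a world-to-camera pose p_c = R p_w + t, a pinhole camera with intrinsics fx, fy, cx, cy, and the orthonormal representation of Bartoli and Sturm. All function names are hypothetical, and this is not the authors' implementation.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def norm(a: Vec3) -> float:
    return math.sqrt(dot(a, a))


def matvec(m: Mat3, v: Vec3) -> Vec3:
    return tuple(dot(tuple(row), v) for row in m)


def plucker_from_points(p1: Vec3, p2: Vec3) -> Tuple[Vec3, Vec3]:
    """Plücker coordinates of the line through p1 and p2 (assumed convention):
    n = p1 x p2 is the normal of the plane containing the line and the origin,
    d = p2 - p1 is the line direction; by construction n . d = 0."""
    n = cross(p1, p2)
    d = tuple(b - a for a, b in zip(p1, p2))
    return n, d


def transform_line(R: Mat3, t: Vec3, n: Vec3, d: Vec3) -> Tuple[Vec3, Vec3]:
    """Move a world-frame Plücker line into the camera frame (p_c = R p_w + t):
    d_c = R d and n_c = R n + t x (R d). The map is linear in (n, d)."""
    d_c = matvec(R, d)
    n_c = tuple(a + b for a, b in zip(matvec(R, n), cross(t, d_c)))
    return n_c, d_c


def project_line(n_c: Vec3, fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Image line l = K_L n_c with K_L = [[fy,0,0],[0,fx,0],[-fy*cx,-fx*cy,fx*fy]].
    Only the normal part n_c is needed for projection."""
    return (fy * n_c[0],
            fx * n_c[1],
            -fy * cx * n_c[0] - fx * cy * n_c[1] + fx * fy * n_c[2])


def point_line_distance(l: Vec3, u: float, v: float) -> float:
    """Signed pixel distance from (u, v) to image line l, e.g. for the
    reprojection residual of a detected segment endpoint."""
    return (l[0] * u + l[1] * v + l[2]) / math.hypot(l[0], l[1])


def to_orthonormal(n: Vec3, d: Vec3) -> Tuple[Mat3, List[List[float]]]:
    """Orthonormal representation (U, W): U in SO(3) has columns
    n/|n|, d/|d|, (n x d)/|n x d|; W in SO(2) is built from (|n|, |d|).
    This simplified version rejects lines through the origin (n = 0)."""
    nn, nd = norm(n), norm(d)
    if nn == 0.0 or nd == 0.0:
        raise ValueError("degenerate line: n or d is zero")
    m = cross(n, d)
    nm = norm(m)
    u1 = tuple(x / nn for x in n)
    u2 = tuple(x / nd for x in d)
    u3 = tuple(x / nm for x in m)
    U = [[u1[i], u2[i], u3[i]] for i in range(3)]  # columns u1, u2, u3
    s = math.hypot(nn, nd)
    w1, w2 = nn / s, nd / s
    W = [[w1, -w2], [w2, w1]]
    return U, W


def from_orthonormal(U: Mat3, W: List[List[float]]) -> Tuple[Vec3, Vec3]:
    """Recover Plücker coordinates up to scale: n = w1 u1, d = w2 u2."""
    w1, w2 = W[0][0], W[1][0]
    u1 = tuple(U[i][0] for i in range(3))
    u2 = tuple(U[i][1] for i in range(3))
    return tuple(w1 * x for x in u1), tuple(w2 * x for x in u2)
```

For example, the line through (-1, 1, 5) and (1, 1, 5), seen from a camera at the world origin with fx = fy = 500, cx = 320, cy = 240, projects to the image row v = 340, so a detected endpoint at (100, 350) has a residual of 10 pixels. Plücker coordinates keep transformation and projection linear, while (U, W) has exactly the four degrees of freedom of a 3D line, which is why such a representation suits unconstrained optimization; the minimal update on U and W used inside a graph optimizer is not shown here.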


Additional information

This work was supported by the Institute for Guo Qiang of Tsinghua University (Grant No. 2019GQG1023), the National Natural Science Foundation of China (Grant No. 61873140), and the Independent Research Program of Tsinghua University (Grant No. 2018Z05JDX002).

About this article

Cite this article

Liu, J., Meng, Z. & You, Z. A robust visual SLAM system in dynamic man-made environments. Sci. China Technol. Sci. 63, 1628–1636 (2020). https://doi.org/10.1007/s11431-020-1602-3
