Abstract

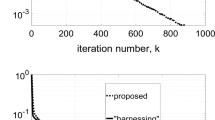

In this paper, we consider the distributed optimization problem over undirected connected graphs, where the goal is to minimize a global objective function formed by the sum of the agents’ local smooth and strongly convex objective functions. Several distributed accelerated algorithms have been proposed in the literature to solve this problem. We provide insights into these algorithms from an ordinary differential equation (ODE) point of view. Specifically, we first derive an equivalent second-order ODE, which is the exact limit of these algorithms as the step-size tends to zero. Moreover, for quadratic objective functions, we show that the solution of the resulting ODE converges exponentially to the unique global optimal solution. The theoretical results are validated and illustrated by numerical simulations.
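The abstract does not state the ODE itself. The following is therefore only a minimal sketch under stated assumptions. It does not reproduce the paper's ODE or any specific accelerated algorithm. It assumes scalar quadratic local objectives f_i(s) = a_i s^2 / 2 - b_i s with a_i > 0, a fixed undirected connected graph with Laplacian L, and A = diag(a_1, ..., a_n). It then integrates a hypothetical second-order ODE, x'' + (A + L) x' + L^2 x = 0, obtained by differentiating the primal-dual gradient flow x' = -(A x - b) - L x - L v, v' = L x with v(0) = 0. The run uses classical fourth-order Runge-Kutta. Every agent's state should approach the common minimizer x* = (sum_i b_i) / (sum_i a_i). The function name `simulate_second_order_ode`, the graph and all numerical values are illustrative choices.

```python
import numpy as np


def simulate_second_order_ode(a, b, laplacian, x0, t_final=300.0, dt=0.02):
    """Integrate x'' + (A + L) x' + L^2 x = 0 with classical RK4.

    Hypothetical illustrative ODE (not the one derived in the paper), obtained
    by differentiating the primal-dual flow
        x' = -(A x - b) - L x - L v,   v' = L x,   v(0) = 0,
    for local quadratics f_i(s) = 0.5 * a_i * s**2 - b_i * s.
    """
    A = np.diag(a)
    damping = A + laplacian              # first-order (friction) term
    stiffness = laplacian @ laplacian    # L^2 penalizes disagreement between agents
    x = np.asarray(x0, dtype=float).copy()
    u = b - damping @ x                  # x'(0) implied by the flow with v(0) = 0

    def rhs(x, u):
        # State (x, u) with u = x'; returns the pair (x', u').
        return u, -damping @ u - stiffness @ x

    for _ in range(int(round(t_final / dt))):
        k1x, k1u = rhs(x, u)
        k2x, k2u = rhs(x + 0.5 * dt * k1x, u + 0.5 * dt * k1u)
        k3x, k3u = rhs(x + 0.5 * dt * k2x, u + 0.5 * dt * k2u)
        k4x, k4u = rhs(x + dt * k3x, u + dt * k3u)
        x = x + dt / 6.0 * (k1x + 2 * k2x + 2 * k3x + k4x)
        u = u + dt / 6.0 * (k1u + 2 * k2u + 2 * k3u + k4u)
    return x


# Four agents on a cycle graph (undirected and connected).
laplacian = np.array([[ 2, -1,  0, -1],
                      [-1,  2, -1,  0],
                      [ 0, -1,  2, -1],
                      [-1,  0, -1,  2]], dtype=float)
a = np.array([1.0, 2.0, 3.0, 4.0])    # local curvatures (strong convexity moduli)
b = np.array([1.0, 0.0, 2.0, -1.0])
x_star = b.sum() / a.sum()             # minimizer of sum_i f_i: 2 / 10 = 0.2

x_final = simulate_second_order_ode(a, b, laplacian, x0=np.zeros(4))
print(x_star, x_final)                 # every entry of x_final should be close to x_star
```

The initial velocity is what singles out the optimum. Along the consensus direction this ODE has a continuum of equilibria. The quantity sum_i a_i x_i + sum_i x_i' is conserved, and it is fixed by x'(0) = b - (A + L) x(0), which pins the limit to x*. Runge-Kutta methods preserve linear invariants, so the discretization does not shift that limit.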

Additional information

This work was supported by the National Natural Science Foundation of China (Grant Nos. 91748112, 61991403, 61991404, and 61991400).

Cite this article

Chen, R., Yang, T. & Chai, T. Distributed accelerated optimization algorithms: Insights from an ODE. Sci. China Technol. Sci. 63, 1647–1655 (2020). https://doi.org/10.1007/s11431-020-1596-8
