Abstract

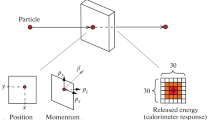

In this paper, we further investigate how to alleviate limit cycling behavior in training generative adversarial networks (GANs) through the proposed predictive centripetal acceleration algorithm (PCAA). Specifically, we first derive upper and lower complexity bounds of PCAA for a general bilinear game, with a last-iterate convergence rate that notably improves upon previous results. Then, we combine PCAA with the adaptive moment estimation algorithm (Adam) to propose PCAA-Adam, a method for the practical training of GANs that is intended to enhance their generalization capability. Finally, we validate the effectiveness of the proposed algorithm through experiments on bilinear games, multivariate Gaussian distributions, and the CelebA dataset.
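As a minimal illustration of the cycling issue mentioned above (not of PCAA itself, whose update rule is not stated in this abstract), the sketch below runs plain simultaneous gradient descent-ascent on the scalar bilinear game f(x, y) = xy. The function name `simultaneous_gda`, the step size, and the starting point are assumptions chosen for illustration only.

```python
import math

def simultaneous_gda(x, y, step, iters):
    """Simultaneous gradient descent-ascent on f(x, y) = x * y.

    x minimizes f and y maximizes f. Returns the distance of each
    iterate from the unique equilibrium (0, 0).
    """
    dists = [math.hypot(x, y)]
    for _ in range(iters):
        gx, gy = y, x  # df/dx = y, df/dy = x
        # Both players update from the same (old) point.
        x, y = x - step * gx, y + step * gy
        dists.append(math.hypot(x, y))
    return dists

# Hypothetical settings: start at (1, 1), step size 0.1, 100 iterations.
dists = simultaneous_gda(x=1.0, y=1.0, step=0.1, iters=100)
print(f"initial distance: {dists[0]:.4f}, final distance: {dists[-1]:.4f}")
```

On this game the iterates rotate around the equilibrium instead of approaching it: each update multiplies the distance to (0, 0) by sqrt(1 + step^2), so with a fixed step size the trajectory slowly spirals outward rather than converging.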

Acknowledgements

This work was supported by the Major Program of the National Natural Science Foundation of China (Grant Nos. 11991020 and 11991024), the Team Project of Innovation Leading Talent in Chongqing (Grant No. CQYC20210309536), the NSFC-RGC (Hong Kong) Joint Research Program (Grant No. 12261160365), and the Scientific and Technological Research Program of Chongqing Municipal Education Commission (Grant No. KJQN202300528).

Cite this article

Li, K., Tang, L. & Yang, X. Alleviating limit cycling in training GANs with an optimization technique. Sci. China Math. 67, 1287–1316 (2024). https://doi.org/10.1007/s11425-023-2296-5

DOI: https://doi.org/10.1007/s11425-023-2296-5

Keywords

- GANs

- general bilinear game

- predictive centripetal acceleration algorithm

- lower and upper complexity bounds

- PCAA-Adam