Abstract

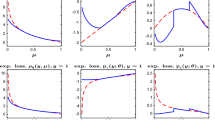

In this paper, we consider unified optimal subsampling estimation and inference for a low-dimensional parameter of main interest in the presence of a nuisance parameter, for low- and high-dimensional generalized linear models (GLMs) with massive data. We first present a general subsampling decorrelated score function that reduces the influence of less accurate nuisance parameter estimation with a slow convergence rate. The consistency and asymptotic normality of the resulting subsample estimator from a general decorrelated score subsampling algorithm are established, and two optimal subsampling probabilities are derived under the A- and L-optimality criteria to downsize the data volume and reduce the computational burden. The proposed optimal subsampling probabilities provably improve the asymptotic efficiency over the subsampling schemes in low-dimensional GLMs and perform better than the uniform subsampling scheme in high-dimensional GLMs. A two-step algorithm is further proposed for implementation, and the asymptotic properties of the corresponding estimators are also given. Simulations show satisfactory performance of the proposed estimators, and two applications to the census income and Fashion-MNIST datasets also demonstrate their practical applicability.
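
To make the two-step idea concrete, the following is a minimal sketch of generic two-step subsampling for plain low-dimensional logistic regression. It is not the paper's decorrelated score algorithm: the nuisance parameter and the decorrelation step are omitted, and the probabilities used here, proportional to |y_i - p_i| times the norm of x_i, are a commonly used L-optimality-style choice from the optimal subsampling literature, assumed for illustration. The function names `weighted_logistic_mle` and `two_step_subsample` and the sizes `r0` and `r` are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def weighted_logistic_mle(X, y, w, n_iter=50, tol=1e-10):
    """Newton-Raphson for a weighted logistic log-likelihood (illustrative helper)."""
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = sigmoid(X @ beta)
        grad = X.T @ (w * (y - p))                       # weighted score
        hess = (X * (w * p * (1.0 - p))[:, None]).T @ X  # weighted information
        step = np.linalg.solve(hess, grad)
        beta = beta + step
        if np.max(np.abs(step)) < tol:
            break
    return beta


def two_step_subsample(X, y, r0, r, rng):
    """Pilot step with uniform sampling, then a second step with
    data-dependent probabilities. Assumption: logistic model, no nuisance
    parameter, no decorrelation; only the second-step sample is used."""
    n = X.shape[0]

    # Step 1: uniform pilot subsample and pilot estimate.
    idx0 = rng.choice(n, size=r0, replace=True)
    beta0 = weighted_logistic_mle(X[idx0], y[idx0], np.ones(r0))

    # Step 2: probabilities proportional to |y_i - p_i(beta0)| * ||x_i||.
    score = np.abs(y - sigmoid(X @ beta0)) * np.linalg.norm(X, axis=1)
    probs = score / score.sum()
    idx1 = rng.choice(n, size=r, replace=True, p=probs)

    # Inverse-probability weights correct for the non-uniform sampling.
    w = 1.0 / (n * probs[idx1])
    beta = weighted_logistic_mle(X[idx1], y[idx1], w)
    return beta, probs
```

Under these assumptions, calling `two_step_subsample(X, y, r0=500, r=2000, rng=np.random.default_rng(0))` on a full data matrix `X` and binary response `y` returns the subsample estimate together with the sampling probabilities. The inverse-probability weights are what keep the weighted score centered at the full-data score despite the non-uniform draw.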

Acknowledgements

This work was supported by the Fundamental Research Funds for the Central Universities, National Natural Science Foundation of China (Grant No. 12271272) and the Key Laboratory for Medical Data Analysis and Statistical Research of Tianjin. The authors are grateful to the referees for their insightful comments and suggestions on this article, which have led to significant improvements.

Cite this article

Gao, J., Wang, L. & Lian, H. Optimal decorrelated score subsampling for generalized linear models with massive data. Sci. China Math. 67, 405–430 (2024). https://doi.org/10.1007/s11425-022-2057-8

DOI: https://doi.org/10.1007/s11425-022-2057-8