Abstract

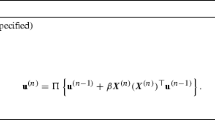

Principal component analysis (PCA) has been widely used in analyzing high-dimensional data. It converts a set of observed data points of possibly correlated variables into a set of linearly uncorrelated variables via an orthogonal transformation. To handle streaming data and reduce the complexity of PCA, (subspace) online PCA iterations were proposed, which update the orthogonal transformation iteratively by taking one observed data point at a time. Existing works on the convergence of (subspace) online PCA iterations mostly focus on the case where the samples are almost surely uniformly bounded. In this paper, we analyze the convergence of a subspace online PCA iteration under a more practical assumption and obtain a nearly optimal finite-sample error bound. Our convergence rate almost matches the minimax information lower bound. We prove that the convergence is nearly global in the sense that the subspace online PCA iteration converges with high probability for random initial guesses. This work also leads to a simpler proof of a recent result on online PCA for the first principal component only.
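To illustrate the kind of iteration the abstract describes, the following is a minimal sketch of a classical Oja-type subspace update with QR re-orthonormalization, started from a random initial guess. It is not taken from the paper and may differ from the exact iteration analyzed there. The function name `subspace_oja`, the constant step size, and the synthetic Gaussian data with a diagonal covariance are all assumptions made for illustration.

```python
import numpy as np


def subspace_oja(samples, k, step_size, rng):
    """Sketch of an Oja-type subspace iteration (hypothetical helper).

    samples   : array of shape (n, d), processed one row at a time
    k         : dimension of the principal subspace to estimate
    step_size : constant step size (an assumption; decaying schedules are common)
    rng       : numpy random Generator used for the random initial guess
    """
    d = samples.shape[1]
    # Random initial guess: orthonormal basis of a random k-dimensional subspace.
    U, _ = np.linalg.qr(rng.standard_normal((d, k)))
    for x in samples:
        # Stochastic update driven by a single data point x:
        # U <- U + step_size * x x^T U, without ever forming the d-by-d matrix x x^T.
        U = U + step_size * np.outer(x, x @ U)
        # Re-orthonormalize so the columns remain an orthonormal basis.
        U, _ = np.linalg.qr(U)
    return U


# Synthetic stream (assumed setup): zero-mean Gaussian samples whose covariance
# is diagonal, so the top-2 principal subspace is spanned by the first two axes.
rng = np.random.default_rng(0)
eigenvalues = np.array([10.0, 8.0, 0.1, 0.1, 0.1, 0.1])
samples = rng.standard_normal((4000, eigenvalues.size)) * np.sqrt(eigenvalues)

U = subspace_oja(samples, k=2, step_size=0.005, rng=rng)

# Sine of the largest principal angle between span(U) and the true subspace:
# the spectral norm of the part of U lying outside the first two coordinates.
err = np.linalg.norm(U[2:, :], 2)
print("sin of largest principal angle:", err)
```

Each step touches only the current sample and the d-by-k basis, which is what makes this style of update suitable for streaming data. The error measure used here, the sine of the largest principal angle, is one common way to compare subspaces.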

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 11901340), the National Science Foundation of USA (Grant Nos. DMS-1719620 and DMS-2009689), the Ministry of Science and Technology of Taiwan, the Taiwanese Center for Theoretical Sciences, and the ST Yau Centre at the Taiwan Chiao Tung University. The authors are indebted to the referees for their constructive comments and suggestions, which improved the presentation.

About this article

Cite this article

Liang, X., Guo, ZC., Wang, L. et al. Nearly optimal stochastic approximation for online principal subspace estimation. Sci. China Math. 66, 1087–1122 (2023). https://doi.org/10.1007/s11425-021-1972-5

DOI: https://doi.org/10.1007/s11425-021-1972-5

Keywords

- principal component analysis

- principal component subspace

- stochastic approximation

- high-dimensional data

- online algorithm

- finite-sample analysis