Abstract

Artificial intelligence (AI) competence in education is a set of skills that enable teachers to ethically and responsibly develop, apply, and evaluate AI for learning and teaching processes. While AI competence is becoming a key competence for teachers, research on the acceptance and use of AI in classroom practice with a specific focus on teachers' AI-related competencies is scarce. This study builds on an AI competence model and investigates predispositions of AI competence among N = 480 teachers in vocational schools. Results indicate that AI competence can be modeled as a combination of six competence dimensions. Findings suggest that the different competence dimensions are currently unequally developed. Pre- and in-service teachers need professional learning opportunities to develop AI competence.


Introduction

Artificial intelligence (AI) is affecting more and more fields of the digitalized world, such as business (Ng et al., 2021), arts (Epstein et al., 2020), communication (Androutsopoulou et al., 2019), and science (Sharpless & Kerlavage, 2021). The importance of AI competence as a future skill for all citizens is underlined by its inclusion in the latest edition of the European DigComp 2.2 framework (Vuorikari et al., 2022). At the same time, AI is increasingly used in education (De Laat et al., 2020; Ifenthaler & Seufert, 2022; Zawacki-Richter et al., 2019). AI in education is defined as a combination of "machine learning, algorithm productions, and natural language processing" (Akgun & Greenhow, 2021, p. 1) with the potential to reduce teachers' workload, contextualize students' learning, (semi-)automate assessments (Ifenthaler et al., 2018), and provide intelligent tutoring systems (Ifenthaler et al., 2024). Accordingly, teachers apply intelligent tutoring systems and adaptive learning environments to support the individual learning pathways of their students (Castro-Schez et al., 2021; Ifenthaler & Schumacher, 2023; Park et al., 2023). Educators can use learning analytics to identify at-risk students and initiate personalized support (Ifenthaler, 2015). Furthermore, educators can benefit from AI systems through automated scoring tools (Ludwig et al., 2021) or recommender systems (Hemmler et al., 2023). However, a responsible and effective application of AI in education depends on competent teachers who are proficient in the different facets of AI (Caena & Redecker, 2019; Ng et al., 2021; Seufert et al., 2021). While teachers seem open to working with AI in schools, the few existing projects are based on individual initiatives rather than on changes in the organizational learning culture (Roppertz, 2020). Furthermore, Rietz and Völmicke (2020) identify a lack of individual learner support: their analysis shows that newly developed tools focus mostly on organizational tasks in school administration.

This study's objective is to analyze the dimensional structure of an AI competence model and to develop an evidence-based instrument for assessing teachers' self-rated AI competence. Assessing teachers' AI competencies is a crucial step in identifying teachers' readiness to deal with the challenges and opportunities presented by the introduction of AI technology into education. On the individual level, such an assessment enables teachers to reflect on their existing knowledge, attitudes, and skills while pinpointing opportunities to improve their teaching capabilities in an increasingly digital society (Nielsen et al., 2015). From an organizational perspective, schools and administrative decision-makers can establish further teacher training opportunities based on an analysis of teachers' competencies. Furthermore, the results of competence assessments can inform decisions about teacher education programs at universities and at the political level (Ifenthaler et al., 2024). The AI competence model was conceptualized based on experts' understanding of the AI field and consists of six dimensions. Further, the study aimed to empirically confirm the robustness of the AI competence model to support the development of professional learning opportunities for the AI competence of pre-service and in-service teachers.

Background

Current research on the acceptance and use of AI in classroom practice with a specific focus on teachers' AI competence is scarce. Furthermore, existing studies on AI competence lack a holistic view of AI competence (Delcker et al., 2024). Still, the existing literature on teachers' AI competence identifies different fields of expertise, which can be summarized in distinct competence dimensions. Teachers are expected to demonstrate basic knowledge of the functionality of AI (Attwell et al., 2020). For example, they must be able to identify whether an application uses AI (Long & Magerko, 2020). Teachers need to be aware of data security risks and of how they can ensure data privacy when collecting, analyzing, and managing data in education (Papamitsou et al., 2021). Teachers must identify AI's potential and risks in education, society, and the workplace (Attwell et al., 2020).

Additionally, they must be aware of the competencies AI requires (Massmann & Hofstetter, 2020). Furthermore, teachers should be interested in AI, be open to trying new AI tools, critically reflect on the possibilities of AI, and become active participants in AI implementation processes. Teachers need to be able to deploy AI tools in their instructional design, and they need the competence to teach about AI (Gupta & Bhaskar, 2020; Zhang & Aslan, 2021). These competencies have to be accompanied by ongoing teacher professionalization and training, including teachers' ability to further educate themselves about AI through professional networks and to implement AI in administrative processes (Al-Zyoud, 2020; Butter et al., 2014).

Current AI competence frameworks differ in how they combine these fields of expertise. Huang (2021) proposes a framework that emphasizes specific AI-related knowledge such as machine learning, robotics, and programming in combination with more general key competencies (e.g., self-learning and teamwork). In contrast, Kim et al. (2021) base their model on AI knowledge, AI skills, and AI attitudes, underlining the importance of critical reflection for ethical AI implementation. Sanusi et al. (2022) follow this idea and implement ethics of AI as a competence connecting the other parts of their model, namely learning, team, and knowledge competence. Further, in designing and implementing AI systems in the context of education, considering and complying with ethical norms and values is of utmost importance (Heil & Ifenthaler, 2024). Richards and Dignum (2019) proposed the so-called ART (Accountability, Responsibility, and Transparency) principles as the foundations of AI systems. Algorithms and data need to allow accountability for the decisions made by an agent and reflect the organization's moral values.

Furthermore, a clear chain of responsibility concerning the involved stakeholders must be evident. Finally, regarding the data and algorithms implemented, the AI system needs to be developed in a way that provides insight into its mode of operation. These ethical considerations are paired with legal regulations and frameworks. Countries and regions have implemented data protection laws to prevent data misuse, such as the European General Data Protection Regulation (GDPR) or the California Consumer Privacy Act (CCPA). Accordingly, knowledge about the specific regulations and their application in teaching and learning has to be part of a holistic framework of teachers' AI competence.

The proposed frameworks form a valuable basis for identifying and modeling general AI competencies. Still, multiple shortcomings hinder using one of these frameworks directly as a blueprint for assessing teachers' AI competencies. Firstly, the frameworks do not sufficiently address teachers' ability to include AI in their teaching and learning practice or their perspective on the implications AI might have for education in general (Tuomi, 2022). Secondly, these frameworks propose theoretical considerations but do not deliver actionable measurement instruments. In contrast, SELFIEforTEACHERS (Economou, 2023) is designed as a tool for teachers to self-reflect on their perceived digital competencies and is based on the DigCompEdu framework (European Commission: Joint Research Centre et al., 2017). SELFIEforTEACHERS covers a wide variety of digital competencies, but AI technology is barely represented in the tool.

Laupichler et al. (2023), as well as Ng et al. (2021), underline the need for instruments that offer a holistic but actionable approach to AI competence measurement. On the one hand, published instruments are often too general, resulting in extensive questionnaires that are unable to capture the numerous constructs related to AI competence in detail. On the other hand, some instruments are too detailed and only collect data on very specific components of AI competence, such as attitudes (Sindermann et al., 2021) or anxiety (Wang & Wang, 2022) towards AI technology. Furthermore, Laupichler et al. (2023) point out that some other instruments might only be valid for certain courses, such as the one developed by Dai et al. (2020), who proposed an instrument to measure the influence of an AI course on students' anxiety.

The instrument presented in this paper has been developed to assess perceived AI competence from a practical perspective. In contrast to long instruments such as SELFIEforTEACHERS, it focuses on perceived AI competence and does not include items on more general digital competences. Additionally, the instrument covers various constructs associated with perceived AI competence, and their relationships, instead of focusing on a single construct. School leaders and educators can use it in teacher training to collect data about the perceived AI competence of in-service and pre-service teachers.

The instrument presented in this paper follows the same principle as other instruments currently being developed to assess AI competence (Laupichler et al., 2023; Sindermann et al., 2021; Wang & Wang, 2022): It collects data on teachers' perceived competencies, not results of performance tasks (Schoenfeld, 2010). Although perceived competences clearly differ from a person's actual competences, the two are related (Arnold, 1985), and measuring perceived competences is a common approach to assessing teachers' competences (Sumaryanta et al., 2018).

Pilot study

A pilot study was conducted to explore possible additions and adaptations to the existing frameworks of AI competence. Qualitative interviews were conducted with different stakeholders in the educational context as well as with experienced stakeholders from the field of ICT. In total, N = 35 stakeholders took part in the study from April until May 2021. Of these, 15 participants were in-service teachers, 9 worked as instructors in training companies, and 11 worked in various ICT-related occupations, such as software development, IT consulting, and project management. All participants were selected based on their position and field of expertise within their respective organizations and had prior knowledge about AI within their field. An interview guideline was used to structure the interviews. The first part of the guideline contains questions regarding the participants' demographic data. The second part focuses on AI competencies in a pedagogical context and on which dimensions might belong to AI competence. These questions were guided by the results of the literature review. In the last part, the participants were asked to rank the different components of AI competence according to their perceived importance. The recorded interviews were transcribed in Microsoft Word and imported into MAXQDA for further analysis, following Kuckartz's content-structuring analysis method (Kuckartz & Rädiker, 2023).

Based on an in-depth systematic literature review and the results of the analysis of the expert interviews, the following six dimensions of AI competence for teachers have been identified:

(1) Theoretical Knowledge about AI (TH): Teachers need to know the difference between AI and traditional computer programs and how AI can be used. More precisely, teachers need to be able to identify future fields of application for AI. This requires a basic understanding of different AI technologies, such as machine learning, deep learning, data mining, or artificial neural networks.

(2) Legal Framework and Ethics (LF): Teachers need to be able to handle data-related ethical considerations, especially when working with student data. They must be aware of the challenges that can arise for fairness, equality, and transparency when AI is used. This enables them to prevent discrimination and evaluate the results of AI technology. Teachers have to ensure data protection under local laws, such as the GDPR in Europe, at all times.

(3) Implications of AI (IP): Teachers need to identify the challenges and potentials of AI in education, society, and the workplace. AI changes the competencies required to be a capable citizen. In education, different forms of learning might occur. Society and the workplace might be at risk of alienation. Not every problem can be solved or approached with AI. Competent teachers have to factor these thoughts into their practice.

(4) Attitude toward AI (AT): Teachers have to be open to AI and engage with AI. They have to keep an open mind to identify use cases for new technology and potentials for their students. It is important for teachers to critically reflect on their own beliefs about and their handling of AI.

(5) Teaching and Learning with AI (TL): Teachers need to be able to implement AI into their teaching. This includes AI as a general topic, AI for individual or cooperative learning, or AI as an assessment tool. In addition, they have to identify how AI affects education processes, focusing on the possible cooperation of humans and machines. Furthermore, teachers need to act as role models for the application of AI.

(6) Ongoing Professionalization (PF): Teachers must understand the importance of continuing professionalization. AI has to be recognized as a quickly evolving field, which makes continuous, demand-driven training necessary. This includes forming a professional network with colleagues and with university and industry partners. Furthermore, the implementation of AI into organizational processes is subsumed under this dimension.

In summary, AI competence in the context of education is a set of skills that enable teachers to ethically and responsibly develop, apply, and evaluate AI for learning and teaching processes. Research shows that the relationships between different competence fields are a key factor in investigating teachers' knowledge and skills (Blömeke et al., 2016; Schoenfeld, 2010). Systematic reviews in the field of AI literacy and competence emphasize the need for the holistic, multidimensional approach chosen in this study (Knoth et al., 2024; Sperling et al., 2024). The following example illustrates the interrelationship between these fields: a teacher who wants to use AI in her class must be open to its use (AT) to identify parts of her teaching that could benefit from AI. She then decides to use AI to grade her students' papers automatically. To do so, she needs to understand how an AI tool might use different techniques to fulfill that task (TH). Simultaneously, she needs to be aware of the ethical and legal considerations that play a role in automated grading (LF). She also needs to recognize the implications (IP) this usage might have for her workplace, such as a more efficient use of her working hours. As she is not very experienced with AI, she decides to complete an online training program (PF). Once she has collected all the relevant information and set up her AI tool, she decides to inform her students about the process and how she wants to include it in her teaching and learning (TL).

Current study

Previous research has shown that perceived AI competence can be measured with longer, more general instruments (Caena & Redecker, 2019). In addition, specific constructs belonging to AI literacy, such as attitude or anxiety, can be measured in more detail (Sindermann et al., 2021; Wang & Wang, 2022). This study aimed to establish a scale that measures teachers' perceived AI competencies and confirms the six dimensions of the AI competence model without focusing on a single dimension or requiring a lengthy questionnaire.

The guiding research questions were:

RQ 1): Are the items of the perceived AI competence instrument a fitting representation of the underlying factors?

RQ 2): How do the six factors in the model represent the overall perceived AI competence?

Answering these research questions is an important step toward validating the developed instrument and, therefore, ensuring its practical applicability.

Method

Participants

Teachers at vocational schools in Germany were contacted via publicly available email addresses to participate in the online survey. The focus on vocational schools is rooted in the heterogeneity of the German school system. The different types of schools, such as pre-schools, elementary schools, high schools, and vocational schools, lead to a wide variety in the competence of the teaching personnel, both between and within these schools. While the instrument does not focus on a specific type of school, sampling from a single school type removes school type as a factor influencing the perceived competencies of the surveyed teachers (Pfost & Artelt, 2014; Rohm et al., 2021). The final convenience sample included N = 480 participants (47% female, 53% male). Their mean age was 39 years (SD = 11.36). The average work experience of the participants was ten years (SD = 5.43).

Participation was voluntary, and no incentives such as money, vouchers, or sweepstakes were offered. All procedures performed in this study involving human participants followed the ethical standards of the institutional/national research committee.

Instrument

The AICO_edu (AI Competence Educators) questionnaire was developed based on the six dimensions of the perceived AI competence model presented above. Each dimension was captured through six to eight items (TH: 8; LF: 6; IP: 8; AT: 8; TL: 8; PF: 7; 45 items in total), which were answered on a five-point Likert scale (1 = strongly disagree; 5 = strongly agree). The items were created based on the results of the pilot study. As a first step, they were reviewed by the interview partners of the pilot study to ensure that the wording and the structure of the subscales were in line with their planned practical area of application. The structure was further checked through an expert discussion among educational researchers as part of a research colloquium. Cronbach's alpha for the six dimensions and corresponding sample items are presented in Table 1.
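Table 1 reports Cronbach's alpha per subscale. For a subscale with k items, the statistic is α = k/(k − 1) · (1 − Σ s²_i / s²_total), where s²_i is the variance of item i and s²_total the variance of the summed scale score. The following is a minimal Python sketch of that standard formula, not the authors' analysis code (the study used R); the function name and the toy responses are hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for one subscale.

    responses: one list per respondent, each holding that respondent's k item
    scores (e.g. five-point Likert ratings from 1 to 5).
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha requires at least two items")
    # Sample variance of each item across respondents
    item_vars = [variance([r[i] for r in responses]) for i in range(k)]
    # Sample variance of each respondent's summed subscale score
    total_var = variance([sum(r) for r in responses])
    if total_var == 0:
        raise ValueError("summed scores have zero variance")
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical toy data: three respondents answering a two-item subscale
print(round(cronbach_alpha([[1, 2], [2, 1], [3, 3]]), 4))  # 0.6667
```

Because item variances and the total variance use the same (n − 1) denominator, the choice between sample and population variance cancels out in the ratio.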

Procedure and analysis

A quantitative study using convenience sampling in vocational schools was conducted over two months in 2021 to examine the robustness of the perceived AI competence model. In line with standard research data-protection practice, all data were stored and analyzed anonymously. Data were cleaned and combined for descriptive and inferential statistics using R (https://www.r-project.org). All effects were tested at the .05 significance level.

Confirmatory factor analysis

A Confirmatory Factor Analysis was used to examine the construct validity of the developed questionnaire. Confirmatory Factor Analysis (CFA) and Exploratory Factor Analysis (EFA) are types of structural equation modeling. While EFA is applied to datasets to identify unknown relationships inside the data, CFA is used to confirm theory-based pre-assumptions about the structure of the data and unobservable latent factors measured by observable indicators (Brown & Moore, 2012).

As we developed the original questionnaire based on theoretical and empirical pre-analysis described in the pilot study, we conducted a confirmatory factor analysis to validate our measurement instrument (RQ1). The first CFA analyzed how well the 45 developed items represent our six dimensions of AICO_edu.

In addition, we intended to investigate whether the six dimensions presumed to represent overall AI competence, derived from the in-depth literature review and the qualitative interviews with stakeholders, reflect one unobservable latent factor, AI competence (RQ2). Therefore, we conducted a second CFA, measuring how well the means of the six subscales, used as indicators, represent one factor.
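The aggregation step behind the second CFA can be sketched as follows: each respondent's items are collapsed into one mean per dimension, and those six means then serve as the observed indicators of a single latent factor. This minimal sketch assumes that item IDs start with the two-letter dimension code (as in the IDs TH02 or TH08 mentioned in the Results) and that answers arrive as one dictionary per respondent; the function name, the input format, and the optional exclusion of items are illustrative assumptions. The CFA itself would then be estimated in SEM software.

```python
from statistics import mean

# Dimension codes of the AI competence model
DIMENSIONS = ("TH", "LF", "IP", "AT", "TL", "PF")


def subscale_means(answers, excluded=frozenset()):
    """Collapse one respondent's item answers into one mean per dimension.

    answers:  dict mapping a (hypothetical) item ID such as "TH01" to a 1-5 score
    excluded: item IDs to leave out, e.g. items dropped from the model
    Returns a dict of dimension code -> mean score (None if no items remain).
    """
    scores = {}
    for dim in DIMENSIONS:
        values = [score for item, score in answers.items()
                  if item.startswith(dim) and item not in excluded]
        scores[dim] = mean(values) if values else None
    return scores


# Hypothetical respondent with a handful of answered items
example = {"TH01": 4, "TH02": 2, "TH03": 5, "LF01": 3, "AT01": 5, "AT02": 4}
print(subscale_means(example, excluded={"TH02"}))
```

Keeping the per-dimension means as plain numbers makes it straightforward to stack them into a respondents-by-dimensions table for the single-factor model.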

Several global fit indices were applied: the chi-square statistic, the Root Mean Square Error of Approximation (RMSEA), the Comparative Fit Index (CFI), and the Tucker-Lewis Index (TLI). These indices represent how well the assumed model fits the data.
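The paper does not spell out how these indices are computed. As orientation, the following sketch implements the textbook maximum-likelihood definitions from the model chi-square (χ²_M, df_M) and the chi-square of the baseline independence model (χ²_B, df_B), together with the cut-offs used in the Results (RMSEA ≤ .08; CFI and TLI ≥ .95). It is a sketch under stated assumptions: SEM packages may use N instead of N − 1 in the RMSEA or apply robust corrections, so their output can differ slightly. The chi-square values in the example are invented, not taken from the paper's tables.

```python
import math


def fit_indices(chi2_m, df_m, chi2_b, df_b, n):
    """RMSEA, CFI and TLI from model (m) and baseline (b) chi-square statistics.

    n is the sample size; this sketch uses n - 1 in the RMSEA denominator.
    """
    excess_m = chi2_m - df_m  # misfit of the hypothesized model
    excess_b = chi2_b - df_b  # misfit of the independence (null) model
    rmsea = math.sqrt(max(excess_m, 0) / (df_m * (n - 1)))
    denom = max(excess_m, excess_b, 0)
    cfi = 1.0 if denom == 0 else 1 - max(excess_m, 0) / denom
    tli = (chi2_b / df_b - chi2_m / df_m) / (chi2_b / df_b - 1)
    return {"RMSEA": rmsea, "CFI": cfi, "TLI": tli}


def is_good_fit(idx, rmsea_max=0.08, incremental_min=0.95):
    """Apply the cut-offs reported in the Results section."""
    return (idx["RMSEA"] <= rmsea_max
            and idx["CFI"] >= incremental_min
            and idx["TLI"] >= incremental_min)


# Invented example values for N = 480 respondents
idx = fit_indices(chi2_m=300.0, df_m=100, chi2_b=3000.0, df_b=120, n=480)
print({k: round(v, 3) for k, v in idx.items()}, is_good_fit(idx))
```

With these invented values the RMSEA passes (about .065), while the CFI (about .931) and the TLI (about .917) fall below .95, so the model would not count as a good fit. Note that the TLI, unlike the CFI, is not capped at 1.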

Results

Confirmatory factor analysis (RQ1)

A six-factor model confirmatory factor analysis was conducted to analyze the representation of the six underlying dimensions by the 45 items of the AICO_edu instrument. The results are presented in Table 2.

The six-factor model (M1) does not meet the criteria of a good fit, as the RMSEA is higher than .08 and neither the CFI nor the TLI is higher than the cut-off value of .95 (Hernandez et al., 2019; Savalei, 2012). Due to low covariations between some items within the same dimension, eight items were removed to improve the representation of the underlying latent factors. A further analysis of the item wording showed that some of the removed items had not been phrased explicitly enough to guarantee valid answers: The term “fields of application” in TH02 and TH03 can be interpreted either as fields of application within occupations or as fields of application in general. TH08 should be rephrased as “knowledge about databases” to fit the TH category better. The three items removed from the LF category need to be further contextualized: they need to be more specific about the type of student data, where the data is collected, and how the data is analyzed. The removed items can be found in the instrument in the Appendix, where they are marked with an asterisk. The results are presented in Table 3.
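The paper states only that items were dropped because of low covariation with the other items of their dimension; it does not name a statistic or threshold. One common way to screen for such items, shown here as an illustrative substitute rather than the authors' procedure, is the corrected item-total correlation: each item is correlated with the sum of the remaining items in its subscale, and items below a threshold are flagged for review. The .30 threshold, the item IDs, and the toy data are assumptions.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equally long score lists."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined for a constant variable")
    return sxy / sqrt(sxx * syy)


def corrected_item_total(responses):
    """Correlate each item with the sum of the *other* items in its subscale."""
    k = len(responses[0])
    correlations = []
    for i in range(k):
        item = [r[i] for r in responses]
        rest = [sum(r) - r[i] for r in responses]  # total without item i
        correlations.append(pearson(item, rest))
    return correlations


def flag_weak_items(item_ids, responses, threshold=0.30):
    """Return IDs of items whose corrected item-total correlation is below threshold."""
    r = corrected_item_total(responses)
    return [iid for iid, ri in zip(item_ids, r) if ri < threshold]


# Hypothetical three-item subscale: the third item does not move with the others
data = [[1, 1, 3], [2, 2, 1], [3, 3, 2], [4, 4, 3], [5, 5, 1]]
print(flag_weak_items(["TL01", "TL02", "TL03"], data))  # ['TL03']
```

A flagged item is only a candidate for rewording or removal; as the wording analysis above shows, the final decision also rests on content review and on the resulting CFA fit.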

After the removal of items, the fit indices of the model improved and came closer to the desired cut-off values: the RMSEA moved closer to the cut-off value of .08, and the CFI and TLI moved closer to the cut-off value of .95.

The results of the six-factor confirmatory factor analysis suggest that the items can partially be grouped into the six theoretical dimensions (TH, LF, IP, AT, TL, PF) of perceived AI competence. Some of the results align with previously developed models, such as attitudes in connection with AI technology (Sindermann et al., 2021). The results also show that new items have to be developed, or existing ones rephrased, in cases where removing an item improved the model fit. More suitable items should improve the representation of the underlying factors and thereby the model fit.

Single-factor analysis (RQ2)

A single-factor model confirmatory factor analysis was conducted to analyze the representation of perceived AI competence through the six dimensions of the AICO_edu instrument based on the means of the respective subscales. The results of the CFA are presented in Table 4.

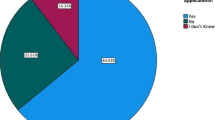

Modification indices hinted toward a conflicting relationship between the PF dimension and the other dimensions of the model (Whittaker, 2012). A detailed explanation can be found in the discussion section of the paper. Removing the PF dimension from the model resulted in the model shown in Table 5 and Fig. 1.

Although the relative fit indices improved, the new model does not meet the criteria of a good fit with respect to the CFI and the TLI. Further analysis of the items regarding their semantic and topical alignment hinted toward possible interrelationships between the PF and the AT dimensions. Removing the AT dimension from the model resulted in the model shown in Table 6.

The final model meets the criteria of a good fit concerning the CFI and the TLI, as well as the RMSEA (see Fig. 2).

The results of the single-factor analysis underline the assumption that perceived AI competency consists of multiple constructs. The results align with previous research on AI competencies (Caena & Redecker, 2019; Huang, 2021; Kim & Kim, 2022; Laupichler et al., 2023; Sanusi et al., 2022). Most importantly, the results suggest that professionalization and attitudes might not be directly connected to perceived AI literacy.

Discussion and conclusion

The theoretical assumptions about teachers' perceived AI competence underline the construct's multi-dimensionality. The evaluation of the instrument emphasized the modularity of perceived AI competence.

Teachers have to be able to implement AI in the classroom and organizational processes (Attwell et al., 2020; Gupta & Bhaskar, 2020). Their pedagogical practice has to be backed up by theoretical knowledge about the functionality of AI, as well as legal requirements and ethical considerations (Massmann & Hofstetter, 2020; Schmid et al., 2021). However, AI theory and tools rarely exist in teacher education and professional development programs (Vazhayil et al., 2019). Improving competencies and knowledge about AI, as well as implementing them into practice, is therefore dependent on teachers’ attitudes towards AI.

The findings of this study uncover specific issues for further examination. The developed instrument (see Appendix) can collect evidence about teachers' perceived AI competence. As described in the results section, some items need to be improved to enable more valid data collection. Furthermore, the two categories Professionalization (PF) and Attitude (AT) do not fit into the model structure in their current form. The PF dimension may be influenced by the lack of training opportunities in the field of AI (Caena & Redecker, 2019; Seufert et al., 2021). Teachers' interest in professional development, which was assessed in the PF dimension, can therefore be interpreted in two ways. On the one hand, teachers might already possess AI competence: they know about the importance of AI, want to educate themselves further, and have a high interest in professional development opportunities. A high score on the PF scale would then reflect an AI-competent teacher.

On the other hand, teachers might not be proficient in dealing with AI in the context of education. These teachers are interested in professional development to close their existing knowledge gaps. A high score on the PF scale would then reflect a teacher with a low perceived AI competence level. Other items in the PF scale are also influenced by this contradiction, such as participation in professional networks.

The same assumptions hold for parts of the AT scale, which led to its removal from the final model. A positive attitude towards AI in education might stem from an uncritical view of the possible risks and opportunities of the underlying technology. While this results in high scores in the AT dimension, it would not be considered high AI competence. Conversely, a more critically informed approach towards AI could lead to lower scores on the AT scale but should be interpreted as higher AI competence. These possible conflicts align with research such as the work of Blömeke et al. (2015) and Shavelson (2013). As both the PF and the AT dimensions are relevant for a holistic model of AI competence (Knoth et al., 2024; Sperling et al., 2024), further research is needed on how these dimensions can be incorporated into a valid scale. Possible solutions might be a highly context-specific scale for ongoing professionalization and a stronger compartmentalization of attitudes. Both approaches conflict with the initial goal of this study to create a manageable scale for assessing AI competence, as specifying the PF and AT scales further would require more items for these two dimensions.

The role of professional development

The follow-up review of the instrument will consider these contradictions and distinguish more clearly between the reasons why teachers choose further training opportunities or are interested in professional networks. The findings from the confirmatory factor analysis support reconsidering the quality of the PF subscale, especially given the improvement of the model fit indices after removing the dimension from the model. Nevertheless, the PF dimension is an important addition to the existing models by Huang (2021), Kim et al. (2021), and Sanusi et al. (2022), which do not yet consider the ongoing professionalization of in-service teachers.

Digital literacy in the field of AI needs to harness networking abilities to provide constant professionalization regarding important topics of the field. The increasing use of AI technology for teaching and learning requires competent teachers who can identify challenges and opportunities for all stakeholders in vocational schools (Pedro et al., 2019). The risk of students cheating (Oravec, 2022), racial or gender bias in algorithms (Baker & Hawn, 2021), and transparency in coding (Bogina et al., 2022) are some examples that make sustainable professionalization of teachers necessary. Including professionalization in the framework thus fulfills an additional demand: AI competence for teachers should not be viewed as a static set of skills but rather as a sustainable development of competences that adapts to ongoing changes in the field of AI.

A reliable model for AI measurement

Furthermore, the confirmatory factor analysis and the modification indices hint toward further possibilities for improving the model and the questionnaire (Whittaker, 2012). The removal of items improved the model fit. In some cases, modification indices suggest moving items to different dimensions. These findings can be traced back to theoretical assumptions and to statements of the experts on which the instrument's construction is based. The experts stated that some items might be allocated to various dimensions due to the multidimensionality of perceived AI competence. Reformulating items and splitting items into multiple items might help to overcome these problems.

Limitations

Various factors limit the presented study. Most importantly, while AI is gaining traction as a research topic in the field of education, the implementation of AI in teaching practices, school development, or teacher training has only just started to gain attention (Attwell et al., 2020). As a result, many of the surveyed teachers might not have been in contact with AI, or at least not frequently. Furthermore, the construction of the questionnaire is based on the limited research findings on teachers' AI competencies. Although the instrument was developed with the help of experts from the field of vocational education as a first explorative step toward examining the AI competence of vocational teachers, the findings of the study point to room for improvement in the construction of the instrument (Shi et al., 2019; Whittaker, 2012). While the results show that the developed dimensions represent a common factor, this factor might not be AI competence. Firstly, linking the data on participants' perceived AI competence to the results of practical tests and experiments would deliver better insights into how the dimensions relate to the construct of AI competence. Secondly, tests of AI knowledge and practical AI usage might close the gap between perceived AI competence and actionable AI competence.

The contrast between perceived competence and other measures of competence is another significant limitation. Analyzing the results of knowledge tests or the practical use of AI technology in teaching and learning processes might yield more accurate, or at least different, results than assessing perceived competence. In addition, the relationship between attitudes, intended use, and actual use could be analyzed (Venkatesh et al., 2003). On the other hand, collecting data with knowledge tests or practical assignments for AI tools is difficult for two reasons: the field of AI is developing fast, and there is no clear regulation for the use of AI in teaching and learning. At the time of data collection, no AI tool had been approved for teaching and learning at German schools, which made a more practical, realistic measurement of AI usage impossible.

Given its Teaching and Learning (TL) dimension, the current model AICO_edu was developed explicitly to assess education-specific AI competencies. However, with modifications, the AICO instrument can also be used in other contexts. For instance, an AICO_man version could include a dimension focusing on management. In this scenario, the Teaching and Learning (TL) scale could be removed from the questionnaire and a Management (MA) scale added. This scale would then consist of items that target the relationship between AI and management ("I can make management decisions based on the results of an AI tool," "I know how AI can be integrated into management processes"). Furthermore, performance tasks could be added to the instrument to increase the validity of the scales and to counteract the problems of self-reported competence data. These performance tasks might include quiz questions or the analysis of sample data.
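The subscale swap described for a hypothetical AICO_man version can be pictured as editing a mapping from subscale codes to item wordings. The Python sketch below assumes such a mapping. It removes the TL subscale and adds an MA subscale containing the two example management items.

The names `AICO_EDU`, `AICO_MAN`, and `adapt_instrument` are hypothetical. For brevity, only two appendix items per subscale are included. Any adapted scale would still need its own validation.

```python
from typing import Dict, List

# Hypothetical representation of the instrument: subscale code -> item wordings.
# Only two items per subscale are shown; the full item list is in the Appendix.
Instrument = Dict[str, List[str]]

AICO_EDU: Instrument = {
    "TH": [
        "I know how AI can be differentiated from traditional software",
        "I have a basic understanding of algorithms",
    ],
    "AT": [
        "I am open to the usage of AI in vocational education and training",
        "I am interested in a critical discussion about AI",
    ],
    "TL": [
        "I know how AI can be used in teaching and learning",
        "I am using AI for teaching and learning",
    ],
}


def adapt_instrument(base: Instrument, remove: str, add_code: str,
                     add_items: List[str]) -> Instrument:
    """Return a copy of `base` with subscale `remove` dropped and `add_code` added."""
    if remove not in base:
        raise KeyError(f"subscale {remove!r} not in instrument")
    if add_code in base:
        raise ValueError(f"subscale {add_code!r} already exists")
    # Copy the item lists so the original instrument is left unchanged.
    adapted = {code: list(items) for code, items in base.items() if code != remove}
    adapted[add_code] = list(add_items)
    return adapted


# Management variant: drop Teaching and Learning (TL), add Management (MA).
AICO_MAN = adapt_instrument(
    AICO_EDU,
    remove="TL",
    add_code="MA",
    add_items=[
        "I can make management decisions based on the results of an AI tool",
        "I know how AI can be integrated into management processes",
    ],
)
print(sorted(AICO_MAN))  # remaining and added subscale codes
```

Copying the item lists keeps the education version intact, so both variants can be administered side by side.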

AI is an emerging field of interest for learning and teaching that needs to be further integrated into theory development (Gibson & Ifenthaler, 2024) and teacher education programs. Currently, AI is seldom part of teacher training programs or continuing professional development for teachers (Roppertz, 2020). The findings of the present study are therefore being used for the instructional design of two training programs. These programs aim to overcome current shortcomings in AI training for in-service and pre-service teachers by combining theoretical knowledge about AI with hands-on practical solutions for vocational school practice. The evaluation of these programs can help to identify further dimensions of AI competence and methods to measure the AI competencies of schoolteachers.

Data availability

The data that support the findings of this study are available on request from the corresponding author. The data are not publicly available due to privacy or ethical restrictions.

References

Akgun, S., & Greenhow, C. (2021). Artificial intelligence in education: Addressing ethical challenges in K-12 settings. AI and Ethics. https://doi.org/10.1007/s43681-021-00096-7

Al-Zyoud, H. M. M. (2020). The role of artificial intelligence in teacher professional development. Universal Journal of Educational Research, 8, 6263–6272.

Androutsopoulou, A., Karacapilidis, N., Loukis, E., & Charalabidis, Y. (2019). Transforming the communication between citizens and government through AI-guided chatbots. Government Information Quarterly, 36(2), 358–367. https://doi.org/10.1016/j.giq.2018.10.001

Arnold, H. J. (1985). Task performance, perceived competence, and attributed causes of performance as determinants of intrinsic motivation. Academy of Management Journal, 28(4), 876–888. https://doi.org/10.2307/256242

Attwell, G., Bekiaridis, G., Deitmer, L., Perini, M., Roppertz, S., & Tutlys, V. (2020). Artificial intelligence in policies, processes and practices of vocational education and training. Universität Bremen.

Baker, R. S., & Hawn, A. (2021). Algorithmic bias in education. International Journal of Artificial Intelligence in Education, 32(4), 1052–1092. https://doi.org/10.1007/s40593-021-00285-9

Blömeke, S., Busse, A., Kaiser, G., König, J., & Suhl, U. (2016). The relation between content-specific and general teacher knowledge and skills. Teaching and Teacher Education, 56, 35–46. https://doi.org/10.1016/j.tate.2016.02.003

Blömeke, S., Gustafsson, J.-E., & Shavelson, R. J. (2015). Beyond dichotomies: Competence viewed as a continuum. Zeitschrift für Psychologie, 223(1), 3–13. https://doi.org/10.1027/2151-2604/a000194

Bogina, V., Hartman, A., Kuflik, T., & Shulner-Tal, A. (2022). Educating software and AI stakeholders about algorithmic fairness, accountability, transparency and ethics. International Journal of Artificial Intelligence in Education, 32(3), 808–833. https://doi.org/10.1007/s40593-021-00248-0

Brown, T. A., & Moore, M. T. (2012). Confirmatory factor analysis. In R. H. Hoyle (Ed.), Handbook of structural equation modeling (pp. 361–379). The Guilford Press.

Butter, M. C., Pérez, L. J., & Quintana, M. G. B. (2014). School networks to promote ICT competences among teachers. Case study in intercultural schools. Computers in Human Behavior, 30, 442–451. https://doi.org/10.1016/j.chb.2013.06.024

Caena, F., & Redecker, C. (2019). Aligning teacher competence frameworks to 21st century challenges: The case for the European digital competence framework for educators (Digcompedu). European Journal of Education, 54(3), 356–369. https://doi.org/10.1111/ejed.12345

Castro-Schez, J. J., Glez-Morcillo, C., Albusac, J., & Vallejo, D. (2021). An intelligent tutoring system for supporting active learning: A case study on predictive parsing learning. Information Sciences, 544, 446–468. https://doi.org/10.1016/j.ins.2020.08.079

Dai, Y., Chai, C.-S., Lin, P.-Y., Jong, M. S.-Y., Guo, Y., & Qin, J. (2020). Promoting students’ well-being by developing their readiness for the artificial intelligence age. Sustainability, 12(16), 6597. https://doi.org/10.3390/su12166597

De Laat, M., Joksimovic, S., & Ifenthaler, D. (2020). Artificial intelligence, real-time feedback and workplace learning analytics to support in situ complex problem-solving: A commentary. International Journal of Information and Learning Technology, 37(5), 267–277. https://doi.org/10.1108/IJILT-03-2020-0026

Delcker, J., Heil, J., Ifenthaler, D., Seufert, S., & Spirgi, L. (2024). First-year students AI-competence as a predictor for intended and de facto use of AI-tools for supporting learning processes in higher education. International Journal of Educational Technology in Higher Education, 21, 18. https://doi.org/10.1186/s41239-024-00452-7

Economou, A. (2023). SELFIEforTEACHERS. Designing and developing a self-reflection tool for teachers’ digital competence. Publications Office of the European Union. https://doi.org/10.2760/561258

Epstein, Z., Levine, S., Rand, D. G., & Rahwan, I. (2020). Who gets credit for AI-generated art? iScience, 23(9), 101515. https://doi.org/10.1016/j.isci.2020.101515

European Commission: Joint Research Centre, Redecker, C., & Punie, Y. (2017). European framework for the digital competence of educators–DigCompEdu. Publications Office. https://doi.org/10.2760/159770

Gibson, D. C., & Ifenthaler, D. (2024). Computational learning theories. Springer. https://doi.org/10.1007/978-3-031-65898-3

Gupta, K. P., & Bhaskar, P. (2020). Inhibiting and motivating factors influencing teachers’ adoption of AI-based teaching and learning solutions: Prioritization using analytic hierarchy process. Journal of Information Technology Education: Research, 19, 693–723. https://doi.org/10.28945/4640

Heil, J., & Ifenthaler, D. (2024). Ethics in AI-based online assessment in higher education. In S. Caballe, J. Casas-Roma, & J. Conesa (Eds.), Ethics in online AI based systems (pp. 55–70). Elsevier. https://doi.org/10.1016/B978-0-443-18851-0.00008-1

Hernandez, L., Balmaceda, N., Hernandez, H., Vargas, C., De La Hoz, E., Orellano, N., Vasquez, E., & Uc-Rios, C. E. (2019). Optimization of a WiFi wireless network that maximizes the level of satisfaction of users and allows the use of new technological trends in higher education institutions. In N. Streitz & S. Konomi (Eds.), Distributed, ambient and pervasive interactions (pp. 144–160). Springer International Publishing.

Huang, X. (2021). Aims for cultivating students’ key competencies based on artificial intelligence education in China. Education and Information Technologies, 26(5), 5127–5147. https://doi.org/10.1007/s10639-021-10530-2

Ifenthaler, D. (2015). Learning analytics. In J. M. Spector (Ed.), The SAGE encyclopedia of educational technology (pp. 448–451). Sage. https://doi.org/10.4135/9781483346397.n187

Ifenthaler, D., Greiff, S., & Gibson, D. C. (2018). Making use of data for assessments: Harnessing analytics and data science. In J. Voogt, G. Knezek, R. Christensen, & K.-W. Lai (Eds.), International handbook of IT in primary and secondary education (2nd ed., pp. 649–663). Springer.

Ifenthaler, D., Majumdar, R., Gorissen, P., Judge, M., Mishra, S., Raffaghelli, J., & Shimada, A. (2024). Artificial intelligence in education: Implications for policymakers, researchers, and practitioners. Technology, Knowledge and Learning. https://doi.org/10.1007/s10758-024-09747-0

Ifenthaler, D., & Schumacher, C. (2023). Reciprocal issues of artificial and human intelligence in education. Journal of Research on Technology in Education, 55(1), 1–6. https://doi.org/10.1080/15391523.2022.2154511

Ifenthaler, D., & Seufert, S. (Eds.). (2022). Artificial intelligence education in the context of work. Springer. https://doi.org/10.1007/978-3-031-14489-9

Kim, N. J., & Kim, M. K. (2022). Teacher’s perceptions of using an artificial intelligence-based educational tool for scientific writing. Frontiers in Education. https://doi.org/10.3389/feduc.2022.755914

Kim, S., Jang, Y., Choi, S., Kim, W., Jung, H., Kim, S., & Kim, H. (2021). Analyzing teacher competency with TPACK for K-12 AI education. KI - Künstliche Intelligenz, 35(2), 139–151. https://doi.org/10.1007/s13218-021-00731-9

Knoth, N., Decker, M., Laupichler, M. C., Pinski, M., Buchholtz, N., Bata, K., & Schultz, B. (2024). Developing a holistic AI literacy assessment matrix–Bridging generic, domain-specific, and ethical competencies. Computers and Education Open, 6, 100177. https://doi.org/10.1016/j.caeo.2024.100177

Kuckartz, U., & Rädiker, S. (2023). Qualitative content analysis: Methods, practice and software (2nd ed.). SAGE Publications Ltd.

Laupichler, M. C., Aster, A., & Raupach, T. (2023). Delphi study for the development and preliminary validation of an item set for the assessment of non-experts’ AI literacy. Computers and Education: Artificial Intelligence, 4, 100126. https://doi.org/10.1016/j.caeai.2023.100126

Long, D., & Magerko, B. (2020). What is AI literacy? Competencies and design considerations. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (pp. 1–16). https://doi.org/10.1145/3313831.3376727

Ludwig, S., Mayer, C., Hansen, C., Eilers, K., & Brandt, S. (2021). Automated essay scoring using transformer models. Psych, 3(4), 897–915. https://doi.org/10.3390/psych3040056

Massmann, C., & Hofstetter, A. (2020). AI-pocalypse now? Herausforderungen Künstlicher Intelligenz für Bildungssystem, Unternehmen und die Workforce der Zukunft. In R. A. Fürst (Ed.), Digitale Bildung und Künstliche Intelligenz in Deutschland (pp. 167–220). Springer Fachmedien Wiesbaden. https://doi.org/10.1007/978-3-658-30525-3_8

Ng, D. T. K., Leung, J. K. L., Chu, S. K. W., & Qiao, M. S. (2021). Conceptualizing AI literacy: An exploratory review. Computers and Education: Artificial Intelligence, 2, 100041. https://doi.org/10.1016/j.caeai.2021.100041

Nielsen, W., Miller, K. A., & Hoban, G. (2015). Science teachers’ response to the digital education revolution. Journal of Science Education and Technology, 24(4), 417–431. https://doi.org/10.1007/s10956-014-9527-3

Papamitsiou, Z., Filippakis, M., Poulou, M., Sampson, D. G., Ifenthaler, D., & Giannakos, M. (2021). Towards an educational data literacy framework: Enhancing the profiles of instructional designers and e-tutors of online and blended courses with new competences. Smart Learning Environments, 8, 18. https://doi.org/10.1186/s40561-021-00163-w

Park, E., Ifenthaler, D., & Clariana, R. (2023). Adaptive or adapted to: Sequence and reflexive thematic analysis to understand learners’ self-regulated learning in an adaptive learning analytics dashboard. British Journal of Educational Technology, 54(1), 98–125. https://doi.org/10.1111/bjet.13287

Pedro, F., Subosa, M., Rivas, A., & Valverde, P. (2019). Artificial intelligence in education: Challenges and opportunities for sustainable development. UNESCO.

Pfost, M., & Artelt, C. (2014). Reading literacy development in secondary school and the effect of differential institutional learning environments (pp. 229–277). University of Bamberg Press.

Richards, D., & Dignum, V. (2019). Supporting and challenging learners through pedagogical agents: Addressing ethical issues through designing for values. British Journal of Educational Technology, 50(6), 2885–2901. https://doi.org/10.1111/bjet.12863

Rietz, C., & Völmicke, E. (2020). Künstliche Intelligenz und das deutsche Schulsystem. In A. T. von Hattburg & M. Schäfer (Eds.), Digitalpakt–was nun? (pp. 89–96). Springer Fachmedien Wiesbaden. https://doi.org/10.1007/978-3-658-25530-5_10

Rohm, T., Carstensen, C. H., Fischer, L., & Gnambs, T. (2021). The achievement gap in reading competence: The effect of measurement non-invariance across school types. Large-Scale Assessments in Education, 9(1), 23. https://doi.org/10.1186/s40536-021-00116-2

Roppertz, S. (2020). Artificial intelligence & vocational education and training–perspective of VET teachers. European Commission.

Sanusi, I. T., Olaleye, S. A., Agbo, F. J., & Chiu, T. K. F. (2022). The role of learners’ competencies in artificial intelligence education. Computers and Education: Artificial Intelligence, 3, 100098. https://doi.org/10.1016/j.caeai.2022.100098

Savalei, V. (2012). The relationship between root mean square error of approximation and model misspecification in confirmatory factor analysis models. Educational and Psychological Measurement, 72(6), 910–932. https://doi.org/10.1177/0013164412452564

Schmid, U., Blanc, B., Toepel, M., Pinkwart, N., & Drachsler, H. (2021). KI@Bildung: Lehren und Lernen in der Schule mit Werkzeugen Künstlicher Intelligenz.

Schoenfeld, A. H. (2010). How we think. Routledge. https://doi.org/10.4324/9780203843000

Seufert, S., Guggemos, J., Ifenthaler, D., Ertl, H., & Seifried, J. (2021). Künstliche Intelligenz in der beruflichen Bildung: Zukunft der Arbeit und Bildung mit intelligenten Maschinen?! Franz Steiner Verlag. https://doi.org/10.25162/9783515130752

Sharpless, N. E., & Kerlavage, A. R. (2021). The potential of AI in cancer care and research. Biochimica et Biophysica Acta (BBA)-Reviews on Cancer, 1876(1), 188573. https://doi.org/10.1016/j.bbcan.2021.188573

Shavelson, R. J. (2013). On an approach to testing and modeling competence. Educational Psychologist, 48(2), 73–86. https://doi.org/10.1080/00461520.2013.779483

Shi, D., Lee, T., & Maydeu-Olivares, A. (2019). Understanding the model size effect on SEM fit indices. Educational and Psychological Measurement, 79(2), 310–334. https://doi.org/10.1177/0013164418783530

Sindermann, C., Sha, P., Zhou, M., Wernicke, J., Schmitt, H. S., Li, M., Sariyska, R., Stavrou, M., Becker, B., & Montag, C. (2021). Assessing the attitude towards artificial intelligence: Introduction of a short measure in German, Chinese, and English language. KI - Künstliche Intelligenz, 35(1), 109–118. https://doi.org/10.1007/s13218-020-00689-0

Sperling, K., Stenberg, C.-J., McGrath, C., Åkerfeldt, A., Heintz, F., & Stenliden, L. (2024). In search of artificial intelligence (AI) literacy in teacher education: A scoping review. Computers and Education Open, 6, 100169. https://doi.org/10.1016/j.caeo.2024.100169

Sumaryanta, Mardapi, D., Sugiman, & Herawan, T. (2018). Assessing teacher competence and its follow-up to support professional development sustainability. Journal of Teacher Education for Sustainability, 20(1), 106–123. https://doi.org/10.2478/jtes-2018-0007

Tuomi, I. (2022). Artificial intelligence, 21st century competences, and socio-emotional learning in education: More than high-risk? European Journal of Education, 57(4), 601–619. https://doi.org/10.1111/ejed.12531

Vazhayil, A., Shetty, R., Bhavani, R. R., & Akshay, N. (2019). Focusing on teacher education to introduce AI in schools: Perspectives and illustrative findings. In 2019 IEEE Tenth International Conference on Technology for Education (T4E). https://doi.org/10.1109/T4E.2019.00021

Venkatesh, V., Morris, M. G., Davis, G. B., & Davis, F. D. (2003). User acceptance of information technology: Toward a unified view. MIS Quarterly, 27(3), 425–478.

Vuorikari, R., Kluzer, S., & Punie, Y. (2022). DigComp 2.2: The digital competence framework for citizens–With new examples of knowledge, skills and attitudes. Publications Office of the European Union. https://doi.org/10.2760/490274

Wang, Y.-Y., & Wang, Y.-S. (2022). Development and validation of an artificial intelligence anxiety scale: An initial application in predicting motivated learning behavior. Interactive Learning Environments, 30(4), 619–634. https://doi.org/10.1080/10494820.2019.1674887

Whittaker, T. A. (2012). Using the modification index and standardized expected parameter change for model modification. The Journal of Experimental Education, 80(1), 26–44. https://doi.org/10.1080/00220973.2010.531299

Zawacki-Richter, O., Marín, V. I., Bond, M., & Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education–where are the educators? International Journal of Educational Technology in Higher Education, 16(1), 39. https://doi.org/10.1186/s41239-019-0171-0

Zhang, K., & Aslan, A. B. (2021). AI technologies for education: Recent research & future directions. Computers and Education: Artificial Intelligence, 2, 100025. https://doi.org/10.1016/j.caeai.2021.100025

Acknowledgements

Open Access funding enabled and organized by Projekt DEAL–bundesweite Lizenzierung von Angeboten großer Wissenschaftsverlage (https://www.projekt-deal.de).

Funding

Open Access funding enabled and organized by Projekt DEAL.


Ethics declarations

Conflict of interest

The authors declare no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper. Jan Delcker declares no conflict of interest. Joana Heil declares no conflict of interest. Dirk Ifenthaler declares no conflict of interest.

Ethical approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards.

Informed consent

Informed consent was obtained by the participating institutions.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix


AICO_edu questionnaire including all subscales

| Code | Subscale or item |
|---|---|
| TH | Theory |
| th_01 | I know how AI can be differentiated from traditional software |
| th_02* | I know about current fields of application of AI |
| th_03* | I know about future fields of application of AI |
| th_04 | I have a basic understanding of algorithms |
| th_05 | I am familiar with the terms "machine learning", "deep learning" and "data mining" |
| th_06 | I know about the functionalities of "machine learning", "deep learning" and "data mining" |
| th_07 | I am familiar with the functionalities of databases |
| th_08* | I work with databases on a regular basis |
| LF | Legal Frameworks and Ethics |
| lf_01 | I know about the most important rules regarding the handling of student data |
| lf_02* | I know how to protect student data |
| lf_03* | I understand the risks which evolve from the usage of AI in teaching and learning |
| lf_04* | I am able to evaluate the results of AI-based systems with respect to their credibility |
| lf_05 | I am familiar with the GDPR (General Data Protection Regulation). HINT: Replace GDPR with the laws that apply to the context |
| lf_06* | I am familiar with the GETA (General Equal Treatment Act). HINT: Replace GETA with the laws that apply to the context |
| IA | Implication for Teaching and Learning |
| ia_01 | I can name the potentials of AI for the workplace |
| ia_02 | I can name the potentials of AI for society |
| ia_03* | I can name the risks of AI for society |
| ia_04 | I can name the risks of AI for the workplace |
| ia_05* | I can name the potentials of AI for education |
| ia_06 | I can name the risks of AI for education |
| ia_07 | I am familiar with the required competencies of teachers concerning AI in education |
| ia_08 | I am familiar with the required competencies of students concerning AI in education |
| AT* | Attitudes |
| at_01 | I am open to the usage of AI in vocational education and training |
| at_02 | I believe I could change my attitude towards AI once I know more about the topic |
| at_03 | I am curious to know more about AI in the context of vocational education and training |
| at_04 | I want to learn more about AI in the context of vocational education and training |
| at_05 | I am interested in testing AI in the context of vocational education and training |
| at_06 | I am interested in a critical discussion about AI |
| at_07 | I believe AI will play an important role in the future |
| at_08 | AI should be an important topic in the future |
| TL | Teaching and Learning |
| tl_01 | I know how AI can be used in teaching and learning |
| tl_02 | I am using AI for teaching and learning |
| tl_03 | I know how the results of an AI application for vocational education and training can be analyzed |
| tl_04 | I know how AI can be integrated into vocational education and training |
| tl_05 | I know how to facilitate the AI competencies of students |
| tl_06 | I believe I am a role model for the acquisition of AI competencies |
| tl_07 | I am equipped with the necessary content knowledge to facilitate AI competencies |
| tl_08 | I am equipped with the necessary pedagogical knowledge to facilitate AI competencies |
| PF* | Professionalization |
| pf_01 | I am interested in further training in the context of AI |
| pf_02 | I know further training possibilities in the context of AI |
| pf_03 | I already went to further training in the context of AI or am planning to do so |
| pf_04 | I know about professional networks in the context of AI |
| pf_05 | I am part of a professional network in the context of AI |
| pf_06 | I know how AI applications can be used for administrative or organizational processes |
| pf_07 | I am using AI applications for administrative or organizational processes |
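A common way to summarize responses to a questionnaire like this is to average the items within each subscale. The Python sketch below groups item IDs by their prefix (th, lf, ia, at, tl, pf) and returns one unweighted mean per subscale. It rests on several assumptions:

- Responses are coded numerically. The response format is not specified in this appendix.
- Missing answers are skipped.
- The asterisks in the table are not interpreted.

The function and constant names are hypothetical, and this is not necessarily how the authors scored the instrument.

```python
from statistics import mean
from typing import Dict, List, Optional

# Item-ID prefixes and subscale names as listed in the appendix table.
SUBSCALES: Dict[str, str] = {
    "th": "Theory",
    "lf": "Legal Frameworks and Ethics",
    "ia": "Implication for Teaching and Learning",
    "at": "Attitudes",
    "tl": "Teaching and Learning",
    "pf": "Professionalization",
}


def subscale_means(responses: Dict[str, Optional[float]]) -> Dict[str, float]:
    """Return the unweighted mean response per subscale, keyed by upper-case code.

    Assumes numerically coded answers; None marks a missing answer and is skipped.
    """
    grouped: Dict[str, List[float]] = {}
    for item_id, value in responses.items():
        if value is None:
            continue  # missing answer: leave it out of the mean
        prefix = item_id.split("_", 1)[0].lower()  # e.g. "th_02*" -> "th"
        if prefix not in SUBSCALES:
            raise ValueError(f"unknown item id: {item_id}")
        grouped.setdefault(prefix, []).append(value)
    return {prefix.upper(): mean(values) for prefix, values in grouped.items()}


# Hypothetical answers from one respondent (values made up for illustration).
example = {"th_01": 4, "th_04": 2, "at_01": 5, "tl_02": None}
print(subscale_means(example))
```

An unweighted mean treats every item as equally informative. If a confirmatory model suggests otherwise, factor scores from that model would be the alternative.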

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Delcker, J., Heil, J. & Ifenthaler, D. Evidence-based development of an instrument for the assessment of teachers’ self-perceptions of their artificial intelligence competence. Education Tech Research Dev (2024). https://doi.org/10.1007/s11423-024-10418-1


DOI: https://doi.org/10.1007/s11423-024-10418-1