Abstract

Computational thinking (CT) skills are critical for the science, technology, engineering, and mathematics (STEM) fields and are therefore drawing increasing attention in STEM education. More curricula and assessments, however, are needed to cultivate and measure CT for different learning goals. Maker activities have the potential to improve student CT, but they still lack validated assessments. We developed a set of activities in which students improve essential CT skills, and have those skills assessed, by creating real-life applications with Arduino, a microcontroller platform often used in maker activities. We examined the psychometric features of rubric-scored CT performance assessments and the effectiveness of the maker activities in improving CT. Two high school physics teachers implemented these Arduino activities and assessments with fifteen high school students over three days in a summer program. The participating students took an internal content-involved test and an external CT test before and after the program, and they took the performance-based CT assessment at the end of the program. The data provide reliability and validity evidence for the Arduino assessment as a tool to measure CT. The pre- and post-test comparison indicates that students significantly improved their scores on the content-involved assessment aligned with the Arduino activities, but not on the content-free CT assessment. These results suggest that Arduino, or similar equipment, can be used to improve students’ CT skills and that the Arduino maker activities can serve as performance assessments of the CT skills students use in engineering tasks.

References

Assaf, D. (2014). Enabling rapid prototyping in K-12 engineering education with BotSpeak, a universal robotics programming language. Paper presented at the 4th international workshop teaching robotics teaching with robotics 5th international conference robotics in education.

Atmatzidou, S., & Demetriadis, S. (2016). Advancing students’ computational thinking skills through educational robotics: A study on age and gender relevant differences. Robotics and Autonomous Systems, 75, 661–670.

Basu, S., Biswas, G., Sengupta, P., Dickes, A., Kinnebrew, J. S., & Clark, D. (2016). Identifying middle school students’ challenges in computational thinking-based science learning. Research and Practice in Technology Enhanced Learning, 11(13), 1–35.

Bers, M. U., Flannery, L., Kazakoff, E. R., & Sullivan, A. (2014). Computational thinking and tinkering: Exploration of an early childhood robotics curriculum. Computers & Education, 72, 145–157.

Blum, J. (2019). Exploring Arduino: Tools and techniques for engineering wizardry. John Wiley & Sons.

Brennan, K., & Resnick, M. (2012). New frameworks for studying and assessing the development of computational thinking. Paper presented at the annual meeting of the American Educational Research Association, Vancouver, BC, Canada.

Buechley, L., Peppler, K., Eisenberg, M., & Kafai, Y. (2013). Textile messages: Dispatches from the world of E-textiles and education. Peter Lang Inc., International Academic Publishers.

Cartelli, A., Dagiene, V., & Futschek, G. (2012). Bebras contest and digital competence assessment: Analysis of frameworks. Current trends and future practices for digital literacy and competence (pp. 35–46). IGI Global.

Chen, G., Shen, J., Barth-Cohen, L., Jiang, S., Huang, X., & Eltoukhy, M. (2017). Assessing elementary students’ computational thinking in everyday reasoning and robotics programming. Computers and Education, 109, 162–175.

Computer Science Teachers Association and the International Society for Technology in Edcuation. (2011). Computational thinking teacher resources. Retrieved June 10, 2018, from http://www.iste.org/docs/ct-documents/ct-teacher-resources_2ed-pdf.pdf?sfvrsn=2

Crouch, C. H., & Mazur, E. (2001). Peer instruction: Ten years of experience and results. American Journal of Physics, 69(9), 970–977.

Cuny, J., Snyder, L., & Wing, J. M. (2010). Demystifying computational thinking for non-computer scientists. Unpublished manuscript. http://www.cs.cmu.edu/~CompThink/resources/TheLinkWing.pdf.

Dagiene, V., & Futschek, G. (2008). Bebras international contest on informatics and computer literacy: Criteria for good tasks. Paper presented at the 3rd international conference on Informatics in Secondary Schools - Evolution and Perspectives: Informatics Education - Supporting Computational Thinking, Torun, Poland.

Dagienė, V., Sentance, S., & Stupurienė, G. (2017). Developing a two-dimensional categorization system for educational tasks in informatics. Informatica, 28(1), 23–44.

Dagiene, V., & Stupurienė, G. (2016). Bebras: A sustainable community building model for the concept based learning of informatics and computational thinking. Informatics in Education, 15(1), 25–44.

Day, C. (2011). Computational Thinking Is Becoming One of the Three Rs. Computing in Science Engineering, 13(1), 88–88.

Denner, J., Werner, L., & Ortiz, E. (2012). Computer games created by middle school girls: Can they be used to measure understanding of computer science concepts? Computers and Education, 58(1), 240–249.

Denning, P. J., & Tedre, M. (2019). Computational thinking. MIT Press.

Duncan, C., & Bell, T. (2015). A pilot computer science and programming course for primary school students. Paper presented at the Workshop in Primary and Secondary Computing Education, London, United Kingdom.

Edwards, C. (2013). Not-so-humble raspberry pi gets big ideas. Engineering & Technology, 8(3), 30–33.

Grover, S., Pea, R., & Cooper, S. (2015). Designing for deeper learning in a blended computer science course for middle school students. Computer Science Education, 25(2), 199–237.

Henderson, P. B., Cortina, T. J., & Wing, J. M. (2007). Computational thinking. ACM SIGCSE Bulletin, 39, 195–196.

Hsu, T. C., Chang, S. C., & Hung, Y. T. (2018). How to learn and how to teach computational thinking: Suggestions based on a review of the literature. Computers & Education, 126, 296–310.

Irgens, G. A., Dabholkar, S., Bain, C., Woods, P., Hall, K., Swanson, H., Horn, M., & Wilensky, U. (2020). Modeling and measuring high school students’ computational thinking practices in science. Journal of Science Education and Technology, 29(1), 137–161.

Jaipal-Jamani, K., & Angeli, C. (2017). Effect of robotics on elementary preservice teachers’ self-efficacy, science learning, and computational thinking. Journal of Science Education and Technology, 26(2), 175–192.

Karaahmetoglu, K. (2019). The effect of project-based Arduino educational robot applications on students’ computational thinking skills and their perception of basic stem skill levels. Participatory Educational Research, 6(2), 1–14.

Koh, K. H., Basawapatna, A., Bennett, V., & Repenning, A. (2010). Towards the automatic recognition of computational thinking for adaptive visual language learning. Paper presented at the IEEE.

Lin, Q., Yin, Y., Tang, X., Hadad, R., & Zhai, X. (2020). Assessing learning in technology-rich maker activities: A systematic review of empirical research. Computers & Education, 157, 103944.

Martin, L. (2015). The promise of the Maker Movement for education. Journal of Pre-College Engineering Education Research, 5(1), 30–39.

Martin, L., & Betser, S. (2020). Learning through making: The development of engineering discourse in an out-of-school maker club. Journal of Engineering Education, 109, 194–212.

NGSS. (2013). Next generation science standards: For states, by states. The National Academies Press.

Papert, S. (1980). Mindstorms: Children, computers, and powerful ideas. Basic Books, Inc.

Papert, S. (1996). The connected family: Bridging the digital generation gap longstreet press.

Peel, A., Sadler, T. D., & Friedrichsen, P. (2019). Learning natural selection through computational thinking: Unplugged design of algorithmic explanations. Journal of Research in Science Teaching, 56(7), 983–1007.

Pellegrino, J. W., DiBello, L. V., & Goldman, S. R. (2016). A framework for conceptualizing and evaluating the validity of instructional relevant assessments. Educational Psychologist, 51, 59–81.

President’s Information Technology Advisory Committee. (2005). Computational Science: Ensuring America’s Competitiveness. Retrieved October 1, 2016, from https://www.nitrd.gov/pitac/reports/20050609_computational/computational.pdf

Quan, G. M., & Gupta, A. (2020). Tensions in the productivity of design task tinkering. Journal of Engineering Education, 109, 88–106.

Rivas, L. (2014). Creating a classroom makerspace. Educational Horizons, 93(1), 25–26.

Selby, C., & Woollard, J. (2013). Computational thinking: The developing definition. Paper presented at the Special Interest Group on Computer Science Education (SIGCSE).

Sherman, M., & Martin, F. (2015). The assessment of mobile computational thinking. Journal of Computing Sciences in Colleges, 30(6), 53–59.

Sobota, J., Balda, P., & Schlegel, M. (2013). PiŜl, R., & Raspberry Pi and Arduino boards in control education. Volumes, 46(17), 7–12.

Sohn, W. (2014). Design and evaluation of computer programming education strategy using Arduino. Advanced Science and Technology Letters, 66(1), 73–77.

Tang, X., Yin, Y., Lin, Q., Hadad, R., & Zhai, X. (2020). Assessing computational thinking: A systematic review of empirical studies. Computers & Education, 148, 103798.

Weintrop, D., Beheshti, E., Horn, M. S., Orton, K., Trouille, L., Jona, K., & Wilensky, U. (2014). Interactive Assessment Tools for Computational Thinking in High School STEM Classrooms. Paper presented at the INTETAIN, Chicago, IL.

Weintrop, D., Beheshti, E., Horn, M., Orton, K., Jona, K., Trouille, L., & Wilensky, U. (2016). Defining computational thinking for mathematics and science classrooms. Journal of Science Education and Technology, 25(1), 127–147.

Werner, L., Denner, J., Campe, S., & Kawamoto, D. C. (2012). The fairy performance assessment: Measuring computational thinking in middle school. Paper presented at the 43rd ACM Technical Symposium on Computer Science Education, New York.

Wing, J. M. (2006). Computational thinking. Communications of the ACM, 49(3), 33–35.

Yin, Y., Hadad, R., Tang, X., & Lin, Q. (2020). Improving and assessing computational thinking in maker activities: The integration with physics and engineering learning. Journal of Science Education and Technology, 29(1), 189–214.

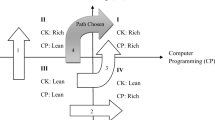

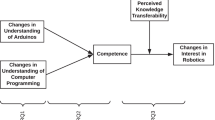

Zha, S., Jin, Y., Moore, P., & Gaston, J. (2020). A cross-institutional investigation of a flipped module on preservice teachers’ interest in teaching computational thinking. Journal of Digital Learning in Teacher Education, 36(1), 32–45.

Zhang, L., & Nouri, J. (2019). A systematic review of learning computational thinking through Scratch in K-9. Computers & Education, 141, 103607.

Zhao, W., & Shute, V. J. (2020). Can playing a video game foster computational thinking skills? Computers & Education, 141, 103633.

Zhong, X., & Liang, Y. (2016). Raspberry Pi: An effective vehicle in teaching the internet of things in computer science and engineering. Electronics, 5(3), 56.

Acknowledgements

This study was funded by the National Science Foundation (NSF) (Award # 1543124). However, any opinions, findings, conclusions, or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the NSF. We also appreciate the support and help of all the research assistants, researchers, teachers, students, and Chicago GEAR UP Alliance staff members who were involved in this study, and of the Chicago Public Library.

Author information

Contributions

Conceptualization: YY, SK, RH, and XZ; Methodology: SK and YY; Formal analysis and investigation: SK and YY; Writing—original draft: SK and YY; Writing—revision: YY, XZ, SK, and RH; Funding acquisition: RH and YY; Resources: RH; Supervision: YY.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Research involving human participants and/or animals

No animals were involved. All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee. The study was approved by the IRB, Office of Protection of Research Subjects, at the University of Illinois at Chicago on Oct 18, 2017, under protocol number 2016-0537.

Appendices

Appendix 1 Examples of the formative assessment practices using Arduino

| CT | Application in Arduino activities | Formative assessment questions |
|---|---|---|
| Problem decomposition | Dividing the project into hardware and software parts and dividing each part into design and implementation; dividing the circuits on the breadboard into individual ones (hardware design and implementation); dividing a system into multiple independent parts to deal with simpler problems (software design); dividing the code into multiple steps to deal with one simple problem at a time (software implementation), like creating three different signals independently for the SOS activity | Can you break down the task into smaller pieces? What are they? To make Arduino work, what components are needed? (software and hardware) To make the hardware work, what components are needed? (power, components, jumper wires, a complete loop) To make the software work, what components are needed? (turn on the light, specify the length of the light, turn off the light) |
| Pattern recognition | Recognizing the patterns of how a system works (software design); e.g., the traffic light at an intersection: holding one set on red and making the other sets rotate from red to yellow, green, and red | How are Arduino circuits different from traditional circuits? (Power? Switch option? Components in the circuits?) How is activity 2 different from 1? How is activity 3 different from 1? How is activity 4 different from the previous ones? (compare the activities in terms of hardware and software) |
| Abstraction | Drawing electronic diagrams (hardware design); interpreting electronic diagrams when assembling the circuit on the breadboard (hardware implementation); reducing a system to its main characteristics at the design stage (software design) | Can you draw an electronic diagram for your design (the circuits you built on a breadboard)? What is the function of the breadboard circuit and sketch code? What do the lines in the Arduino code tell the breadboard? (e.g., control which pin will get the power, how long the switch will be on, how long the switch will be off) |
| Algorithm | Listing the major steps to deal with an Arduino project (steps 1 to 4); listing the steps taken to control the system (software design); writing Arduino programs to control devices (e.g., LEDs) on the breadboard (software implementation) | How can we modify/write a program to control the component on a breadboard? |
| Evaluation | Iterative implementation to check the correctness of software and hardware gradually (software and hardware implementation); double-checking the circuits on the breadboard to make sure that they work and are well arranged (e.g., use fewer jumper wires and a clear arrangement); double-checking the code to ensure that it is efficient | Is your program clear? If not, how would you further improve it? Does your program control the lights as planned? Compare the pattern with Does the circuit work appropriately? Does the sketch program work appropriately? Is this the most efficient way to program it? Is your program reader-friendly? |

Appendix 2 External CT assessment

1. Magda bought ten balloons of three colors with the numbers as shown:

Question:

If Magda was born in the year 1983, can you pick up the balloons in the correct order to show Magda’s year of birth?

A. Yellow, Red, Green, Red

B. Yellow, Green, Green, Green

C. Yellow, Red, Red, Green

D. Yellow, Green, Red, Green

Appendix 3 Internal content assessment

1. What do you know about Arduino? How do they work?

2.

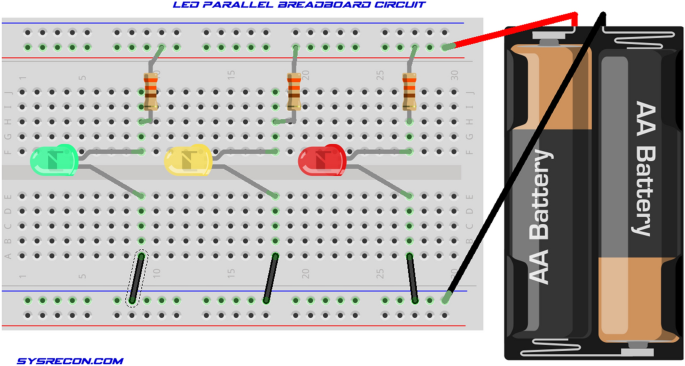

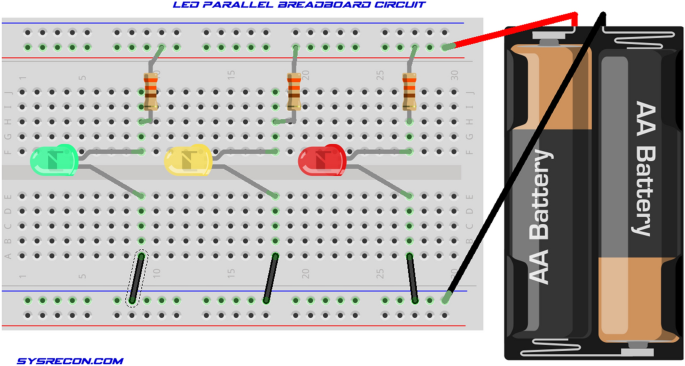

Breadboard: Below is a circuit connected to a breadboard.

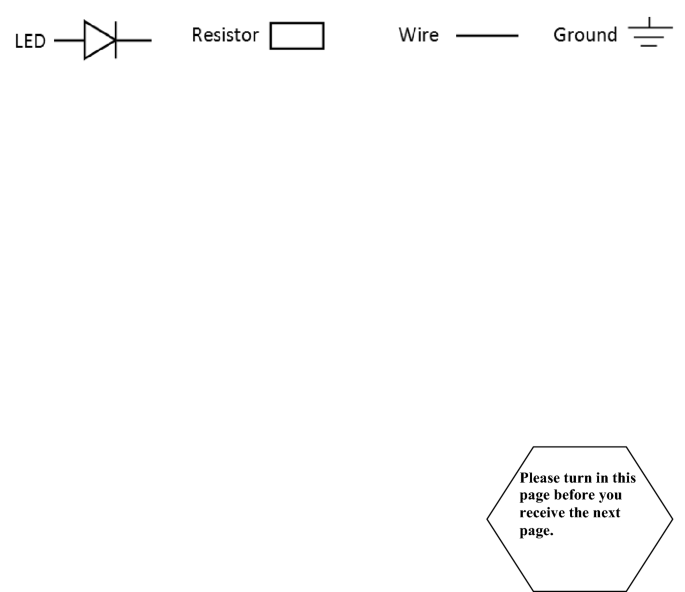

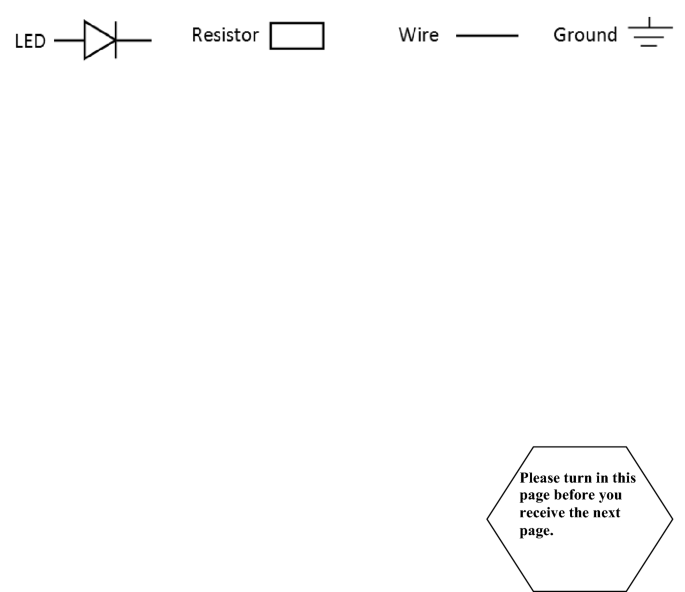

Please draw a circuit diagram for it. Feel free to use the following symbols to make your drawing easier.

3. Arduino/Programming

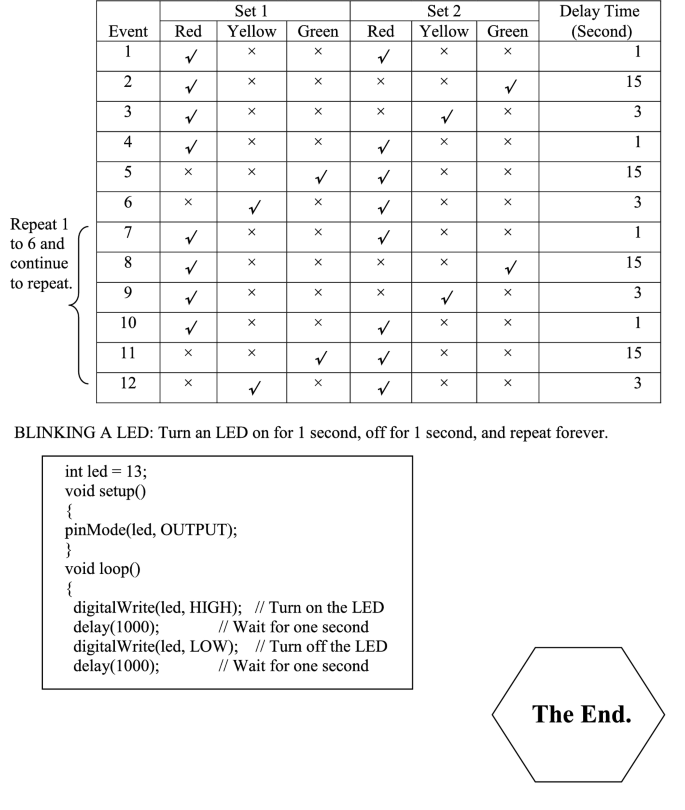

Look at the lines of code from the “Blink” sketch for Arduino.

If we keep the setup on the breadboard and want the LEDs to stay on twice as long as they are off, how can we edit the code? Fill in the blanks below: (Note: There is no one correct answer, be sure that the LED will be on twice as long as it is off!).

Thanks so much for completing this "POST knowledge survey." If you have any comments and suggestions on this knowledge survey, please let us know. Thank you!

Appendix 4 Arduino CT performance assessment

(1) Suppose a traffic light system includes two sets of green, red, and yellow lights at an intersection: one set for north–south bound traffic and the other for east–west bound traffic. You are asked to build a traffic light model using Arduino that simulates the traffic lights.

(1) List the major steps that you are going to take to accomplish this project.

(2)

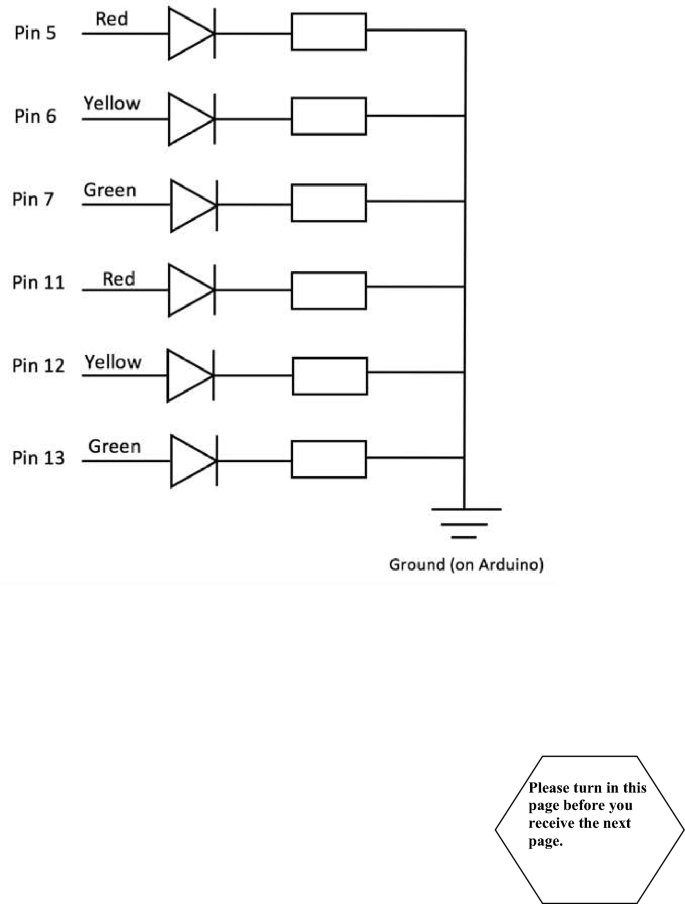

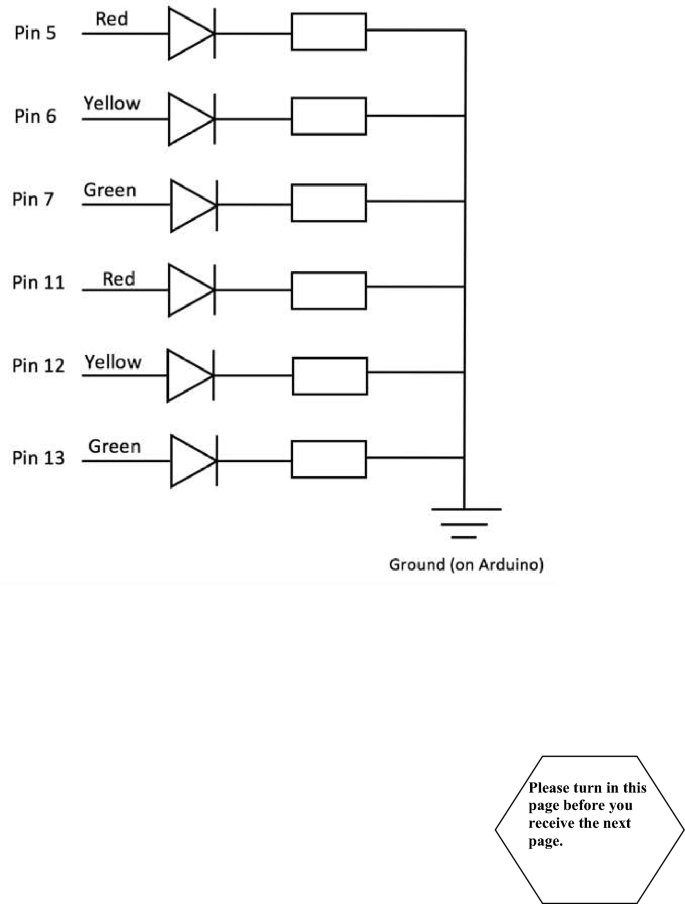

Draw a circuit diagram for the circuit that you plan to build on the breadboard using the following symbols.

(1)

(2) Here is the diagram for the traffic light challenge. Please build the circuits on a breadboard.

(3)

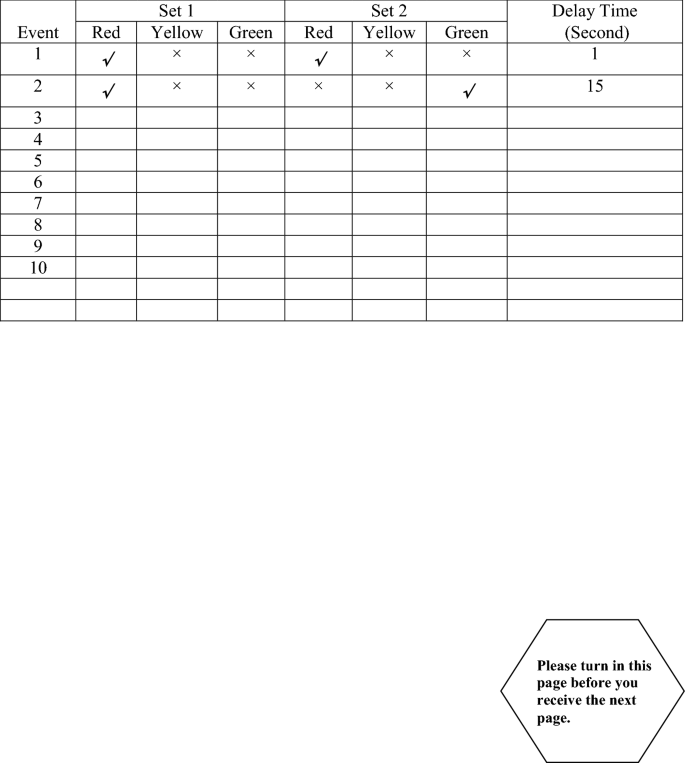

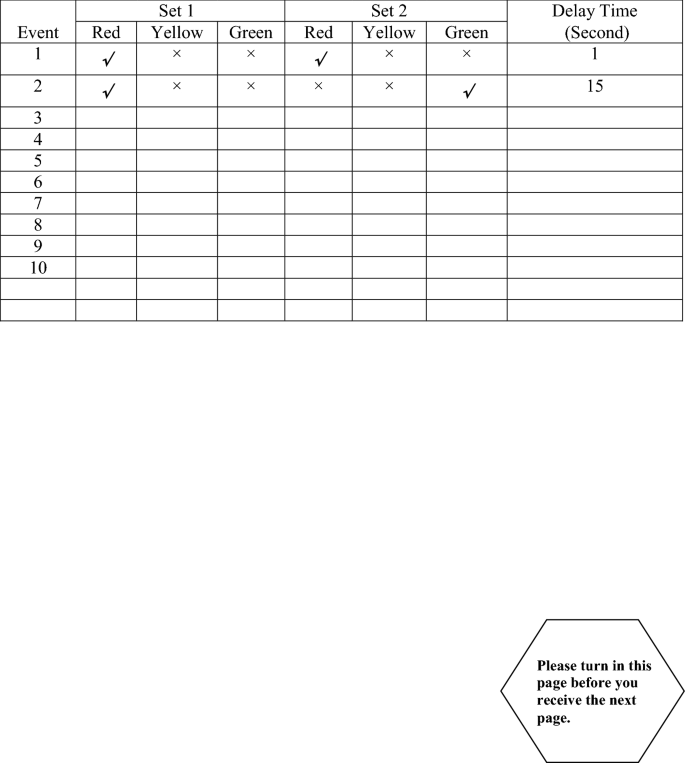

Suppose that your traffic light system will simulate what you observe at a typical intersection. You may use the following table to describe the light pattern for at least the first 10 events (√ represents on, × represents off). Or you can use your way to describe the pattern if you'd like.

(4)

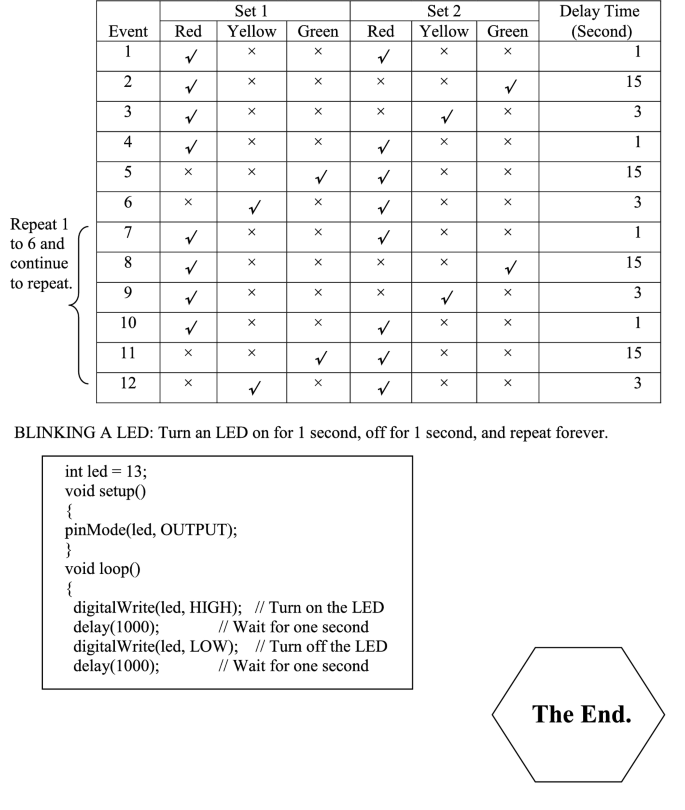

The table below presents a pattern for traffic lights. (√ represents on, × represents off). The following Arduino code is for one blinking LED. You may use this code as an example to write Arduino codes on a computer to make the traffic light system work. Save your codes with your initials and birthday as the file name.
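The on/off pattern that this task asks students to program can be prototyped without hardware before the Arduino sketch is written. The following is a minimal sketch in Python, not the Arduino code used in the study. The six events follow the event list in the Appendix 5 rubric and the relative delays follow its "RG: 15, RY: 3, RR: 1" note; the lamp names, the time unit (assumed here to be seconds), and the helper names `schedule` and `is_safe` are illustrative assumptions.

```python
from itertools import cycle, islice

# Each event is (lamps that are ON, hold time). All other lamps are OFF.
# Suffix 1 and 2 label the two traffic directions (assumed labels).
# Hold times use the rubric's relative values; seconds are an assumption.
EVENTS = [
    ({"red1", "red2"}, 1),      # both directions stopped
    ({"red1", "green2"}, 15),   # direction 2 goes
    ({"red1", "yellow2"}, 3),   # direction 2 prepares to stop
    ({"red1", "red2"}, 1),      # both directions stopped
    ({"green1", "red2"}, 15),   # direction 1 goes
    ({"yellow1", "red2"}, 3),   # direction 1 prepares to stop
]


def schedule(n_events):
    """Return the first n_events (start_time, lamps_on) pairs of the repeating cycle."""
    start = 0
    timeline = []
    for lamps_on, hold in islice(cycle(EVENTS), n_events):
        timeline.append((start, sorted(lamps_on)))
        start += hold
    return timeline


def is_safe(lamps_on):
    """True if at least one direction shows red, so both can never go at once."""
    return "red1" in lamps_on or "red2" in lamps_on


if __name__ == "__main__":
    for start, lamps_on in schedule(7):
        print(f"t={start:>2}: {', '.join(lamps_on)}")
```

Listing the events as data first mirrors the order of the assessment itself: the pattern is described in a table (item 3) before it is translated into code (item 4).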

Appendix 5 Traffic light scoring rubrics

| Component | Description | Points | Student score |
|---|---|---|---|
| 1(1) The major steps that you are going to take to accomplish this project (Decomposition) | | | |
| Task 1 | Set up circuits on breadboard | 1 | |
| Task 2 | Connect circuits with Arduino | 1 | |
| Task 3 | Program Arduino | 1 | |
| 1(2) Draw circuit diagram (Abstraction) | | | |
| LEDs | Six LEDs are used | 3 | |
| | Three to five LEDs are used | 2 | |
| | Fewer than three LEDs are used | 1 | |
| | No LEDs are used | 0 | |
| Resistors | Six resistors are used | 3 | |
| | Three to five resistors are used | 2 | |
| | Fewer than three resistors are used | 1 | |
| | No resistors are used | 0 | |
| Series connections | All LEDs used are connected with resistors in series | 2 | |
| | Not all LEDs are connected with resistors in series | 1 | |
| | No LED is connected with a resistor in series | 0 | |
| Parallel connection | All six LED-resistor pairs are connected in parallel, or all LEDs are connected in parallel | 2 | |
| | Fewer than six LED-resistor pairs are connected in parallel, or some LEDs are connected in parallel | 1 | |
| | No LED-resistor pairs are connected in parallel, or no LEDs are connected in parallel | 0 | |
| Page 2. Breadboard connection: traffic light specific scoring | | | |
| LEDs | Six LEDs are used | 3 | 3 |
| | Three to five LEDs are used | 2 | |
| | Fewer than three LEDs are used | 1 | |
| | No LEDs are used | 0 | |
| Resistors | Six resistors are used | 3 | 1 |
| | Three to five resistors are used | 2 | |
| | Fewer than three resistors are used | 1 | |
| | No resistors are used | 0 | |
| Series connections | All LEDs used are connected with resistors in series | 2 | 0 |
| | Not all LEDs are connected with resistors in series | 1 | |
| | No LED is connected with a resistor in series | 0 | |
| Parallel connection | All six LED-resistor pairs are connected in parallel, or all LEDs are connected in parallel | 2 | 0 |
| | Fewer than six LED-resistor pairs are connected in parallel, or some LEDs are connected in parallel | 1 | |
| | No LED-resistor pairs are connected in parallel, or no LEDs are connected in parallel | 0 | |
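The count-based and connection-based levels in the rubric above can be written as two small lookup functions. This is a minimal sketch in Python for illustration, not the scoring procedure used in the study, which was applied by human raters. The function names `count_points` and `coverage_points` are hypothetical, and treating more than six components like exactly six is an assumption, since the rubric does not cover that case.

```python
def count_points(n_used, n_required=6):
    """Points for the LEDs or Resistors component, based on how many are used."""
    if n_used < 0:
        raise ValueError("component count cannot be negative")
    if n_used == 0:
        return 0            # none used
    if n_used < 3:
        return 1            # fewer than three
    if n_used < n_required:
        return 2            # three to five
    return 3                # six (more than six is scored the same: assumption)


def coverage_points(n_correct, n_total):
    """Points for the series or parallel component: all = 2, some = 1, none = 0."""
    if n_total == 0 or n_correct == 0:
        return 0
    return 2 if n_correct == n_total else 1


# Hypothetical breadboard: six LEDs, two resistors, no correct connections.
score = (count_points(6) + count_points(2)
         + coverage_points(0, 6) + coverage_points(0, 6))
print(score)
```

The hypothetical breadboard in the last lines reproduces the example scores shown in the "Page 2" rows (3, 1, 0, 0).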

| General breadboard and Arduino scoring (Abstraction) | Pattern recognition and abstraction | | |
|---|---|---|---|
| Component | Description | Point | Score of S9 |
| Breadboard-Arduino positive connection | 6 output pins of Arduino are connected to 6 separate holes in Breadboard (not connected to the same bus) | 3 | 2 |
| | 6 output pins of Arduino are connected to the same bus in Breadboard, or 6 output pins of Arduino are connected to 6 separate holes in Breadboard but the pins are not the specified ones | 2 | |
| | Fewer than 6 output pins of Arduino (1 or more) are connected to Breadboard | 1 | |
| | No output pin from Arduino is connected to Breadboard | 0 | |
| Breadboard-Arduino negative connection | All circuits on the breadboard are connected with Arduino ground | 2 | 0 |
| | Only some circuits on the breadboard are connected with Arduino ground | 1 | |
| | No circuits on the breadboard are connected with the Arduino ground | 0 | |
| Short circuit on Breadboard | All LEDs and resistors are connected using unlinked holes (i.e., no short circuits under the LEDs and resistors) | 2 | 0 |
| | Some LEDs or resistors are connected on linked holes (i.e., some LEDs or resistors have short circuits) | 1 | |
| | All LEDs and resistors are connected to linked holes | 0 | |
| Open circuit on Breadboard | No open circuit on the breadboard (i.e., all the unlinked holes are connected properly) | 2 | 2 |
| | 1 type of open circuit on the breadboard (e.g., on the vertical or horizontal pins) | 1 | |
| | More than 1 type of open circuit on Breadboard | 0 | |
| Extra (wrong) connections | There is no extra wire that connects points that should not be connected | 1 | 1 |
| | There are extra wires connecting points that should not be connected | 0 | |

| Page 3. Pattern recognition | | | |
|---|---|---|---|
| Pattern component | Description | Point | Score |
| Count the correct combinations from row 3 to row 7 (ignore rows 8 to 12) | Acceptable combinations: Red-Red, Red-Yellow, Red-Green, Green-Red, Yellow-Red, Red-Red. Note: Green-Green, Yellow-Yellow, and Yellow-Green combinations are wrong; no point should be given. Each acceptable combination is given 1 point (total of 5). Repeated combinations (except for Red-Red, which can happen twice) are not given more than 1 point | 5, 4, 3, 2, 1, or 0 | |
| Sequence as a whole | The sequence is completely correct: (a) holding one set red and making the other set rotate from red, yellow, green, and red; (b) holding the other set red and making the first set rotate from red, yellow, green, and red | 3 | |
| | The sequence is correct only for one group of events: holding one set red and making the other set rotate from red, yellow, green, and red | 2 | |
| | The sequence is correct for one set of lights, e.g., Set 1 or Set 2 has the sequence red, yellow, green, but the other set of lights is either random or in the wrong color, e.g., green or yellow | 1 | |
| | The sequence is partially to completely wrong for both sets | 0 | |
| Time pattern | Appropriate delay length: Green-Red delay > Yellow-Red delay > Red-Red delay | 2 | |
| | Partially appropriate delay length (some students interpreted it as cumulative time; if it made sense, we coded it as partially correct) | 1 | |
| | No difference in delay length or random delay length | 0 | |
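The combination-counting rule in the first row of the table above can be expressed compactly. The following is a minimal sketch in Python under one reading of that rule, stated here as an assumption: each acceptable combination earns at most 1 point, Red-Red may earn up to 2, and the total is capped at 5. The function name `combination_points` and the tuple encoding of a table row (colour of set 1, colour of set 2) are hypothetical.

```python
from collections import Counter

# Acceptable (set 1 colour, set 2 colour) pairs, taken from the rubric.
ACCEPTABLE = {
    ("red", "red"),
    ("red", "yellow"),
    ("red", "green"),
    ("green", "red"),
    ("yellow", "red"),
}


def combination_points(rows, max_points=5):
    """Score the rows a student filled in (rows 3 to 7 of the pattern table)."""
    counts = Counter(row for row in rows if row in ACCEPTABLE)
    points = 0
    for combo, n in counts.items():
        # Red-Red legitimately occurs twice per cycle; other repeats earn nothing.
        limit = 2 if combo == ("red", "red") else 1
        points += min(n, limit)
    return min(points, max_points)


# Hypothetical student answer for rows 3 to 7.
answer = [
    ("red", "red"),
    ("red", "green"),
    ("red", "yellow"),
    ("red", "red"),
    ("green", "red"),
]
print(combination_points(answer))
```

Combinations outside the acceptable set, such as Green-Green, are simply ignored by the counter, which matches the "no point should be given" note.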

| Arduino Programming (Algorithm) | | | |
|---|---|---|---|
| Component | Description | Point | Score of S3 |
| Setup: variable names | 6 pins assigned to 6 variables | 2 | 2 |
| | Fewer than 6 pins assigned to variables | 1 | |
| | Only 1 pin (or 0) is assigned to a variable | 0 | |
| Setup: output assignments | 6 pins assigned as outputs | 2 | 2 |
| | Fewer than 6 pins assigned as outputs | 1 | |
| | Only 1 pin (or 0) is assigned as an output | 0 | |
| The number of events captured (count the number of events correctly captured by the code) | Event 1: Red 1 on, red 2 on, all others off / Otherwise | 1 / 0 | 1 |
| | Event 2: Red 1 on, green 2 on, all others off (red2 needs to be set low explicitly) / Otherwise | 1 / 0 | 0 |
| | Event 3: Red 1 on, yellow 2 on, all others off (green2 needs to be set low explicitly) / Otherwise | 1 / 0 | 0 |
| | Event 4: Red 1 on, red 2 on, all others off (yellow2 needs to be set low explicitly) / Otherwise | 1 / 0 | 0 |
| | Event 5: Green 1 on, red 2 on, all others off (red1 needs to be set low explicitly) / Otherwise | 1 / 0 | 0 |
| | Event 6: Yellow 1 on, red 2 on, all others off (green1 needs to be set low explicitly) / Otherwise | 1 / 0 | 0 |
| | Event 7: Turn off yellow either at the end or at the beginning of the loop / Otherwise | 1 / 0 | 0 |
| Understanding the loop concept | No extra pattern (e.g., code only one round of the pattern) | 2 | 0 |
| | Extra patterns that do not affect functionality (e.g., code two rounds of the pattern) | 1 | |
| | Extra patterns that wrongly affect functionality (e.g., code several extra combinations in addition to one complete round of the pattern), or fewer than 6 patterns, so that not even one round is complete | 0 | |
| Delay | No missing delay / Some delays are missing | 1 / 0 | 1 |
| | No extra delay / Some delays are extra | 1 / 0 | 1 |
| | Delay lengths are all right (RG: 15, RY: 3, RR: 1) / Delay lengths are partially right or none is right | 1 / 0 | 0 |
| The efficiency of the code | Most efficient way: turning on/off only two lights for patterns 2 to 6 | 2 | 0 |
| | Somewhat efficient: turning on/off more than two lights (but not all 6) for all patterns | 1 | |
| | Turning on/off all 6 lights, or turning on/off random lights (e.g., copy/paste of Blink) | 0 | |
| Reader-friendly code | Meaningful comments / No comments or meaningless comments (e.g., simply copied the comments from Blink, or used mismatched comments) | 1 / 0 | 0 |
| | Uses int to name pins with light colors to make the program easier to read (e.g., green1, red1, yellow1, or g1, r1, y1) / Did not use int, or used int but did not name the pins by light color | 1 / 0 | 1 |
| | The whole program has a neat structure, e.g., the same indents for code at the same level and a blank line between separate events / Somewhat organized but not well organized, or organized but too short to be considered well structured / The program looks messy and disorganized | 2 / 1 / 0 | 2 |
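The "most efficient way" criterion above (changing only two lights per pattern) can be checked by comparing each event with the one before it. The following is a minimal sketch in Python rather than Arduino code, so it can run without hardware; the lamp names and the helper name `transitions` are illustrative assumptions, while the event order is taken from the rubric's Event 1 to Event 6.

```python
# Lamps that are ON in each event; every other lamp is OFF.
EVENTS = [
    {"red1", "red2"},
    {"red1", "green2"},
    {"red1", "yellow2"},
    {"red1", "red2"},
    {"green1", "red2"},
    {"yellow1", "red2"},
]


def transitions(events, wrap=True):
    """Return (lamps_to_turn_off, lamps_to_turn_on) for each step between events.

    With wrap=True the step from the last event back to the first is included,
    which corresponds to the rubric's Event 7 (turning yellow off when looping).
    """
    pairs = list(zip(events, events[1:]))
    if wrap:
        pairs.append((events[-1], events[0]))
    return [(sorted(prev - cur), sorted(cur - prev)) for prev, cur in pairs]


for step, (turn_off, turn_on) in enumerate(transitions(EVENTS), start=2):
    print(f"before event {step}: off {turn_off}, on {turn_on}")
```

Every step switches exactly one lamp off and one lamp on, and the lamp switched off in each step is the one the rubric says "needs to be set low explicitly".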

Appendix 6 Students' scores on different computational thinking components

| Student | Decomposition | Abstraction | Pattern recognition | Algorithm design | Total | Percentage (Total/Max; %) |
|---|---|---|---|---|---|---|
| 1 | 1 | 26 | 7 | 7 | 41 | 63 |
| 2 | 3 | 29 | 2 | 0 | 34 | 52 |
| 3 | 2 | 21 | 5 | 11 | 39 | 60 |
| 4 | 2 | 21 | 1 | 3 | 27 | 42 |
| 5 | 3 | 30 | 10 | 20 | 63 | 97 |
| 6 | 2 | 22 | 3 | 0 | 27 | 42 |
| 7 | 3 | 22 | 4 | 0 | 29 | 45 |
| 8 | 0 | 28 | 9 | 19 | 56 | 86 |
| 9 | 0 | 14 | 5 | 1 | 20 | 31 |
| 10 | 3 | 30 | 9 | 16 | 58 | 89 |
| 11 | 1 | 24 | 0 | 0 | 25 | 38 |
| 12 | 3 | 30 | 10 | 20 | 63 | 97 |
| 13 | 3 | 22 | 4 | 14 | 43 | 66 |
| 14 | 2 | 19 | 0 | 10 | 31 | 48 |
| Mean | 2.00 | 24.14 | 4.93 | 8.64 | 39.71 | 61.14 |
| SD | 1.11 | 4.87 | 3.58 | 8.07 | 14.80 | 22.68 |
| Max | 3 | 30 | 10 | 22 | 65 | |
| % (Mean/Max) | 67 | 80 | 49 | 39 | 61 | |
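The summary rows of the table above can be recomputed from the per-student scores. The following is a minimal sketch in Python using only the standard library; the variable names are illustrative, and the use of the sample standard deviation (`statistics.stdev`) is an assumption that happens to reproduce the reported SD of the totals.

```python
from statistics import mean, stdev

# Per-student scores copied from the table (students 1 to 14).
SCORES = {
    "Decomposition":       [1, 3, 2, 2, 3, 2, 3, 0, 0, 3, 1, 3, 3, 2],
    "Abstraction":         [26, 29, 21, 21, 30, 22, 22, 28, 14, 30, 24, 30, 22, 19],
    "Pattern recognition": [7, 2, 5, 1, 10, 3, 4, 9, 5, 9, 0, 10, 4, 0],
    "Algorithm design":    [7, 0, 11, 3, 20, 0, 0, 19, 1, 16, 0, 20, 14, 10],
}
# Maximum possible score per component, from the "Max" row.
MAX_POINTS = {
    "Decomposition": 3,
    "Abstraction": 30,
    "Pattern recognition": 10,
    "Algorithm design": 22,
}

max_total = sum(MAX_POINTS.values())
totals = [sum(student) for student in zip(*SCORES.values())]
percentages = [round(100 * t / max_total) for t in totals]

# Mean score as a percentage of the maximum, per component.
mean_pct = {
    name: round(100 * mean(values) / MAX_POINTS[name])
    for name, values in SCORES.items()
}

print("Totals:", totals)
print("Mean total:", round(mean(totals), 2), "SD:", round(stdev(totals), 2))
print("Mean/Max (%):", mean_pct)
```

Read this way, the "% (Mean/Max)" row shows that the group scored highest on abstraction and lowest on algorithm design relative to the points available.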

About this article

Cite this article

Yin, Y., Khaleghi, S., Hadad, R. et al. Developing effective and accessible activities to improve and assess computational thinking and engineering learning. Education Tech Research Dev 70, 951–988 (2022). https://doi.org/10.1007/s11423-022-10097-w

DOI: https://doi.org/10.1007/s11423-022-10097-w