Abstract

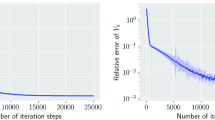

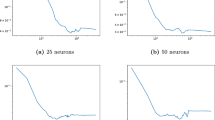

The authors prove the gradient convergence of the deep learning-based numerical method for high-dimensional parabolic partial differential equations and backward stochastic differential equations. The method is based on time discretization of stochastic differential equations (SDEs for short) and the stochastic approximation method for nonconvex stochastic programming problems. In the neural network setting, they take the stochastic gradient descent method, a quadratic loss function, and the sigmoid activation function. Combining classical techniques of randomized stochastic gradients, the Euler scheme for SDEs, and convergence of neural networks, they obtain the \(O(K^{-\frac{1}{4}})\) rate of gradient convergence, with K being the total number of iterative steps.
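
The ingredients named in the abstract (Euler time discretization, a sigmoid network, a quadratic loss, and stochastic gradient descent run for K steps) can be illustrated with a minimal sketch. Everything specific in it is an assumption made for illustration and is not taken from the paper: the toy BSDE \(dY_t = Z_t\,dW_t\), \(Y_T = X_T^2\) driven by a one-dimensional Brownian motion \(X\) started at 0 (so the exact value is \(Y_0 = T\)), the function name `train_deep_bsde_toy`, the single hidden layer shared across time steps, and all hyperparameters. The gradients are derived by hand for this toy case only.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def train_deep_bsde_toy(K=1000, N=20, T=1.0, batch=256, hidden=8, lr=0.02, seed=0):
    """SGD on the quadratic terminal loss for the toy BSDE dY = Z dW, Y_T = X_T**2.

    Hypothetical example: X is a 1-d Brownian motion started at 0, so Y_0 = T.
    """
    rng = np.random.default_rng(seed)
    dt = T / N
    # Trainable parameters: the initial value y0 and a one-hidden-layer
    # sigmoid network z(x) = w2 . sigmoid(w1 * x + b1), shared across time steps.
    y0 = 0.0
    w1 = rng.normal(0.0, 1.0, hidden)
    b1 = np.zeros(hidden)
    w2 = np.zeros(hidden)
    losses = []
    for _ in range(K):  # K = total number of iterative (SGD) steps
        dW = rng.normal(0.0, np.sqrt(dt), (batch, N))
        # Euler scheme for the forward SDE dX = dW on the time grid.
        X = np.concatenate([np.zeros((batch, 1)), np.cumsum(dW, axis=1)], axis=1)
        X_left, X_T = X[:, :-1], X[:, -1]
        S = sigmoid(X_left[:, :, None] * w1 + b1)   # (batch, N, hidden)
        Z = S @ w2                                  # (batch, N)
        # Euler scheme for the backward component: Y_N = y0 + sum_n Z_n dW_n.
        Y_T = y0 + np.sum(Z * dW, axis=1)
        resid = Y_T - X_T**2
        losses.append(np.mean(resid**2))            # quadratic loss on this batch
        # Hand-derived gradients of mean(resid**2) w.r.t. each parameter.
        r = 2.0 * resid[:, None, None] * dW[:, :, None]   # (batch, N, 1)
        g_y0 = 2.0 * np.mean(resid)
        g_w2 = np.mean(np.sum(r * S, axis=1), axis=0)
        dS = r * w2 * S * (1.0 - S)                       # (batch, N, hidden)
        g_b1 = np.mean(np.sum(dS, axis=1), axis=0)
        g_w1 = np.mean(np.sum(dS * X_left[:, :, None], axis=1), axis=0)
        # Plain stochastic gradient descent step with a constant step size.
        y0 -= lr * g_y0
        w1 -= lr * g_w1
        b1 -= lr * g_b1
        w2 -= lr * g_w2
    return y0, np.array(losses)
```

Calling `train_deep_bsde_toy()` returns the learned initial value and the per-step batch losses; in this toy setting the learned `y0` should settle near `T`. The sketch only shows how the pieces fit together and says nothing about the convergence rate proved in the paper.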

Acknowledgement

The authors would like to thank the anonymous reviewers for their careful work and many useful comments.

Additional information

This work was supported by the National Key R&D Program of China (No. 2018YFA0703900) and the National Natural Science Foundation of China (No. 11631004).

Cite this article

Wang, Z., Tang, S. Gradient Convergence of Deep Learning-Based Numerical Methods for BSDEs. Chin. Ann. Math. Ser. B 42, 199–216 (2021). https://doi.org/10.1007/s11401-021-0253-x

DOI: https://doi.org/10.1007/s11401-021-0253-x