Abstract

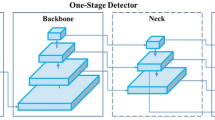

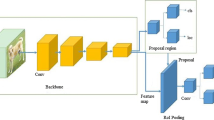

Seasonal flu is a major public health issue worldwide. Although the World Health Organization (WHO) recommends social distancing as one of the most effective ways to curb the spread of flu, maintaining social distance is widely difficult to enforce in practice. Motivated by this concern, this paper develops a fast social distancing monitoring solution called Fast DeepSOCIAL (FDS). FDS combines a lightweight PyTorch-based monocular vision detection model with inverse perspective mapping (IPM), enabling a nursing robot to recover 3D indoor information from a monocular image and measure the distances between pedestrians. It then performs a live, dynamic infection risk assessment by statistically analyzing the distances between people within a scene and ranking public places into different risk levels. First, FDS uses a lightweight PyTorch-based one-stage detector that predicts object class probabilities and locations directly, giving the nursing robot significant real-time performance gains while reducing memory consumption. Second, FDS adopts an improved spatial pyramid pooling strategy that introduces more branches of parallel pooling with different kernel sizes, which helps capture contextual information at multiple scales and thus improves detection accuracy. Finally, the nursing robot uses a gap-seeking strategy based on obstacles-weighted control (GSOWC) to handle hazardous indoor disinfection tasks while quickly avoiding obstacles in unknown, cluttered environments. Extensive evaluations on the nursing robot platform show that FDS outperforms seven state-of-the-art methods and detects social distancing more effectively.
Overall, a nursing robot employing FDS offers an innovative, fast, contactless, and inexpensive way to help cope with seasonal flu outbreaks.
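The abstract combines detector output with IPM to measure inter-person distances and then ranks a scene into risk levels. The following is a minimal pure-Python sketch of that general pipeline under stated assumptions, not the FDS implementation. It assumes a 3×3 image-to-ground homography `H` has already been obtained by calibration (for example from four floor-point correspondences), takes the bottom-centre of each pedestrian bounding box as the floor contact point, and uses a hypothetical 2 m distance threshold and hypothetical risk thresholds of 10% and 30% of people involved in at least one violation. The function names `to_ground`, `foot_point`, `close_pairs`, and `risk_level` are illustrative only.

```python
from itertools import combinations
from math import hypot
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in image pixels


def to_ground(h: Sequence[Sequence[float]], u: float, v: float) -> Tuple[float, float]:
    """Apply a 3x3 image-to-ground homography H to pixel (u, v).

    Assumption: H is already calibrated; the output unit is whatever H was
    calibrated in (metres here).
    """
    x = h[0][0] * u + h[0][1] * v + h[0][2]
    y = h[1][0] * u + h[1][1] * v + h[1][2]
    w = h[2][0] * u + h[2][1] * v + h[2][2]
    return x / w, y / w  # divide by the homogeneous coordinate


def foot_point(box: Box) -> Tuple[float, float]:
    """Bottom-centre of a pedestrian box, assumed to be where the person touches the floor."""
    x1, _, x2, y2 = box
    return (x1 + x2) / 2.0, y2


def close_pairs(boxes: Sequence[Box], h, min_dist: float = 2.0) -> List[Tuple[int, int, float]]:
    """Return (i, j, distance) for every pair of people closer than min_dist on the ground plane.

    min_dist = 2.0 m is a hypothetical threshold, not a value taken from the paper.
    """
    pts = [to_ground(h, *foot_point(b)) for b in boxes]
    pairs = []
    for i, j in combinations(range(len(pts)), 2):
        d = hypot(pts[i][0] - pts[j][0], pts[i][1] - pts[j][1])
        if d < min_dist:
            pairs.append((i, j, d))
    return pairs


def risk_level(num_people: int, pairs, thresholds=(0.1, 0.3)) -> str:
    """Rank a scene by the fraction of people involved in at least one close pair.

    The statistic and the (0.1, 0.3) thresholds are hypothetical choices.
    """
    if num_people == 0:
        return "low"
    involved = {k for i, j, _ in pairs for k in (i, j)}
    ratio = len(involved) / num_people
    if ratio < thresholds[0]:
        return "low"
    if ratio < thresholds[1]:
        return "medium"
    return "high"


if __name__ == "__main__":
    # Toy top-down homography: 1 pixel = 1 cm (0.01 m), no perspective distortion.
    H = ((0.01, 0.0, 0.0), (0.0, 0.01, 0.0), (0.0, 0.0, 1.0))
    boxes = [(100, 0, 140, 400), (200, 0, 240, 400), (600, 0, 640, 400)]
    pairs = close_pairs(boxes, H)
    print(pairs)  # one pair, people 0 and 1, about 1.0 m apart
    print(risk_level(len(boxes), pairs))  # → high
```

Measuring distances on the ground plane rather than in pixels is the reason for using IPM: in the raw image, two people far from the camera appear closer together than two equally spaced people near it, so a fixed pixel threshold would over- or under-report violations depending on depth.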

Data availability

The data presented in this study are available on request from the corresponding author.

Funding

This research is supported by the National Natural Science Foundation of China (62003222), the Chunhui Project of the Ministry of Education (HZKY20220214), and the 111 Project (D23005).

Ethics declarations

Conflict of interest

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Human and animal rights

The study in the paper did not involve humans or animals.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Fu, G., Wang, Y., Yang, J. et al. Deployment of nursing robot for seasonal flu: fast social distancing detection and gap-seeking algorithm based on obstacles-weighted control. Intel Serv Robotics (2024). https://doi.org/10.1007/s11370-024-00519-4

DOI: https://doi.org/10.1007/s11370-024-00519-4