Abstract

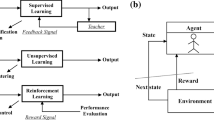

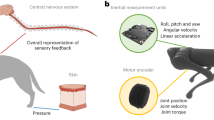

In this paper, a reinforcement learning technique is applied to generate central pattern generator (CPG)-based rhythmic motion control for a robotic salamander moving toward a fixed target. Because the robot's action space is continuous and the environments in which it moves contain various uncertainties, conventional reinforcement learning algorithms are difficult to apply. To overcome this issue, the deep deterministic policy gradient (DDPG) algorithm, one of the deep reinforcement learning algorithms, is adopted. The robotic salamander and its environments are realized in the Gazebo dynamic simulator under the Robot Operating System (ROS). The algorithm is applied in simulation to continuous locomotion across two different environments, moving from firm ground into mud. The simulation results verify that the robotic salamander can move smoothly toward a desired target while adapting to the environmental change from firm ground to mud. The simulations also confirm that the stability of the learning algorithm improves gradually.
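The abstract does not state the oscillator equations, so the following is only a minimal sketch, assuming a simplified chain of coupled phase oscillators in the spirit of the spinal-cord CPG model of Ijspeert et al. (2007). It illustrates why the action space is continuous: the learning agent would choose a frequency and an amplitude from a continuum, and the CPG turns them into a travelling wave of joint set-points. The class `PhaseOscillatorChain`, the helper `action_to_cpg_params`, and every gain and range below are hypothetical choices, not values from the paper.

```python
import math
from typing import List, Sequence, Tuple


class PhaseOscillatorChain:
    """Nearest-neighbour chain of coupled phase oscillators (simplified CPG sketch).

    Each oscillator i has a phase theta_i and an amplitude r_i:
        dtheta_i/dt = 2*pi*f + sum_j w * r_j * sin(theta_j - theta_i - phi_ij)
        dr_i/dt     = a * (R - r_i)
    with phi_ij = +lag for the head-side neighbour and -lag for the tail-side one,
    so the chain locks into a wave travelling from head to tail.
    All gains are illustrative assumptions, not values from the paper.
    """

    def __init__(self, n: int = 4, coupling: float = 4.0,
                 lag: float = 2 * math.pi / 8, amp_gain: float = 10.0) -> None:
        self.n = n                  # number of body joints (assumed)
        self.coupling = coupling    # coupling weight w (assumed)
        self.lag = lag              # desired phase lag between neighbours (assumed)
        self.amp_gain = amp_gain    # amplitude convergence rate a (assumed)
        self.theta: List[float] = [0.0] * n
        self.r: List[float] = [0.0] * n

    def step(self, freq_hz: float, amplitude: float, dt: float) -> List[float]:
        """Advance one forward-Euler step; (freq_hz, amplitude) is the continuous action."""
        dtheta = []
        for i in range(self.n):
            d = 2 * math.pi * freq_hz
            if i > 0:  # head-side neighbour: theta_i should trail it by `lag`
                d += self.coupling * self.r[i - 1] * math.sin(
                    self.theta[i - 1] - self.theta[i] - self.lag)
            if i < self.n - 1:  # tail-side neighbour: it should trail theta_i by `lag`
                d += self.coupling * self.r[i + 1] * math.sin(
                    self.theta[i + 1] - self.theta[i] + self.lag)
            dtheta.append(d)
        for i in range(self.n):
            self.theta[i] += dt * dtheta[i]
            self.r[i] += dt * self.amp_gain * (amplitude - self.r[i])
        # Joint set-points (rad) for a low-level joint controller (assumed interface).
        return [self.r[i] * math.cos(self.theta[i]) for i in range(self.n)]


def action_to_cpg_params(action: Sequence[float],
                         freq_range: Tuple[float, float] = (0.2, 1.5),
                         amp_range: Tuple[float, float] = (0.0, 0.6)) -> Tuple[float, float]:
    """Map a tanh-bounded actor output in [-1, 1]^2 to (frequency, amplitude)."""
    a_f = max(-1.0, min(1.0, action[0]))
    a_r = max(-1.0, min(1.0, action[1]))
    freq = freq_range[0] + 0.5 * (a_f + 1.0) * (freq_range[1] - freq_range[0])
    amp = amp_range[0] + 0.5 * (a_r + 1.0) * (amp_range[1] - amp_range[0])
    return freq, amp


if __name__ == "__main__":
    cpg = PhaseOscillatorChain()
    freq, amp = action_to_cpg_params([0.0, 0.0])  # one hypothetical actor output
    dt = 0.001
    for _ in range(int(20.0 / dt)):
        targets = cpg.step(freq, amp, dt)
    print([round(q, 3) for q in targets])
```

Because frequency and amplitude vary over a continuum, a tabular method such as Q-learning would need a coarse discretization of them; a deterministic actor that outputs the two values directly avoids this, which is the motivation the abstract gives for choosing DDPG.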

Additional information

This work was supported by the Convergence Technology Development Program for Bionic Arm through the National Research Foundation of Korea, funded by the Ministry of Science, ICT & Future Planning (NRF-2015M3C1B2052811), Republic of Korea.

Electronic supplementary material

Supplementary material 1 (mp4 9960 KB)

About this article

Cite this article

Cho, Y., Manzoor, S. & Choi, Y. Adaptation to environmental change using reinforcement learning for robotic salamander. Intel Serv Robotics 12, 209–218 (2019). https://doi.org/10.1007/s11370-019-00279-6

DOI: https://doi.org/10.1007/s11370-019-00279-6