Abstract

Item compromise persists in undermining the integrity of testing, even in secure administrations of computerized adaptive testing (CAT) with sophisticated item exposure controls. In ongoing efforts to tackle this perennial security issue in CAT, a couple of recent studies investigated sequential procedures for detecting compromised items, in which a significant increase in the proportion of correct responses for each item in the pool is monitored in real time using moving averages. In addition to the responses themselves, response times are valuable information with considerable potential to reveal items that may have been leaked. Specifically, examinees who have preknowledge of an item would likely respond to it more quickly than those who do not. Therefore, the current study proposes several augmented methods for the detection of compromised items, all involving simultaneous monitoring of changes in both the proportion correct and the average response time for every item using various moving average strategies. Simulation results with an operational item pool indicate that, compared to the analysis of responses alone, utilizing response times can afford marked improvements in detection power with fewer false positives.
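To make the idea of simultaneous monitoring concrete, the following is a minimal Python sketch of a moving-window check for a single item. It is not the statistic proposed in the study: the class name `ItemMonitor`, the window size, the critical value `z_crit`, the assumption of a known log response time standard deviation, and the rule of flagging only when both signals cross their thresholds are all hypothetical choices made for illustration.

```python
from collections import deque
from math import sqrt


class ItemMonitor:
    """Moving-window monitor for one item (illustrative, not the paper's exact statistic)."""

    def __init__(self, window, log_rt_sd, z_crit=2.33):
        self.window = window          # moving-sample size m (assumed)
        self.log_rt_sd = log_rt_sd    # assumed known SD of log RT for this item
        self.z_crit = z_crit          # assumed one-sided critical value
        self.records = deque(maxlen=window)  # oldest record drops out automatically

    def update(self, correct, p_expected, log_rt, log_rt_expected):
        """Add one administration; return True if the item is flagged."""
        self.records.append((correct, p_expected, log_rt, log_rt_expected))
        if len(self.records) < self.window:
            return False  # wait until the window is full

        # Standardized excess of observed over expected correct responses.
        resp_resid = sum(c - p for c, p, _, _ in self.records)
        resp_var = sum(p * (1.0 - p) for _, p, _, _ in self.records)
        z_resp = resp_resid / sqrt(resp_var)

        # Standardized difference between observed and expected log RT.
        rt_resid = sum(t - e for _, _, t, e in self.records)
        z_rt = rt_resid / (self.log_rt_sd * sqrt(self.window))

        # Flag only when accuracy rises AND response time drops.
        return z_resp > self.z_crit and z_rt < -self.z_crit
```

In this sketch, `p_expected` and `log_rt_expected` stand for model-implied values for each examinee (for example, from an IRT model and a lognormal response time model), and each value of `p_expected` must lie strictly between 0 and 1. Requiring both signals to agree is one simple way to trade a little power for fewer false alarms; other combination rules are equally possible.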

References

Armstrong, R. D., & Shi, M. (2009). A parametric cumulative sum statistic for person fit. Applied Psychological Measurement, 33, 391–410.

Armstrong, R. D., Stoumbos, Z. G., Kung, M. T., & Shi, M. (2007). On the performance of the lz person-fit statistic. Practical Assessment Research and Evaluation, 12(16).

Belov, D. I. (2014). Detecting item preknowledge in computerized adaptive testing using information theory and combinatorial optimization. Journal of Computerized Adaptive Testing, 2, 37–58.

Belov, D. I. (2015). Comparing the performance of eight item preknowledge detection statistics. Applied Psychological Measurement, 40, 83–97.

Belov, D. I., & Armstrong, R. D. (2010). Automatic detection of answer copying via Kullback–Leibler divergence and K-Index. Applied Psychological Measurement, 34, 379–392.

Belov, D. I., Pashley, P. J., Lewis, C., & Armstrong, R. D. (2007). Detecting aberrant responses with Kullback–Leibler distance. In K. Shigemasu, A. Okada, T. Imaizumi, & T. Hoshino (Eds.), New trends in psychometrics (pp. 7–14). Tokyo: Universal Academy Press.

Bock, R. D., & Mislevy, R. J. (1982). Adaptive EAP estimation of ability in a microcomputer environment. Applied Psychological Measurement, 6, 431–444.

Chang, H.-H. (2015). Psychometrics behind computerized adaptive testing. Psychometrika, 80, 1–20.

Chang, H.-H., Qian, J., & Ying, Z. (2001). \(a\)-stratified multistage computerized adaptive testing with \(b\)-blocking. Applied Psychological Measurement, 25, 333–341.

Chang, H.-H., & Stout, W. (1993). The asymptotic posterior normality of the latent trait in an IRT model. Psychometrika, 58, 37–52.

Chang, H.-H., & Ying, Z. (1999). \(a\)-stratified multistage computerized adaptive testing. Applied Psychological Measurement, 23, 211–222.

Chang, H.-H., & Ying, Z. (2008). To weight or not to weight? Balancing influence of initial items in adaptive testing. Psychometrika, 73, 441–450.

Chang, H.-H., & Ying, Z. (2009). Nonlinear sequential designs for logistic item response theory models with applications to computerized adaptive tests. The Annals of Statistics, 37, 1466–1488.

Chang, S. W., Ansley, T. N., & Lin, S. H. (2000). Performance of item exposure control methods in computerized adaptive testing: Further explorations. In Paper presented at the annual meeting of the American Educational Research Association, New Orleans, LA.

Drasgow, F., Levine, M. V., & Williams, E. A. (1985). Appropriateness measurement with polychotomous item response models and standardized indices. British Journal of Mathematical and Statistical Psychology, 38, 67–86.

Egberink, I., Meijer, R. R., Veldkamp, B. P., Schakel, L., & Smid, N. G. (2010). Detection of aberrant item score patterns in computerized adaptive testing: An empirical example using the CUSUM. Personality and Individual Differences, 48, 921–925.

Georgiadou, E., Triantafillou, E., & Economides, A. A. (2007). A review of item exposure control strategies for computerized adaptive testing developed from 1983 to 2005. The Journal of Technology, Learning, and Assessment.

Han, N., & Hambleton, R. (2004). Detecting exposed test items in computer-based testing. In Paper presented at the annual meeting of the National Council on Measurement in Education, San Diego, CA.

Hau, K.-T., & Chang, H.-H. (2001). Item selection in computerized adaptive testing: Should more discriminating items be used first? Journal of Educational Measurement, 38, 249–266.

Hetter, R. D., & Sympson, J. B. (1997). Item exposure control in CAT-ASVAB. In W. Sands, B. Waters, & J. McBride (Eds.), Computerized adaptive testing: From inquiry to operation (pp. 141–144). Washington, DC: American Psychological Association.

Impara, J. C., & Kingsbury, G. (2005). Detecting cheating in computer adaptive tests using data forensics. In Paper presented at the annual meeting of the National Council on Measurement in Education, Montreal, Canada.

Kang, H.-A., & Chang, H.-H. (2016). Online detection of item compromise in CAT using responses and response times. In Paper presented at the annual meeting of the National Council on Measurement in Education, Washington, D.C.

Karabatsos, G. (2003). Comparing the aberrant response detection performance of thirty-six person-fit statistics. Applied Measurement in Education, 16, 277–298.

Kingsbury, G. G., & Zara, A. R. (1989). Procedures for selecting items for computerized adaptive tests. Applied Measurement in Education, 2, 359–375.

Levine, M. V., & Drasgow, F. (1988). Optimal appropriateness measurement. Psychometrika, 53, 161–176.

Lord, F. M. (1980). Applications of item response theory to practical testing problems. Hillsdale, NJ: Erlbaum.

Lord, F. M., & Novick, M. R. (1968). Statistical theories of mental test scores. Reading, MA: Addison-Wesley.

Lu, Y., & Hambleton, R. (2003). Statistics for detecting disclosed items in a CAT environment (Research Report No. 498). Amherst, MA: University of Massachusetts, School of Education, Center for Educational Assessment.

Marianti, S., Fox, J.-P., Marianna, A., Veldkamp, B. P., & Tijmstra, J. (2014). Testing for aberrant behavior in response time modeling. Journal of Educational and Behavioral Statistics, 39, 426–451.

Mavridis, D., & Moustaki, I. (2008). Detecting outliers in factor analysis using the forward search algorithm. Multivariate Behavioral Research, 43, 435–475.

Mavridis, D., & Moustaki, I. (2009). The forward search algorithm for detecting aberrant response patterns in factor analysis for binary data. Journal of Computational and Graphical Statistics, 18, 1016–1034.

McLeod, L. D., & Lewis, C. (1999). Detecting item memorization in the CAT environment. Applied Psychological Measurement, 23, 147–160.

McLeod, L. D., & Schnipke, D. L. (1999). Detecting items that have been memorized in the computerized adaptive testing environment. In Paper presented at the annual meeting of the National Council on Measurement in Education, Montreal, Canada.

Meijer, R. R. (2002). Outlier detection in high-stakes certification testing. Journal of Educational Measurement, 39, 219–233.

Meijer, R. R., & Sotaridona, L. S. (2006). Detection of advance item knowledge using response times in computer adaptive testing. Technical Report 03-03, Law School Admission Council.

Mislevy, R. J., & Chang, H.-H. (2000). Does adaptive testing violate local independence? Psychometrika, 65, 149–156.

Moustaki, I., & Knott, M. (2014). Latent variable models that account for atypical responses. Journal of the Royal Statistical Society, Series C, 63, 343–360.

O’Leary, L. S., & Smith, R. W. (2017). Detecting candidate preknowledge and compromised content using differential person and item functioning. In G. J. Cizek & J. A. Wollack (Eds.), Handbook of quantitative methods for detecting cheating on tests (pp. 151–163). New York, NY: Routledge.

Öztürk, N. K., & Karabatsos, G. (2017). A Bayesian robust IRT outlier-detection model. Applied Psychological Measurement, 41, 195–208.

Risk, N. M. (2015). The impact of item parameter drift in computer adaptive testing (CAT) (Unpublished doctoral dissertation). University of Illinois at Chicago.

Stocking, M. L. (1993). Controlling item exposure rates in a realistic adaptive testing paradigm. ETS Research Report Series (pp. 1–31).

Stocking, M. L., & Lewis, C. (1998). Controlling item exposure conditional on ability in computerized adaptive testing. Journal of Educational and Behavioral Statistics, 23, 57–75.

Sympson, J. B., & Hetter, R. D. (1985). Controlling item-exposure rates in computerized adaptive testing. In Proceedings of the 27th annual meeting of the Military Testing Association, San Diego, CA: Navy Personnel Research and Development Center.

Tatsuoka, K. K. (1984). Caution indices based on item response theory. Psychometrika, 49, 95–110.

Tendeiro, J. N., & Meijer, R. R. (2012). A CUSUM to detect person misfit: A discussion and some alternative for existing procedures. Applied Psychological Measurement, 36, 420–442.

van der Linden, W. J. (2003). Some alternatives to Sympson–Hetter item-exposure control in computerized adaptive testing. Journal of Educational and Behavioral Statistics, 28, 249–265.

van der Linden, W. J. (2006). A lognormal model for response times on test items. Journal of Educational and Behavioral Statistics, 31, 181–204.

van der Linden, W. J. (2007). A hierarchical framework for modeling speed and accuracy on test items. Psychometrika, 72, 287–308.

van der Linden, W. J., & Guo, F. (2008). Bayesian procedures for identifying aberrant response-time patterns in adaptive testing. Psychometrika, 73, 365–384.

van der Linden, W. J., & Lewis, C. (2015). Bayesian checks on cheating on tests. Psychometrika, 80, 689–706.

van der Linden, W. J., & van Krimpen-Stoop, E. (2003). Using response times to detect aberrant responses in computerized adaptive testing. Psychometrika, 68, 251–265.

van Krimpen-Stoop, E., & Meijer, R. R. (2001). CUSUM-based person-fit statistics for adaptive testing. Journal of Educational and Behavioral Statistics, 26, 199–218.

Veerkamp, W. J. J., & Glas, C. A. W. (2000). Detection of known items in adaptive testing with a statistical quality control method. Journal of Educational and Behavioral Statistics, 25, 373–389.

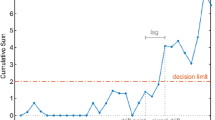

Zhang, J. (2014). A sequential procedure for detecting compromised items in the item pool of a CAT system. Applied Psychological Measurement, 38, 87–104.

Zhang, J., & Li, J. (2016). Monitoring items in real time to enhance CAT security. Journal of Educational Measurement, 53, 131–151.

Zhu, R., Yu, F., & Liu, S. (2002). Statistical indexes for monitoring item behavior under computer adaptive testing environment. In Paper presented at the annual meeting of the American Educational Research Association, New Orleans, LA.

Appendix

1.1 Application of Lyapunov’s Central Limit Theorem

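Assume that log RT is normally distributed as follows: \(\log T_{ij} \sim \mathcal{N}(\mu_{ij},\sigma_j^2)\), where \(\mu_{ij}=\beta_j-\tau_i\) and \(\sigma_j^2=1/\alpha_j^2\). The mean log RT of the moving sample of size \(m\) for item \(j\) is then given as \(\hat{\mu}_j^{(m)}=\dfrac{1}{m}\sum_{i=n-m+1}^n \log T_{ij}\). Also, define the following: \(s_m^2=\sum_{i=n-m+1}^n \sigma_j^2=m\sigma_j^2\). In this context, Lyapunov’s CLT states that

\[\frac{1}{s_m}\sum_{i=n-m+1}^{n}\left(\log T_{ij}-\mu_{ij}\right)\xrightarrow{d}\mathcal{N}(0,1)\]

if, for some \(\delta >0\), the following condition is met:

\[\lim_{m\rightarrow\infty}\frac{1}{s_m^{2+\delta}}\sum_{i=n-m+1}^{n}E\left[\left|\log T_{ij}-\mu_{ij}\right|^{2+\delta}\right]=0.\]

Recognizing that the expectation term is a central absolute moment of \(\log T_{ij}\), which for a normal random variable has the closed form

\[E\left[\left|\log T_{ij}-\mu_{ij}\right|^{2+\delta}\right]=\sigma_j^{2+\delta}\,\frac{2^{(2+\delta)/2}\,\Gamma\!\left(\frac{3+\delta}{2}\right)}{\sqrt{\pi}}.\]

Therefore, using \(\delta =2\) for simplicity,

\[\frac{1}{s_m^{4}}\sum_{i=n-m+1}^{n}E\left[\left(\log T_{ij}-\mu_{ij}\right)^{4}\right]=\frac{3m\sigma_j^{4}}{m^{2}\sigma_j^{4}}=\frac{3}{m}\rightarrow 0 \quad \text{as } m\rightarrow\infty,\]

thereby meeting Lyapunov’s condition for the asymptotic normality of the test statistic.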
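As a numerical sanity check on this argument, the short Python sketch below evaluates the Lyapunov ratio for normally distributed log RTs with a common variance \(\sigma_j^2\), using the standard closed form for the absolute central moments of a normal variable, \(E|X-\mu|^p=\sigma^p\,2^{p/2}\,\Gamma\!\left(\frac{p+1}{2}\right)/\sqrt{\pi}\). The function names and the value of \(\sigma_j\) are hypothetical and chosen only for illustration.

```python
from math import gamma, pi, sqrt


def abs_central_moment_normal(sigma, p):
    """E|X - mu|^p for X ~ N(mu, sigma^2), standard closed form."""
    return sigma ** p * 2 ** (p / 2) * gamma((p + 1) / 2) / sqrt(pi)


def lyapunov_ratio(m, sigma, delta=2.0):
    """Sum of (2 + delta)-th absolute central moments over s_m^(2 + delta).

    Assumes m independent log RTs sharing the same item-level SD sigma,
    so that s_m^2 = m * sigma^2.
    """
    s_m = sqrt(m) * sigma
    return m * abs_central_moment_normal(sigma, 2 + delta) / s_m ** (2 + delta)


if __name__ == "__main__":
    # The ratio should shrink toward zero as the moving-sample size grows.
    for m in (10, 100, 1000):
        print(m, lyapunov_ratio(m, sigma=0.4))
```

With \(\delta=2\) the ratio reduces to \(3/m\) regardless of \(\sigma_j\), so the printed values decrease in proportion to \(1/m\); other positive values of `delta` can be passed to confirm that the ratio still vanishes as \(m\) grows.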

Cite this article

Choe, E.M., Zhang, J. & Chang, HH. Sequential Detection of Compromised Items Using Response Times in Computerized Adaptive Testing. Psychometrika 83, 650–673 (2018). https://doi.org/10.1007/s11336-017-9596-3

DOI: https://doi.org/10.1007/s11336-017-9596-3