Abstract

This paper addresses methodological issues that concern the scaling model used in the international comparison of student attainment in the Programme for International Student Assessment (PISA), specifically whether PISA’s ranking of countries is confounded by model misfit and differential item functioning (DIF). To determine this, we reanalyzed the publicly accessible data on reading skills from the 2006 PISA survey. We also examined whether the ranking of countries is robust to the errors of the scaling model. This was done by studying invariance across subscales, and by comparing ranks based on the scaling model with ranks based on models that take some of the flaws of PISA’s scaling model into account. Our analyses provide strong evidence of misfit of the PISA scaling model and very strong evidence of DIF. These findings do not support the claims that the country rankings reported by PISA are robust.

References

Adams, R.J. (2003). Response to ‘Cautions on OECD’s recent educational survey (PISA)’. Oxford Review of Education, 29, 379–389. Note: Publications from PISA can be found at http://www.oecd.org/pisa/pisaproducts/.

Adams, R., Berezner, A., & Jakubowski, M. (2010). Analysis of PISA 2006 preferred items ranking using the percent-correct method. Paris: OECD. http://www.oecd.org/pisa/pisaproducts/pisa2006/44919855.pdf.

Adams, R.J., Wilson, M., & Wang, W. (1997). The multidimensional random coefficients multinomial logit model. Applied Psychological Measurement, 21, 1–23.

Adams, R.J., Wu, M.L., & Carstensen, C.H. (2007). Application of multivariate Rasch models in international large-scale educational assessments. In M. Von Davier & C.H. Carstensen (Eds.), Multivariate and mixture distribution Rasch models (pp. 271–280). New York: Springer.

Andersen, E.B. (1973). A goodness of fit test for the Rasch model. Psychometrika, 38, 123–140.

Brown, G., Micklewright, J., Schnepf, S.V., & Waldmann, R. (2007). International surveys of educational achievement: how robust are the findings? Journal of the Royal Statistical Society. Series A. General, 170, 623–646.

Dorans, N.J., & Holland, P.W. (1993). DIF detection and description: Mantel-Haenszel and standardization. In P.W. Holland & H. Wainer (Eds.), Differential item functioning (pp. 35–66). Hillsdale: Lawrence Erlbaum Associates.

Fischer, G.H. & Molenaar, I.W. (Eds.) (1995). Rasch models—foundations, recent developments, and applications. Berlin: Springer.

Glass, G.V., & Hopkins, K.D. (1995). Statistical methods in education and psychology. Boston: Allyn & Bacon.

Goldstein, H. (2004). International comparisons of student attainment: some issues arising from the PISA study. Assessment in Education, 11, 319–330.

Goodman, L.A., & Kruskal, W.H. (1954). Measures of association for cross classifications. Journal of the American Statistical Association, 49, 732–764.

Hopmann, S.T., Brinek, G., & Retzl, M. (Eds.) (2007). PISA zufolge PISA. PISA according to PISA. Wien: Lit Verlag. http://www.univie.ac.at/pisaaccordingtopisa/pisazufolgepisa.pdf.

Kelderman, H. (1984). Loglinear Rasch model tests. Psychometrika, 49, 223–245.

Kelderman, H. (1989). Item bias detection using loglinear IRT. Psychometrika, 54, 681–697.

Kirsch, I., de Jong, J., Lafontaine, D., McQueen, J., Mendelovits, J., & Monseur, C. (2002). Reading for change: performance and engagement across countries. Results from PISA 2000. Paris: OECD.

Kreiner, S. (1987). Analysis of multidimensional contingency tables by exact conditional tests: techniques and strategies. Scandinavian Journal of Theoretical Statistics, 14, 97–112.

Kreiner, S. (2011a). A note on item-restscore association in Rasch models. Applied Psychological Measurement, 35, 557–561.

Kreiner, S. (2011b). Is the foundation under PISA solid? A critical look at the scaling model underlying international comparisons of student attainment. Research report 11/1, Dept. of Biostatistics, University of Copenhagen. https://ifsv.sund.ku.dk/biostat/biostat_annualreport/images/c/ca/ResearchReport-2011-1.pdf.

Kreiner, S., & Christensen, K.B. (2007). Validity and objectivity in health-related scales: analysis by graphical loglinear Rasch models. In M. Von Davier & C.H. Carstensen (Eds.), Multivariate and mixture distribution Rasch models (pp. 271–280). New York: Springer.

Kreiner, S., & Christensen, K.B. (2011). Exact evaluation of bias in Rasch model residuals. Advances in Mathematics Research, 12, 19–40.

Molenaar, I.W. (1983). Some improved diagnostics for failure of the Rasch model. Psychometrika, 48, 49–72.

OECD (2000). Measuring student knowledge and skills: the PISA 2000 assessment of reading, mathematical and scientific literacy. Paris: OECD. http://www.oecd.org/dataoecd/44/63/33692793.pdf.

OECD (2006). PISA 2006. Technical report. Paris: OECD. http://www.oecd.org/dataoecd/0/47/42025182.pdf.

OECD (2007). PISA 2006. Volume 2: data. Paris: OECD.

OECD (2009). PISA data analysis manual: SPSS (2nd ed.). Paris: OECD. http://www.oecd-ilibrary.org/education/pisa-data-analysis-manual-spss-second-edition_9789264056275-en.

Prais, S.J. (2003). Cautions on OECD’s recent educational survey (PISA). Oxford Review of Education, 29, 139–163.

Rosenbaum, P. (1989). Criterion-related construct validity. Psychometrika, 54, 625–633.

Smith, R.M. (2004). Fit analysis in latent trait measurement models. In E.V. Smith & R.M. Smith (Eds.), Introduction to Rasch measurement (pp. 73–92). Maple Grove: JAM Press.

Schmitt, A.P., & Dorans, N.J. (1987). Differential item functioning on the scholastic aptitude test. Research memorandum No. 87-1. Princeton NJ: Educational Testing Service.

Appendices

Appendix A. Information on Countries

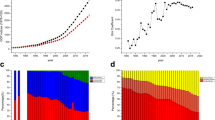

Table A.1 provides information on (a) average scores and the number of students with complete responses to 20 items, (b) DIF equated scores with Azerbaijan as reference country (Kreiner and Christensen 2007), and (c) overall tests of fit of the Rasch model in 56 countries.

Appendix B. Analyses of Data from All Booklets with Reading Items

In addition to Booklet 6 with 28 reading items, reading items can also be found in Booklets 2, 7, 9, 11, 12, and 13. Each of these booklets contained 14 items. Booklets 9, 11, and 13 had items from reading units R055, R104, and R111. We refer to these booklets, together with Booklet 6, which also had these reading units, as Booklet set 1. Booklet set 2 consists of Booklets 2, 6, 7, and 12 with reading units R067, R102, R219, and R220.

Table B.1 shows the overall CLR tests of the Rasch model for the two booklet sets as a whole and for the individual booklets. In addition to the CLR tests of no DIF relative to country, CLR tests also provided evidence of DIF relative to booklets (Booklet set 1: CLR=1669.0, df=42, p<0.00005; Booklet set 2: CLR=3260.3, df=27, p<0.00005).

Additional information on these analyses is available from the authors on request.

Appendix C. Assessment of Ranking Error

Let \(Y_{cvi}\) be the score on item \(i\) by person \(v\) from country \(c\) (\(c = 1,\ldots,C\); \(v = 1,\ldots,N_{c}\); \(i = 1,\ldots,I\)) and let \(A \subset \{1,\ldots,I\}\) be the indices of a subset of items. The total score on all items is \(S_{cv} = \sum_{i = 1}^{I} Y_{cvi}\) and the subscore over items in \(A\) is \(T_{cv} = \sum_{i \in A} Y_{cvi}\). This Appendix is concerned with errors when countries are ranked according to averages \(S_{c} = \frac{1}{N_{c}}\sum_{v} S_{cv}\) and \(T_{c} = \frac{1}{N_{c}}\sum_{v} T_{cv}\).

We assume that item responses fit a Rasch model with a latent variable \(\Theta\). The distribution of \(\Theta\) may be nonparametric or parametric. In the nonparametric case, the population parameters of interest are the score probabilities \(P(S_{cv} = s)\) and \(P(T_{cv} = t)\). The marginal distribution of \(T_{cv}\) is given by \(P(T_{cv} = t) = \sum_{s} P(T_{cv} = t \mid S_{cv} = s)\,P(S_{cv} = s)\). Under the Rasch model, \(P(T_{cv} = t \mid S_{cv} = s)\) depends on item parameters, but not on \(\Theta\). Under such a model, it is consequently easy to calculate estimates of the subscore probabilities \(P(T_{cv} = t)\) in the nonparametric case if consistent estimates of the item parameters and the score probabilities \(P(S_{cv} = s)\) are available.
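The conditional distribution of the subscore given the total score can be sketched in a few lines. The example below is only an illustration and rests on assumptions not stated in the text: items are dichotomous, the Rasch model is parameterized by item difficulties `betas`, and the item values and function names are hypothetical. Under these assumptions the conditional probability is a ratio of elementary symmetric functions of \(\exp(-\beta_{i})\), which involves no person parameter.

```python
import math

def esf(eps):
    """Elementary symmetric functions gamma_0..gamma_n of the values in eps."""
    gamma = [1.0] + [0.0] * len(eps)
    for k, e in enumerate(eps, start=1):
        # Update in reverse order so each item enters a product at most once.
        for r in range(k, 0, -1):
            gamma[r] += e * gamma[r - 1]
    return gamma

def subscore_given_total(betas, subset):
    """Table cond[s][t] = P(T = t | S = s) for dichotomous Rasch items.

    betas  : item difficulties (hypothetical values, not PISA estimates)
    subset : indices of the items in A
    """
    in_a = set(subset)
    eps_a = [math.exp(-b) for i, b in enumerate(betas) if i in in_a]
    eps_rest = [math.exp(-b) for i, b in enumerate(betas) if i not in in_a]
    g_a, g_rest, g_all = esf(eps_a), esf(eps_rest), esf(eps_a + eps_rest)
    table = []
    for s in range(len(betas) + 1):
        row = []
        for t in range(len(eps_a) + 1):
            rest = s - t  # score that must come from items outside A
            feasible = 0 <= rest <= len(eps_rest)
            row.append(g_a[t] * g_rest[rest] / g_all[s] if feasible else 0.0)
        table.append(row)
    return table

def subscore_marginal(cond, score_probs):
    """P(T = t) = sum over s of P(T = t | S = s) * P(S = s)."""
    return [sum(cond[s][t] * score_probs[s] for s in range(len(cond)))
            for t in range(len(cond[0]))]
```

Plugging estimated score probabilities into `subscore_marginal` gives the nonparametric subscore distribution described above; polytomous items would need the corresponding generalization of the symmetric functions.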

In the parametric case, we assume that the latent variables are Gaussian with means \(\xi_{c}\) and standard deviations \(\sigma_{c}\). Given these distributions, Monte Carlo methods provide simple estimates of the distributions of \(S_{cv}\) and \(T_{cv}\) based on estimates of item parameters together with estimates of \(\xi_{c}\) and \(\sigma_{c}\).
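A Monte Carlo estimate of this kind might look like the following sketch. It assumes dichotomous items and a plain Rasch response function; the function name, the item difficulties, and the default sample size are illustrative choices rather than details taken from the analyses.

```python
import math
import random

def simulate_scores(betas, subset, xi, sigma, n_students=10_000, seed=0):
    """Estimate the distributions of S and T for one country by simulation.

    betas     : item difficulties (hypothetical values)
    subset    : indices of the items in A
    xi, sigma : mean and standard deviation of the Gaussian latent variable
    Returns (P(S = s) for s = 0..I, P(T = t) for t = 0..|A|).
    """
    rng = random.Random(seed)
    in_a = set(subset)
    s_counts = [0] * (len(betas) + 1)
    t_counts = [0] * (len(in_a) + 1)
    for _ in range(n_students):
        theta = rng.gauss(xi, sigma)  # one simulated student
        s = t = 0
        for i, b in enumerate(betas):
            p_correct = 1.0 / (1.0 + math.exp(-(theta - b)))
            if rng.random() < p_correct:
                s += 1
                if i in in_a:
                    t += 1
        s_counts[s] += 1
        t_counts[t] += 1
    return ([c / n_students for c in s_counts],
            [c / n_students for c in t_counts])
```

Calling `simulate_scores` once per country, with that country's estimates of \(\xi_{c}\) and \(\sigma_{c}\), yields the score and subscore distributions that the ranking comparison needs.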

The country ranks according to \((S_{1},\ldots,S_{C})\) and \((T_{1},\ldots,T_{C})\) are expected to be similar under the Rasch model, but ranking errors will occur depending on the number of items and on sample sizes in different countries: the smaller the sample size and the smaller the number of items, the larger the ranking error. And, of course, the ranking errors also depend on \(\xi_{c}\) and \(\sigma_{c}\). The results reported in this paper are derived under the parametric model with Monte Carlo estimates of the distributions of \(S_{cv}\) and \(T_{cv}\) based on Monte Carlo samples of 10,000 random students from each country.

To estimate the distribution of the country ranks based on country averages \(S_{c}\) and/or \(T_{c}\), we generated 100,000 random values of \(S_{c}\) and/or \(T_{c}\) and for each set ranked the countries according to these values. Given these estimates, it is easy to find both confidence intervals and probabilities of extreme rankings for the countries.
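One way to sketch this ranking step is shown below. The text does not say how the random country averages were generated, so the example assumes a normal approximation: each average is drawn with the mean of the country's score distribution and standard error \(\mathrm{sd}_{c}/\sqrt{N_{c}}\). Country labels, function names, and the equal-tailed interval are illustrative assumptions.

```python
import random

def mean_and_sd(probs):
    """Mean and standard deviation of a score distribution P(S = s)."""
    mu = sum(s * p for s, p in enumerate(probs))
    var = sum((s - mu) ** 2 * p for s, p in enumerate(probs))
    return mu, var ** 0.5

def rank_distribution(score_probs, sample_sizes, n_rep=100_000, seed=0):
    """Estimate P(rank = r) per country; rank 1 is the highest average.

    score_probs  : dict country -> list of P(S = s)
    sample_sizes : dict country -> N_c
    Assumption: country averages are approximately normal.
    """
    rng = random.Random(seed)
    countries = list(score_probs)
    params = {}
    for c in countries:
        mu, sd = mean_and_sd(score_probs[c])
        params[c] = (mu, sd / sample_sizes[c] ** 0.5)
    counts = {c: [0] * len(countries) for c in countries}
    for _ in range(n_rep):
        draws = {c: rng.gauss(*params[c]) for c in countries}
        ordered = sorted(countries, key=draws.get, reverse=True)
        for position, c in enumerate(ordered):
            counts[c][position] += 1
    return {c: [k / n_rep for k in counts[c]] for c in countries}

def rank_interval(rank_probs, level=0.95):
    """Equal-tailed interval of 1-based ranks from a rank distribution."""
    tail = (1.0 - level) / 2.0
    cum, lower, upper = 0.0, None, None
    for r, p in enumerate(rank_probs, start=1):
        cum += p
        if lower is None and cum > tail:
            lower = r
        if upper is None and cum >= 1.0 - tail:
            upper = r
    return lower, upper
```

The first and last entries of each country's rank distribution give the probabilities of the most extreme rankings, and `rank_interval` turns the same distribution into a confidence interval for the rank.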

Cite this article

Kreiner, S., Christensen, K.B. Analyses of Model Fit and Robustness. A New Look at the PISA Scaling Model Underlying Ranking of Countries According to Reading Literacy. Psychometrika 79, 210–231 (2014). https://doi.org/10.1007/s11336-013-9347-z

DOI: https://doi.org/10.1007/s11336-013-9347-z