Abstract

Objectives

While block randomized designs have become more common in place-based policing studies, there has been relatively little discussion of the assumptions employed and their implications for statistical analysis. Our paper seeks to illustrate these assumptions, and controversy regarding statistical approaches, in the context of one of the first block randomized studies in criminal justice—the Jersey City Drug Market Analysis Project (DMAP).

Methods

Using DMAP data, we show that there are multiple approaches that can be used in analyzing block randomized designs, and that those approaches will yield differing estimates of statistical significance. We estimate outcomes using models both with and without interaction, and using both Type I and Type III sums-of-squares approaches. We also examine the impact of using randomization inference, an approach for estimating p values that is not based on approximations from normal distribution theory, to adjust for possible small N biases in estimating standard errors.

Results

The assumptions used for identifying the analytic approach produce a comparatively wide range of p values for the main DMAP program impacts on hot spots. Nonetheless, the overall conclusions drawn from our re-analysis remain consistent with the original analyses, albeit with more caution. Across the different specifications, results were similar to the original analyses, supporting the identification of diffusion of benefits effects to nearby areas.

Conclusions

The major contribution of our article is to clarify statistical modeling in unbalanced block randomized studies. The introduction of blocking adds complexity to the models that are estimated, and care must be taken when including interaction effects in models, whether they are ANOVA models or regression models. Researchers need to recognize this complexity and provide transparent and alternative estimates of study outcomes.
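To make the sums-of-squares issue named in the abstract concrete, the following is a minimal sketch, not the authors' code, of why the treatment sum of squares in an unbalanced block design depends on whether treatment is entered before or after the blocking factor. The data, the block labels, and the function name `treatment_ss` are all hypothetical. The sketch assumes a binary treatment and a model without interaction, in which case the treatment sum of squares adjusted for block is also what a Type III analysis would report.

```python
from collections import defaultdict


def treatment_ss(y, t, groups):
    """Sum of squares for a 0/1 treatment after removing group means.

    Both y and t are centered within each group, and the treatment
    sum of squares is (sum of cross-products)^2 / (sum of squared t).
    Passing one common group label gives the unadjusted treatment SS.
    """
    by_group = defaultdict(list)
    for i, g in enumerate(groups):
        by_group[g].append(i)
    num = 0.0
    den = 0.0
    for idx in by_group.values():
        t_bar = sum(t[i] for i in idx) / len(idx)
        y_bar = sum(y[i] for i in idx) / len(idx)
        for i in idx:
            num += (t[i] - t_bar) * (y[i] - y_bar)
            den += (t[i] - t_bar) ** 2
    return num ** 2 / den


# Hypothetical unbalanced data: 4 of 6 units treated in the "hi" block,
# 2 of 6 treated in the "lo" block.
block = ["hi"] * 6 + ["lo"] * 6
treat = [1, 1, 1, 1, 0, 0, 1, 1, 0, 0, 0, 0]
outcome = [20, 22, 21, 23, 25, 27, 8, 10, 11, 13, 12, 14]

# Sequential (Type I) SS with treatment entered first: block is ignored.
ss_treatment_first = treatment_ss(outcome, treat, ["all"] * len(outcome))

# Treatment SS adjusted for block: Type I with block entered first,
# and (assumption: no interaction term) also the Type III value.
ss_treatment_after_block = treatment_ss(outcome, treat, block)

print(ss_treatment_first, ss_treatment_after_block)
```

In this invented example the two values differ sharply because the share of treated units differs across blocks. When every block has the same share of treated units, the two calculations coincide, which is why the choice matters mainly for unbalanced designs.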

Notes

In developing a method for defining the boundaries of drug hot spot areas, Weisburd and Green (1995, p. 714) “drew heavily from the perceptions of narcotics detectives in Jersey City about how narcotics sales are organized at the street level. Although several neighborhoods in the city appear to have continuous drug dealing across a large number of streets and intersections, narcotics detectives generally do not view these places as undifferentiated areas of drug activity. For the detectives, a series of blocks, or sometimes even a single block or intersection, may be separated from others on the basis of the type of drug that is sold there.” Although initially they were skeptical about this assumption of specialization at discrete places, they note that “our own analysis of narcotics arrest information generally confirmed the detectives’ conclusion.”

When Weisburd and Green (1995, p. 714) examined the pattern of arrests across the active segments and intersections, they found that very few people arrested more than once for selling narcotics crossed an inactive segment or intersection to sell in an adjacent drug hot spot area (see Weisburd and Green 1994). Indeed, they found that people arrested in two separate areas were most likely to be arrested in different districts of the city.

We used Power and Precision software to estimate statistical power (see http://www.power-analysis.com/index.php).

We term this a nuisance characteristic because from the perspective of a randomized study any causal factor related to the outcome of interest outside of treatment can be seen as a nuisance or noise factor in the models estimated to assess the treatment effect.

Our purpose in this analysis is to show how the p values for the estimates change depending on the assumptions of the analysis that is used. We do not deal here with another issue that could be raised in reanalyzing the overall study results reported by Weisburd and Green. It might be argued that testing this number of outcomes (as well as single measures of violence and property crime) should be assessed with consideration of multiple test bias. If a large number of tests are conducted, then there should be some correction in the error rates of the tests. There is disagreement regarding this issue when a small number of tests are proposed (e.g., see Feise 2002; Rothman 1990). At the time of the original study, there was a convention of reporting a small number of main effects as independent tests, and it was not common for researchers to define “primary” as opposed to “secondary” outcomes. Weisburd and Green (1995), however, did argue that the intervention’s focus on street-level crackdowns would be expected to have a particular impact on disorder offenses.

We note that Weisburd and Green (1995) reported two-tailed significance tests. One of us (Mazerolle, née Green) recalls that the peer reviewers recommended that a two-tailed test be used in the study despite the a priori case for a one-tailed test made in the grant proposal. Because it did not alter the study conclusions, Weisburd and Green made this change. For the reasons noted here, we think that in a reconsideration of the study results, a one-tailed test approach is warranted.

Though we recognize that a number of approaches could be used to try to take into account such spatial contamination today, we decided to follow the original study design in re-estimating these impacts. Our main interest is in illustrating the effects of analytic choices in block randomized experiments using ANOVA, not to fully revisit all analytic decisions made in the original study.

We want to thank Daniel Ortega and colleagues for suggesting this approach, and Donald Green for advice in computing estimates.

It is worth noting that RI can be used to estimate statistical significance of models with interaction. However, for the main effect of treatment, RI is simply computing the mean difference between the treatment and control conditions under a large number of permutations of group assignment and determining the p value based on the percentile for the observed mean difference in that simulated distribution. To test for an interaction effect, you must establish a method of computing the effect size for the interaction, generate a distribution of these effect sizes under permutations of group assignment, and then determine the significance of the observed interaction effect. One method of doing this is to use the F value for the interaction from an ANOVA model as the effect size. However, one could also use the sums-of-squares for the interaction or the absolute, rather than squared, differences between the block level treatment effects relative to the overall treatment effects. Testing interactions or other complex effects is a promising application for RI but in our assessment additional statistical and simulation work is needed to establish the best estimation method for these complex models.
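The main-effect procedure described in this note can be sketched in a few lines. This is an illustration under stated assumptions rather than the analysis used in the paper: the data and the function names (`mean_difference`, `permute_within_blocks`, `ri_p_value`) are hypothetical, treatment labels are reshuffled only within blocks to mimic a block randomized assignment, and the p value reported is two-sided (the share of permuted mean differences at least as large in absolute value as the observed one).

```python
import random
from collections import defaultdict


def mean_difference(y, t):
    """Treated mean minus control mean for a 0/1 assignment vector."""
    treated = [yi for yi, ti in zip(y, t) if ti == 1]
    control = [yi for yi, ti in zip(y, t) if ti == 0]
    return sum(treated) / len(treated) - sum(control) / len(control)


def permute_within_blocks(t, blocks, rng):
    """Shuffle treatment labels separately inside each block."""
    by_block = defaultdict(list)
    for i, b in enumerate(blocks):
        by_block[b].append(i)
    permuted = list(t)
    for idx in by_block.values():
        labels = [t[i] for i in idx]
        rng.shuffle(labels)
        for i, label in zip(idx, labels):
            permuted[i] = label
    return permuted


def ri_p_value(y, t, blocks, n_perm=5000, seed=0):
    """Return the observed mean difference and a two-sided RI p value."""
    rng = random.Random(seed)
    observed = mean_difference(y, t)
    extreme = 0
    for _ in range(n_perm):
        simulated = mean_difference(y, permute_within_blocks(t, blocks, rng))
        # Small tolerance so exact ties are not lost to rounding error.
        if abs(simulated) >= abs(observed) - 1e-12:
            extreme += 1
    return observed, extreme / n_perm


# Hypothetical data: three blocks of four places, two treated per block.
blocks = ["A"] * 4 + ["B"] * 4 + ["C"] * 4
treat = [1, 1, 0, 0] * 3
outcome = [3, 4, 9, 10, 12, 13, 20, 22, 1, 2, 6, 7]

observed_diff, p_value = ri_p_value(outcome, treat, blocks)
print(observed_diff, p_value)
```

A one-tailed version would count only permuted differences at or below the observed value when a reduction is hypothesized. Testing an interaction would require swapping `mean_difference` for an interaction statistic, which, as the note says, is a less settled choice.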

References

Ariel, B., & Farrington, D. P. (2010). Randomized block designs. In A. Piquero & D. Weisburd (Eds) Handbook of quantitative criminology (pp. 437–454). New York: Springer.

Bayley, D. H. (1994). Police for the future (studies in crime and public policy). Oxford: Oxford University Press.

Blattman, C., Green, D., Ortega, D., & Tobón, S. (2017). Pushing crime around the corner? Estimating experimental impacts of large-scale security interventions (no. w23941). National Bureau of Economic Research.

Braga, A. A., & Weisburd, D. (2006). Problem-oriented policing: the disconnect between principles and practice. In D. Weisburd & A. A. Braga (Eds.), Police innovation: Contrasting perspectives (pp. 133–152). Cambridge: Cambridge University Press.

Braga, A. A., Green, L. A., Weisburd, D. L., & Gajewski, F. (1994). Police perceptions of street-level narcotics activity: evaluating drug buys as a research tool. American Journal of Police, 13, 37.

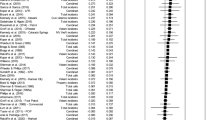

Braga, A., Papachristos, A., & Hureau, D. (2012). Hot spots policing effects on crime. Campbell Systematic Reviews, 8(8), 1–96.

Braga, A. A., Papachristos, A., & Hureau, D. (2014). The effects of hot spots policing on crime: an updated systematic review and meta-analysis. Justice Quarterly, 31(4), 633–663.

Braga, A. A., Papachristos, A., Hureau, D., & Turchan, B. (2017). Hot spots policing and crime control: an updated systematic review and meta-analysis. American Society of Criminology Annual Meetings.

Clarke, R. V., & Weisburd, D. (1994). Diffusion of crime control benefits: observations on the reverse of displacement. Crime Prevention Studies, 2, 165–184.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences 2nd edn. Hillsdale: Lawrence Erlbaum Associates.

Farrington, D. P., & Welsh, B. C. (2005). Randomized experiments in criminology: what have we learned in the last two decades? Journal of Experimental Criminology, 1(1), 9–38.

Feaster, D. J., Mikulich-Gilbertson, S., & Brincks, A. M. (2011). Modeling site effects in the design and analysis of multi-site trials. The American Journal of Drug and Alcohol Abuse, 37(5), 383–391.

Feise, R. J. (2002). Do multiple outcome measures require p-value adjustment? BMC Medical Research Methodology, 2(8).

Fisher, R. A. (1925). Statistical methods for research workers. Edinburgh: Oliver and Boyd.

Fleiss, J. L. (1986). Analysis of data from multiclinic trials. Controlled Clinical Trials, 7(4), 267–275.

Gerber, A. S., & Green, D. P. (2012). Field experiments: design, analysis, and interpretation. New York: WW Norton.

Gill, C. E., & Weisburd, D. (2013). Increasing equivalence in small-sample place-based experiments taking advantage of block randomization methods. Experimental criminology: Prospects for advancing science and public policy, 141–162.

Goldstein, H. (1990). Problem-oriented policing. New York: McGraw-Hill.

Gottfredson, M. R., & Hirschi, T. (1990). A general theory of crime. Stanford: Stanford University Press.

Green, D. P., & Vavreck, L. (2007). Analysis of cluster-randomized experiments: a comparison of alternative estimation approaches. Political Analysis, 16(2), 138–152.

Hector, A., Von Felten, S., & Schmid, B. (2010). Analysis of variance with unbalanced data: an update for ecology & evolution. Journal of Animal Ecology, 79(2), 308–316.

Jaccard, J., & Turrisi, R. (2003). Interaction effects in multiple regression (no. 72). Thousand Oaks: Sage.

Jaccard, J., Turrisi, R., & Wan, C. K. (1990). Implications of behavioral decision theory and social marketing for designing social action programs. In J. Edwards, R. S. Tindel, L. Heath, & E. J. Posavac (Eds.), Social influence processes and prevention (pp. 103–142). Boston: Springer.

Kernan, W. N., Viscoli, C. M., Makuch, R. W., Brass, L. M., & Horwitz, R. I. (1999). Stratified randomization for clinical trials. Journal of Clinical Epidemiology, 52(1), 19–26.

Kirk, R. E. (1982). Experimental design. Hoboken: John Wiley & Sons, Inc.

Langsrud, Ø. (2003). ANOVA for unbalanced data: use type II instead of type III sums of squares. Statistics and Computing, 13(2), 163–167.

Lipsey, M. W. (1990). Design sensitivity: Statistical power for experimental research (Vol. 19). Thousand Oaks: Sage.

Pierce, G., Spaar, S., & Briggs, L. (1988). The character of police work: strategic and tactical implications. Boston: Northeastern University Press.

Repetto, T. A. (1976). Crime prevention and the displacement hypothesis. Crime and Delinquency, 56, 166–178.

Rothman, K. J. (1990). No adjustments are needed for multiple comparisons. Epidemiology, 1(1), 43–46.

Sherman, L. W. (1987). Repeat calls to police in Minneapolis. Crime Control Reports No. 5. Washington, D.C.: Crime Control Institute.

Sherman, L. W., Gartin, P. R., & Buerger, M. E. (1989). Hot spots of predatory crime: routine activities and the criminology of place. Criminology, 27(1), 27–56.

te Grotenhuis, M., Pelzer, B., Eisinga, R., Nieuwenhuis, R., Schmidt-Catran, A., & Konig, R. (2017a). A novel method for modelling interaction between categorical variables. International Journal of Public Health, 62(3), 427–431.

te Grotenhuis, M., Pelzer, B., Eisinga, R., Nieuwenhuis, R., Schmidt-Catran, A., & Konig, R. (2017b). When size matters: advantages of weighted effect coding in observational studies. International Journal of Public Health, 62(1), 163–167.

Venables, W. N. (1998). Exegeses on linear models. In S-Plus User’s Conference, Washington DC.

Warner, B. D., & Pierce, G. L. (1993). Reexamining social disorganization theory using calls to the police as a measure of crime. Criminology, 31(4), 493–517.

Weisburd, D., & Gill, C. (2014). Block randomized trials at places: rethinking the limitations of small N experiments. Journal of Quantitative Criminology, 30, 97–112.

Weisburd, D., & Green, L. (1991). Identifying and controlling drug markets: The drug market analysis program (phase 2). Funded Proposal to the National Institute of Justice.

Weisburd, D., & Green, L. (1994). Defining the drug market: the case of Jersey City's DMAP system. Drugs and crime: evaluating public policy initiatives. Newbury Park: Sage.

Weisburd, D., & Green, L. (1995). Policing drug hot spots: the Jersey City drug market analysis experiment. Justice Quarterly, 12(4), 711–735.

Weisburd, D., & Mazerolle, L. G. (2000). Crime and disorder in drug hot spots: implications for theory and practice in policing. Police Quarterly, 3(3), 331–349.

Weisburd, D., Green, L., & Ross, D. (1994). Crime in street level drug markets: a spatial analysis. Criminology, 27, 49–67.

Weisburd, D., Lum, C., & Yang, S. M. (2003). When can we conclude that treatments or programs ‘Don’t work’? The Annals of the American Academy of Political and Social Science, 587(May), 31–48.

Weisburd, D., Telep, C., Hinkle, J., & Eck, J. (2010). Is problem-oriented policing effective in reducing crime and disorder? Findings from a Campbell systematic review. Criminology & Public Policy, 9(1), 139–172.

Acknowledgements

We would like to thank Anthony Braga, Donald Green, and Alese Wooditch for helpful comments on earlier drafts of this paper, and Tori Goldberg for help in preparing the manuscript.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Weisburd, D., Wilson, D.B. & Mazerolle, L. Analyzing block randomized studies: the example of the Jersey City drug market analysis experiment. J Exp Criminol 16, 265–287 (2020). https://doi.org/10.1007/s11292-018-9349-z

DOI: https://doi.org/10.1007/s11292-018-9349-z