Abstract

Objectives

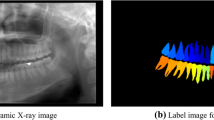

Dental status plays an important role in forensic radiology in the event of large-scale disasters. However, dental information stored in dental clinics is generally neither standardized nor filed electronically. The purpose of this study is to develop a computerized system that detects and classifies teeth in dental panoramic radiographs for automatic, structured filing of dental charts. The system can also serve as a preprocessing step for computerized image analysis of dental diseases.

Methods

One hundred dental panoramic radiographs were used to train and test an object detection network with fourfold cross-validation. A classification network then classified the detected bounding boxes into four tooth types (incisors, canines, premolars, and molars) and three tooth conditions (nonmetal restored, partially restored, and completely restored). Based on the visualization results, multisized image data were fed to the double input layers of a convolutional neural network. The results were evaluated in terms of detection sensitivity, the number of false-positive detections per case, and classification accuracy.
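
The two data-preparation steps described above, splitting the 100 radiographs into four cross-validation folds and cutting multisized inputs from each detected box, can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: folds are formed per radiograph, the second input is assumed to be an enlarged crop around the same tooth (one plausible reading of "multisized image data"), the enlargement factor `context=1.5` is a hypothetical value, and the names `fourfold_splits` and `multisize_crops` are illustrative.

```python
import random
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def fourfold_splits(case_ids: List[str], seed: int = 0) -> List[Tuple[List[str], List[str]]]:
    """Split radiographs into 4 folds; each fold serves once as the test set.

    Splitting is done per radiograph, so teeth from one radiograph never
    appear in both the training set and the test set of the same fold.
    """
    ids = list(case_ids)
    random.Random(seed).shuffle(ids)           # reproducible shuffle
    folds = [ids[i::4] for i in range(4)]      # 4 interleaved, disjoint folds
    splits = []
    for k in range(4):
        test = folds[k]
        train = [c for j, f in enumerate(folds) if j != k for c in f]
        splits.append((train, test))
    return splits


def multisize_crops(box: Box, width: int, height: int, context: float = 1.5) -> Tuple[Box, Box]:
    """Return (tight_crop, context_crop) for one detected tooth.

    ASSUMPTION: the context crop enlarges the detected box about its centre
    by the hypothetical factor `context` and is clipped to the image bounds.
    """
    x0, y0, x1, y1 = box
    cx, cy = (x0 + x1) / 2, (y0 + y1) / 2
    half_w = (x1 - x0) * context / 2
    half_h = (y1 - y0) * context / 2
    big = (
        max(0, round(cx - half_w)),
        max(0, round(cy - half_h)),
        min(width, round(cx + half_w)),
        min(height, round(cy + half_h)),
    )
    return box, big
```

Each crop would then be resized to the input size of its own input layer before being passed to the two-branch network; that step depends on the network and is omitted here.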

Results

The tooth detection sensitivity was 96.4%, with 0.5 false positives per case. The classification accuracies for tooth types and tooth conditions were 93.2% and 98.0%, respectively. The double input layer network increased the classification accuracy for tooth types by 6 percentage points.
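
The reported metrics (detection sensitivity, false positives per case, and classification accuracy) can be computed along the following lines. This is a sketch under assumptions the abstract does not state: a detection counts as a true positive when its intersection over union (IoU) with a not-yet-matched ground-truth tooth is at least 0.5, detections are matched greedily in the order given, and the helper names `iou`, `detection_metrics`, and `accuracy` are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def detection_metrics(cases: Dict[str, Tuple[List[Box], List[Box]]],
                      thr: float = 0.5) -> Tuple[float, float]:
    """Return (sensitivity, false positives per case).

    `cases` maps a case id to (ground-truth boxes, detected boxes).
    ASSUMPTION: each detection is greedily matched to at most one unmatched
    ground-truth tooth with IoU >= thr; unmatched detections are false positives.
    """
    tp = fp = n_gt = 0
    for gts, dets in cases.values():
        n_gt += len(gts)
        matched = set()
        for d in dets:
            best, best_iou = None, thr
            for i, g in enumerate(gts):
                if i in matched:
                    continue
                v = iou(d, g)
                if v >= best_iou:
                    best, best_iou = i, v
            if best is None:
                fp += 1
            else:
                matched.add(best)
                tp += 1
    sensitivity = tp / n_gt if n_gt else 0.0
    fp_per_case = fp / len(cases) if cases else 0.0
    return sensitivity, fp_per_case


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of classified boxes whose predicted label matches the truth."""
    if not y_true:
        return 0.0
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
```

For example, with three ground-truth teeth over two cases, two correct detections and one spurious box give a sensitivity of 2/3 and 0.5 false positives per case.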

Conclusions

The proposed method can be useful for automatic filing of dental charts for forensic identification and as a preprocessing step for dental disease prescreening.

Acknowledgements

This study was supported in part by a Grant-in-Aid for Scientific Research (C) JSPS KAKENHI (19K10347) and MEXT KAKENHI (26108005).

Ethics declarations

Conflict of interest

Chisako Muramatsu, Takumi Morishita, Wataru Nishiyama, Yoshiko Ariji, Xiangrong Zhou, Takeshi Hara, Akitoshi Katsumata, Eiichiro Ariji, and Hiroshi Fujita declare that they have no conflict of interest. Ryo Takahashi and Tatsuro Hayashi are employees of Media Co., Ltd.

Human rights statements

All procedures followed were in accordance with the ethical standards of the responsible committee on human experimentation (institutional and national) and with the Helsinki Declaration of 1975, as revised in 2008.

Informed consent

Informed consent was waived by the institutional review board.

About this article

Cite this article

Muramatsu, C., Morishita, T., Takahashi, R. et al. Tooth detection and classification on panoramic radiographs for automatic dental chart filing: improved classification by multi-sized input data. Oral Radiol 37, 13–19 (2021). https://doi.org/10.1007/s11282-019-00418-w

DOI: https://doi.org/10.1007/s11282-019-00418-w