Abstract

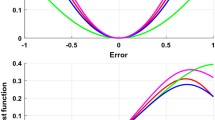

This study proves that a neural network using the cosine modulated symmetric exponential function (CosExp), a non-monotonic activation function, can emulate spline networks by approximating polynomials and step functions. This means that a network with the CosExp function is equivalent to spline networks. It is also equivalent to neural networks that use sigmoidal, hyperbolic tangent, and Gaussian activation functions, as proved by DasGupta and Schnitger. In a multilayer structure, the CosExp function can form more local hills than the other functions, so a network using it can quickly localize patterns in the input space even with fewer layers. A monotonic function, by contrast, needs more layers to form such local hills, so training such a network for pattern classification requires more units and epochs. This affects the training speed of the network for pattern classification, which in turn reflects the network's capability. To test the pattern classification capacity of the CosExp function, we used the Cascade-Correlation neural network. Cascade-Correlation is a supervised learning algorithm that automatically determines the size and topology of the network: it adds new hidden units one at a time, and each new unit forms its own hidden layer, producing a multilayer structure. Two benchmark problems were used in the experiments: the iris plant classification problem and the tic-tac-toe endgame problem. The results are compared with those obtained with other activation functions.
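The abstract names the CosExp function but does not give its formula, so the sketch below assumes the hypothetical form cos(ωx)·exp(−x²) with a hypothetical ω = 2. Under that assumption it contrasts the number of interior local maxima ("hills") of one CosExp unit with a monotonic sigmoid, and shows the cascade wiring described above, in which every new hidden unit receives all inputs and all earlier hidden units. Training (candidate correlation maximization) is omitted, and the linear output unit is a simplifying assumption.

```python
import math
from typing import Callable, List


def cosexp(x: float, omega: float = 2.0) -> float:
    """Assumed CosExp form: cos(omega * x) * exp(-x**2).

    Hypothetical: both the formula and omega are illustrative assumptions,
    not the paper's definition.
    """
    return math.cos(omega * x) * math.exp(-x * x)


def sigmoid(x: float) -> float:
    """Standard logistic sigmoid, a monotonic baseline."""
    return 1.0 / (1.0 + math.exp(-x))


def count_local_maxima(f: Callable[[float], float], lo: float = -3.0,
                       hi: float = 3.0, steps: int = 6001) -> int:
    """Count strict interior local maxima ("hills") of f on a uniform grid."""
    xs = [lo + (hi - lo) * i / (steps - 1) for i in range(steps)]
    ys = [f(x) for x in xs]
    return sum(1 for i in range(1, steps - 1) if ys[i - 1] < ys[i] > ys[i + 1])


def cascade_forward(x: List[float], hidden_weights: List[List[float]],
                    output_weights: List[float],
                    act: Callable[[float], float] = cosexp) -> float:
    """Forward pass of a Cascade-Correlation-style network (training omitted).

    Hidden unit h sees a bias, every input, and the outputs of hidden units
    0..h-1, so each installed unit forms its own layer. hidden_weights[h]
    has length 1 + len(x) + h; output_weights has length
    1 + len(x) + len(hidden_weights). The linear output unit is an assumption.
    """
    features = [1.0] + list(x)  # bias followed by the raw inputs
    for w in hidden_weights:
        if len(w) != len(features):
            raise ValueError("hidden unit weight vector has the wrong length")
        net = sum(wi * fi for wi, fi in zip(w, features))
        features.append(act(net))  # the new unit feeds every later unit
    if len(output_weights) != len(features):
        raise ValueError("output weight vector has the wrong length")
    return sum(wi * fi for wi, fi in zip(output_weights, features))


print("hills, assumed CosExp:", count_local_maxima(cosexp))
print("hills, sigmoid:", count_local_maxima(sigmoid))
```

A single sigmoid unit has no interior hill on any interval, while the assumed CosExp unit already has several on [−3, 3]; this is only a one-unit illustration of the abstract's claim, not a reproduction of its experiments.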
In the experiments, the average number of epochs, the number of hidden units produced, the run time, and the average crossings per second over ten trials on each problem's training set were compared. For instance, in the iris plant classification problem, the CosExp function required about 53% of the average number of epochs needed by the sigmoid function and produced approximately 54% as many hidden units. In the tic-tac-toe problem, the average numbers of epochs and hidden units produced with the CosExp function were reduced to approximately one third of those obtained with the other activation functions; accordingly, learning was about three times faster. These results show that using the CosExp function as the activation function, with a predetermined set of parameters, can improve the performance of neural networks very significantly.
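The percentages above are ratios of mean values over ten trials. The short sketch below turns such a ratio into the implied speed-up factor, using only the figures quoted in the abstract (53% for iris epochs versus the sigmoid, and roughly one third for tic-tac-toe, read here as consistent with the stated three-fold speed-up). Treating training time as proportional to the epoch count is an assumption: in Cascade-Correlation the network grows, so later epochs cost more.

```python
def relative_cost(candidate_mean: float, baseline_mean: float) -> float:
    """Mean cost of the candidate as a fraction of the baseline's mean cost."""
    if baseline_mean <= 0:
        raise ValueError("baseline mean must be positive")
    return candidate_mean / baseline_mean


def implied_speedup(ratio: float) -> float:
    """Speed-up factor implied by a cost ratio.

    Assumes time is proportional to the counted quantity (e.g. epochs),
    which is only approximate when the network grows during training.
    """
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return 1.0 / ratio


# Ratios quoted in the abstract; no new experimental data.
iris_epoch_ratio = 0.53       # CosExp epochs / sigmoid epochs, iris
tictactoe_ratio = 1.0 / 3.0   # "approximately one third", tic-tac-toe
print(f"iris: sigmoid/CosExp epoch ratio = {implied_speedup(iris_epoch_ratio):.2f}")
print(f"tic-tac-toe: implied speed-up = {implied_speedup(tictactoe_ratio):.2f}")
```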

References

Lee, S.-W. (2004). Neural networks using a cosine-modulated symmetric exponential activation function. Journal of Science and Culture, 1(4), 85–91.

DasGupta, B., & Schnitger, G. (1993). Efficient approximation with neural networks: A comparison of gate functions. Pennsylvania: Pennsylvania State University.

Flake, G. W. (1993). Nonmonotonic activation functions in multilayer perceptrons. Dissertation, Institute for Advanced Computer Studies, Department of Computer Science, University of Maryland.

Fahlman, S. E., & Lebiere, C. (1990). The cascade-correlation learning architecture. In D. S. Touretzky (Ed.), Advances in neural information processing systems 2. Los Altos, CA: Morgan Kaufmann.

Prechelt, L. (1994). PROBEN1: A set of neural network benchmark problems and benchmarking rules (Technical Report 21/94). Fakultät für Informatik, Universität Karlsruhe, September 30.

Shultz, T. R., & Fahlman, S. E. (2010). Cascade-correlation. In Encyclopedia of machine learning (pp. 139–147).

Appendix

Definition 1

(DasGupta and Schnitger) Let \(e:\mathbf{N}^{2}\rightarrow \mathbf{R}_+\) be an error measure whose arguments are the number of nodes \(n\) of the network and the number of layers \(l\). Let \(\Gamma_1\) and \(\Gamma_2\) be two neural network architectures, and let \(N_1\) and \(N_2\) denote networks from \(\Gamma_1\) and \(\Gamma_2\), respectively.

-

\(\Gamma_1\) emulates \(\Gamma_2\) if and only if, for all functions \(f:[-1,1]^{d}\rightarrow \mathbf{R}\), whenever \(N_1\) approximates \(f\) with \(n\) hidden units, \(l\) layers, and error \(e(n,l)\), there exists an \(N_2\) that approximates \(f\) with \((n+1)^{k}\) hidden units, \(k(l+1)\) layers, and error \(e(n,l)\), for some constant \(k\).

-

\(\Gamma _1\) and \(\Gamma _2 \) are equivalent if and only if they emulate each other with respect to \(e\).
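To make the resource bounds in Definition 1 concrete, the sketch below computes the hidden-unit and layer budget that the emulating network \(N_2\) may use, given \(n\), \(l\), and the constant \(k\). Reading \((n+1)^{k}\) and \(k(l+1)\) as upper limits is our interpretation, and the names `Budget`, `emulation_budget`, and `fits_budget` are hypothetical helpers introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Budget:
    """Resources an emulating network N2 may use (read as upper limits)."""
    hidden_units: int
    layers: int


def emulation_budget(n: int, l: int, k: int) -> Budget:
    """Return (n + 1)**k hidden units and k * (l + 1) layers for constant k >= 1."""
    if n < 0 or l < 0 or k < 1:
        raise ValueError("need n >= 0, l >= 0, k >= 1")
    return Budget(hidden_units=(n + 1) ** k, layers=k * (l + 1))


def fits_budget(n2: int, l2: int, n: int, l: int, k: int) -> bool:
    """True if a candidate N2 with n2 units and l2 layers stays within the bounds."""
    b = emulation_budget(n, l, k)
    return n2 <= b.hidden_units and l2 <= b.layers


print(emulation_budget(10, 2, 2))  # Budget(hidden_units=121, layers=6)
```

The unit budget grows polynomially in \(n\) and the layer budget only linearly in \(l\), which is why emulation in this sense preserves "efficient" approximation up to a polynomial blow-up.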

The next theorem states that neural networks whose activation functions satisfy the specified conditions can approximate polynomials.

Theorem 1

(DasGupta and Schnitger) A neural network with an activation function \(g:\mathbf{R}\rightarrow \mathbf{R}\) can \(\varepsilon\)-approximate any polynomial over some finite domain \([-D,D]\) if and only if there exist real numbers \(\alpha, \beta\) with \(\alpha > 0\) and an integer \(k\) such that

-

\(g\) can be represented by the power series \(\sum _{i=0}^\infty {a_i (x-\beta )^{i}} \) for all \(x\in \left[ {-\alpha ,\alpha } \right] \).

-

The coefficients are rational numbers of the form \(a_i = \frac{p_i}{q_i}\) with \(|p_i|, |q_i| \le 2^{\mathrm{poly}(i)}\) (for \(i > 1\)).

-

For each \(i > 2\) there exists \(j\) with \(i \le j \le i^{k}\) and \(a_j \ne 0\).
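The conditions of Theorem 1 can be checked mechanically for a concrete power series. The sketch below does so for the Gaussian \(e^{-x^{2}}\) (one of the activation functions mentioned in the abstract) expanded about \(\beta = 0\), where \(a_{2m} = (-1)^{m}/m!\) and all odd coefficients vanish. The choices \(k = 2\) and \(\mathrm{poly}(i) = i^{2}\) are our assumptions, and inspecting finitely many coefficients is a sanity check, not a proof. The first condition holds for every \(\alpha\) because the series converges on all of \(\mathbf{R}\).

```python
from fractions import Fraction
from math import factorial


def gaussian_taylor_coeffs(max_degree: int) -> list:
    """Exact Taylor coefficients a_i of exp(-x**2) about beta = 0.

    exp(-x**2) = sum_m (-1)**m * x**(2m) / m!, so a_(2m) = (-1)**m / m!
    and every odd coefficient is zero.
    """
    coeffs = []
    for i in range(max_degree + 1):
        if i % 2:
            coeffs.append(Fraction(0))
        else:
            m = i // 2
            coeffs.append(Fraction((-1) ** m, factorial(m)))
    return coeffs


def coefficients_bounded(coeffs, poly=lambda i: i * i) -> bool:
    """Second condition with an assumed poly(i): |p_i|, |q_i| <= 2**poly(i) for i > 1."""
    for i, c in enumerate(coeffs):
        if i > 1 and c != 0:
            limit = 2 ** poly(i)
            if abs(c.numerator) > limit or c.denominator > limit:
                return False
    return True


def satisfies_condition3(coeffs, k: int) -> bool:
    """Third condition, checked for every i > 2 whose window [i, i**k] fits in coeffs."""
    top = len(coeffs) - 1
    i = 3
    while i ** k <= top:
        if not any(coeffs[j] != 0 for j in range(i, i ** k + 1)):
            return False
        i += 1
    return True


coeffs = gaussian_taylor_coeffs(100)
print(coefficients_bounded(coeffs), satisfies_condition3(coeffs, 2))
```

With \(k = 2\) every window \([i, i^{2}]\) for \(i > 2\) contains an even index, so the vanishing odd coefficients never violate the third condition.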

Theorem 2

(DasGupta and Schnitger) A neural network with an activation function \(g:\mathbf{R}\rightarrow \mathbf{R}\) and \(n\) hidden units can approximate the step function over the domain \([-1,1]\setminus[-2^{-n},2^{-n}]\) with error at most \(2^{-n}\) when

-

\(\left| {g(x)-g(x+\varepsilon )} \right| =O(\varepsilon /{x^{2}})\), for \(x\ge 1,\, \varepsilon \ge 0\).

-

\(0<\int _0^\infty {\left| {g(1+u^{2})} \right| } du<\infty \).

-

\(\left| \int_{2^{N}}^\infty g(u^{2})\, du \right| = O(1/N)\), for all \(N \ge 1\).
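As a numerical sanity check (not a proof), the sketch below evaluates the three conditions of Theorem 2 for the Gaussian \(g(x) = e^{-x^{2}}\), again chosen as an example from the activation functions the abstract mentions. The grid for \(x\) and \(\varepsilon\), the trapezoidal rule, and the truncation of the infinite integrals are all assumptions; the integrands decay so fast that the truncated tails are negligible in double precision.

```python
import math


def g(x: float) -> float:
    """Gaussian activation exp(-x**2), the example checked here."""
    return math.exp(-x * x)


def trapezoid(f, a: float, b: float, steps: int = 20000) -> float:
    """Composite trapezoidal rule on [a, b]."""
    h = (b - a) / steps
    total = 0.5 * (f(a) + f(b))
    for i in range(1, steps):
        total += f(a + i * h)
    return total * h


def condition1_ratio(xs, eps_values) -> float:
    """Largest |g(x) - g(x + eps)| * x**2 / eps on the grid (x >= 1, eps > 0).

    A small, bounded value is consistent with |g(x) - g(x + eps)| = O(eps / x**2).
    """
    return max(abs(g(x) - g(x + e)) * x * x / e for x in xs for e in eps_values)


def condition2_integral(upper: float = 10.0) -> float:
    """Integral of |g(1 + u**2)| over [0, upper]; the remaining tail is negligible."""
    return trapezoid(lambda u: abs(g(1.0 + u * u)), 0.0, upper)


def condition3_tail(n: int, width: float = 5.0) -> float:
    """|Integral of g(u**2) from 2**n to infinity|, truncated at 2**n + width (n is N)."""
    a = 2.0 ** n
    return abs(trapezoid(lambda u: g(u * u), a, a + width))


xs = [1.0 + 0.01 * i for i in range(1901)]  # x in [1, 20]
eps_values = [1e-3, 1e-2, 0.1, 1.0, 10.0]
print("condition 1 ratio:", condition1_ratio(xs, eps_values))
print("condition 2 integral:", condition2_integral())
print("condition 3, N * tail:", [n * condition3_tail(n) for n in range(1, 6)])
```

For the first condition, the mean value theorem gives \(|g(x)-g(x+\varepsilon)| \le 2x e^{-x^{2}}\varepsilon\) for \(x \ge 1\), and \(2x^{3}e^{-x^{2}}\) stays below 1, which matches the bounded ratio the grid reports.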

Theorem 3

(DasGupta and Schnitger) Assume that \(\Gamma\) is a neural network architecture that can approximate polynomials in the sense of Theorem 1 and the step function in the sense of Theorem 2. Then:

-

\(\Gamma \) can emulate spline networks.

-

If each activation function in a \(\Gamma\) neural network can be approximated over the domain \([-2^{n}, 2^{n}]\) by a spline network with \(n\) units, a constant number of layers, and error \(2^{-n}\), then \(\Gamma\) is equivalent to splines.

Cite this article

Lee, SW., Song, HS. Emulation of Spline Networks Through Approximation of Polynomials and Step Function of Neural Networks with Cosine Modulated Symmetric Exponential Function. Wireless Pers Commun 79, 2579–2594 (2014). https://doi.org/10.1007/s11277-014-1664-8
