Abstract

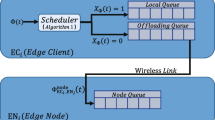

Achieving queue stability under an average power constraint while maximizing data processing is a prominent research issue in networking. These properties must also be ensured in Mobile Edge Computing (MEC) models, which provide smooth and continuous integration of innovative applications with low latency and improved quality. Ensuring them requires an offloading plan that regulates processing capacity and network operational cost at the edge nodes, which in turn improves the efficiency of IoT with edge computing. This paper proposes a task-based offloading algorithm that makes smart decisions for better resource allocation and augments the computational capabilities of the MEC server by allowing mobile devices (MDs) to offload their computation-intensive tasks to proximal Multi-eNodeBs (Multi-eNBs) according to the time-varying channel state. A wireless MEC model in which many users broadcast their tasks is considered. Our proposed framework, named DCARL–ARP (Deep Convolution Attention Reinforcement Learning–Adaptive Rewarding Policy), combines deep convolution and Lyapunov optimization with a feature map attention mechanism; it maintains queue stability and minimizes the consumed energy while maximizing the reward, even under deadline constraints. DCARL–ARP is used to make the appropriate state-selection decisions in each time frame. Performance analysis and evaluation show that DCARL–ARP achieves the optimum computation rate, making it suitable for real-time implementation under varying channel conditions. The experimental evaluation shows that this mechanism reduces the average energy consumption for execution by 0.02% and the average data queue length by 50%.
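
The abstract pairs deep reinforcement learning with Lyapunov optimization. As a rough illustration of the Lyapunov side only, the Python sketch below applies the generic drift-plus-penalty rule to a single mobile device that, in each slot, either computes locally or offloads. With the quadratic Lyapunov function L(Q) = Q²/2, the one-slot drift is bounded by a constant plus Q(t)(A(t) − d(t)); adding the penalty V·E(t) and dropping terms that do not depend on the decision leaves "minimize V·E(t) − Q(t)·d(t)", where d(t) is the number of bits served and E(t) the energy spent. This is not DCARL–ARP: there is no convolutional network, attention, or adaptive reward here, and the energy and rate models as well as every name and value (SlotParams, kappa, cycles_per_bit, the Shannon-rate uplink, the numeric defaults) are hypothetical assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class SlotParams:
    """Hypothetical per-slot system parameters (illustrative, not taken from the paper)."""
    slot_len: float = 1.0          # slot duration T in seconds
    cpu_freq: float = 1e8          # local CPU frequency f in cycles/s
    cycles_per_bit: float = 100.0  # CPU cycles needed to process one bit
    kappa: float = 1e-27           # effective switched capacitance of the local CPU
    tx_power: float = 0.1          # transmit power p in watts
    bandwidth: float = 1e6         # uplink bandwidth B in Hz
    noise: float = 1e-10           # receiver noise power N0 in watts


def local_option(prm: SlotParams) -> Tuple[float, float]:
    """Bits processed and energy spent if the device computes locally for one slot."""
    bits = prm.cpu_freq * prm.slot_len / prm.cycles_per_bit
    energy = prm.kappa * prm.cpu_freq ** 3 * prm.slot_len
    return bits, energy


def offload_option(gain: float, prm: SlotParams) -> Tuple[float, float]:
    """Bits uploaded (assumed Shannon rate) and transmit energy if the device offloads."""
    rate = prm.bandwidth * math.log2(1.0 + prm.tx_power * gain / prm.noise)
    return rate * prm.slot_len, prm.tx_power * prm.slot_len


def drift_plus_penalty_decision(queue: float, gain: float, v: float,
                                prm: SlotParams) -> Tuple[str, float, float]:
    """Choose the mode minimising V * energy - Q(t) * served_bits for this slot."""
    options = {"local": local_option(prm), "offload": offload_option(gain, prm)}

    def score(mode: str) -> float:
        bits, energy = options[mode]
        # A device cannot serve more bits than are waiting in its queue.
        return v * energy - queue * min(bits, queue)

    best = min(options, key=score)  # ties resolve to "local", the first key
    bits, energy = options[best]
    return best, min(bits, queue), energy


def simulate(arrivals: List[float], gains: List[float], v: float,
             prm: Optional[SlotParams] = None) -> List[Tuple[str, float, float]]:
    """Run the per-slot rule; the queue evolves as Q(t+1) = max(Q(t) - served, 0) + A(t)."""
    prm = prm if prm is not None else SlotParams()
    queue = 0.0
    history = []
    for arrival, gain in zip(arrivals, gains):
        mode, served, energy = drift_plus_penalty_decision(queue, gain, v, prm)
        queue = max(queue - served, 0.0) + arrival
        history.append((mode, queue, energy))
    return history
```

A larger V weights energy more heavily relative to the backlog, which is the usual energy versus queue-length trade-off of drift-plus-penalty: with a long queue and a good channel the rule offloads, while a very large V pushes it back to cheaper local execution. In a learning-based framework such as the one described above, a trained policy would presumably replace this exhaustive per-slot comparison.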

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Anusha, P., Bai, V.M.A. Online computation offloading via deep convolutional feature map attention reinforcement learning and adaptive rewarding policy. Wireless Netw 29, 3769–3779 (2023). https://doi.org/10.1007/s11276-023-03437-y

DOI: https://doi.org/10.1007/s11276-023-03437-y