Abstract

Due to their constrained nature, wireless sensor networks (WSNs) are often optimised for a specific application domain, for example by designing a custom medium access control protocol. However, when several WSNs are located in close proximity to one another, the performance of the individual networks can be negatively affected by unexpected protocol interactions. The performance impact of this ‘protocol interference’ depends on the exact set of protocols and (network) services used. This paper therefore proposes an optimisation approach that uses self-learning techniques to automatically learn the optimal combination of services and/or protocols in each individual network. We introduce tools capable of discovering this optimal set of services and protocols for any given set of co-located heterogeneous sensor networks. These tools eliminate the need for manual reconfiguration while requiring only minimal a priori knowledge about the network. Continuous re-evaluation of the decision process provides resilience to volatile networking conditions in highly dynamic environments. The methodology is experimentally evaluated in a large-scale testbed using both single-hop and multi-hop scenarios, showing a clear decrease in end-to-end delay and an increase in reliability of almost 25 %.

References

van den Akker, D., & Blondia, C. (2011). On the effects of interference between heterogeneous sensor network MAC protocols. In IEEE international conference on mobile ad-hoc and sensor systems (IEEE MASS), pp. 560–569, IEEE Computer Society.

Wakamiya, N., Arakawa, S., & Murata, M. (2009). Self-organization based network architecture for new generation networks. In 2009 First international conference on emerging network intelligence, pp. 61–68.

De Poorter, E., Latre, B., Moerman, I., & Demeester, P. (2008). Symbiotic networks: Towards a new level of cooperation between wireless networks. In Published in special issue of the wireless personal communications journal, Springer, Netherlands, 45(4), 479–495.

Lanza-Gutierrez, J. M., Gomez-Pulido, J. A., Vega-Rodriguez, M. A., & Sanchez-Perez, J. M. (2012). Multi-objective evolutionary algorithms for energy-efficiency in heterogeneous wireless sensor networks. In SAS 2012: IEEE Sensors Applications Symposium, Feb 7, 2012–Feb 9, 2012, Brescia, Italy.

Deb, K., Agrawal, S., Pratap, A., & Meyarivan, T. (2000). A fast elitist non-dominated sorting genetic algorithm for multi-objective optimization: NSGA-II. In Parallel problem solving from nature PPSN VI.

Zitzler, E., Laumanns, M., & Thiele, L. (2001). SPEA2: Improving the strength Pareto evolutionary algorithm. In EUROGEN.

Özdemir, S., Bara, A. A., & Khalil, Ö. A. Multi-objective evolutionary algorithm based on decomposition for energy efficient coverage in wireless sensor networks.

Coello, C. A. C., Lamont, G. B., & Van Veldhuizen, D. A. (2007). Evolutionary algorithms for solving multi-objective problems (2nd ed.). Berlin: Springer.

Martins, F. V. C., Carrano, E. G., Wanner, E. F., Takahashi, R. H., & Mateus, G. R. (2011). A hybrid multi-objective evolutionary approach for improving the performance of wireless sensor networks. IEEE Sensors Journal, 11(3), 361–403.

http://www.me.utexas.edu/bard/LP/LP20Handouts/CPLEX20Tutorial20Handout.

Wang, P., & Wang, T. (2006). Adaptive routing for sensor networks using reinforcement learning. In CIT ’06 proceedings of the sixth IEEE international conference on computer and information technology, Charlotte Convention Center Charlotte, NC.

Ye, Z., & Abouzeid, A. A. (2010). Layered sequential decision policies for cross-layer design of multihop wireless networks. In Information theory and applications workshop (ITA’10), San Diego, CA.

Lee, M., Marconett, D., Ye, X., & Yoo, S. (2007). Cognitive network management with reinforcement learning for wireless mesh networks. In IP operations and management, pp. 168–179, doi:10.1007/978-3-540-75853-2-15.

Watkins, C. J. C. H., & Dayan, P. (1992). Technical note Q-learning. Machine Learning, 8, 279–292.

Ad hoc on-demand distance vector (AODV) routing. Networking group request for comments (rfc): 3561, http://tools.ietf.org/html/rfc3561 (2003).

Bertsekas, D. P. (2010). Approximate policy iteration: A survey and some new methods. Journal of Control Theory and Applications, MIT, 9, 310–335, Report LIDS—2833.

Perkins, T. J., & Precup, D. (2002). A convergent form of approximate policy iteration. In Advance in neural information processing Systems 15, NIPS 2002, Decembre 9–14. Vancouver, British, Columbia, Canada.

Falconer, D. D., Adachi, F., & Gudmundson, B. (1995). Time division multiple access methods for wireless personal communications. IEEE Communications Magazine. doi:10.1109/35.339881.

Jurdak, R., Baldi, P., & Lopes, C. V. (2007). Adaptive low power listening for wireless sensor networks. IEEE Transactions on Mobile Computing, 6(8). doi:10.1109/TMC.2007.1037.

Kleinrock, L., & Tobagi, F. A. (1975). Packetswitching in radio channels: carrier sense multiple-access modes and their throughput-delay characteristics. IEEE Transactions on Communications, 23, 1400–1416.

De Poorter, E., Bouckaert, S., Moerman, I., & Demeester, P. (2011). Non-intrusive aggregation in wireless sensor networks. Ad Hoc Networks, 9(3), 324–340.

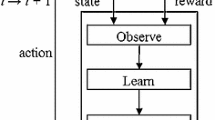

Sutton, R. S., & Barto, A. G. (1998). Reinforcement learning: An introduction. In A bradford book. MIT Press, Cambridge, MA.

Kaelblign, L. P., Littman, M. L., & Moore, A. W. (1996). Reinforcement learning: A survey. Journal of Artificial Intelligence Research, 4, 237–285.

Dietterich, T. G., & Langley, O. (2007). Machine learning for cognitive networks: Technology assessment and research challenges in cognitive networks: Towards self aware networks. Wiley, Chichester, UK. doi:10.1002/9780470515143.ch5.

Lagoudakis, M., & Parr, R. (2001). Model-free least-squares policy iteration. In Proceedings of NIPS.

Lagoudakis, M. G., & Parr, R. (2003). Least-squares policy iteration. Journal of Machine Learning Research, 4, 1107–1149.

Rovcanin, M., Poorter, E. D., Moerman, I., & Demeester, P. (2014). A reinforcement learning based solution for cognitive network cooperation between co-located, heterogeneous wireless sensor networks. AD Hoc Networks, 17, 98–113.

Akker, D. V. D., & Blondia, C. (2013). Virtual gateways: enabling connectivity between MAC heterogeneous sensor networks. International Journal of Sensor Networks, 14(3), 133–143 Inderscience.

De Poorter, E., Troubleyn, E., Moerman, I., & Demeester, P. (2011). IDRA: A flexible system architecture for next generation wireless sensor networks. Wireless Networks, 17(6), 1423–1440.

Tytgat, L., Jooris, B., De Mil, P., Latr, B., Moerman, I., & Demeester, P. UGentWiLab, a real-life wireless sensor testbed with environment emulation. In 6th European conference on wireless sensor networks (EWSN 2009), URL:https://biblio.ugent.be/publication/676545.

Acknowledgments

This research is funded by the FWO-Flanders through an FWO post-doctoral research grant for Eli De Poorter and through an Aspirant grant for Daniel van den Akker.

About this article

Cite this article

Rovcanin, M., De Poorter, E., van den Akker, D. et al. Experimental validation of a reinforcement learning based approach for a service-wise optimisation of heterogeneous wireless sensor networks. Wireless Netw 21, 931–948 (2015). https://doi.org/10.1007/s11276-014-0817-8

DOI: https://doi.org/10.1007/s11276-014-0817-8