Abstract

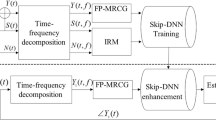

Eliminating the negative effect of adverse environmental noise has been an intriguing and challenging task for speech technology. Neural network (NN)-based denoising techniques have achieved favorable performance in recent years. In particular, adding skip connections to NNs has been shown to significantly improve the performance of NN-based speech enhancement systems. However, in most studies, skip connections were added as a trick of the trade, without sufficient quantitative or qualitative analysis of the underlying principle. This paper presents a convolutional neural network (CNN) denoising architecture with skip connections for speech enhancement. To investigate the inherent mechanism by which NNs with skip connections learn noise properties, CNNs with different skip connection schemes are constructed, and a set of denoising experiments on statistically different noises is presented to evaluate the denoising architectures. Results show that CNNs with skip connections provide better denoising ability than the baseline, i.e., the basic CNN, for both stationary and nonstationary noises. The benefit of adding more sophisticated skip connections is more significant for nonstationary noises than for stationary noises, which implies that the complex properties of noise can be learned by CNNs with more skip connections.
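To make the idea of a skip connection concrete, the following is a minimal sketch, assuming an additive (residual-style) connection around two 1-D convolutions applied to a single feature frame. The abstract does not specify the layer sizes, input features, or the particular skip schemes compared, so every function name, kernel, and shape below is hypothetical and chosen only for illustration.

```python
import numpy as np


def conv1d_same(x, kernel):
    """1-D convolution, output trimmed to the same length as the input."""
    return np.convolve(x, kernel, mode="same")


def relu(x):
    return np.maximum(x, 0.0)


def plain_block(x, k1, k2):
    """Baseline (hypothetical): two stacked convolutions, no skip connection."""
    return conv1d_same(relu(conv1d_same(x, k1)), k2)


def skip_block(x, k1, k2):
    """Same two convolutions plus an additive skip connection from the input."""
    return x + plain_block(x, k1, k2)


rng = np.random.default_rng(0)
noisy_frame = rng.standard_normal(64)  # hypothetical noisy feature frame

# Zero kernels stand in for "nothing learned yet" (an assumption for illustration).
k1 = np.zeros(3)
k2 = np.zeros(3)

out_plain = plain_block(noisy_frame, k1, k2)  # all zeros: the input is lost
out_skip = skip_block(noisy_frame, k1, k2)    # equals the input: identity path
```

With zero kernels the plain block discards its input, while the skip block passes it through unchanged, so the convolutions in the skip block only need to model a correction to the input (for example, an estimate of the noise to subtract). This is one common reading of why skip connections help; it is not necessarily the mechanism analyzed in the paper.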

References

Wang, D. L., & Chen, J. T. (2018). Supervised speech separation based on deep learning: An overview. IEEE Transactions on Audio, Speech, and Language Processing, 26(10), 1702–1726.

Virtanen, T., Singh, R., & Raj, B. (2012). Techniques for noise robustness in automatic speech recognition. John Wiley & Sons.

Bolner, F., Goehring, T., Monaghan, J., Dijk, B. V., Wouters, J., & Bleeck, S. (2016). Speech enhancement based on neural networks applied to cochlear implant coding strategies. In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 6520-6524).

Loizou, P. C. (2013). Speech enhancement: Theory and practice. CRC Press.

Wang, Q., Du, J., Dai, L. R., & Lee, C. H. (2018). A multiobjective learning and ensembling approach to high performance speech enhancement with compact neural network architectures. IEEE Transactions on Audio, Speech, and Language Processing, 26(7), 1185–1197.

Dahl, G., Yu, D., Deng, L., & Acero, A. (2011). Large vocabulary continuous speech recognition with context-dependent DBN-HMMs. In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 4688-4691).

Dahl, G. E., Yu, D., Deng, L., & Acero, A. (2012). Context-dependent pre-trained deep neural networks for large-vocabulary speech recognition. IEEE Transactions on Audio, Speech, and Language Processing, 20(1), 30–42.

Abdel-Hamid, O., Mohamed, A.-R., Jiang, H., Deng, L., Penn, G., & Yu, D. (2014). Convolutional neural networks for speech recognition. IEEE Transactions on Audio, Speech, and Language Processing, 22(10), 1533–1545.

Seltzer, M. L., Yu, D., & Wang, Y. (2013). An investigation of deep neural networks for noise robust speech recognition. In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 7398-7402).

Rabiner, L. R. (1989). A tutorial on hidden Markov models and selected applications in speech recognition. Proceedings of the IEEE, 77(2), 257–286.

Fu, S.-W., Tsao, Y., & Lu, X. (2016). SNR-aware convolutional neural network modeling for speech enhancement. In Seventeenth Proceedings of the Annual Conference of the International Speech Communication Association, San Francisco, CA, USA, (pp. 8–12).

Kounovsky, T., & Malek, J. (2017). Single channel speech enhancement using convolutional neural network. Electronics, Control, Measurement, Signals and their Application to Mechatronics (ECMSM).

Park, S. R., & Lee, J. W. (2017). A fully convolutional neural network for speech enhancement. In the Eighteenth Proceedings of the Annual Conference of the International Speech Communication Association (INTERSPEECH).

Vincen, P., Larochelle, H., Lajoie, I., Bengio, Y., & Manzagol, P.-A. (2010). Stacked denoising autoencoders: Learning useful representations in a deep network with a local denoising criterion. Journal of Machine Learning Research, 11(12), 3371–3408.

Osako, K., Singh, R., & Raj, B. (2015). Complex recurrent neural networks for denoising speech signals. In Applications of Signal Processing to Audio and Acoustics.

Radford, A., Metz, L., & Chintala, S. (2015). Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434.

Ronneberger, O., Fischer, P., & Brox, T. (2015). U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer (pp. 234–241).

Pascual, S., Bonafonte, A., & Serrà, J. (2017). SEGAN: speech enhancement generative adversarial network. In the Eighteenth Proceedings of the Annual Conference of the International Speech Communication Association (INTERSPEECH).

Michelsanti, D., & Tan, Z.-H. (2017). Conditional generative adversarial networks for speech enhancement and noise-robust speaker verification. In the Eighteenth Proceedings of the Annual Conference of the International Speech Communication Association (INTERSPEECH).

He, K., Zhang, X., Ren, S., & Sun, J. (2015). Deep residual learning for image recognition. arXiv preprint arXiv:1512.03385.

Mao, X. J., Shen, C. H., & Yang, Y. B. (2016). Image denoising using very deep fully convolutional encoder-decoder networks with symmetric skip connections. arXiv preprint arXiv:1603.09056.

Shi, Y. P., Rong, W. C., & Zheng, N. N. (2018). Speech enhancement using convolutional neural network with skip connections. In the 11th international symposium on Chinese spoken language processing (ISCSLP).

Lim, J. S., & Oppenheim, A. V. (1978). All-pole modeling of degraded speech. IEEE Transactions on Acoustics, Speech, and Signal Processing, 26(3), 197–210.

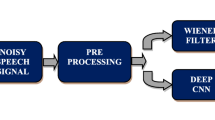

Ram, R., & Mohanty, M. N. (2018). The use of deep learning in speech enhancement. In Proceedings of the First International Conference on Information Technology and Knowledge Management, 14, 107–111.

Hu, Y., & Loizou, P. C. (2003). A perceptually motivated approach for speech enhancement. IEEE Transactions on Speech and Audio Processing, 11(5), 457–465.

Qian, Y., Bi, M., Tan, T., & Yu, K. (2016). Very deep convolutional neural networks for noise robust speech recognition. IEEE Transactions, Audio, Speech, and Language Processing, 24(12), 2263–2276.

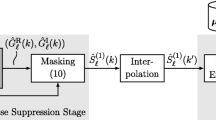

Zhao, Y., Wang, Z.-Q., & Wang, D. L. (2019). Two-stage deep learning for noisy-reverberant speech enhancement. IEEE Transactions on Audio, Speech, Language Processing, 27(1), 53–62.

Wang, D., & Zhang, X. W. (2015). THCHS-30: A free Chinese speech corpus. arXiv preprint arXiv: 1512.01882v2.

Piczak, K. J. (2015). ESC: Dataset for environmental sound classification. In Proceedings of the ACM International Conference on Multimedia (pp. 1015-1018).

Said, S. E., & Dickey, D. A. (1984). Testing for unit roots in autoregressive moving average models of unknown order. Biometrika, 71(3), 599–607.

MacKinnon, J. G. (2010). Critical values for Cointegration tests. Queen’s Economics Department Working Paper, 1227.

MacKinnon, J. G. (1994). Approximate asymptotic distribution functions for unit-root and cointegration tests. Journal of Business and Economic Statistics, 12, 167–176.

Seabold, S., & Perktold, J. (2010). Statsmodels: Econometric and statistical modeling with python. In Proceedings of the 9th Python in Science Conference.

Glorot, X., & Bengio, Y. (2010). Understanding the difficulty of training deep feedforward neural networks. In the thirteenth International Conference on Artificial Intelligence and Statistics, 9, 249–256.

Rumelhart, D. E., Hinton, G. E., & Williams, R. J. (1986). Learning representations by back-propagating errors. Nature, 323(6088), 533–536.

Rix, A. W., Beerends, J. G., Hollier, M. P., & Hekstra, A. P. (2001). Perceptual evaluation of speech quality (pesq)-a new method for speech quality assessment of telephone networks and codecs. IEEE Transactions on Acoustics, Speech, and Signal Processing, 2, 749–752.

Taal, C. H., Hendriks, R. C., Heusdens, R., & Jensen, J. (2010). A short-time objective intelligibility measure for time-frequency weighted noisy speech. In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 4214-4217).

Gray, A., & Markel, J. (1976). Distance measures for speech processing. IEEE Transactions on Acoustics, Speech, and Signal Processing, 24(5), 380–391.

Hegde, V., & Pallavi, M. S. (2015). Descriptive analytical approach to analyze the student performance by comparative study using Z score factor through R language. In IEEE International Conference on Computational Intelligence and Computing Research (ICCIC) (pp. 10-12).

Abdullah, N., Rashid, N. E. A., Khan Z. I., & Musirin, I. (2015). Analysis of different Z-score data to the neural network for automatic FSR vehicle classification. In the Third IET International Radar Conference.

Chittineni, S., & Bhogapathi, R. B. (2012). A study on the behavior of a neural network for grouping the data. In the International Journal of Computer Science Issues (IJCSI), 9(1), 228–234.

Lim, J. S., & Oppenheim, A. V. (1979). Enhancement and bandwidth compression of noisy speech. Proceedings of the IEEE, 67(12), 1586–1604.

Acknowledgements

This work is jointly supported by Guangdong Key R&D Project (Grant No. 2018B030338001), NSF of China (Grant No. 61771320) and Shenzhen Science & Innovation Funds (Grant No. JCYJ 20170302145906843).

About this article

Cite this article

Zheng, N., Shi, Y., Rong, W. et al. Effects of Skip Connections in CNN-Based Architectures for Speech Enhancement. J Sign Process Syst 92, 875–884 (2020). https://doi.org/10.1007/s11265-020-01518-1

DOI: https://doi.org/10.1007/s11265-020-01518-1