Abstract

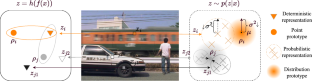

Contrastive learning has driven tremendous breakthroughs in Semi-Supervised Semantic Segmentation (S4). However, because annotations are limited, the guidance for unlabeled images is generated by the model itself; this guidance inevitably contains noise, which disturbs the unsupervised training process. To address this issue, we propose a robust contrastive-based S4 framework, termed the Probabilistic Representation Contrastive Learning (PRCL) framework, to enhance the robustness of the unsupervised training process. We model each pixel-wise representation as a Probabilistic Representation (PR) via a multivariate Gaussian distribution and tune the contribution of ambiguous representations to tolerate the risk of inaccurate guidance in contrastive learning. Furthermore, we introduce Global Distribution Prototypes (GDP) by gathering all PRs throughout the whole training process. Since a GDP contains the information of all representations of the same class, it is robust to instantaneous noise in the representations and captures their intra-class variance. In addition, we generate Virtual Negatives (VNs) based on the GDP to participate in the contrastive learning process. Extensive experiments on two public benchmarks demonstrate the superiority of our PRCL framework.
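The probabilistic contrast described above can be illustrated with a small NumPy sketch. It assumes diagonal covariances, a mutual-likelihood-style similarity of the kind used for probabilistic face embeddings, an InfoNCE objective, and virtual negatives drawn from another class's Gaussian prototype by reparameterized sampling. All function names, the temperature `tau`, and the choice to give each virtual negative the prototype's variance are illustrative assumptions rather than the PRCL implementation.

```python
import numpy as np


def mls_similarity(mu_a, var_a, mu_b, var_b):
    """Mutual-likelihood-style score between diagonal Gaussians (higher = more similar).

    Summing the two variances shrinks the squared-mean-gap term for uncertain
    representations, so their (possibly wrong) guidance pulls less hard; the
    log term penalizes uncertainty itself.
    """
    var_sum = var_a + var_b
    return -0.5 * np.sum((mu_a - mu_b) ** 2 / var_sum + np.log(var_sum), axis=-1)


def sample_virtual_negatives(proto_mu, proto_var, num, rng):
    """Draw `num` points mu + sigma * eps from a diagonal-Gaussian prototype."""
    eps = rng.standard_normal((num, proto_mu.shape[-1]))
    return proto_mu + np.sqrt(proto_var) * eps


def info_nce(anchor, positive, negatives, tau=0.5):
    """InfoNCE over probabilistic similarities.

    anchor, positive: (mu, var) pairs of shape (D,); negatives: (mu, var) of shape (K, D).
    """
    pos = mls_similarity(anchor[0], anchor[1], positive[0], positive[1])
    neg = mls_similarity(anchor[0][None], anchor[1][None], negatives[0], negatives[1])
    logits = np.concatenate([[pos], neg]) / tau
    logits = logits - logits.max()  # subtract max for numerical stability
    return -(logits[0] - np.log(np.exp(logits).sum()))


# Toy usage with hypothetical values.
rng = np.random.default_rng(0)
D = 4
anchor = (np.zeros(D), np.full(D, 0.1))
positive = (np.full(D, 0.05), np.full(D, 0.1))
proto_mu, proto_var = np.ones(D), np.full(D, 0.2)  # prototype of some other class
vn_mu = sample_virtual_negatives(proto_mu, proto_var, num=8, rng=rng)
vn_var = np.broadcast_to(proto_var, vn_mu.shape)  # assumption: VNs inherit the prototype variance
loss = info_nce(anchor, positive, (vn_mu, vn_var))
print(f"loss = {loss:.4f}")
```

The variance sum in the denominator is the key design choice in this sketch: when either representation is uncertain, a large mean gap costs less, so noisy pseudo-label guidance is softened instead of enforced at full strength.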



Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Communicated by Ming-Hsuan Yang

Proof of Equation 4

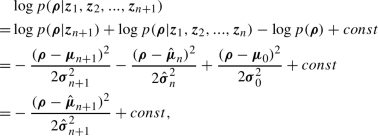

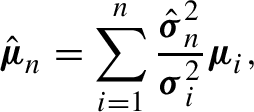

We regard the prototype as the posterior distribution after \(n\) observations of representations \(\{\varvec{z}_1, \varvec{z}_2, \dots , \varvec{z}_n\}\). Assuming that all observations are conditionally independent, the distribution prototype can be written as \(p(\varvec{\rho }|\varvec{z}_1, \varvec{z}_2, \dots , \varvec{z}_{n})\). Without loss of generality, we consider only the one-dimensional case; the proof extends directly to all dimensions because the feature dimensions are assumed to be independent. Let the distribution prototype \(p(\varvec{\rho }|\varvec{z}_1, \varvec{z}_2, \dots , \varvec{z}_{n})\) have mean \(\hat{\mu }_n\) and variance \(\hat{\sigma }^2_n\). To obtain the new prototype \(p(\varvec{\rho }|\varvec{z}_1, \varvec{z}_2, \dots , \varvec{z}_{n+1})\), we add a new representation \(\varvec{z}_{n+1}\) as an observation. Taking the logarithm of this prototype, we have:

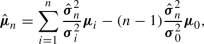

where "const" denotes terms independent of the prototype \(\varvec{\rho }\), and \(\varvec{\sigma }_0\) is the \(\varvec{\sigma }\) of the first representation. At the beginning of training, the representations are not yet meaningful, so we consider their reliability to be very low and let \(\varvec{\sigma }_0\rightarrow \infty \) (in experiments, an extremely large value). We then have

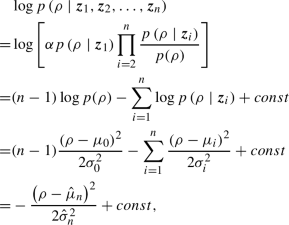
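The recursion can be checked numerically. The sketch below assumes that each observation \(\varvec{z}_{i}\) contributes an independent Gaussian likelihood with mean \(\mu _i\) and variance \(\sigma ^2_i\) (symbols introduced here for illustration). Under that assumption the textbook conjugate update is \(1/\hat{\sigma }^2_{n+1} = 1/\hat{\sigma }^2_n + 1/\sigma ^2_{n+1}\) and \(\hat{\mu }_{n+1} = \hat{\sigma }^2_{n+1}(\hat{\mu }_n/\hat{\sigma }^2_n + \mu _{n+1}/\sigma ^2_{n+1})\), and with \(\sigma _0\rightarrow \infty \) the initial term carries zero precision and drops out. The helper names are hypothetical, and the code checks this standard result rather than transcribing the paper's own equations.

```python
import numpy as np


def recursive_step(mu_hat, var_hat, mu_new, var_new):
    """One conjugate Gaussian update: precisions add, means combine precision-weighted."""
    var_next = 1.0 / (1.0 / var_hat + 1.0 / var_new)
    mu_next = var_next * (mu_hat / var_hat + mu_new / var_new)
    return mu_next, var_next


def batch_posterior(mus, vars_):
    """Closed form over all observations when the initial variance sigma_0 -> infinity."""
    precision = np.sum(1.0 / vars_, axis=0)
    mean = np.sum(mus / vars_, axis=0) / precision
    return mean, 1.0 / precision


# Hypothetical per-dimension observations (mu_i, sigma_i^2) of one class.
rng = np.random.default_rng(0)
mus = rng.normal(size=(5, 3))
vars_ = rng.uniform(0.1, 1.0, size=(5, 3))

# sigma_0 -> infinity, approximated by a very large finite variance.
mu_hat, var_hat = np.zeros(3), np.full(3, 1e12)
for mu_i, var_i in zip(mus, vars_):
    mu_hat, var_hat = recursive_step(mu_hat, var_hat, mu_i, var_i)

mu_batch, var_batch = batch_posterior(mus, vars_)
print(np.allclose(mu_hat, mu_batch), np.allclose(var_hat, var_batch))
```

Unrolling the recursion gives the batch form: precisions add over all observations and the mean is their precision-weighted average, so a confident (low-variance) representation moves the prototype far more than an ambiguous one.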

The above derives \(\rho _{n+1}\) recursively from \(\rho _{n}\). Next, we briefly derive \(\rho _{n+1}\) directly from all \(n+1\) representations.

where \(\alpha =\frac{\prod _{i=1}^n p(\varvec{\rho }_i)}{p(\varvec{z}_1, \varvec{z}_2,..., \varvec{z}_{n})}\) and

Because \(\varvec{\sigma }_0\rightarrow \infty \), we have

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Xie, H., Wang, C., Zhao, J. et al. PRCL: Probabilistic Representation Contrastive Learning for Semi-Supervised Semantic Segmentation. Int J Comput Vis (2024). https://doi.org/10.1007/s11263-024-02016-8

DOI: https://doi.org/10.1007/s11263-024-02016-8