Abstract

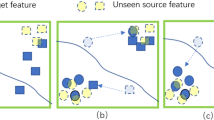

Universal domain adaptation (UniDA) aims to transfer knowledge of the common classes from a source domain to a target domain without any prior knowledge of the label set, which requires distinguishing unknown target samples from known ones. Recent methods usually focus on assigning each target sample to one of the source classes rather than on separating known from unknown samples; this ignores the inter-sample affinity between known and unknown samples and may lead to suboptimal performance. To address this issue, we propose a novel UniDA framework that exploits such inter-sample affinity. Specifically, we introduce a knowability-based labeling scheme consisting of two steps: (1) knowability-guided detection of known and unknown samples based on the intrinsic structure of each sample's neighborhood, where we leverage the first singular vectors of the affinity matrix to obtain the knowability of every target sample; and (2) label refinement, where we relabel each target sample according to the consistency of the predictions within its neighborhood. Auxiliary losses based on these two steps are then used to reduce the inter-sample affinity between unknown and known target samples. Experiments on four public datasets demonstrate that our method significantly outperforms existing state-of-the-art methods.
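The abstract names the two labeling steps but does not give their exact formulation, so the following is only a minimal sketch of one possible reading, not the authors' implementation. It assumes cosine similarity as the affinity, builds each neighborhood from the `k` most similar source features, and takes a sample's knowability to be the magnitude of its own entry in the first singular vector of the neighborhood affinity matrix. Refinement is approximated by a strict majority vote over the `k` nearest target neighbors. The function names, the parameter `k`, and the scaling factor are all hypothetical.

```python
import numpy as np


def l2_normalize(x):
    """Scale each row to unit length so dot products are cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def knowability(target_feats, source_feats, k=3):
    """Hypothetical knowability score for every target sample.

    Assumption: the affinity matrix is the cosine Gram matrix of the sample
    and its k nearest source neighbours. A sample that fits its neighbourhood
    has a large entry in the first singular vector; an outlier has a small one.
    """
    src = l2_normalize(source_feats)
    tgt = l2_normalize(target_feats)
    scores = np.empty(len(tgt))
    for i, z in enumerate(tgt):
        sims = src @ z                          # similarity to each source sample
        nbrs = src[np.argsort(-sims)[:k]]       # k most similar source samples
        block = np.vstack([z, nbrs])            # row 0 is the target sample
        affinity = block @ block.T              # (k+1) x (k+1) affinity matrix
        u, _, _ = np.linalg.svd(affinity)
        # Entry of the sample in the first singular vector, rescaled so that a
        # perfectly uniform neighbourhood gives a score of about 1.
        scores[i] = abs(u[0, 0]) * np.sqrt(k + 1)
    return scores


def refine_labels(target_feats, preds, k=3):
    """Hypothetical refinement: adopt the label that a strict majority of the
    k nearest target neighbours agree on; otherwise keep the prediction."""
    z = l2_normalize(target_feats)
    sims = z @ z.T
    np.fill_diagonal(sims, -np.inf)             # a sample is not its own neighbour
    nbr_idx = np.argsort(-sims, axis=1)[:, :k]
    refined = preds.copy()
    for i, idx in enumerate(nbr_idx):
        labels, counts = np.unique(preds[idx], return_counts=True)
        if counts.max() > k / 2:
            refined[i] = labels[counts.argmax()]
    return refined
```

In this sketch, a target sample lying inside a tight source cluster scores close to 1, while a sample far from every source cluster scores much lower, so a threshold on the score could separate known from unknown samples. How the actual method thresholds the score and builds its neighborhoods is not stated in the abstract.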

Data Availability

The datasets analysed during the current study are publicly available in the Office-31 repository https://faculty.cc.gatech.edu/~judy/domainadapt/, the OfficeHome repository https://www.hemanthdv.org/officeHomeDataset.html, the VisDA repository https://github.com/VisionLearningGroup/taskcv-2017-public/tree/master/classification, and the DomainNet repository http://ai.bu.edu/M3SDA/.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grants 62076148, U22A2057, and 61991411, in part by the Shandong Excellent Young Scientists Fund Program (Overseas) under Grant 2022HWYQ-042, and in part by the Young Taishan Scholars Program of Shandong Province under Grant tsqn201909029.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Communicated by Oliver Zendel.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, Y., Zhang, L., Song, R. et al. Exploiting Inter-Sample Affinity for Knowability-Aware Universal Domain Adaptation. Int J Comput Vis 132, 1800–1816 (2024). https://doi.org/10.1007/s11263-023-01955-y

DOI: https://doi.org/10.1007/s11263-023-01955-y