Abstract

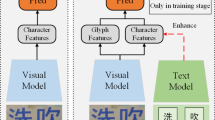

The transformer-based encoder-decoder framework is becoming popular in scene text recognition, largely because it naturally integrates recognition clues from both the visual and semantic domains. However, recent studies show that the two kinds of clues are not always well registered, so features and characters may be misaligned in difficult text (e.g., text with a rare shape). As a result, constraints such as character position have been introduced to alleviate this problem. Despite some success, visual and semantic clues are still modeled separately and are only loosely associated. In this paper, we propose a novel module called multi-domain character distance perception (MDCDP) to establish a visually and semantically related position embedding. MDCDP uses the position embedding to query both visual and semantic features following the cross-attention mechanism. The two kinds of clues are fused into the position branch, generating a content-aware embedding that perceives character spacing and orientation variants, character semantic affinities, and clues tying the two kinds of information together. Collectively, these are summarized as the multi-domain character distance. We develop CDistNet, which stacks multiple MDCDPs so that the distance is modeled progressively more precisely. Thus, the feature-character alignment is well built even when various recognition difficulties are present. We verify CDistNet on ten challenging public datasets and two series of augmented datasets created by ourselves. The experiments demonstrate that CDistNet performs highly competitively. It not only ranks top-tier on standard benchmarks, but also outperforms recent popular methods by clear margins on real and augmented datasets presenting severe text deformation, poor linguistic support, and rare character layouts. In addition, visualizations show that CDistNet makes proper use of information in both the visual and semantic domains. Our code is available at https://github.com/simplify23/CDistNet.
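To make the query pattern described above more concrete, here is a minimal sketch in plain Python of a position embedding querying visual and semantic features via cross-attention and fusing the results back into the position branch, with several such blocks stacked. It assumes single-head scaled dot-product attention with no learned projections, visual and semantic features held fixed across blocks, and a simple averaged residual fusion. The function names (`cross_attention`, `mdcdp_block`, `cdistnet_like_decoder`) are hypothetical. This is an illustration only, not the authors' implementation, which is available in the linked repository.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    # Numerically stable softmax over one row of attention scores.
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    # Single-head scaled dot-product attention without learned projections:
    # each query row (one character position) attends over all key rows.
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out


def mdcdp_block(position: Matrix, visual: Matrix, semantic: Matrix) -> Matrix:
    # Hypothetical simplified MDCDP step: the position embedding queries both
    # the visual and the semantic domain.
    vis_ctx = cross_attention(position, visual, visual)
    sem_ctx = cross_attention(position, semantic, semantic)
    # Assumed fusion: residual add of the element-wise mean of both contexts
    # (the paper's actual fusion operator may differ).
    return [
        [p + 0.5 * (v + s) for p, v, s in zip(p_row, v_row, s_row)]
        for p_row, v_row, s_row in zip(position, vis_ctx, sem_ctx)
    ]


def cdistnet_like_decoder(position: Matrix, visual: Matrix, semantic: Matrix,
                          num_blocks: int = 3) -> Matrix:
    # Stack several blocks so the position embedding is refined progressively.
    for _ in range(num_blocks):
        position = mdcdp_block(position, visual, semantic)
    return position


if __name__ == "__main__":
    visual = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 toy visual feature vectors
    semantic = [[0.5, 0.5], [0.0, 1.0]]            # 2 toy character embeddings
    position = [[1.0, 0.0], [0.0, 1.0]]            # 2 position queries
    out = cdistnet_like_decoder(position, visual, semantic)
    print(len(out), len(out[0]))
```

The output keeps one row per position query, so the refined embedding can be decoded into one character per position. In a real model, learned query/key/value projections, multiple heads, normalization, and updated semantic features would replace the fixed toy choices made here.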

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grants 62172103 and 62102384.

Additional information

Communicated by Dimosthenis Karatzas.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zheng, T., Chen, Z., Fang, S. et al. CDistNet: Perceiving Multi-domain Character Distance for Robust Text Recognition. Int J Comput Vis 132, 300–318 (2024). https://doi.org/10.1007/s11263-023-01880-0
